1. Objective of This Article

This article will accomplish two tasks:

- Run the official dynamic target recognition example using the camera on the RK3588 development board.

- Interpret the source code to enhance understanding of the rknn development.

2. Development Environment Description

- Host System: Windows 11

- Target Device: Android development board equipped with RK3588 chip

- Core Tools: Android Studio Koala | 2024.1.1 Patch 2, NDK 27.0

3. Compilation and Execution

0. Source Code Location

The official path is rknn-toolkit2\rknpu2\examples\rknn_yolov5_android_apk_demo. If you cannot find it, feel free to contact me.

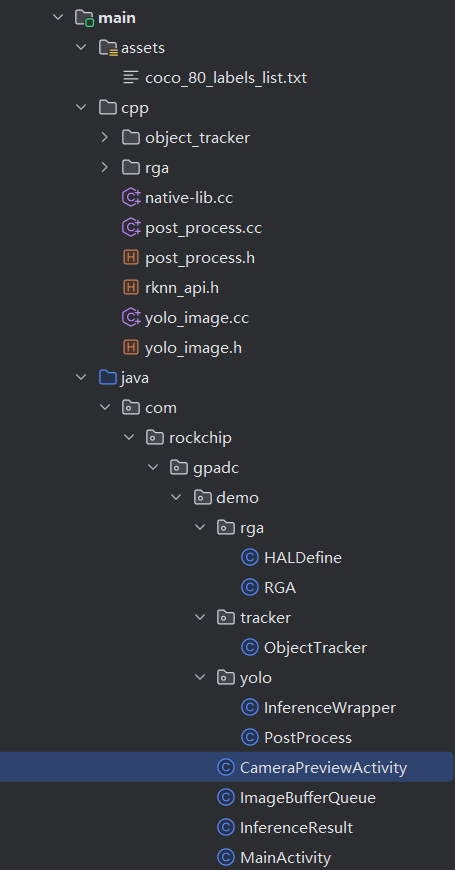

1. Directory Structure

As shown in the figure below:

2. Compilation and Execution

After opening the project, dependencies are automatically downloaded. Click run, and it runs smoothly on the RK3588 development board without any obstacles. The following image shows the running effect, recognizing the three giants in the mobile phone image.

4. Code Interpretation

1. Core Issues

This case involves real-time target detection during camera preview, so there are several core issues to address:

- Camera Usage: The case uses the first-generation Camera API, which is no longer recommended, but it works well.

- Concurrent Operation: The camera previews while the program uses YOLOv5 for target detection. A dedicated inference thread is needed for detection.

- Image Format Conversion: The preview frames from the Camera API in Android are in NV21 format, while the Rockchip NPU requires RGB888 format.

- A container to cache preview frames is needed: A queue for image caching must be designed.

2. Main Process of the Program

It is recommended to start analyzing from CameraPreviewActivity, where the logic gradually unfolds.

Initialization Phase:

- onCreate():

- Initialize UI components (FPS display, result canvas).

- Load the preset YOLO model files into the cache directory based on the device platform (e.g., RK3588/RK356x).

- Initialize the inference engine mInferenceWrapper and the target detection result processor mInferenceResult.

- createPreviewView():

- Create a SurfaceView for camera preview and bind the SurfaceHolder callback.

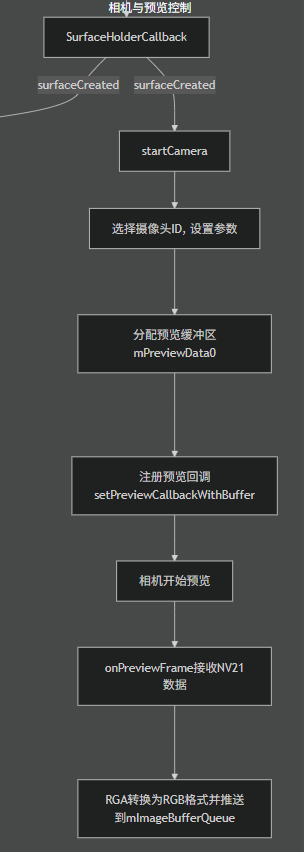

Camera and Preview Control:

See the flowchart:

TSurfaceHolderCallback

- surfaceCreated(): Calls

<span>startCamera()</span>to open the camera and start preview. - surfaceDestroyed(): Stops the preview and releases camera resources.

startCamera()

- Select the camera ID based on the number of cameras (prefer ID=2).

- Configure camera parameters (resolution, preview format), set the preview buffer

<span>mPreviewData0</span>. - Register the preview data callback using

<span>setPreviewCallbackWithBuffer</span>.

onPreviewFrame()

- Receives preview frames in NV21 format.

- Uses RGA hardware acceleration to convert to RGB format.

- Pushed to the image queue

<span>mImageBufferQueue</span>.

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

mCamera0.addCallbackBuffer(data);

ImageBufferQueue.ImageBuffer imageBuffer = mImageBufferQueue.getFreeBuffer();

if (imageBuffer != null) {

// RK_FORMAT_YCrCb_420_SP -> RK_FORMAT_RGBA_8888

// flip for CAMERA_FACING_FRONT

RGA.colorConvertAndFlip(data, RK_FORMAT_YCrCb_420_SP, imageBuffer.mImage_handle,

RK_FORMAT_RGB_888,

CAMERA_PREVIEW_WIDTH, CAMERA_PREVIEW_HEIGHT, this.flip);

mImageBufferQueue.postBuffer(imageBuffer);

}

}

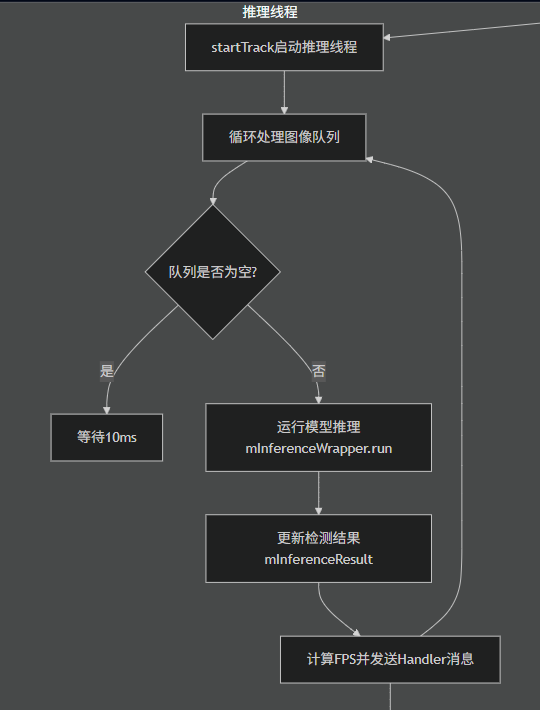

Inference Thread

See the diagram below:

startTrack()

- Initializes the image queue and starts the inference thread

<span>mInferenceRunnable</span><span>.</span>

Inference Loop

- Gets RGB images from the queue.

- Calls

<span>mInferenceWrapper.run()</span>to run the YOLO model inference. - Calculates FPS and updates the UI via

<span>Handler</span>. - Detection results are stored in

<span>mInferenceResult</span>.

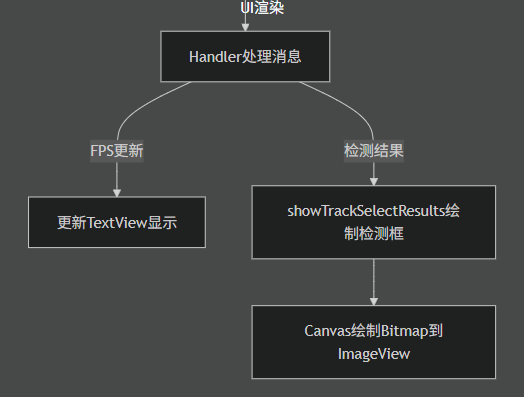

UI Rendering

See the diagram below:

showTrackSelectResults()

- Draws detection boxes and category labels on

<span>Canvas</span>. - Displays results via

<span>ImageView</span>.

Handler Message Mechanism

- The main thread updates the FPS value and the bitmap of detection results.

3. Java Layer Related Classes

3.1 Core Wrapper Class for YOLO Target Detection Model: InferenceWrapper

This class is responsible for interacting with the underlying C++ implementation of the RKNN inference engine, completing the entire process of model initialization, inference execution, and post-processing.Member Variables:

OutputBuffer mOutputs; // Stores the raw output of the model

DetectResultGroup mDetectResults; // Container for native post-processing results

int OBJ_NUMB_MAX_SIZE = 64; // Maximum limit for the number of detected objects

The two classes (data structures) of DetectResultGroup are introduced later in the InferenceResult class.Model Initialization:

public int initModel(int im_height, int im_width, int im_channel, String modelPath) throws Exception {

mOutputs = new InferenceResult.OutputBuffer();

// Pre-allocate memory for YOLO's three-layer output:

mOutputs.mGrid0Out = new byte[255 * 80 * 80]; // 80x80 feature map

mOutputs.mGrid1Out = new byte[255 * 40 * 40]; // 40x40 feature map

mOutputs.mGrid2Out = new byte[255 * 20 * 20]; // 20x20 feature map

if (navite_init(im_height, im_width, im_channel, modelPath) != 0) {

throw new IOException("rknn init fail!");

}

return 0;

}

255 = 3(5+80) corresponds to the 3 anchors of YOLOv5, each anchor predicts 5 parameters + 80 categories.Inference Execution:

public OutputBuffer run(long img_buf_handle, int camera_width, int camera_height) {

native_run(img_buf_handle, camera_width, camera_height,

mOutputs.mGrid0Out, mOutputs.mGrid1Out, mOutputs.mGrid2Out);

return mOutputs;

}

// JNI interface

private native int native_run(long img_buf_handle, int cam_width, int cam_height, byte[] grid0Out, byte[] grid1Out, byte[] grid2Out);

The parameter img_buf_handle is the native handle of the image buffer. The results are directly written into the pre-allocated Java layer buffer via JNI.Post-Processing Flow:

public ArrayList<Recognition> postProcess(OutputBuffer outputs) {

// 1. Initialize result container

mDetectResults = new DetectResultGroup();

mDetectResults.ids = new int[OBJ_NUMB_MAX_SIZE];

mDetectResults.scores = new float[OBJ_NUMB_MAX_SIZE];

mDetectResults.boxes = new float[4 * OBJ_NUMB_MAX_SIZE];

// 2. Call native post-processing

int count = native_post_process(outputs.mGrid0Out, outputs.mGrid1Out, outputs.mGrid2Out,

mDetectResults.ids, mDetectResults.scores, mDetectResults.boxes);

// 3. Convert to Recognition objects

ArrayList<Recognition> recognitions = new ArrayList<>();

for (int i = 0; i < count; ++i) {

RectF rect = new RectF(

mDetectResults.boxes[i*4+0], // left

mDetectResults.boxes[i*4+1], // top

mDetectResults.boxes[i*4+2], // right

mDetectResults.boxes[i*4+3] // bottom

);

recognitions.add(new Recognition(

mDetectResults.ids[i],

mDetectResults.scores[i],

rect

));

}

return recognitions;

}

Native Methods:

| Method Signature | Function Description |

|---|---|

<span>navite_init()</span> |

Initializes the RKNN model, loading the specified model file from the path |

<span>native_deinit()</span> |

Releases model resources |

<span>native_run()</span> |

Executes inference, writing results to the Java layer buffer |

<span>native_post_process()</span> |

Executes NMS and other post-processing, returning detection results |

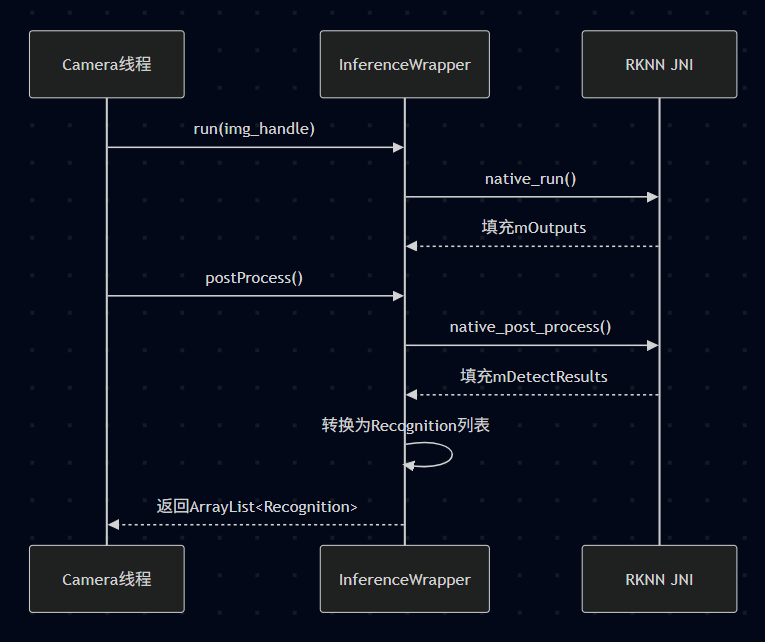

Relationship between InferenceWrapper and Camera Thread and RKNN JNI Timing Diagram:

Relationship with InferenceResult:

- Provides raw output (OutputBuffer) to InferenceResult for storage

- Executes postProcess() to generate final recognition resultsIn Cooperation with ObjectTracker:

- The generated list of Recognition will be passed to the tracker for ID association

3.2 InferenceResult Class

A utility class for processing target detection inference results, mainly responsible for storing inference outputs, processing detection results, and tracking detected objects.Main Member Variables:

OutputBuffer mOutputBuffer; // Stores the raw data of inference output

ArrayList<Recognition> recognitions = null; // Stores processed recognition results

private boolean mIsVaild = false; // Marks whether results need to be recalculated

PostProcess mPostProcess = new PostProcess(); // Post-processor

private ObjectTracker mSSDObjectTracker; // Object tracker

Important Internal Classes (Data Structures):

- OutputBuffer: Stores the three-layer output grids of the YOLO model

public static class OutputBuffer {

public byte[] mGrid0Out;

public byte[] mGrid1Out;

public byte[] mGrid2Out;

}

- Recognition: Represents a single detection result

public static class Recognition {

private int trackId = 0; // Tracking ID

private final int id; // Category ID

private final Float confidence; // Confidence

private RectF location; // Location rectangle

// Methods include getter/setter and toString()

}

- DetectResultGroup: Used to store native post-processing results

public static class DetectResultGroup {

public int count = 0; // Number of detections

public int[] ids; // Array of category IDs

public float[] scores; // Array of scores

public float[] boxes; // Array of bounding boxes

}

Main Member Methods:

- Reset Method: Clears results, resets flags, and resets ObjectTracker object

public void reset() {

if (recognitions != null) {

recognitions.clear();

mIsVaild = true;

}

mSSDObjectTracker = new ObjectTracker(CAMERA_PREVIEW_WIDTH, CAMERA_PREVIEW_HEIGHT, 3);

}

- Set Inference Result:

public synchronized void setResult(OutputBuffer outputs) {

// Clone or copy inference output data

if (mOutputBuffer.mGrid0Out == null) {

mOutputBuffer.mGrid0Out = outputs.mGrid0Out.clone();

mOutputBuffer.mGrid1Out = outputs.mGrid1Out.clone();

mOutputBuffer.mGrid2Out = outputs.mGrid2Out.clone();

} else {

arraycopy(outputs.mGrid0Out, 0, mOutputBuffer.mGrid0Out, 0, outputs.mGrid0Out.length);

arraycopy(outputs.mGrid1Out, 0, mOutputBuffer.mGrid1Out, 0, outputs.mGrid1Out.length);

arraycopy(outputs.mGrid2Out, 0, mOutputBuffer.mGrid2Out, 0, outputs.mGrid2Out.length);

}

mIsVaild = false; // Mark as needing reprocessing

}

3.3 ObjectTracker

The core class that implements target tracking functionality, based on JNI calls to the underlying tracking algorithm, assigns a unique ID to detected objects and maintains tracking across frames.Core Member Variables:

| Variable | Type | Description |

|---|---|---|

<span>mHandle</span> |

long | Native handle of the underlying tracker |

<span>mMaxTrackLifetime</span> |

int | Maximum number of frames to retain after target loss (default 3) |

<span>mWidth/mHeight</span> |

int | Image resolution |

<span>MAX_TRACKED_NUM</span> |

static int | Maximum number of tracked targets (64) |

<span>track_input_*</span> |

static array | Buffer for input data |

<span>track_output_*</span> |

static array | Buffer for output data |

Core Tracking Method:

public ArrayList<InferenceResult.Recognition> tracker(

ArrayList<InferenceResult.Recognition> recognitions) {

// 1. Prepare input data

for (int i = 0; i < recognitions.size(); ++i) {

RectF loc = recognitions.get(i).getLocation();

track_input_location[4*i] = loc.left;

track_input_location[4*i+1] = loc.top;

track_input_location[4*i+2] = loc.right;

track_input_location[4*i+3] = loc.bottom;

track_input_class[i] = recognitions.get(i).getId();

track_input_score[i] = recognitions.get(i).getConfidence();

}

// 2. Call Native Tracking

int[] track_output_num = new int[1];

native_track(mHandle, mMaxTrackLifetime,

recognitions.size(), track_input_location,

track_input_class, track_input_score,

track_output_num, track_output_location,

track_output_class, track_output_score,

track_output_id, mWidth, mHeight);

// 3. Convert output results

ArrayList<InferenceResult.Recognition> results = new ArrayList<>();

for (int i = 0; i < track_output_num[0]; ++i) {

RectF rect = new RectF(

track_output_location[i*4]/mWidth,

track_output_location[i*4+1]/mHeight,

track_output_location[i*4+2]/mWidth,

track_output_location[i*4+3]/mHeight);

InferenceResult.Recognition recog = new InferenceResult.Recognition(

track_output_class[i],

track_output_score[i] == -10000 ? 0 : track_output_score[i],

rect);

recog.setTrackId(track_output_id[i]); // Set tracking ID

results.add(recog);

}

return results;

}

Target tracking is a very important function in this case, and the specific algorithm is implemented at the native layer, which will be introduced later.native_track(): Executes cross-frame target tracking, parameter description: – handle: Tracker instance handle – maxTrackLifetime: Maximum number of lost frames – track_input_: Input data (location/class/score) – track_output_: Output data (location/class/score/ID) – width/height: Image resolution

3.4 ImageBufferQueue

Implementation of a circular buffer queue, mainly used to manage the production-consumption process of image buffers, optimized specifically for Rockchip NPU hardware acceleration.Buffer State Machine:

public class ImageBuffer {

static public final int STATUS_INVAILD = 0; // Invalid/Idle state

static public final int STATUS_READY = 1; // Data ready for processing

static public final int STATUS_USING = 2; // Currently in use

}

Member Variables

| Variable Name | Type | Description |

|---|---|---|

<span>mQueueBuffer</span> |

<span>ImageBuffer[]</span> |

Buffer array |

<span>mQueueBufferSize</span> |

<span>int</span> |

Total number of buffers |

<span>mCurrentFreeBufferIndex</span> |

<span>int</span> |

Current free buffer index |

<span>mCurrentUsingBufferIndex</span> |

<span>int</span> |

Current using buffer index |

Timing Diagram:

3.4.1 Buffer Acquisition and Release

Get Free Buffer (Producer):

public synchronized ImageBuffer getFreeBuffer() {

// Circularly find the first buffer that is not in the USING state

int index = mCurrentFreeBufferIndex;

for (int i=0; i<mQueueBufferSize; ++i) {

++index;

if (index >= mQueueBufferSize) {

index = 0;

}

if (mQueueBuffer[index].mStatus != ImageBuffer.STATUS_USING) {

break;

}

}

mCurrentFreeBufferIndex = index;

mQueueBuffer[index].mStatus = ImageBuffer.STATUS_INVAILD;

return mQueueBuffer[index];

}

Submit Ready Buffer:

public synchronized void postBuffer(ImageBuffer buffer) {

buffer.mStatus = ImageBuffer.STATUS_READY;

}

Get Ready Buffer (Consumer):

public synchronized ImageBuffer getReadyBuffer() {

// Circularly find a buffer in the READY state

int index = mCurrentUsingBufferIndex;

for (int i=0; i<mQueueBufferSize; ++i) {

++index;

if (index >= mQueueBufferSize) {

index = 0;

}

if (mQueueBuffer[index].mStatus == ImageBuffer.STATUS_READY) {

break;

}

}

if ((index != mCurrentUsingBufferIndex) && (mQueueBuffer[index].mStatus == ImageBuffer.STATUS_READY)) {

mCurrentUsingBufferIndex = index;

mQueueBuffer[index].mStatus = ImageBuffer.STATUS_USING;

return mQueueBuffer[index];

}

return null;

}

Release Used Buffer:

public synchronized void releaseBuffer(ImageBuffer buffer) {

buffer.mStatus = ImageBuffer.STATUS_INVAILD; // Reset to invalid state

}

3.4.2 NPU Memory Management

public class ImageBuffer {

public long mImage_handle; // NPU memory handle

public ImageBuffer(int width, int height) {

// Create NPU image buffer (RGB888 format)

mImage_handle = create_npu_img_buffer(RK_FORMAT_RGB_888);

}

protected void finalize() {

// Release NPU memory

release_npu_img_buffer(mImage_handle);

}

}

4. Native Layer

From the flow in the Java layer, you will gradually call the implementation in the native layer. All JNI interfaces are in the native-lib.cc source file. Below are some key points:

4.1 Image Format Conversion

In the Java layer onPreviewFrame, the native layer’s color_convert_and_flip interface is called, as seen in RGA.java

public static int colorConvertAndFlip(byte[] src, int srcFmt, long npu_buf_handle, int dstFmt,

int width, int height, int flip) {

if (src == null) {

Log.w("rkyolo.RGA", "src or dst is null");

return -1;

}

return color_convert_and_flip(src, srcFmt, npu_buf_handle, dstFmt, width, height, flip);

}

private static native int color_convert_and_flip(byte[] src, int srcFmt, long npu_buf_handle, int dstFmt,

int width, int height, int flip);

JNI Bridge Implementation: See native-lib.cc

extern "C"

JNIEXPORT jint JNICALL

Java_com_rockchip_gpadc_demo_rga_RGA_color_1convert_1and_1flip(

JNIEnv* env, jclass clazz,

jbyteArray src, jint src_fmt,

jlong npu_buf_handle,

jint dst_fmt,

jint width, jint height, jint flip)

{

// Get the Native pointer of the Java array

jbyte* src_buf = env->GetByteArrayElements(src, nullptr);

// Call the core processing function

jint ret = colorConvertAndFlip(src_buf, src_fmt, npu_buf_handle,

dst_fmt, width, height, flip);

// Release Java array resources

env->ReleaseByteArrayElements(src, src_buf, 0);

return ret;

}

Core Logic Implementation: See yolo-image.cc

// Convert NPU buffer handle

auto p_dst_buf = reinterpret_cast<img_npu_buffer*>(npu_buf_handle);

// Create RGA source buffer

rga_buffer_handle_t rga_src_handle =

importbuffer_virtualaddr(src, width*height*4);

rga_buffer_t rga_src =

wrapbuffer_handle(rga_src_handle, width, height, srcFmt);

// Image processing

if (DO_NOT_FLIP == flip) {

// Only color space conversion

ret = imcvtcolor(rga_src, p_dst_buf->rga_buf,

rga_src.format, p_dst_buf->rga_buf.format);

} else {

// Color conversion + flip processing

ret = imflip(rga_src, p_dst_buf->rga_buf, flip);

}

// Resource release

if (rga_src_handle) {

releasebuffer_handle(rga_src_handle);

}

The core methods imcvtcolor and imflip are implemented in the RGB library…

4.2 Target Tracking

The target tracking code is located in cpp/object_tracker, with JNI bridging in native-lib.cc

4.2.1 Starting with JNI Bridging Methods

Bridge Method Signature:

JNIEXPORT void JNICALL Java_com_rockchip_gpadc_demo_tracker_ObjectTracker_native_1track

(JNIEnv * env, jobject obj,

jlong handle,

jint maxTrackLifetime,

jint track_input_num,

jfloatArray track_input_locations,

jintArray track_input_class,

jfloatArray track_input_score,

jintArray track_output_num,

jfloatArray track_output_locations,

jintArray track_output_class,

jfloatArray track_output_score,

jintArray track_output_id,

jint width,

jint height)

Parameter Description:

| Parameter Type | Parameter Name | Description |

|---|---|---|

| jlong | handle | Native tracker instance handle |

| jint | maxTrackLifetime | Maximum number of frames to retain after target loss |

| jint | track_input_num | Number of input targets |

| jfloatArray | track_input_locations | Input target location array (4*num) |

| jintArray | track_input_class | Input target category array |

| jfloatArray | track_input_score | Input target confidence array |

| jintArray | track_output_num | Output target count |

| jfloatArray | track_output_locations | Output target location array (4*num) |

| jintArray | track_output_class | Output target category array |

| jfloatArray | track_output_score | Output target confidence array |

| jintArray | track_output_id | Output target tracking ID array |

| jint | width | Image width |

| jint | height | Image height |

4.2.2 Core Data Structures

- Basic Structure:

typedef struct {

int x, y; // Top-left coordinates

int width, height; // Width and height

} Rect_T;

typedef struct {

Rect_T r; // Bounding box

int obj_class; // Category ID

float score; // Confidence

int id; // Tracking ID

int reserve[1]; // Reserved field

} object_T;

- Extended Structure for Tracking Objects:

struct ExtObject {

int id; // Tracking ID

Rect_T location; // Current location

Rect_T predict_loc_when_miss; // Predicted location when lost

Rect_T smooth_rect; // Smoothed position

ObjectStatus status; // Status

int detectedNum; // Number of detections

int noDetectedNum; // Number of non-detections

int obj_class; // Category

float score; // Confidence

int miss; // Loss flag

};

track Method

The bridge method directly calls the track method in track_link.cpp: responsible for associating detection results with existing tracking targets and outputting tracking results with stable IDs.Parameter Description:

| Parameter Type | Parameter Name | Description |

|---|---|---|

<span>long</span> |

handle | Native handle of the tracker instance |

<span>int</span> |

maxTrackLifetime | Maximum number of frames to retain after target loss |

<span>int</span> |

track_input_num | Number of input targets |

<span>float*</span> |

c_track_input_locations | Input target location array (each target has 4 floats: x1,y1,x2,y2) |

<span>int*</span> |

c_track_input_class | Input target category array |

<span>float*</span> |

c_track_input_score | Input target confidence array |

<span>int*</span> |

c_track_output_num | Output target count |

<span>float*</span> |

c_track_output_locations | Output target location array |

<span>int*</span> |

c_track_output_class | Output target category array |

<span>float*</span> |

c_track_output_score | Output target confidence array |

<span>int*</span> |

c_track_output_id | Output target tracking ID array |

<span>int</span> |

width | Image width |

<span>int</span> |

height | Image height |

Input Data Conversion: Converts the bounding box format passed from the Java layer to the internal processing format

for (int i = 0; i < track_input_num; i++) {

// Convert [x1,y1,x2,y2] to [x,y,width,height] format

object_input[i].r.x = (int)c_track_input_locations[i*4 +0];

object_input[i].r.y = (int)c_track_input_locations[i*4 +1];

object_input[i].r.width = (int)(c_track_input_locations[i*4 +2] - c_track_input_locations[i*4 +0]);

object_input[i].r.height = (int)(c_track_input_locations[i*4 +3] - c_track_input_locations[i*4 +1]);

// Copy category and confidence

object_input[i].score = c_track_input_score[i];

object_input[i].obj_class = c_track_input_class[i];

}

Execute Tracking Update: Calls the tracking algorithm to update the target state

object->update(maxTrackLifetime, track_input_num, object_input,

c_track_output_num, object_output, width, height);

Output Data Conversion: Converts the internal format to the output format expected by the Java layer

for (int i = 0; i < *c_track_output_num; i++) {

// Convert [x,y,width,height] back to [x1,y1,x2,y2] format

c_track_output_locations[i*4 +0] = (float)object_output[i].r.x;

c_track_output_locations[i*4 +1] = (float)object_output[i].r.y;

c_track_output_locations[i*4 +2] = (float)(object_output[i].r.x + object_output[i].r.width);

c_track_output_locations[i*4 +3] = (float)(object_output[i].r.y + object_output[i].r.height);

// Copy category, confidence, and tracking ID

c_track_output_class[i] = object_output[i].obj_class;

c_track_output_score[i] = object_output[i].score;

c_track_output_id[i] = object_output[i].id;

}

4.2.4 OdtDetector::update Method

This method is responsible for processing newly detected targets and associating them with existing tracking targets, outputting tracking results with stable IDs.Parameter Description:

| Parameter | Type | Description |

|---|---|---|

<span>maxTrackLifetime</span> |

int | Maximum number of frames to retain after target loss |

<span>track_num_input</span> |

int | Number of input targets |

<span>object_input</span> |

object_T* | Input target array (includes location, category, score) |

<span>track_num_output</span> |

int* | Output target count (returned via pointer) |

<span>object_output</span> |

object_T* | Output target array |

<span>width</span> |

int | Image width |

<span>height</span> |

int | Image height |

Main Process

- Data Preparation Phase: Converts input data to vector format for subsequent processing

std::vector<Rect_T> objects_Rect_T;

std::vector<int> objects_class;

std::vector<float> object_score;

for (int i = 0; i < track_num_input; i++) {

objects_Rect_T.push_back(object_input[i].r);

objects_class.push_back(object_input[i].obj_class);

object_score.push_back(object_input[i].score);

}

- Core Tracking Update: Calls the underlying tracker to associate targets and update their states

m_objects_tracker.updateTrackedObjects(objects_Rect_T, objects_class,

object_score, maxTrackLifetime,

m_width, m_height);

- Get Tracking Results: Retrieves extended target information from the tracker

std::vector<ObjectsTracker::ExtObject> extObjects;

m_objects_tracker.getObjects(extObjects);

- Result Output Processing: Selects output location information based on target status and fills complete output data

int nobjects = (int)extObjects.size();

track_num_output[0] = nobjects;

for (; i < nobjects && i < 100; i++) {

if(extObjects[i].miss == 0) {

object_output[i].r = extObjects[i].smooth_rect; // Use smoothed position

} else {

object_output[i].r = extObjects[i].predict_loc_when_miss; // Use predicted position

}

object_output[i].obj_class = extObjects[i].obj_class;

object_output[i].score = extObjects[i].score;

object_output[i].id = extObjects[i].id;

}

4.2.5 ObjectsTracker::updateTrackedObjects Method

The core algorithm implementation of the multi-target tracking system, using an IOU (Intersection over Union)-based matching strategy, combined with motion prediction and state management, to achieve stable association between detection boxes and tracking targets.TrackedObject Tracking Target Structure:

struct TrackedObject {

std::vector<Rect_T> lastPositions; // Historical position records

Rect_T predict_loc_when_miss; // Predicted location when lost

Rect_T smooth_Positionn; // Smoothed position

float vx, vy; // Motion speed

int numDetectedFrames; // Total number of detection frames

int numFramesDetected; // Continuous detection frames

int numFramesNotDetected; // Continuous loss frames

int id; // Unique ID

int obj_class; // Target category

float score; // Confidence

int miss; // Loss flag

};

Main Process:

- Initialization

int N1 = trackedObjects.size(); // Number of existing tracking targets

int N2 = detectedObjects.size(); // Number of new detected targets

// Initialize matching relationship table

std::vector<int> correspondence(N2, NEW_RECTANGLE);

std::vector<float> correspondenceScore(N2, 0);

- Motion Prediction: Calculates motion speed based on historical positions to predict current location

for(int i=0; i < N1; i++) {

if(numpositions>1) {

predict_loctation(curObject, image_width, image_height, pre_x, pre_y, vx, vy);

curObject.vx = vx;

curObject.vy = vy;

// Update predicted location

prevRect.x = (int)pre_x;

prevRect.y = (int)pre_y;

curObject.predict_loc_when_miss = prevRect;

}

}

- Data AssociationPrimary Matching: Calculates matching scores based on IOU and number of lost frames

for(int j=0; j < N2; j++) {

float iou = CalculateIOU(prevRect, detectedObjects[j]);

if (iou > 0.1f) {

float trackScore = iou / (curObject.numFramesNotDetected + 1);

if (iou > bestArea && correspondenceScore[j] < trackScore) {

bestIndex = j;

bestArea = iou;

correspondenceScore[j] = trackScore;

}

}

}

Conflict Resolution: Handles conflicts of overlapping detection boxes

for(int j=0; j < N2; j++) {

if(correspondence[j] >= 0) continue;

float iou = CalculateIOU(detectedObjects[j], detectedObjects[bestIndex]);

if(iou > 0.45f) {

correspondence[j] = INTERSECTED_RECTANGLE; // Mark as conflict box

}

}

- State UpdateUpdate Existing Targets: Uses weighted average for position smoothing, combined with motion prediction

trackedObjects[i].lastPositions.push_back(detectedObjects[j]);

// Maintain the length of the historical position queue

while(trackedObjects[i].lastPositions.size() > parameters.numLastPositionsToTrack) {

trackedObjects[i].lastPositions.erase(trackedObjects[i].lastPositions.begin());

}

// Smoothing processing

trackedObjects[i].smooth_Positionn.x =

((smooth.x + smooth.width*0.5f)*0.5f + (detect.x + detect.width*0.5f)*1.f)/1.5f

+ vx*0.5f/1.5f - smooth.width*0.5f;

Create New Targets: Creates new tracking targets for unmatched detection boxes

trackedObjects.push_back(detectedObjects[j]);

trackedObjects.back().obj_class = objects_class[j];

trackedObjects.back().score = objects_score[j];

Conclusion

This article indeed took many days to write (and was quite exhausting). Some C++ code still needs further contemplation. Target tracking, RGB conversion, and image buffer queues are all very meaningful. They can still be referenced in other projects. Writing is not easy, please provide guidance and support.