The Objective of This Article

This article will compile the YOLOv8-Pose C Demo in the RKNN Docker environment and deploy it to the RK3588 development board using the adb tool.

Development Environment Description

- Host System: Windows 11

- Target Device: Android development board equipped with the RK3588 chip

- Core Tools: Docker image containing rknn-toolkit2, rknn_model_zoo, and other tools, ADB debugging tools

YOLOv8-Pose (Pose Estimation)

Pose detection technology, simply put, is the technology that allows computers to understand “what actions a person (or object) is performing.” From initially being able to vaguely recognize a few body parts, it has now evolved to track complex movements of dozens of people in real-time. In the early days, to depict a person’s action, the computer needed to take two steps: first, use a red pen to circle all the people in the photo (object detection); then switch to a blue pen to mark the joints on each person (keypoint detection). This approach had two issues: it was relatively slow, and if the first step incorrectly circled a person, the entire process would be wrong (for example, misidentifying a couple hugging as one person). Later, engineers invented a “multi-functional pen”: a single pen that could simultaneously draw red circles and blue dots (detecting + pose estimation occurring synchronously), but the internal structure of the pen was complex and required an expensive “drawing board” (GPU) to operate. This significantly improved accuracy (able to distinguish overlapping individuals) and allowed for simultaneous recognition of actions and objects (such as a hand “holding a cup”). However, it was still not fast enough, and installation and usage were quite complicated. Then came YOLOv8-Pose. It can simultaneously find people and mark actions in the blink of an eye, adapting to various devices, from budget smartphones to professional cameras (corresponding to different models from YOLOv8n to YOLOv8x). Thus, it became popular. There are three classic reference cases:

- Gym mirrors: Real-time correction of your yoga poses

- Factory quality inspection: Checking whether workers’ assembly actions are standard

- Game control: Playing motion-sensing games without lag using a camera

Starting the RKNN Docker Environment

# Use the docker run command to create and run the RKNN Toolkit2 container. The following command is for reference only

# and maps local files into the container using the additional -v <host src folder>:<image dst folder> parameter

docker run -t -i --privileged -v /dev/bus/usb:/dev/bus/usb -v D:\rknn\rknn_model_zoo-v2.3.0:/rknn_model_zoo rknn-toolkit2:2.3.0-cp38-custom /bin/bash

Running the YOLOv8-Pose Example

Downloading yolov8n-pose.onnx

cd /rknn_model_zoo/examples/yolov8_pose/model

./download_model.sh

Converting ONNX to RKNN Format

cd ../python

# python convert.py <onnx_model> <TARGET_PLATFORM> <dtype(optional)> <output_rknn_path(optional)>

python convert.py ../model/yolov8n-pose.onnx rk3588

Running result:

I rknn-toolkit2 version: 2.3.0

--> Config model

done

--> Loading model

done

I rknn building ...

I rknn building done.

done

--> Export rknn model

output_path: ../model/yolov8_pose.rknn

done

Running the Android C++ Example

1. Compile

# Go back to the rknn_model_zoo root directory

cd ../../

./build-android.sh -t rk3588 -a arm64-v8a -d yolov8_pose

Compilation was successful without any surprises.

===================================

BUILD_DEMO_NAME=yolov8_pose

BUILD_DEMO_PATH=examples/yolov8_pose/cpp

TARGET_SOC=rk3588

TARGET_ARCH=arm64-v8a

BUILD_TYPE=Release

ENABLE_ASAN=OFF

DISABLE_RGA=

INSTALL_DIR=/rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo

BUILD_DIR=/rknn_model_zoo/build/build_rknn_yolov8_pose_demo_rk3588_android_arm64-v8a_Release

ANDROID_NDK_PATH=/rknn_model_zoo/android-ndk-r19c

===================================

-- Install configuration: "Release"

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo/./rknn_yolov8_pose_demo

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo/./model/bus.jpg

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo/./model/yolov8_pose_labels_list.txt

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo/model/yolov8_pose.rknn

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo/lib/librknnrt.so

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo/lib/librga.so

2. Push to Development Board

# Switch to root user privileges

adb root

adb remount

adb push install/rk3588_android_arm64-v8a/rknn_yolov8_pose_demo /data/rknn-test

3. Run Demo on Development Board

adb shell

# Enter the rknn_yolov5_demo directory on the development board

cd /data/rknn-test/rknn_yolov8_pose_demo/

# Set the library environment

export LD_LIBRARY_PATH=./lib

# Run the executable

./rknn_yolov8_pose_demo model/yolov8-pose.rknn model/bus.jpg

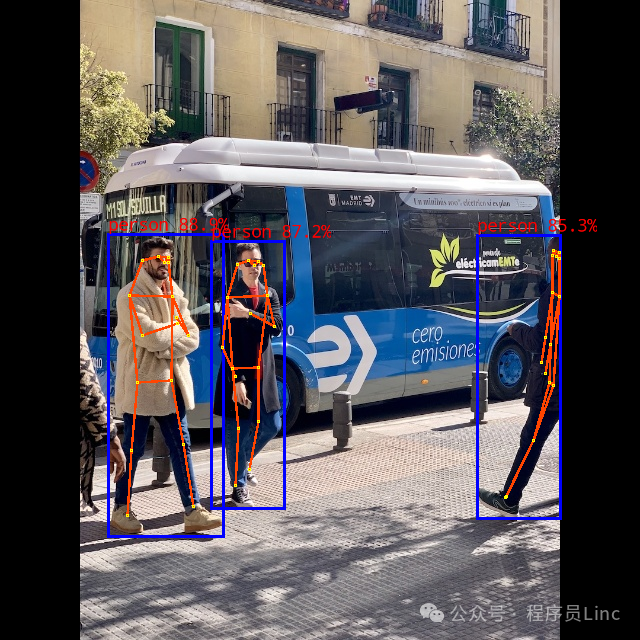

Running result is as follows:

load label ./model/yolov8_pose_labels_list.txt

model input num: 1, output num: 4

model is NHWC input fmt

model input height=640, width=640, channel=3

origin size=640x640 crop size=640x640

input image: 640 x 640, subsampling: 4:2:0, colorspace: YCbCr, orientation: 1

scale=1.000000 dst_box=(0 0 639 639) allow_slight_change=1 _left_offset=0 _top_offset=0 padding_w=0 padding_h=0

rga_api version 1.10.1_[0]

rknn_run

rknn_run time=22.99ms, FPS = 43.49

post_process time=0.47ms, FPS = 2145.92

person @ (108 234 223 536) 0.889

person @ (211 241 284 508) 0.872

person @ (477 235 560 518) 0.853

write_image path: out.png width=640 height=640 channel=3 data=0xb400007b08fc6000

Main Function Source Code

int main(int argc, char **argv)

{

if (argc != 3)

{

printf("%s <model_path> <image_path>\n", argv[0]);

return -1;

}

const char *model_path = argv[1];

const char *image_path = argv[2];

int ret;

rknn_app_context_t rknn_app_ctx;

memset(&rknn_app_ctx, 0, sizeof(rknn_app_context_t));

init_post_process();

ret = init_yolov8_pose_model(model_path, &rknn_app_ctx);

if (ret != 0)

{

printf("init_yolov8_pose_model fail! ret=%d model_path=%s\n", ret, model_path);

goto out;

}

image_buffer_t src_image;

memset(&src_image, 0, sizeof(image_buffer_t));

ret = read_image(image_path, &src_image);

if (ret != 0)

{

printf("read image fail! ret=%d image_path=%s\n", ret, image_path);

goto out;

}

object_detect_result_list od_results;

ret = inference_yolov8_pose_model(&rknn_app_ctx, &src_image, &od_results);

if (ret != 0)

{

printf("inference_yolov8_pose_model fail! ret=%d\n", ret);

goto out;

}

// Draw boxes and probabilities

char text[256];

for (int i = 0; i < od_results.count; i++)

{

object_detect_result *det_result = &(od_results.results[i]);

printf("%s @ (%d %d %d %d) %.3f\n", coco_cls_to_name(det_result->cls_id),

det_result->box.left, det_result->box.top,

det_result->box.right, det_result->box.bottom,

det_result->prop);

int x1 = det_result->box.left;

int y1 = det_result->box.top;

int x2 = det_result->box.right;

int y2 = det_result->box.bottom;

draw_rectangle(&src_image, x1, y1, x2 - x1, y2 - y1, COLOR_BLUE, 3);

sprintf(text, "%s %.1f%%", coco_cls_to_name(det_result->cls_id), det_result->prop * 100);

draw_text(&src_image, text, x1, y1 - 20, COLOR_RED, 10);

for (int j = 0; j < 38/2; ++j)

{

draw_line(&src_image, (int)(det_result->keypoints[skeleton[2*j]-1][0]),(int)(det_result->keypoints[skeleton[2*j]-1][1]),

(int)(det_result->keypoints[skeleton[2*j+1]-1][0]),(int)(det_result->keypoints[skeleton[2*j+1]-1][1]),COLOR_ORANGE,3);

}

for (int j = 0; j < 17; ++j)

{

draw_circle(&src_image, (int)(det_result->keypoints[j][0]),(int)(det_result->keypoints[j][1]),1, COLOR_YELLOW,1);

}

}

write_image("out.png", &src_image);

out:

deinit_post_process();

ret = release_yolov8_pose_model(&rknn_app_ctx);

if (ret != 0)

{

printf("release_yolov5_model fail! ret=%d\n", ret);

}

if (src_image.virt_addr != NULL)

{

free(src_image.virt_addr);

}

return 0;

}