Before diving into AI chips, try a short quiz to see how much you already know about them!

AI Chip Quiz

1. The core difference between AI chips and traditional CPUs is:

A) Higher clock speed

B) Stronger parallel computing capability

C) Larger storage capacity

D) Lower power consumption

2. Which of the following scenarios is more suitable for using AI chips?

A) Word processing

B) Real-time image recognition for autonomous driving

C) Playing high-definition videos

D) Running an operating system

3. The core function of a GPU in AI computation is:

A) Replacing the CPU

B) Accelerating large-scale parallel computing

C) Reducing hardware costs

D) Increasing network transmission speed

4. Which company is known for designing AI-specific chips (like TPU)?

A) Intel

B) NVIDIA

C) Google

D) Samsung

5. The chip specifically designed for deep learning inference is:

A) CPU

B) GPU

C) TPU

D) FPGA

6. In edge computing scenarios, the key performance indicator for AI chips is:

A) Ultra-high performance

B) Low power consumption and real-time capability

C) Large-scale data storage

D) Graphics rendering capability

Answers will be revealed at the end of the article!

As one of the three core elements driving the development of artificial intelligence, the importance of computing power is self-evident.

AI chips are the main source of computing power, essentially the “brain hardware” specifically designed for artificial intelligence.

Unlike ordinary chips that “do a bit of everything,” AI chips are specially designed and optimized to run machine learning and deep learning algorithms faster and more efficiently, significantly improving the training and usage efficiency of AI models.

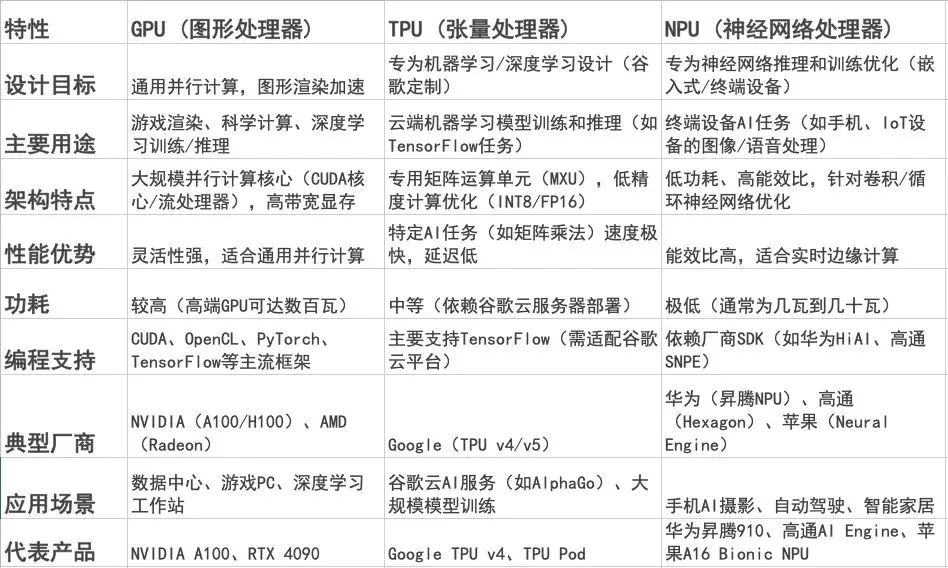

In the field of AI chips, the three most commonly heard types are GPU, TPU, and NPU.

Each has different design philosophies and areas of expertise, playing key roles in various scenarios. Next, let’s discuss the differences between these three types of chips in an easy-to-understand way and where they are used.

The Most Familiar AI Chip: GPU

When it comes to AI chips, the most familiar one is definitely the GPU.

GPU stands for Graphics Processing Unit, which, as the name suggests, was originally designed for image processing and is widely used in the gaming industry.

Take last year’s hit game “Black Myth: Wukong” as an example: its visuals are so richly detailed that the game feels immersive.

To smoothly run such exquisite graphics, the recommended computer configuration specifically mentions the GPU.

However, the GPU in question here belongs to NVIDIA’s GeForce series, a “discrete graphics card” line aimed at ordinary consumers and priced between 2,000 and 8,000 yuan.

But the GPUs used for training large AI models are different; they are high-performance computing GPUs that provide extremely high computing power and parallel processing capabilities, such as the A100, H series, and H200 that we often talk about.

For instance, the H200 costs 2.3 million yuan!

We have previously written about how to choose the right GPU based on different needs.

“A Comprehensive Guide to GPU Selection for Training, Fine-tuning, and Inference of Large Models”

Why do large AI models prefer GPUs? Because GPUs have four major “killer features”:

- Super capable: It can handle thousands of tasks simultaneously, whether rendering game graphics or running AI computations;
- Fast transmission: It has a “high-speed channel” (high memory bandwidth) that moves data quickly, easily handling high-definition video;
- Super energy-efficient: For parallel workloads, it gets more work done than a CPU at the same power consumption;
- Upgradeable: Multiple GPUs can be combined to multiply the available computing power.
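The “thousands of tasks at once” and “combine multiple GPUs” ideas above can be sketched in a few lines. This is only a toy illustration under stated assumptions: Python threads stand in for devices, and the names `scale_pixel`, `shard`, and `run_on_devices` are hypothetical, not part of any GPU library. It shows the structure of the approach (split the work, process each piece independently, merge the results), not real GPU speed.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_pixel(value: int) -> int:
    """Toy per-element task: brighten one pixel, capped at 255."""
    return min(255, value * 2)


def shard(items: list[int], num_devices: int) -> list[list[int]]:
    """Split the work into contiguous, near-equal chunks, one per device."""
    size = max(1, -(-len(items) // num_devices))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_on_devices(items: list[int], num_devices: int) -> list[int]:
    """Process each chunk independently, then stitch the results together."""
    chunks = shard(items, num_devices)
    with ThreadPoolExecutor(max_workers=num_devices) as pool:
        # Threads are stand-ins for devices; each one handles its own chunk.
        partial = list(pool.map(lambda chunk: [scale_pixel(v) for v in chunk], chunks))
    return [v for chunk in partial for v in chunk]


pixels = list(range(0, 200, 10))  # 20 toy "pixels"
sequential = [scale_pixel(v) for v in pixels]
parallel = run_on_devices(pixels, num_devices=4)
print(parallel == sequential)
```

Because every pixel is processed independently of the others, the work can be divided among as many workers as are available, which is the property that lets GPUs, and clusters of GPUs, scale.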

Currently, almost all large AI models, from GPT and Claude to domestic models like Tongyi Qianwen and Doubao, are “fed” by GPUs.

When it comes to major manufacturers producing GPUs, the most famous is NVIDIA, whose products are used by many international large models.

AMD is also catching up, and the competition between the two has been intense in recent years.

Domestic manufacturers like Jingjia Micro, Moore Threads, and Huawei HiSilicon are also striving to catch up.

How much does it cost to train a large AI model?

Although OpenAI is “tight-lipped,” some speculate that the massive GPT-4 model used tens of thousands or even hundreds of thousands of GPUs.

It is said that DeepSeek V3 used 2048 NVIDIA H800 GPUs at less than 5% of the cost of GPT-4, though some say the actual cost is much higher.

Let’s take a look at the prices of common AI GPUs: the official price of the NVIDIA H20 for domestic supply is between 85,000 and 107,000 yuan, while dealers sell it for 110,000 to 150,000 yuan; the H100 is even more expensive, at 250,000 to 290,000 yuan. The Huawei Ascend 910 costs about 80,000 to 120,000 yuan.
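To get a feel for the scale of these numbers, here is a rough back-of-the-envelope sketch. It multiplies the price ranges quoted above by a cluster size of 2048 cards, the figure mentioned for DeepSeek V3. This is an assumption-laden illustration: the article gives no H800 price, so this is not DeepSeek’s actual bill, and it covers card purchases only, ignoring servers, networking, electricity, and staff. The helper name `cluster_cost_range` is hypothetical.

```python
def cluster_cost_range(num_gpus: int, low_price: int, high_price: int) -> tuple[int, int]:
    """Return the (lowest, highest) total purchase cost in yuan."""
    return num_gpus * low_price, num_gpus * high_price


# Per-card price ranges in yuan, as quoted in the article.
PRICES_YUAN = {
    "H20 (dealer)": (110_000, 150_000),
    "H100": (250_000, 290_000),
    "Ascend 910": (80_000, 120_000),
}

NUM_GPUS = 2048  # example cluster size only (assumption for illustration)

for name, (low, high) in PRICES_YUAN.items():
    low_total, high_total = cluster_cost_range(NUM_GPUS, low, high)
    print(f"{name}: {low_total / 1e6:.1f} to {high_total / 1e6:.1f} million yuan")
```

Even at the cheapest quoted price, a cluster of this size costs well over 100 million yuan in cards alone.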

No wonder it’s said that training large models is a “money-burning business” that only large companies can afford.

However, ordinary people need not worry; there is no need to train large models from scratch. We can fine-tune AI models to better fit actual needs. We have previously written many articles that guide you through preparing data and practical operations.

“Fine-tuning Large Models Series (1): How to Make Large Models Domain Experts?”

“Fine-tuning Large Models Series (2): A Comprehensive Guide to Preparing High-Quality Datasets”

“Practical Experience: The Process of Fine-tuning Qwen to Build a Cultural Tourism Large Model with LLamaFactory”

Moreover, obtaining GPU computing power is not difficult; if we can’t afford it, we can rent it!

The Jiuzhang Cloud Extreme Cloud Computing Platform provides high-performance computing resources billed by usage, and offers registered enterprise and university users a 25-degree computing power package worth nearly 500 yuan.


Google’s TPU Developed to Accelerate AI Computation

Having discussed the familiar GPU, let’s talk about TPU.

In simple terms, TPU is Google’s “custom chip” specifically designed for artificial intelligence, officially known as Tensor Processing Unit.

The birth of TPU is closely related to a Google project.

In 2011, Google X Lab launched the Google Brain project. The lab is so secretive that it has been compared to the CIA.

Andrew Ng, a Stanford University computer science professor and Google advisor at the time, was deeply involved in this project.

OpenAI’s former chief scientist Ilya Sutskever was also involved.

As the Google Brain project progressed, insufficient computing power became a major issue. Therefore, Google decided to develop a dedicated AI chip—thus, TPU was born.

What makes TPU so powerful?

- Professional player: Born for AI computation, it excels at both training AI models and running tasks like speech recognition and image generation;
- Fast processor: When processing massive data like images and speech, it is much faster than ordinary chips, and it is especially adept at heavy calculations like matrix multiplication;
- Energy-efficient: For the same tasks, a TPU typically consumes less power than a GPU, making it suitable for long hours of “overtime” work;
- Team player: Multiple TPUs can form a “super team” capable of training large AI models on the scale of ChatGPT;
- Google ecosystem VIP: It works seamlessly with Google’s AI tools (like TensorFlow), making it very user-friendly.

If the TPU is this capable, why do we rarely hear about large models trained with it?
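Matrix multiplication, mentioned in the list above, is worth a closer look because it makes up the bulk of neural network computation. The sketch below is a plain, unoptimized Python version that also counts the multiply-accumulate (MAC) steps involved. It is a teaching aid, not how a TPU is programmed, and the function names `matmul` and `mac_count` are hypothetical.

```python
def matmul(a: list[list[int]], b: list[list[int]]) -> tuple[list[list[int]], int]:
    """Multiply matrices a (m x k) and b (k x n); also count the MAC steps."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                out[i][j] += a[i][k] * b[k][j]  # one multiply-accumulate
                macs += 1
    return out, macs


def mac_count(m: int, k: int, n: int) -> int:
    """Number of multiply-accumulates for an (m x k) times (k x n) product."""
    return m * k * n


a = [[1, 2, 3], [4, 5, 6]]        # 2 x 3
b = [[7, 8], [9, 10], [11, 12]]   # 3 x 2
product, macs = matmul(a, b)
print(product, macs)                 # [[58, 64], [139, 154]] 12
print(mac_count(1024, 1024, 1024))   # 1073741824
```

A single product of two 1024 x 1024 matrices already needs over a billion multiply-accumulates, and the steps do not depend on each other’s results within a row-column pair’s neighbors, so hardware that performs many of them at once, as AI chips do, has a large advantage over executing them one by one.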

The main reason lies in the usage method.

Google’s TPU is mainly rented through Google Cloud, so companies must go through the cloud to use it. In contrast, directly purchasing GPUs is obviously more flexible, and since NVIDIA has long occupied the market, people are accustomed to using GPUs as the first choice for training large models.

However, there are exceptions; Google’s own Gemini and Apple’s Apple Foundation Model were trained using TPU.

Perhaps in the future, TPU will break the “domination” of GPU and give birth to more amazing large models!

The NPU Closest to Us Ordinary People

Finally, let’s talk about NPU.

As usual, let’s check the definition on DeepSeek (laughs): NPU stands for Neural Network Processing Unit, a chip designed specifically for neural network computation, inference, and prediction, with an architecture inspired by how the human brain’s neural networks work.

If we were to make an analogy, the NPU is like AI’s dedicated “tutor,” enabling AI to quickly “digest” all kinds of knowledge and leap ahead of the competition!

What are the advantages of NPU?

- Compact size: It is often “hidden” directly inside mobile chipsets; popular chips like Snapdragon and Kirin include one without taking up extra space;
- Super-fast computation: It is specifically optimized for AI workloads, processing matrix multiplication and neural networks much faster than an ordinary CPU;
- AI task specialist: Tasks like facial recognition for unlocking phones, voice assistants responding instantly, and automatic beautification of photos are all strengths of the NPU;
- Good partner for mobile devices: Whether in smartphones, smartwatches, or home cameras, devices that need to stay “online” for long periods can use an NPU to deliver performance while saving energy.

NPU is actually ubiquitous in our lives. Video surveillance in communities automatically recognizes faces, access control systems “unlock” with facial recognition, voice assistants instantly understand your commands, and smart speakers accurately play songs… all of these are powered by NPU.

However, it is important to note that NPU is rarely used to train large AI models.

Compared to the massive computing power required for training large models, NPU is better suited for performing “small but precise” tasks in everyday scenarios, closely related to our daily lives.

Perhaps one day, as AI applications become more popular, the AI functions in our smartphones will all be handled by NPU, bringing more surprises!

Now that we have clarified the three types of AI chips, let’s directly present a comparison chart of GPU, TPU, and NPU for a clear view of their differences!
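The main points of the comparison can also be written down as a small lookup table. The sketch below only restates what this article says about each chip; the structure, the field names, and the `suggest_chip` helper are hypothetical simplifications for illustration, not an authoritative selection rule.

```python
# Summary of the three chip types, restating the article's own descriptions.
AI_CHIPS = {
    "GPU": {
        "origin": "graphics processing, widely used in gaming",
        "best_at": "large-scale parallel computing, training large models",
        "access": "buy or rent",
    },
    "TPU": {
        "origin": "Google's custom chip for AI computation",
        "best_at": "matrix-heavy AI workloads, scaling across many chips",
        "access": "mainly rented through Google Cloud",
    },
    "NPU": {
        "origin": "built into mobile and edge chipsets",
        "best_at": "low-power, real-time on-device AI tasks",
        "access": "comes with the device",
    },
}


def suggest_chip(on_device: bool, uses_google_cloud: bool) -> str:
    """Very rough rule of thumb based on the article's comparison."""
    if on_device:
        return "NPU"  # phones, watches, cameras: low power and real-time
    if uses_google_cloud:
        return "TPU"  # available mainly as a Google Cloud service
    return "GPU"      # the default choice for training large models


print(suggest_chip(on_device=True, uses_google_cloud=False))   # NPU
print(suggest_chip(on_device=False, uses_google_cloud=True))   # TPU
print(suggest_chip(on_device=False, uses_google_cloud=False))  # GPU
```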

If you still feel a bit confused after reading, or if you have any questions about the details of any chip, please like and bookmark this article so you can “review and learn anew” at any time.

By the way, how many questions did you get right on the quiz at the beginning of the article?

The correct answers are: 1B 2B 3B 4C 5C 6B. Did you get them all right?

What else would you like to know about AI?

Feel free to share your thoughts in the comments, or you can scan the code to join the 【Jiuzhang AlayaNeW Large Model Technology】 community and exchange ideas with fellow AI enthusiasts.
