The “AI PC” has become one of the hottest topics in consumer electronics. Consumers who are not well-versed in the technical details but are intrigued by the concept tend to believe that an “AI PC” can help them with tasks they are not skilled at, or lighten their daily workload.

For users like us, however, who expect a lot from the “AI PC” and also understand it reasonably well, a different question comes up: AI PC hardware has been around for some time, so why is it only now being truly put into practice?

-

How Early Did AI PCs Actually Appear? They Emerged 7 Years Ago

Leaving aside those professional supercomputers, when did the “AI PC” actually begin to appear for personal consumers?

From the CPU side, the answer is 2019. That year, Intel brought the “DL Boost” instruction set, designed to accelerate 16-bit operations, to its 10th generation Core-X processors (such as the i9-10980XE). The instruction set was later extended to the lower-tier 10th generation Core mobile parts and the entire 11th generation Core product line, in theory doubling their efficiency in deep learning and AI workloads.

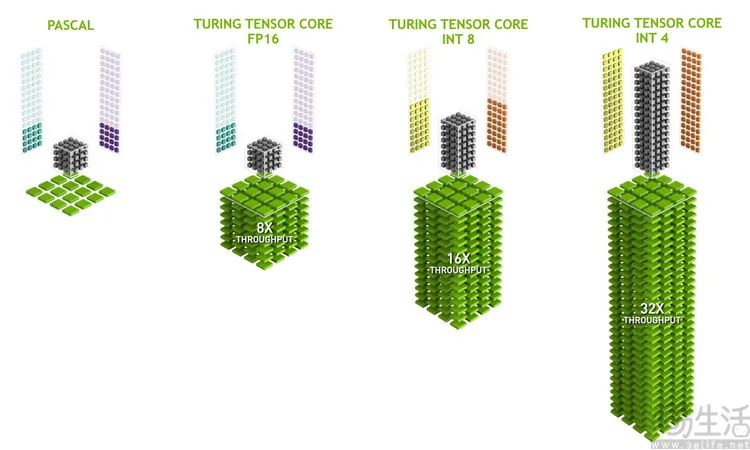

From the graphics card side, the first consumer-grade “AI graphics card” with integrated Tensor Cores, the NVIDIA TITAN V, came to market as early as 2017. According to publicly available technical documentation, it integrates 640 first-generation Tensor Cores, which can deliver 119 TFLOPS of AI compute in FP16 mode.

Interestingly, if you have a head for numbers, you may notice that this 7-year-old graphics card offers more than 10 times the AI compute that many of today’s so-called AI PCs claim.
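As a rough check on the 119 TFLOPS figure, peak Tensor Core throughput can be estimated as cores × FMAs per clock × 2 × clock speed. The sketch below assumes 64 FP16 fused multiply-adds per Tensor Core per clock and a boost clock of about 1.455 GHz; neither number appears in this article, so treat both as assumptions drawn from NVIDIA's published Volta specifications. The 10 TOPS NPU value is purely hypothetical, and FP16 TFLOPS and NPU TOPS (usually quoted for INT8) are not strictly comparable.

```python
def peak_tensor_tflops(tensor_cores: int, fma_per_clock: int, clock_ghz: float) -> float:
    """Estimate peak throughput in TFLOPS; each FMA counts as 2 floating-point operations."""
    ops_per_clock = tensor_cores * fma_per_clock * 2
    return ops_per_clock * clock_ghz / 1000.0  # GFLOPS -> TFLOPS


# 640 Tensor Cores comes from the article; 64 FMA/clock and 1.455 GHz are assumptions.
titan_v_tflops = peak_tensor_tflops(tensor_cores=640, fma_per_clock=64, clock_ghz=1.455)

# Hypothetical NPU rating, used only to illustrate the "more than 10 times" comparison.
hypothetical_npu_tops = 10.0
ratio = titan_v_tflops / hypothetical_npu_tops

print(f"TITAN V peak: {titan_v_tflops:.1f} TFLOPS")  # about 119.2
print(f"Ratio vs. hypothetical NPU: {ratio:.1f}x")
```

Under these assumptions the estimate lands on the same 119 TFLOPS quoted above, which suggests that figure is a theoretical peak at boost clock rather than a measured result.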

-

Why Use NPU Despite Its Low AI Performance?

So why does this situation arise? In our practical experience, it comes down mainly to three factors.

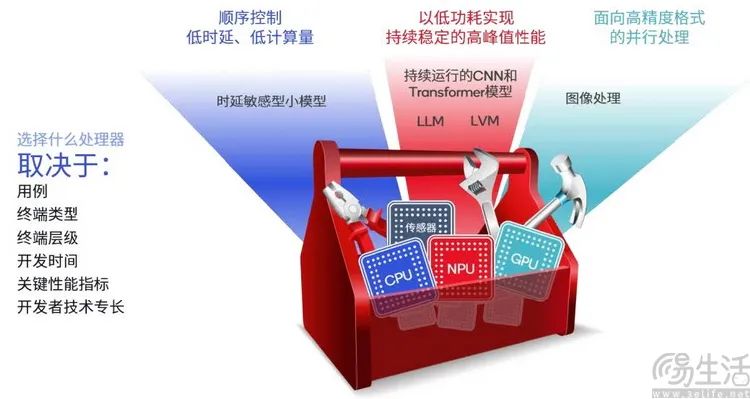

First, there is energy efficiency. Modern CPUs and GPUs do have some AI acceleration capability, and the AI compute of GPUs in particular is impressive. However, CPUs are not efficient at AI computing, and the high power draw of GPUs during AI processing cannot be ignored, especially in devices like laptops. The NPU, which handles AI tasks faster than a CPU and more efficiently than a GPU, therefore earns its place through energy savings.

Some may argue that a more powerful device finishes the task sooner, so wouldn’t that save energy too? That is true, but on today’s “AI PC,” AI tasks are not always compute-heavy jobs like video super-resolution or image generation; they may also be AI voice assistants or AI performance scheduling, which need to run in the background and respond with minimal latency.

Clearly, in such cases, laptops cannot allow CPUs or GPUs to remain in high-power states continuously to meet the demands of these “always-on AI applications.”
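The trade-off can be put in numbers using energy = power × time. For a one-off job, a fast high-power unit can indeed use less energy than a slow one; for an always-on feature, the unit must stay awake for the whole session, so its sustained power draw dominates. Every wattage and duration below is a hypothetical value chosen for illustration, not a measurement of any real chip.

```python
def energy_wh(power_w: float, seconds: float) -> float:
    """Energy in watt-hours for a device drawing power_w for the given duration."""
    return power_w * seconds / 3600.0


# One-off burst job (hypothetical): the GPU draws more power but finishes far sooner.
burst_gpu_wh = energy_wh(power_w=60.0, seconds=5.0)
burst_cpu_wh = energy_wh(power_w=25.0, seconds=30.0)

# Always-on assistant (hypothetical): the unit must stay ready for an 8-hour workday.
EIGHT_HOURS_S = 8 * 3600.0
always_on_gpu_wh = energy_wh(power_w=15.0, seconds=EIGHT_HOURS_S)
always_on_npu_wh = energy_wh(power_w=1.0, seconds=EIGHT_HOURS_S)

print(f"Burst job: GPU {burst_gpu_wh:.3f} Wh vs CPU {burst_cpu_wh:.3f} Wh")
print(f"Always-on: GPU {always_on_gpu_wh:.0f} Wh vs NPU {always_on_npu_wh:.0f} Wh")
```

With these made-up figures the GPU wins the burst job, but keeping it awake all day costs many times what the NPU does, which is the point the argument above rests on.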

AMD’s on-device AI large-model chat feature is very demanding on graphics card performance

Moreover, in practice, many commonly used PC programs and popular games are not “AI”-based, so if the CPU or GPU is constantly occupied with AI computing, less of it is left for everything else and the computer effectively gets slower. Except for dedicated AI tasks, the vast majority of users would not want that.

-

Computational Power Fusion Is the Future, But It Is Not Easy to Achieve

Of course, even though NPUs offer high energy efficiency and low power consumption and can run continuously without affecting CPU and GPU performance, some readers might still wonder: why not use the CPU, GPU, and NPU together for AI computing, flexibly balancing “high performance” and “high energy efficiency”?

In theory, this is certainly achievable. Qualcomm, for instance, has reportedly designed its upcoming Snapdragon X Elite series so that the CPU, GPU, and NPU can all take part in AI computing, with work allocated automatically according to code type and task load to achieve “heterogeneous collaboration.”
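To make the idea of automatic allocation concrete, here is a toy dispatcher that routes a task to a processing unit based on its characteristics. It is only a sketch of the general concept: the `AITask` fields, the `pick_unit` function, and the routing rules are all hypothetical and do not describe how Qualcomm's (or any vendor's) scheduler actually works.

```python
from dataclasses import dataclass


@dataclass
class AITask:
    name: str
    always_on: bool      # must run continuously in the background
    heavy_compute: bool  # e.g. video super-resolution or image generation


def pick_unit(task: AITask, gpu_busy: bool = False) -> str:
    """Hypothetical routing rule: choose a unit by task type and current GPU load."""
    if task.always_on:
        return "NPU"  # lowest sustained power for background work
    if task.heavy_compute and not gpu_busy:
        return "GPU"  # highest raw throughput when the GPU is free
    if task.heavy_compute:
        return "NPU"  # keep the busy GPU (e.g. mid-game) undisturbed
    return "CPU"      # short, light one-off requests


tasks = [
    AITask("voice assistant", always_on=True, heavy_compute=False),
    AITask("image generation", always_on=False, heavy_compute=True),
    AITask("text autocomplete", always_on=False, heavy_compute=False),
]
for t in tasks:
    print(f"{t.name} -> {pick_unit(t)}")
```

A real scheduler would also have to weigh supported data types, memory bandwidth, and driver support on each unit, which is exactly where mixed-vendor hardware runs into trouble.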

However, Qualcomm’s example also hints at why other “AI PC” hardware solutions struggle to get their different processing units to compute together. Simply put, the problem is that each vendor’s products are “fighting their own battles.”

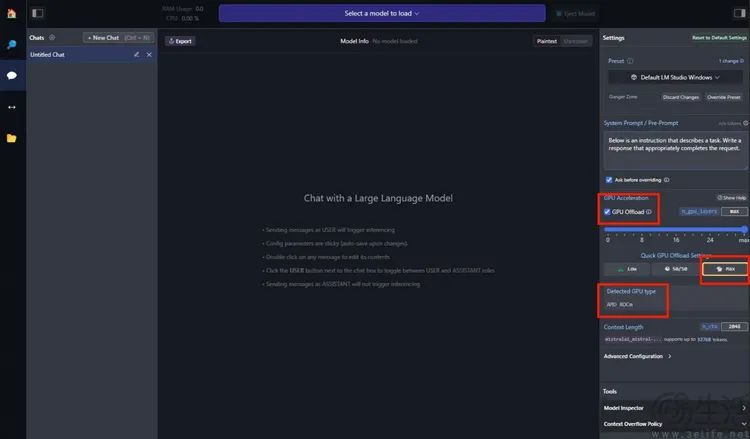

The latest NVIDIA driver interface provides a series of AI acceleration features based on RTX graphics cards

For example, in the GPU field, NVIDIA’s entire RTX series, AMD’s RX 7000 series, and Intel’s Arc series all contain dedicated AI computing units. However, NVIDIA does not make consumer-grade PC CPUs, so it does not consider AI collaboration with the CPU at all. Instead, it keeps shipping graphics-card-based features such as AI video super-resolution, AI color enhancement, AI audio noise reduction, and even AI voice chat, implying that graphics card compute alone is enough for an “AI PC.”

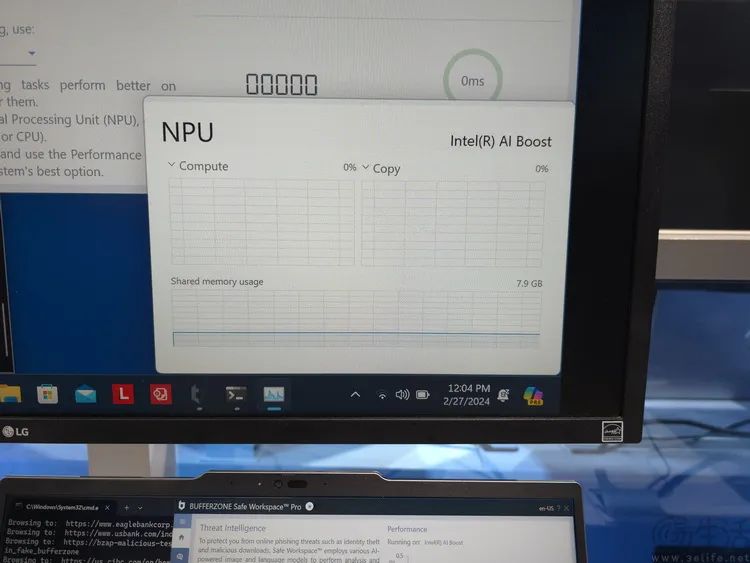

Intel and AMD, by contrast, are in a more awkward position. Although Intel’s Arc discrete graphics cards contain XMX matrix units, the current generation of Arc integrated graphics built into its CPUs drops them, leaving the NPU as the only dedicated AI unit in current Meteor Lake (MTL) CPUs. Even when paired with an Arc discrete card, AI compute cannot be “stacked” across the integrated and discrete graphics.

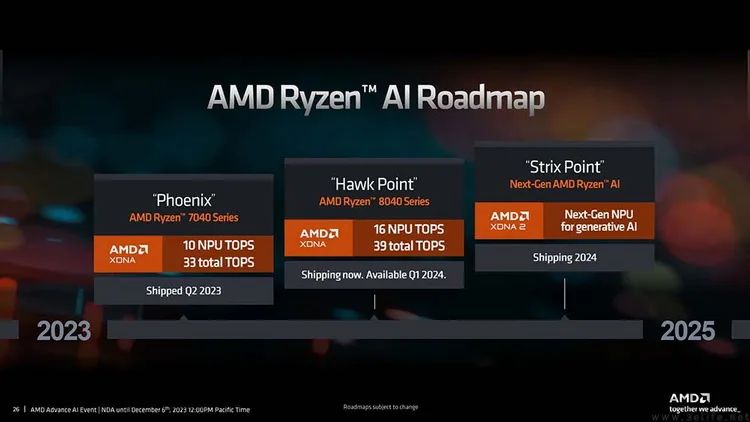

AMD’s CPUs and GPUs now both have AI units, but their architectures and software are not compatible

Meanwhile, AMD uses the mature XDNA architecture from its enterprise computing cards as the NPU in its CPUs, which in theory makes software adaptation easier. However, for some reason, AMD appears to use a different AI unit design in its RDNA 3 discrete graphics architecture, and it has yet to deliver an AI-based game super-resolution feature. Moreover, many previously demonstrated AI use cases on its graphics cards relied only on the GPU’s general floating-point compute, which means higher power consumption than running on the card’s AI units alone and makes it impossible to “compute AI while gaming.”

-

Chip Manufacturers Lack Unity, Downstream Brands Can Only Seek Self-Rescue

Having discussed all this, is there a way to resolve these issues? There may well be. Intel and AMD surely hope to fix the problem of “inconsistent computing power” across their future product lines through architectural changes. As for NVIDIA, although it has no consumer-grade x86 CPU product line, its entry into the Windows on ARM ecosystem with ARM CPUs cannot be ruled out.

Of course, all of the above essentially defers the problem to future architectures and the next generation of hardware platforms. So for consumers about to buy a machine, or for those who have been using graphics cards or CPUs with integrated AI units for years, is there truly no solution?

Not necessarily. On one hand, Microsoft, as the operating system vendor, certainly does not want to see “AI PC” standards fragment, so it has been working at the driver and API levels to integrate AI compute across different hardware architectures. A typical example: whether it is the graphics card’s floating-point compute, the NPU, or the AI units built into the graphics card, all of them fall under the unified scheduling of the DirectML API in Windows, which allows a degree of “computing power fusion.”

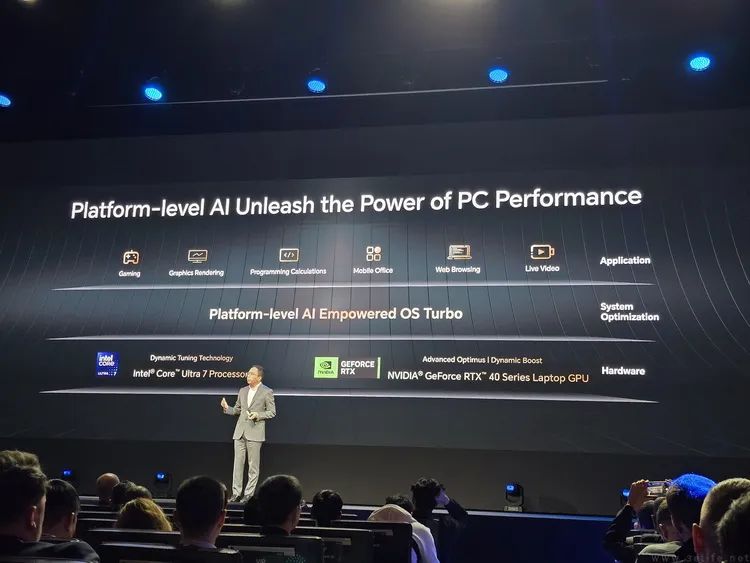
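One common way an application reaches DirectML is through ONNX Runtime, which exposes it as the `DmlExecutionProvider`. The sketch below assumes the application uses ONNX Runtime, which this article does not state; `choose_providers` is a hypothetical helper and `model.onnx` is a placeholder path. It only shows the fallback pattern: prefer DirectML when it is available, otherwise run on the CPU.

```python
# Preference order: DirectML first, plain CPU execution as the fallback.
PREFERRED = ["DmlExecutionProvider", "CPUExecutionProvider"]


def choose_providers(available: list[str]) -> list[str]:
    """Keep preferred providers that are available; always fall back to CPU."""
    chosen = [p for p in PREFERRED if p in available]
    return chosen or ["CPUExecutionProvider"]


if __name__ == "__main__":
    try:
        import onnxruntime as ort  # DirectML needs the onnxruntime-directml build
        available = ort.get_available_providers()
    except ImportError:
        available = ["CPUExecutionProvider"]

    providers = choose_providers(available)
    print("Using providers:", providers)
    # With a real model file (placeholder path), a session would be created like this:
    # session = ort.InferenceSession("model.onnx", providers=providers)
```

The application code stays the same whichever vendor's hardware sits underneath, which is the kind of unification the API layer is meant to provide.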

On the other hand, PC manufacturers are making efforts of their own. They try to unify CPU, GPU, and NPU compute through self-developed AI foundation layers, or integrate GPU acceleration APIs more deeply into the system, to improve efficiency during AI computation. These techniques are meaningful, but they are tied to a particular PC brand and sometimes even a specific product line, so while their effect may be significant, they will not necessarily drive progress across the whole industry.