The 2021 Research and Electronics Competition has come to a close ~

The editor has compiled a collection of award-winning works, sharing them every Monday, so be sure to check back for the series ~

National Third Prize Work: Vision-Navigated Autonomous Driving Car Based on EAIDK310

Team Introduction

Participating Unit: Shanghai University of Applied Technology

Team: EAIDK666

Instructors: Jiang Wenping, Ding Zhaohong

Team Members: Wen Xiaojing, Sun Yijie, Du Weidong

Award Status: National First Prize in the “ARM” Enterprise Special Topic, Third Prize in the National Finals, Third Prize in the Shanghai Regional Competition

Project Background

The automotive industry has entered a revolutionary new era in which intelligence is an inevitable trend. Compared with conventional human driving, autonomous driving offers significant advantages in safety, convenience, and efficiency. Autonomous vehicles are currently being tested in China and have achieved some results on designated roads. On one hand, autonomous driving is developing rapidly and has become a mainstream research direction. On the other hand, China faces international pressure in the chip and electronics field. To challenge ourselves and become familiar with domestic chips, we chose this ARM China enterprise topic, jointly designed by Arm China and OPEN AI LAB. The topic centers on specific autonomous driving application scenarios and requires integrating sensors, algorithms, and designated control devices into a complete application product.

Technical Challenges and Innovations

Technical Challenges

- Software Porting: The EAIDK310 is not a widely used development board like the Raspberry Pi, so little information about it is available online. Porting software to reproduce functionality already implemented on other boards was the first major challenge.

- Performance Optimization: The EAIDK310's onboard chip is the Rockchip RK3228H. As an entry-level embedded AI processor, it has significant performance bottlenecks for image-recognition-based autonomous driving, which made performance optimization the second major challenge.

- Usability Barrier: Because this is an enterprise topic rather than an open topic, we had no prior work to build on. Completing the specified tasks within a limited time on an unfamiliar board, system, and chip was a significant barrier.

Innovations

-

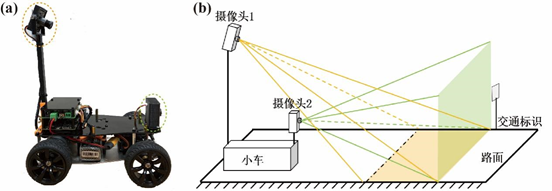

Hardware: A dual-camera architecture was adopted: one camera is installed from a bird’s-eye view to capture the car’s position on the road, with a forward view of about twice the car’s length, while the other is a wide-angle camera used to capture pedestrian traffic signs and other road conditions. The dual-camera architecture greatly improves the efficiency of image capture and processing, reducing the software’s burden of processing images.

-

Software Architecture: Due to the comprehensive factors of the EAIDK310 platform, we abandoned the commonly used ROS solution in the industry and instead used a lightweight data caching library, Redis, and DSDL to achieve similar functionality to ROS, reducing program coupling and implementing a high-performance architecture across processes, nodes, and languages at a lower cost.

-

Algorithm Optimization: The image recognition part was optimized using the Tengine edge inference framework for performance acceleration, improving the real-time capabilities of the entire system.
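The Redis-plus-DSDL idea can be illustrated with a short sketch. The team's framework code has not been published, so this is only an illustration under stated assumptions: each topic is a Redis key that holds the latest message, and every message type has a fixed binary layout declared up front, in the spirit of a DSDL definition. `MessageType`, `Bus`, `DictStore`, the `car:` key prefix, and both message definitions are hypothetical. `DictStore` stands in for a Redis server so the example runs anywhere; a `redis.Redis` client from redis-py offers `set(name, value)` and `get(name)` with the same call shape.

```python
import struct
from dataclasses import dataclass
from typing import Dict, Optional, Protocol, Tuple


class KeyValueStore(Protocol):
    """The two calls the bus needs; redis.Redis from redis-py provides both."""
    def set(self, name: str, value: bytes) -> object: ...
    def get(self, name: str) -> Optional[bytes]: ...


@dataclass(frozen=True)
class MessageType:
    """Hypothetical DSDL-style message: ordered field names plus a struct layout."""
    name: str
    fields: Tuple[str, ...]
    fmt: str  # little-endian struct format, one code per field

    def encode(self, **values: float) -> bytes:
        return struct.pack(self.fmt, *(values[f] for f in self.fields))

    def decode(self, data: bytes) -> Dict[str, float]:
        return dict(zip(self.fields, struct.unpack(self.fmt, data)))


# Hypothetical message definitions shared by every node.
LANE_ERROR = MessageType("lane_error", ("offset_px", "heading_deg"), "<ff")
DRIVE_CMD = MessageType("drive_cmd", ("speed", "steer"), "<hh")


class Bus:
    """Latest-value topics: one key per message type, overwritten on publish."""
    def __init__(self, store: KeyValueStore, prefix: str = "car:") -> None:
        self.store = store
        self.prefix = prefix

    def publish(self, msg: MessageType, **values: float) -> None:
        self.store.set(self.prefix + msg.name, msg.encode(**values))

    def read(self, msg: MessageType) -> Optional[Dict[str, float]]:
        raw = self.store.get(self.prefix + msg.name)
        return None if raw is None else msg.decode(raw)


class DictStore:
    """In-process stand-in for a Redis server, for testing without hardware."""
    def __init__(self) -> None:
        self.data: Dict[str, bytes] = {}

    def set(self, name: str, value: bytes) -> bool:
        self.data[name] = value
        return True

    def get(self, name: str) -> Optional[bytes]:
        return self.data.get(name)
```

On the car, `Bus(redis.Redis())` would replace `Bus(DictStore())`, so a Python control node and a vision node in another language could share messages as long as both agree on the layouts. Storing only the latest value suits control loops that care about the newest reading; Redis's publish/subscribe commands would be the alternative when nodes need to be notified of each new message rather than polling.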

Practical Value

Thanks to these hardware and software innovations, this work offers a low-cost, efficient embedded visual navigation framework that lowers the hardware cost of researching this technology. The Redis-based software solution is also a framework that can be replicated in other embedded systems. In addition, the experimental results confirm Tengine's strong performance on embedded platforms, offering ideas for optimizing and accelerating AI inference in embedded systems.

System Design

System Architecture Diagram

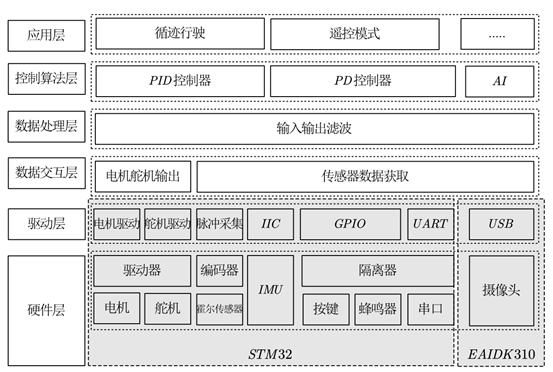

The overall system architecture is shown in the diagram. From bottom to top, its layers are the hardware layer, driver layer, data interaction layer, data processing layer, control algorithm layer, and application layer. The hardware layer is the physical embodiment of the design and mainly comprises motors, servos, speed sensors, an IMU, buttons, and buzzers. The driver layer is the lowest software layer and interacts directly with the hardware to make it work: PWM drives the motors and servos, input capture reads the speed sensors, the I2C (IIC) bus connects the IMU, and GPIO and UART handle human-machine and machine-to-machine interaction. The data interaction layer connects the lower and upper layers: it further abstracts and encapsulates the data flow from the driver layer and gives upper-layer applications a unified interface, with permission control, to the underlying hardware. The data processing (filtering) layer is the first stage of data processing and mainly provides basic mathematical operations and digital signal processing methods. The control algorithm layer takes the raw or processed data from the layers below and uses it to control the model car. The application layer is the top logical layer, where the concrete functions are implemented, such as making the car follow a line and deciding its autonomous driving strategy.
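The data processing layer is described only as providing basic mathematics and digital signal processing. As one hypothetical example of what such a layer might contain, here is a first-order low-pass (exponential smoothing) filter of the kind often applied to noisy speed-sensor or IMU readings; the class name and the `alpha` parameter are illustrative, not taken from the team's code.

```python
from typing import Optional


class LowPassFilter:
    """First-order IIR low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""

    def __init__(self, alpha: float) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Optional[float] = None  # no output until the first sample

    def update(self, sample: float) -> float:
        if self.state is None:
            self.state = sample  # seed with the first sample to avoid a start-up ramp
        else:
            self.state += self.alpha * (sample - self.state)
        return self.state
```

A smaller `alpha` smooths more but adds lag, so the value would be tuned to the sensor's noise and the control loop's rate.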

Hardware Architecture Diagram

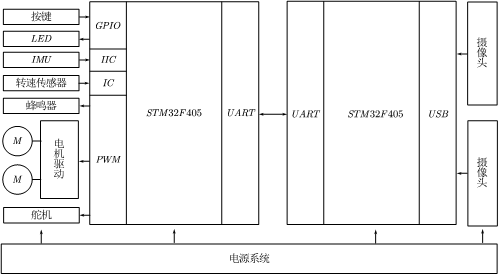

The diagram above shows the overall hardware framework of this design. The hardware uses a main controller plus an auxiliary controller. The main controller is the EAIDK310 development board with its onboard RK3228H processor; the auxiliary controller is an STM32F405, a 32-bit microcontroller. The main controller collects and processes camera data and runs the main control algorithms, while the auxiliary controller handles motor driving, speed and steering control, and body attitude estimation.
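The speed and steering control on the STM32F405 is not described further. PID control is a common choice for such loops, so the following is a hypothetical sketch of a discrete PID controller with output clamping and simple anti-windup. It is written in Python to match the other examples here; on the car it would run as C firmware, and the gains, period, and limits are placeholders rather than the team's values.

```python
class PID:
    """Discrete positional PID with output clamping and simple anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float,
                 out_min: float, out_max: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative on the very first step

    def step(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        out = self.kp * error + self.ki * candidate + self.kd * derivative
        if self.out_min <= out <= self.out_max:
            self.integral = candidate  # integrate only while not saturated
            return out
        return max(self.out_min, min(self.out_max, out))
```

For example, `PID(kp=2.0, ki=0.5, kd=0.0, dt=0.01, out_min=-1000, out_max=1000)`, called every 10 ms with the target and measured wheel speed, would yield a motor PWM command. Skipping integration while the output is saturated keeps the integral term from winding up when the motor is already at full duty.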

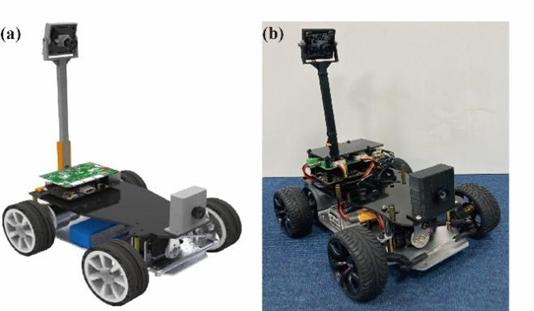

Specifically, the main controller side uses a dual-camera scheme, as shown in diagram (a). Camera 1 captures the car's position on the road; it is mounted about 15 cm high at the rear of the car with an installation angle of 12 to 15 degrees, which gives it a forward view of about twice the car's length. Camera 2 is a wide-angle camera mounted at the front of the car, facing straight ahead, that captures real-time road information such as pedestrians and traffic signs. Diagram (b) shows the fields of view of the two cameras. Compared with a single-camera scheme, each camera's data can be used directly, one for road segmentation and the other for traffic sign processing, which reduces the processor's image preprocessing workload.
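How the mounting height and angle determine the look-ahead distance can be worked out with flat-ground geometry. The text does not say what the 12 to 15 degree angle is measured from; assuming it is the downward tilt of the optical axis below horizontal, the axis meets the road at a distance d = h / tan(θ) ahead of the camera. The function names and the vertical field of view passed to `visible_ground_range` are hypothetical.

```python
import math


def axis_ground_distance(height_m: float, tilt_deg: float) -> float:
    """Ground distance to where the optical axis hits a flat road.
    Assumes tilt_deg is measured downward from horizontal."""
    return height_m / math.tan(math.radians(tilt_deg))


def visible_ground_range(height_m: float, tilt_deg: float, vfov_deg: float):
    """(near, far) ground distances seen by the bottom and top image rows.
    far is infinite when the top edge of the view reaches the horizon."""
    half = vfov_deg / 2.0
    lower = tilt_deg + half  # bottom edge of the image
    near = 0.0 if lower >= 90.0 else height_m / math.tan(math.radians(lower))
    upper = tilt_deg - half  # top edge of the image
    far = math.inf if upper <= 0.0 else height_m / math.tan(math.radians(upper))
    return near, far


print(round(axis_ground_distance(0.15, 12), 2))  # 0.71
print(round(axis_ground_distance(0.15, 15), 2))  # 0.56
```

With h = 0.15 m, the axis lands roughly 0.56 to 0.71 m ahead of the camera for tilts of 15 and 12 degrees, so a steeper tilt shortens the look-ahead.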

Software Architecture

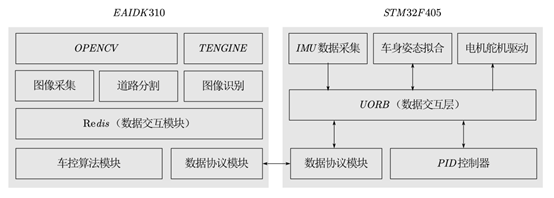

Mirroring the hardware, the software is divided into a main processor side and an auxiliary processor side, as shown in the architecture diagram. On the main processor side, OpenCV handles image capture and basic processing, and Tengine runs inference for the trained AI models. All processing and computation results are written to Redis, where other programs (vehicle control algorithms, data protocols, image recognition modules, etc.) can read them. The vehicle control driver is a Python node that communicates with the STM32 microcontroller over a serial port: it reads control commands from the upper-layer application (the vehicle control algorithm) from Redis in real time and sends them to the auxiliary processor via a serial protocol, commanding the car to perform the corresponding actions.
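The serial protocol between the Python vehicle control driver and the STM32 is not specified. A minimal sketch, assuming a hypothetical fixed-length frame: two header bytes 0xAA 0x55, a signed 16-bit speed and a signed 16-bit steering value (both little-endian), and a one-byte additive checksum over the payload. On the car, the bytes would be written with pyserial (`serial.Serial(port, baudrate).write(frame)`); here the encoder and decoder are shown alone so they run without hardware.

```python
import struct
from typing import Optional, Tuple

HEADER = b"\xAA\x55"   # hypothetical frame start marker
PAYLOAD_FMT = "<hh"    # speed, steer as little-endian int16
FRAME_LEN = len(HEADER) + struct.calcsize(PAYLOAD_FMT) + 1  # 7 bytes


def checksum(payload: bytes) -> int:
    """Additive checksum: sum of payload bytes modulo 256."""
    return sum(payload) & 0xFF


def encode_drive_frame(speed: int, steer: int) -> bytes:
    payload = struct.pack(PAYLOAD_FMT, speed, steer)
    return HEADER + payload + bytes([checksum(payload)])


def decode_drive_frame(frame: bytes) -> Optional[Tuple[int, int]]:
    """Return (speed, steer), or None if the frame is malformed."""
    if len(frame) != FRAME_LEN or not frame.startswith(HEADER):
        return None
    payload, received = frame[len(HEADER):-1], frame[-1]
    if checksum(payload) != received:
        return None
    return struct.unpack(PAYLOAD_FMT, payload)
```

A driver loop would read the latest drive command from Redis, call `encode_drive_frame`, and write the bytes to the port. The header and checksum let the STM32 resynchronize after a dropped byte and discard corrupted frames; a CRC would catch more error patterns than an additive sum.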

System Testing

The final design and overall view of the car are shown in the diagram.

Traffic sign props

Dataset

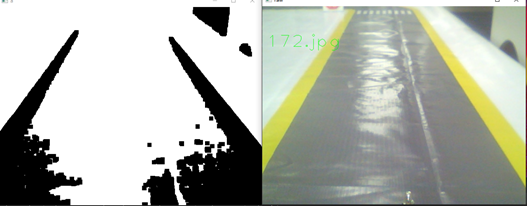

Track segmentation line following
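Line following used basic machine vision, but the exact algorithm is not given. One common approach, sketched here as an assumption rather than the team's method: threshold the bird's-eye image so track pixels are 1 (for example with OpenCV's `cv2.threshold`), find the leftmost and rightmost track pixels in a few rows near the bottom, and steer toward their midpoint. The function names, row choice, gain, and sign convention are hypothetical.

```python
from typing import List, Optional, Sequence


def row_center(row: Sequence[int]) -> Optional[float]:
    """Midpoint between the leftmost and rightmost track pixels in one row."""
    cols = [i for i, v in enumerate(row) if v]
    if not cols:
        return None
    return (cols[0] + cols[-1]) / 2.0


def lane_offset(mask: List[Sequence[int]], rows: Sequence[int]) -> Optional[float]:
    """Average offset (pixels) of the track center from the image center over
    the chosen rows; positive means the track lies to the right."""
    width = len(mask[0])
    centers = []
    for r in rows:
        c = row_center(mask[r])
        if c is not None:
            centers.append(c)
    if not centers:
        return None  # track lost in every sampled row
    return sum(centers) / len(centers) - (width - 1) / 2.0


def steering_command(offset_px: float, gain: float = 0.5) -> float:
    """Proportional steering toward the track center (positive = turn right)."""
    return gain * offset_px
```

Averaging several rows damps noise from a single row, and returning `None` when the track disappears lets the control layer decide whether to hold the last command or stop.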

Traffic sign recognition
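Sign recognition ran a trained model through Tengine; the Tengine API calls are not reproduced here. Whatever the runtime, the raw class scores usually need post-processing before the control logic acts on them. A hypothetical sketch: softmax over the scores, a confidence threshold, and a majority vote over recent frames so a single misclassified frame does not trigger an action. The class labels, threshold, window, and quorum are assumptions.

```python
import math
from collections import Counter, deque
from typing import Deque, List, Optional, Sequence

LABELS = ("stop", "left", "right", "pedestrian")  # hypothetical classes


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SignVoter:
    """Reports a sign only after it wins `quorum` of the last `window` frames."""

    def __init__(self, threshold: float = 0.8, window: int = 5, quorum: int = 3) -> None:
        self.threshold = threshold
        self.quorum = quorum
        self.history: Deque[Optional[str]] = deque(maxlen=window)

    def update(self, scores: Sequence[float]) -> Optional[str]:
        probs = softmax(scores)
        best = max(range(len(probs)), key=probs.__getitem__)
        # Low-confidence frames are recorded as "no sign".
        self.history.append(LABELS[best] if probs[best] >= self.threshold else None)
        votes = Counter(label for label in self.history if label is not None)
        if not votes:
            return None
        label, count = votes.most_common(1)[0]
        return label if count >= self.quorum else None
```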

Future Prospects

Building on the Redis and DSDL robot framework proposed in this work, we plan to further improve and extend it into a viable alternative to ROS, especially on low-performance processors.

Conclusion

Through this exploration of the EAIDK310, and with practical needs as the application background, we implemented an autonomous driving demo based on dual cameras. For the initial functional requirements, we tested line following and traffic sign recognition. Because line following used basic machine vision algorithms, its results against complex backgrounds were not ideal, but it performed very well on a simple track. Traffic sign recognition used machine learning, and we recorded a large amount of training data during testing. Because the training data were recorded in the same scenarios used for verification, the recognition results were good. This project shows that although the RK3228H is too limited to run large AI models, a Redis-based data interaction framework and the Tengine edge inference framework let even basic embedded devices play a role in AI projects, offering a low-cost solution.

Reflections on Participation

First of all, I would like to thank Arm China for recognizing our team's work. Our results were quite polarized: third prize when compared against all teams at the Shanghai regional competition, but first prize within the ARM enterprise topic. Unsurprisingly, we also received third prize at the national finals, and we are at peace with both the third and the first prize. I clearly remember how shocked we were when we saw the other teams' posters at the Shanghai regional competition. Our work was plain and unadorned: no esoteric topic, no high-end technology, and no strong financial backing. Because of budget constraints, the camera on our car was the kind people used for QQ video chats ten years ago. We therefore felt the third prize was fair, since our work really did not match the polish of other teams' entries. However, we felt we deserved the first prize in the enterprise topic, because our work followed the competition topic closely without any deviation, and we tackled the problems step by step, overcoming them one by one…

When I first received the EAIDK310 development board, I ended up reinstalling the system twice a day on average, whether because memory ran out while installing software or because the system crashed. I could hardly get along with this board. Later I learned to back up the system with rsync, which helped, although it only turned reinstalling into restoring; that was at least an improvement. With a month left before the registration deadline, I was still struggling with OpenCV, ROS, pygame, Tengine, and Rockchip… This board is so niche that we had to compile many software packages ourselves, and its performance is modest: what worked well on other platforms ran poorly here. Only when we decided to abandon ROS and many other dependencies, start everything from scratch, and add an STM32 auxiliary processor did our work finally get on track. But then again, isn't it better to stand on the shoulders of giants? So we turned to Redis, which is well known in the internet industry (students doing embedded work may not be very familiar with it). Combining Redis and DSDL essentially yields a lightweight ROS-like framework. We decided to go for it and studied the source code of Redis and DSDL. Finally, a week before the registration deadline, we had the basic framework working: the car could move and the nodes could communicate with each other. At that point we confirmed our registration for the ARM topic, named our team EAIDK666, and applied for a development board (we had previously bought one out of pocket). For image recognition, we carefully studied the public course videos, expanded the dataset, and retrained the model for deployment. By then the registration deadline was close, and we still hadn't made the slides or recorded the video… We were racing against time.

Thus, we walked step by step, and the first prize is a great recognition and encouragement for us!

It was gratitude toward Arm China that kept me going to finish this write-up. As of today (August 27, 2021), I have just finished the finals of my last competition of the summer (the Ji Chuang competition). I have no expectations for its results, because right now I just want a good rest; I am truly exhausted. Thank you, Arm China, for recognizing our work, and thank you to my mentor! Because some of this work will be written up in a paper for submission, this write-up is not very detailed. Once the paper is submitted, I will share it with everyone, and if conditions permit, we will also release the source code of the framework built with Redis and DSDL to inspire others.

About Anxin Education

Anxin Education is an innovative education platform focused on AIoT (Artificial Intelligence + Internet of Things), providing a comprehensive AIoT education solution from primary and secondary schools to higher education institutions. Anxin Education relies on Arm technology to develop the ASC (Arm Smart Connectivity) curriculum and talent training system, which has been widely applied in industry-university-research cooperation in higher education and STEM education in primary and secondary schools, aiming to cultivate talents in the field of intelligent connectivity that meet the demands of the times.