The paper “Distributed Robust Kalman Filtering Fusion Algorithm for ADAS System Visual and Millimeter-Wave Radar” was published in Issue 5, 2024 of Automobile Engineering. To achieve accurate multi-target detection and tracking for Advanced Driver Assistance Systems (ADAS), the paper adopts a sensor configuration of one visual sensor and five millimeter-wave radars (1V5R) and designs a multi-sensor information fusion algorithm based on distributed robust Kalman filtering. The algorithm lets the ADAS perceive surrounding targets in an integrated way and improves the accuracy of multi-target detection and tracking.

In many ADAS functions, accurate perception of surrounding targets is a prerequisite. Multi-target detection and tracking with a single sensor is limited in both perception range and detection accuracy. In addition, when measuring moving targets on the road, sensors can exhibit frame drops, offsets, abrupt changes, and measurement errors that vary with distance. These are caused by complex environmental interference, the sensors' measurement principles, and the motion of the measurement platform. Such behavior departs significantly from the Gaussian noise assumption underlying the Kalman filter, which lowers the accuracy of existing Kalman-filter-based sensor fusion methods.
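To make the last point concrete, the minimal sketch below (Python with NumPy; all numbers are hypothetical, not from the paper) applies the standard Kalman measurement update to a one-dimensional position. The correction equals the gain times the innovation, and nothing bounds the innovation, so a single 20 m jump in the measurement pulls the estimate 10 m away from the prior.

```python
import numpy as np

def kf_update(x, P, z, H, R):
    """Standard Kalman measurement update (linear model, Gaussian-noise assumption)."""
    S = H @ P @ H.T + R                  # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)       # Kalman gain
    x_new = x + K @ (z - H @ x)          # correction is linear in the innovation
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new

# Hypothetical 1-D case: prior position 50 m (variance 1 m^2), sensor variance 1 m^2.
x0 = np.array([50.0])
P0 = np.array([[1.0]])
H = np.array([[1.0]])
R = np.array([[1.0]])

x_ok, _ = kf_update(x0, P0, np.array([50.5]), H, R)   # nominal measurement
x_bad, _ = kf_update(x0, P0, np.array([70.0]), H, R)  # abrupt jump / offset
print(x_ok, x_bad)  # -> [50.25] [60.]
```

With a gain of 0.5, the estimate moves half-way toward whatever the sensor reports, whether the reading is plausible or a glitch.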

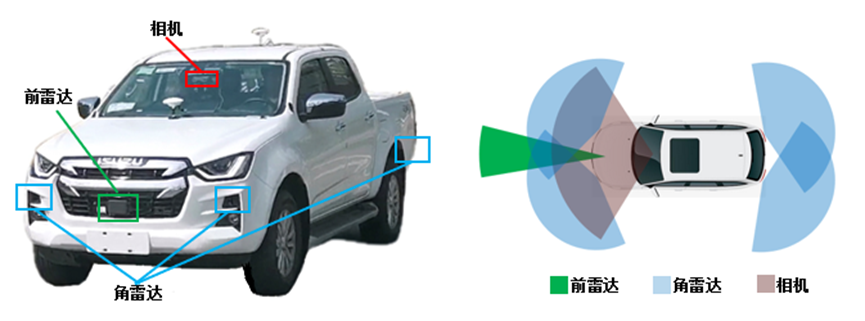

1. Sensor Configuration Scheme: The paper designs a configuration with one visual sensor and five millimeter-wave radars (1V5R) so that the ADAS can perceive targets all around the vehicle. The visual sensor has a detection range of 120 m and a field of view of 120°; the front radar has a detection range of 150 m and a field of view of 20°; the four corner radars each have a detection range of 80 m and a field of view of 150°. All sensors output data at 20 Hz.
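The coverage implied by these numbers can be checked with a small sketch. It rests on loud assumptions: every sensor is treated as mounted at the vehicle origin, and the corner-radar boresight angles (±45°, ±135°) are hypothetical, since the article gives only ranges and fields of view. Coordinates are x forward, y left, in metres; `Sensor` and `covering_sensors` are illustrative names.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Sensor:
    name: str
    max_range_m: float
    fov_deg: float
    yaw_deg: float  # boresight direction; HYPOTHETICAL mounting angle, not from the paper

# Ranges and fields of view from the 1V5R scheme; yaw angles are assumed.
SENSORS = [
    Sensor("camera", 120.0, 120.0, 0.0),
    Sensor("front_radar", 150.0, 20.0, 0.0),
    Sensor("corner_front_left", 80.0, 150.0, 45.0),
    Sensor("corner_front_right", 80.0, 150.0, -45.0),
    Sensor("corner_rear_left", 80.0, 150.0, 135.0),
    Sensor("corner_rear_right", 80.0, 150.0, -135.0),
]

def covering_sensors(x, y, sensors=SENSORS):
    """Return names of sensors whose range and FOV contain point (x, y).

    Simplification: all sensors sit at the vehicle origin.
    """
    rng = math.hypot(x, y)
    bearing = math.degrees(math.atan2(y, x))
    hits = []
    for s in sensors:
        # Angular offset from the boresight, wrapped into [-180, 180).
        off = (bearing - s.yaw_deg + 180.0) % 360.0 - 180.0
        if rng <= s.max_range_m and abs(off) <= s.fov_deg / 2:
            hits.append(s.name)
    return hits

print(covering_sensors(140, 0))  # -> ['front_radar']
```

A target 140 m straight ahead is beyond the camera's 120 m range, so only the front radar sees it; at 50 m ahead the camera, front radar, and both front corner radars overlap, which is the kind of redundancy fusion can exploit.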

Figure 1: Vehicle Platform and Sensor Configuration Scheme

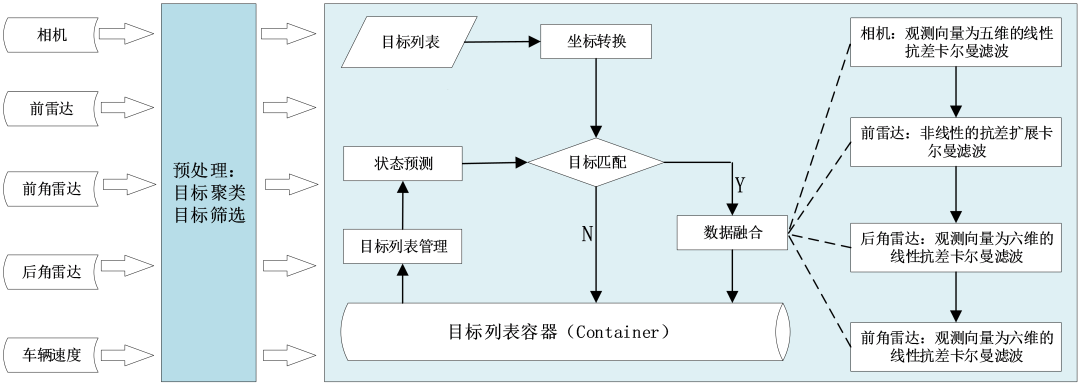

2. Fusion Algorithm Architecture Design: The paper designs a multi-sensor information fusion framework based on the distributed robust Kalman filtering algorithm. After the sensor data are preprocessed, information from the different sensors is fused. To let the Kalman filter dynamically adjust the weight given to each sensor's observations according to that sensor's measurement error, a robustness factor is introduced to adjust the measurement covariance matrix, so that dynamic sensor errors are estimated and corrected in real time.
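The article does not give the exact form of the robustness factor. The sketch below shows one common way to realize the idea, under stated assumptions: a constant-velocity target model, a position-only measurement, and a hypothetical innovation-based rule λ = max(1, d²/γ), where d² is the normalized innovation squared and γ a chi-square gate. When a measurement agrees with the prediction, λ = 1 and the step is the ordinary Kalman update; when it jumps, λ grows and the inflated covariance λR down-weights it. The names `cv_model`, `robust_update`, and the gate value are illustrative, not taken from the paper.

```python
import numpy as np

def cv_model(dt, q):
    """Constant-velocity model for state [x, vx, y, vy] (assumed model)."""
    F1 = np.array([[1.0, dt], [0.0, 1.0]])
    Q1 = q * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
    # Same 1-D model on each axis -> block-diagonal 4x4 matrices.
    return np.kron(np.eye(2), F1), np.kron(np.eye(2), Q1)

def predict(x, P, F, Q):
    """Time update: propagate state and covariance one step."""
    return F @ x, F @ P @ F.T + Q

def robust_update(x, P, z, H, R, gate=9.21):
    """Measurement update with a robustness factor lam >= 1 that scales R.

    gate = 9.21 is the 99% chi-square quantile for a 2-D measurement.
    Hypothetical rule: lam = max(1, d2 / gate).
    """
    nu = z - H @ x                              # innovation
    S = H @ P @ H.T + R                         # nominal innovation covariance
    d2 = float(nu @ np.linalg.solve(S, nu))     # normalized innovation squared
    lam = max(1.0, d2 / gate)                   # robustness factor
    R_eff = lam * R                             # inflated measurement covariance
    S = H @ P @ H.T + R_eff
    K = P @ H.T @ np.linalg.inv(S)
    I_KH = np.eye(len(x)) - K @ H
    x_new = x + K @ nu
    P_new = I_KH @ P @ I_KH.T + K @ R_eff @ K.T  # Joseph form (numerically robust)
    return x_new, P_new, lam

if __name__ == "__main__":
    F, Q = cv_model(dt=0.05, q=1.0)             # 20 Hz sensor rate
    H = np.array([[1.0, 0, 0, 0], [0, 0, 1.0, 0]])
    R = 0.25 * np.eye(2)
    x, P = np.array([50.0, 0.0, 0.0, 0.0]), np.eye(4)
    x, P = predict(x, P, F, Q)
    for z in (np.array([50.2, 0.1]), np.array([70.0, 0.0])):  # nominal, then a jump
        x, P, lam = robust_update(x, P, z, H, R)
        print(f"z={z}, lam={lam:.2f}, x={x[0]:.2f}")
```

In a distributed setup, each sensor would run its own local filter of this kind before the local tracks are combined.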

Figure 2: Fusion Framework Based on Distributed Robust Kalman Filtering Algorithm

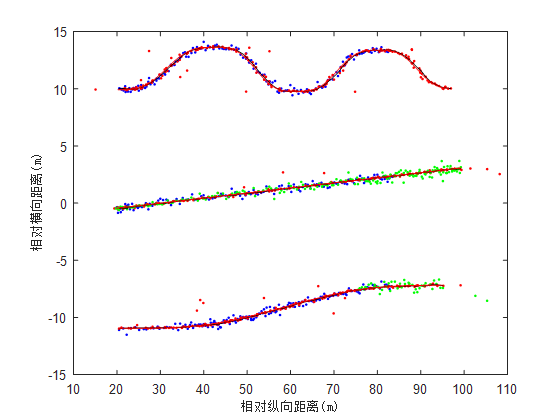

3. Experimental Verification: The accuracy of the proposed fusion algorithm was verified through simulation experiments and real vehicle tests.
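The article reports detection errors without stating the exact metric in this summary. One common way to summarize a tracked target's accuracy against ground truth (from simulation or a reference system) is the root-mean-square position error; the helper below is a hypothetical sketch of that calculation, not the paper's evaluation code.

```python
import numpy as np

def position_rmse(est_xy, true_xy):
    """RMS Euclidean position error between estimated and true positions (N x 2, metres)."""
    est = np.asarray(est_xy, dtype=float)
    truth = np.asarray(true_xy, dtype=float)
    if est.shape != truth.shape:
        raise ValueError("estimate and ground-truth arrays must have the same shape")
    err = np.linalg.norm(est - truth, axis=1)   # per-frame position error
    return float(np.sqrt(np.mean(err ** 2)))

# Hypothetical two-frame example: errors of 0 m and 5 m.
print(position_rmse([[0, 0], [3, 4]], [[0, 0], [0, 0]]))  # about 3.536
```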

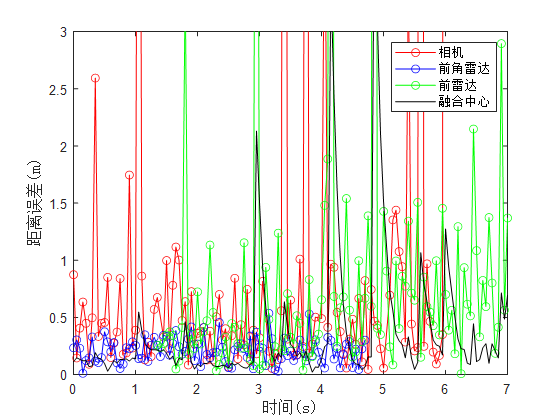

The experimental results show that the distributed robust Kalman filtering algorithm achieves multi-sensor information fusion and that the fused error is lower than the measurement error of a single sensor. Introducing the robustness factor further reduces the detection error, improving the accuracy of multi-target detection and tracking.
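Why fusion can push the error below that of each individual sensor is easiest to see in information-weighted (track-to-track) combination of local estimates, one standard way to merge the outputs of distributed local filters. This sketch assumes the local estimation errors are uncorrelated, which is a simplification (correlated tracks would call for a method such as covariance intersection), and the numbers are hypothetical: a camera estimate that is weaker in range but better laterally, and a radar estimate with the opposite profile. `fuse_tracks` is an illustrative name.

```python
import numpy as np

def fuse_tracks(estimates):
    """Fuse local estimates [(x_i, P_i), ...] by information weighting.

    Assumes uncorrelated local errors (a simplification).
    """
    info = sum(np.linalg.inv(P) for _, P in estimates)          # information matrices add
    info_vec = sum(np.linalg.inv(P) @ x for x, P in estimates)
    P_fused = np.linalg.inv(info)
    x_fused = P_fused @ info_vec
    return x_fused, P_fused

# Hypothetical [longitudinal, lateral] position estimates (m) and covariances (m^2).
cam = (np.array([40.0, 1.0]), np.diag([4.0, 0.25]))     # camera: poor range, good lateral
radar = (np.array([41.0, 1.6]), np.diag([0.25, 1.0]))   # radar: good range, poorer lateral
x_f, P_f = fuse_tracks([cam, radar])
print(x_f, np.diag(P_f))
```

Each fused variance (about 0.235 m² longitudinally and 0.2 m² laterally) is smaller than the better of the two inputs on that axis, because the inverse covariances add.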

(a) Relative Motion Trajectories of Three Targets

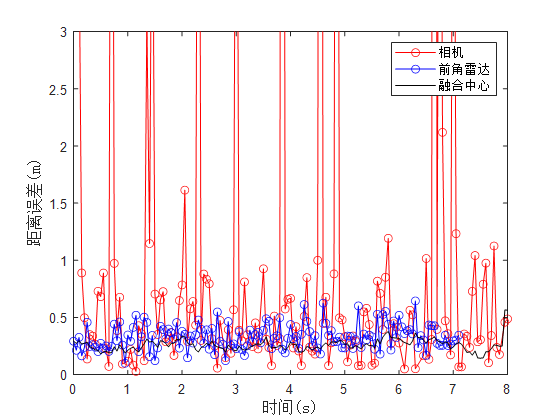

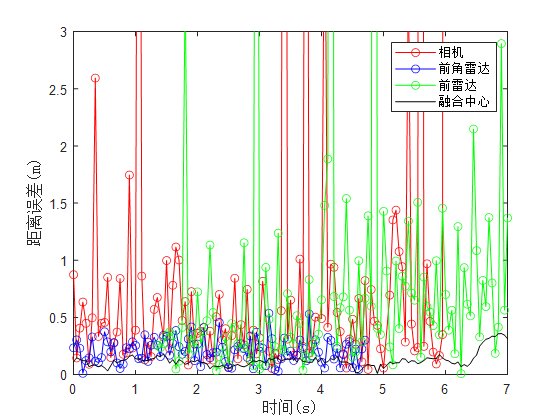

(b) Detection Error of the First Target

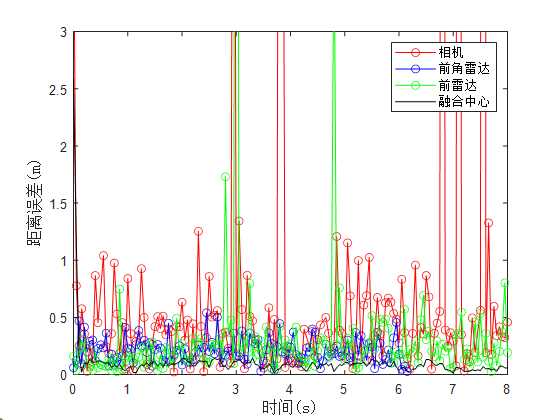

(c) Detection Error of the Second Target

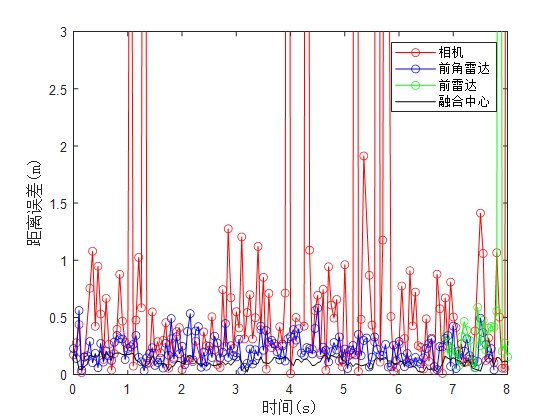

(d) Detection Error of the Third Target

Figure 3: Multi-Target Tracking Test Results

(a) Detection Error Before Introducing Robustness Factor

(b) Detection Error After Introducing Robustness Factor

Figure 4: Robustness Factor Comparison Test

4. Innovations and Significance

To address the differing characteristics of the sensor data, the paper proposes a distributed robust Kalman filtering algorithm that effectively fuses information from multiple sensors and improves target detection and tracking accuracy. The research offers ideas and a framework for multi-source sensor data fusion in advanced driver assistance and has both theoretical significance and engineering application value.

About ‘Automobile Engineering’

Online Submission: www.qichegongcheng.com
