In the era of AI-driven smart cars, cameras have become essential as the “eyes of the car.” Camera-based ADAS is widely adopted in smart driving because of its broad range of applications, high reliability, and adaptability to new requirements. ADAS cameras are typically deployed at the front, sides, and rear of the vehicle to provide driving and parking assistance. The front camera is most commonly installed behind the rearview mirror, the rear camera near the license plate, and the side cameras on both sides of the car near the mirrors.

This article provides insights into the resolution (in Mpixel) and frame rate (fps) choices for automotive camera systems, focusing on the single front camera, which can also serve as a reference for rear/side camera selection.

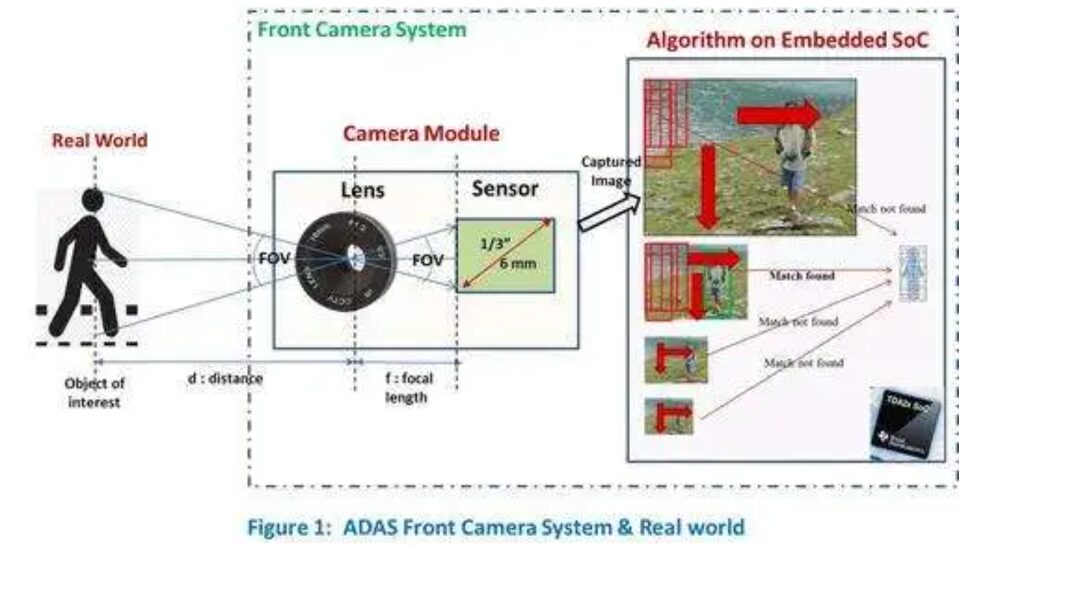

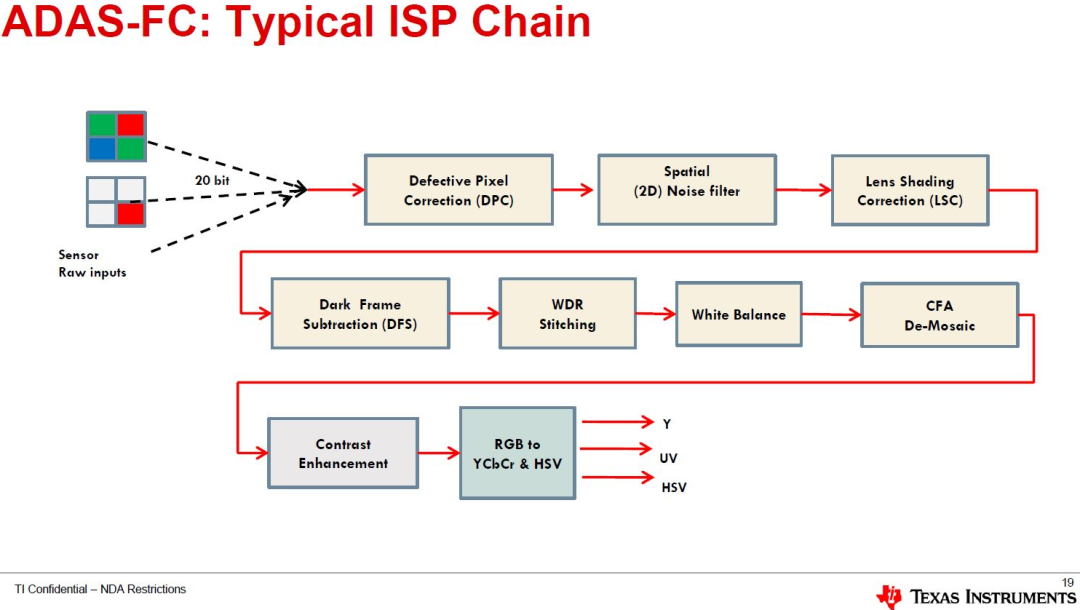

The ADAS Front Camera (FC) system captures and identifies objects of interest (such as pedestrians, cars, and motorcycles) from the real world. As shown in the figure below, the ADAS FC system consists of a camera module (lens and sensor) that captures the scene as a series of images (i.e., video), which are then analyzed on an embedded processor chip to identify objects of interest.

The key function of the ADAS front camera is to identify objects of interest, estimate their distance from the car, and trigger emergency braking in time to avoid a collision. These objectives must be achieved under strict constraints: high detection accuracy, low cost, low power consumption, and small size. The solution must also work in difficult conditions, such as nighttime or low-light driving.

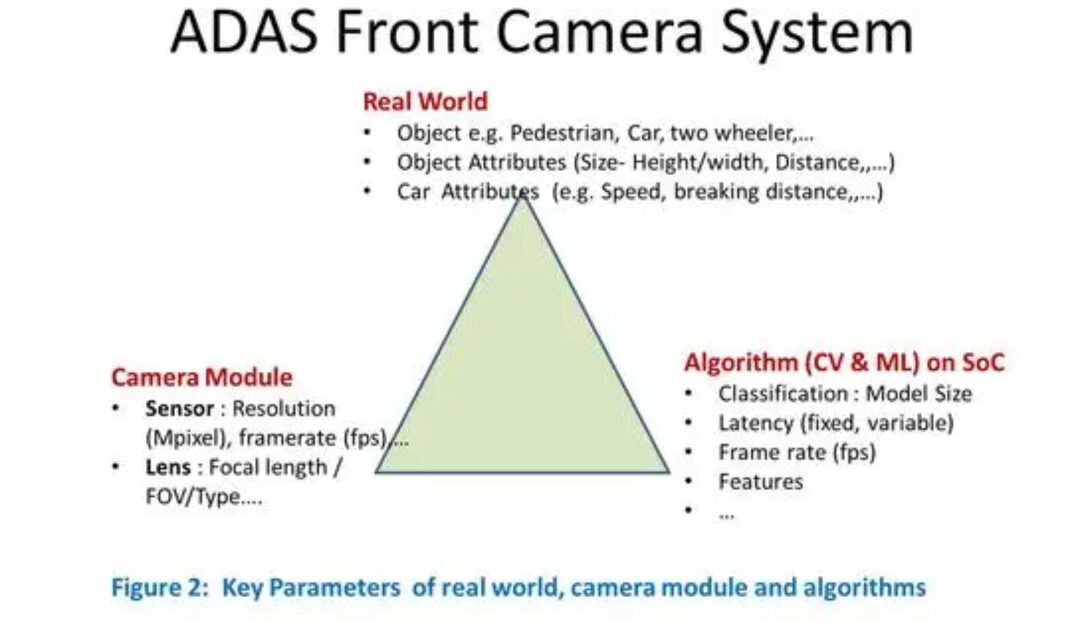

The three participants in the overall solution interact with each other to achieve the goals of the ADAS system:

- Real World: This includes the vehicle itself and the objects of interest that may become potential accident targets (e.g., pedestrians, vehicles, motorcycles).

- Camera Module: Composed of lens and sensor components. It captures the real world as image pixels (resolution) at fixed intervals (fps).

- Algorithm: It typically finds objects of interest (e.g., pedestrians) and alerts the driver to potential collisions. Since pedestrians may be at unknown distances, it usually searches a series of progressively scaled-down images, known as an image pyramid, using a pedestrian model of fixed size (e.g., 64×32 pixels). The search is performed on attributes (features extracted from pixels) rather than on the raw pixels, for better robustness. Once a matching pedestrian is found, the distance to the pedestrian and the time to collision (TTC) are calculated. These algorithms belong to the fields of computer vision (CV) and machine learning (ML) and run on embedded SoCs (systems on chip).
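The image pyramid search can be illustrated with a minimal sketch. It only enumerates the pyramid levels and does not run a detector. The 64×32 model is taken to be 64 pixels high and 32 wide; the function name `pyramid_levels`, the 1280×720 input size, and the scale step of 1.25 are illustrative assumptions, not values from the original article.

```python
def pyramid_levels(image_w, image_h, model_w=32, model_h=64, scale_step=1.25):
    """Return (scale, level_w, level_h) for every pyramid level.

    The image is shrunk by `scale_step` per level until the fixed-size
    pedestrian model no longer fits. All defaults are illustrative.
    """
    levels = []
    scale = 1.0
    while image_w / scale >= model_w and image_h / scale >= model_h:
        levels.append((scale, int(image_w / scale), int(image_h / scale)))
        scale *= scale_step
    return levels


for scale, w, h in pyramid_levels(1280, 720):
    # A 64-pixel-high model at this level corresponds to a pedestrian
    # that is 64 * scale pixels high in the original image.
    print(f"scale {scale:5.2f}: {w}x{h} -> pedestrians ~{64 * scale:.0f} px high")
```

The level with scale 1.0 matches the smallest (farthest) pedestrians, while the coarsest levels match pedestrians that fill most of the frame, which is why a single fixed-size model can cover a range of distances.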

The figure below shows the three participants and various parameters for achieving the goals of the ADAS system.


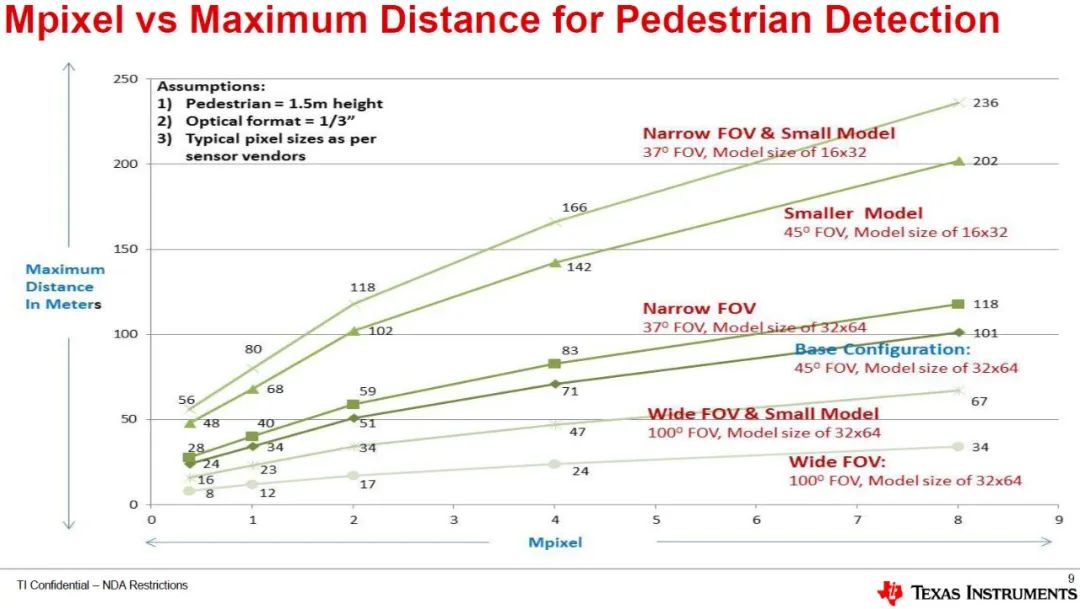

Generally, the Mpixel (MP) of the imaging sensor determines the farthest distance at which a given object can be detected and recognized. The figure below shows the typical relationship between distance to pedestrians and Mpixel under various configurations.

The curves in the figure assume a real-world pedestrian height of 1.5 m and an image sensor with a 1/3″ optical format (by the standard optical-format convention, a 1/3-inch sensor has an actual diagonal of approximately 6 mm). The baseline configuration uses a lens with a 45° field of view (FOV) and requires the pedestrian to be 64 pixels high in the captured image. As shown in Figure 3, the maximum distance to a pedestrian is 34 m at 1 MP and increases to 101 m at 8 MP. Currently, automotive image sensors use 1 MP, with plans to increase to 2 MP in the near future and eventually reach 8 MP.

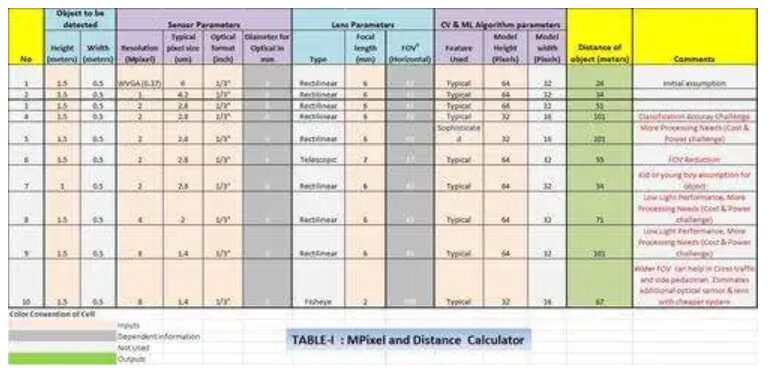
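The relationship between resolution and detection range can be approximated with a simple pinhole-camera model. The sketch below is a rough estimate under stated assumptions, not the method used to produce the article's curves. The image widths (1280, 1920, and 3840 pixels for 1, 2, and 8 MP) and the function name are assumptions; the 1.5 m pedestrian, 64-pixel model height, and 45° FOV come from the text.

```python
import math


def max_detection_distance_m(image_width_px, hfov_deg,
                             object_height_m=1.5, model_height_px=64):
    """Farthest distance at which the object still spans model_height_px.

    Pinhole model: focal length in pixels = (width / 2) / tan(FOV / 2),
    and an object of height H at distance d projects to f * H / d pixels.
    """
    focal_px = (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    return focal_px * object_height_m / model_height_px


# Assumed image widths for each resolution class (not from the article).
for label, width_px in [("1 MP", 1280), ("2 MP", 1920), ("8 MP", 3840)]:
    print(f"{label}: {max_detection_distance_m(width_px, 45.0):.1f} m")
```

Under these assumptions the estimates land a few metres above the article's 34 m, 51 m, and 101 m, which suggests the original curves use slightly different lens or sensor parameters. The model still shows the two main levers: distance scales linearly with horizontal pixel count and inversely with the required model height.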

Table I summarizes the effect of increasing resolution (0.3 MP to 8 MP) on the maximum distance to pedestrians (24 m to 101 m), along with the assumptions and possible trade-offs. Higher resolution brings its own problems, such as poorer low-light performance and higher computational and power requirements.

For a given resolution (e.g., 2MP), there are several methods to increase the maximum distance to pedestrians, summarized as follows.

- Using a smaller pedestrian model during the image pyramid search: Halving the model size doubles the distance (51 m to 101 m) (Row 4). Generally, smaller pedestrian models reduce detection accuracy and produce more false positives.

- Using a smaller model together with complex features: This gives a longer distance with similar detection accuracy (Row 5). Compared with traditional pedestrian features (e.g., Haar, HOG), complex features (e.g., CoHOG, convolutional neural networks, CNN) require more computational power (and cost).

- Using a narrower FOV (i.e., a longer focal length): This covers a smaller frontal area in the real world but reaches a greater distance (51 m to 59 m) (Row 6). An extreme reduction in FOV (e.g., down to 25°) requires additional sensors to cover a similar frontal area.

In the case of higher MP (e.g., 8MP), one possible compromise is to use a wider FOV lens to cover the front and intersections. This eliminates the need for additional sensors, thus reducing costs (Row 10).
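The coverage side of the FOV trade-off can be made concrete with basic trigonometry. This is a minimal sketch: the function name and the example distance of 50 m are illustrative assumptions, and the model treats the camera as an ideal pinhole with no lens distortion.

```python
import math


def coverage_width_m(distance_m, hfov_deg):
    """Width of the scene covered at a given distance for a horizontal FOV."""
    return 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)


# Example FOV values; 50 m is an arbitrary illustrative distance.
for hfov_deg in (25.0, 45.0, 90.0):
    print(f"FOV {hfov_deg:4.0f} deg covers "
          f"{coverage_width_m(50.0, hfov_deg):6.1f} m at 50 m")
```

A narrow lens sees roughly half the width that a 45° lens does at the same distance, which is why an extreme FOV reduction calls for extra sensors, while a high-resolution sensor can afford a wider lens and still keep enough pixels on a distant pedestrian.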


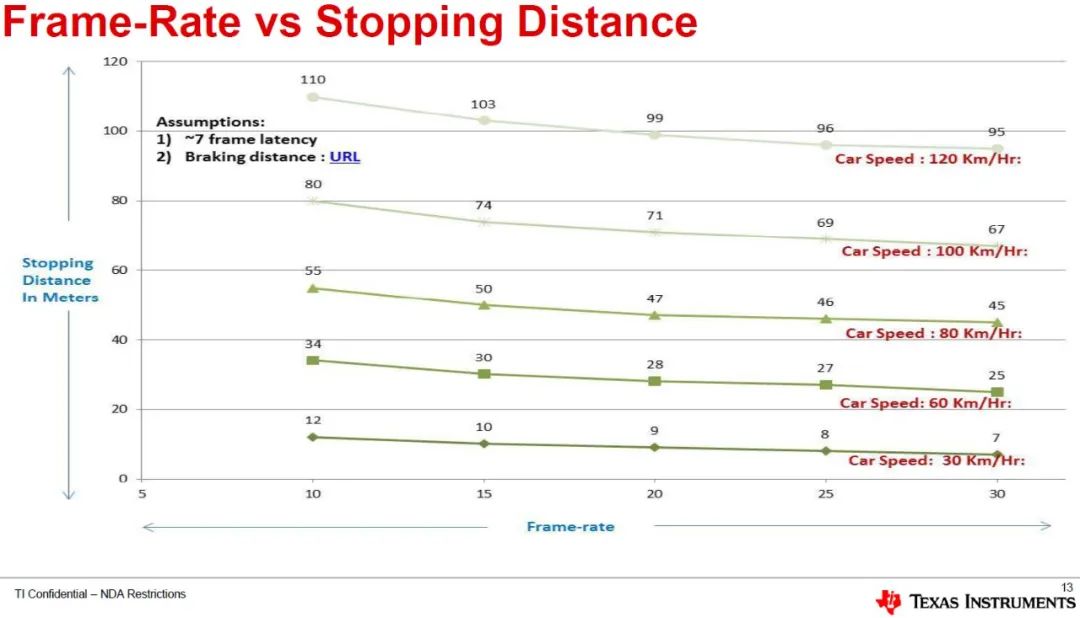

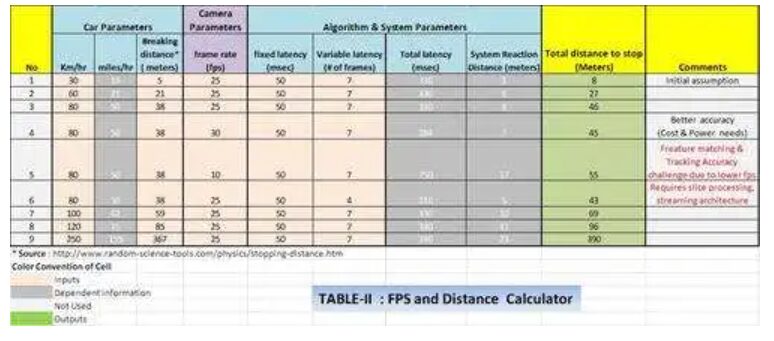

Generally, the frame rate of the imaging sensor determines the stopping distance the vehicle needs to avoid colliding with an object. The figure below shows the typical relationship between the stopping distance of a vehicle and the frame rate at various speeds.

The stopping distance is the sum of the distance covered while the pedestrian is being detected, before the brakes are applied, and the distance covered during braking. The graph assumes a seven-frame delay during pedestrian detection, consisting of approximately three frames of processing delay and about four frames of tracking delay to improve detection quality. It also assumes braking distances according to UK road regulations. As shown in the figure, at a vehicle speed of 80 km/h the stopping distance decreases from 55 m to 45 m as the frame rate increases from 10 fps to 15 fps (typical when the original article was written) and on to 30 fps (typical of most systems today).
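The stopping-distance calculation described above can be sketched as follows. This is a reconstruction under assumptions, not the original author's code. The braking term uses the UK Highway Code rule of thumb (braking distance in feet equals speed in mph squared, divided by 20). The fixed 50 ms overhead is inferred from the latency figures quoted later in the article (750 ms at 10 fps, 284 ms at 30 fps), which are each about 50 ms more than seven frame periods; the article does not state it explicitly.

```python
def stopping_distance_m(speed_kmh, fps, delay_frames=7, fixed_overhead_s=0.05):
    """Distance covered during detection latency plus braking distance."""
    speed_ms = speed_kmh / 3.6
    speed_mph = speed_kmh / 1.609344

    # Detection latency: seven frame periods plus an inferred fixed overhead.
    latency_s = delay_frames / fps + fixed_overhead_s
    detection_m = speed_ms * latency_s

    # UK Highway Code rule of thumb: braking distance [ft] = mph^2 / 20.
    braking_m = (speed_mph ** 2 / 20) * 0.3048

    return detection_m + braking_m


for fps in (10, 15, 30):
    print(f"{fps:2d} fps: {stopping_distance_m(80, fps):.1f} m at 80 km/h")
```

With these assumptions the result at 80 km/h falls from about 54 m at 10 fps to about 44 m at 30 fps, close to the 55 m and 45 m read from the figure. The braking term is independent of frame rate, so only the detection term shrinks as fps rises.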

Table II shows the effect of increasing vehicle speed (30 km/h to 250 km/h) on the stopping distance (8 m to 390 m), along with the parameters and assumptions used.

For a given vehicle speed (e.g., 80 km/h), there are several methods to reduce the stopping distance, as follows.

- Using higher frame rates: Increasing the frame rate (from 10 to 30 fps) reduces latency (from 750 ms to 284 ms). It also improves the performance of object tracking (e.g., of pedestrians), but at the cost of higher processing requirements (Row 4 and Row 5).

- Using stream-based and slice-based architectures: This reduces latency (from 750 ms to 210 ms) because data passes between subcomponents at a finer granularity, such as a row or sub-image, rather than a whole frame (Row 6). Such a system is more complex, takes longer to develop, and may run into dependency problems at the algorithm-framework level.
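Why finer granularity cuts latency can be seen in a toy pipeline model. The sketch below is only an illustration of the general pipelining effect and does not reproduce the article's 750 ms and 210 ms figures. It assumes every stage needs one frame period to process a full frame, that work divides evenly across slices, and that tracking delay is ignored; the stage and slice counts are hypothetical.

```python
def frame_level_latency_ms(fps, stages):
    """Each stage waits for a complete frame from the previous stage."""
    frame_ms = 1000.0 / fps
    return stages * frame_ms


def slice_level_latency_ms(fps, stages, slices_per_frame):
    """Stages hand over slices, so later stages start before the frame ends."""
    slice_ms = 1000.0 / fps / slices_per_frame
    # Classic pipeline fill: the last slice leaves the last stage after
    # (slices + stages - 1) slice periods.
    return (slices_per_frame + stages - 1) * slice_ms


# Hypothetical configuration: 3 processing stages, 10 slices per frame.
print(frame_level_latency_ms(10, 3))       # frame-by-frame handoff
print(slice_level_latency_ms(10, 3, 10))   # slice-by-slice handoff
```

In this model the processing latency of a three-stage pipeline at 10 fps drops from 300 ms to 120 ms with ten slices per frame. The gain comes purely from overlapping the stages, which is also why such designs depend on algorithms that can work on partial images.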


Many of the smart driving solutions currently on the domestic market are still in the hardware-stacking phase, piling on components rather than optimizing them, whereas the automotive industry is essentially an application industry. The best solution is a configuration that is optimal at the lowest cost and can still be expanded and upgraded. This is the core competitiveness that those of us working on applications at OEMs should focus on.

References and Images

- Original article: ADAS Front Camera: Demystifying Resolution and Frame-Rate, EE Times

- Camera and Image Technology Basics, Jon Chouinard (PDF)

- Surround View Camera System for ADAS on TI’s TDAx SoCs, TI (PDF)

