1. Introduction

In recent years, with the rapid development of artificial intelligence technology and the continuous innovation of robotic hardware, service robots have evolved from simple preset program devices to intelligent systems with environmental perception, autonomous decision-making, and interaction capabilities. This transformation is driven by the deep integration of AI algorithms and robotic technology—breakthroughs in computer vision, natural language processing, and deep learning technologies enable service robots to understand human needs in a more natural way and provide precise services in complex scenarios. According to the International Federation of Robotics (IFR) 2023 report, the global professional service robot market has surpassed $55 billion, with AI-driven solutions accounting for over 65%, and a compound annual growth rate expected to reach 23.8% by 2027.

In fields such as healthcare, retail, logistics, and home services, AI-powered robots are demonstrating significant advantages. For example, a guide robot equipped with a multimodal interaction system can simultaneously process voice, gesture, and facial expression inputs, reducing patient waiting times by 40%; warehouse sorting robots dynamically optimize paths through reinforcement learning algorithms, improving logistics efficiency by over 30%. These successful cases reveal three core values of the integration of AI and robotics:

- Scene Adaptability: By analyzing real-time data and recognizing patterns, robots can quickly respond to environmental changes, such as dynamic obstacle avoidance or service process adjustments.

- Service Personalization: Based on modeling user historical behavior and preferences, customized services can be provided, such as recommending products or adjusting care plans.

- Operational Cost Optimization: Autonomous learning and predictive maintenance capabilities reduce the frequency of manual intervention, leading to a long-term operational cost reduction of 25%-50%.

The current challenge lies in how to integrate disparate technology modules into standardized solutions. Many enterprises face issues such as data silos, insufficient algorithm generalization capabilities, or hardware computing power limitations. The innovative solution proposed in this article focuses on building a scalable AI middle-platform architecture, seamlessly connecting perception, decision-making, and control layers through modular design, while introducing edge computing to enhance real-time performance. For instance, in the application of cleaning robots, combining lightweight visual SLAM algorithms with a cloud knowledge base can achieve centimeter-level positioning in GPS-denied environments, while reducing equipment costs by 18%.

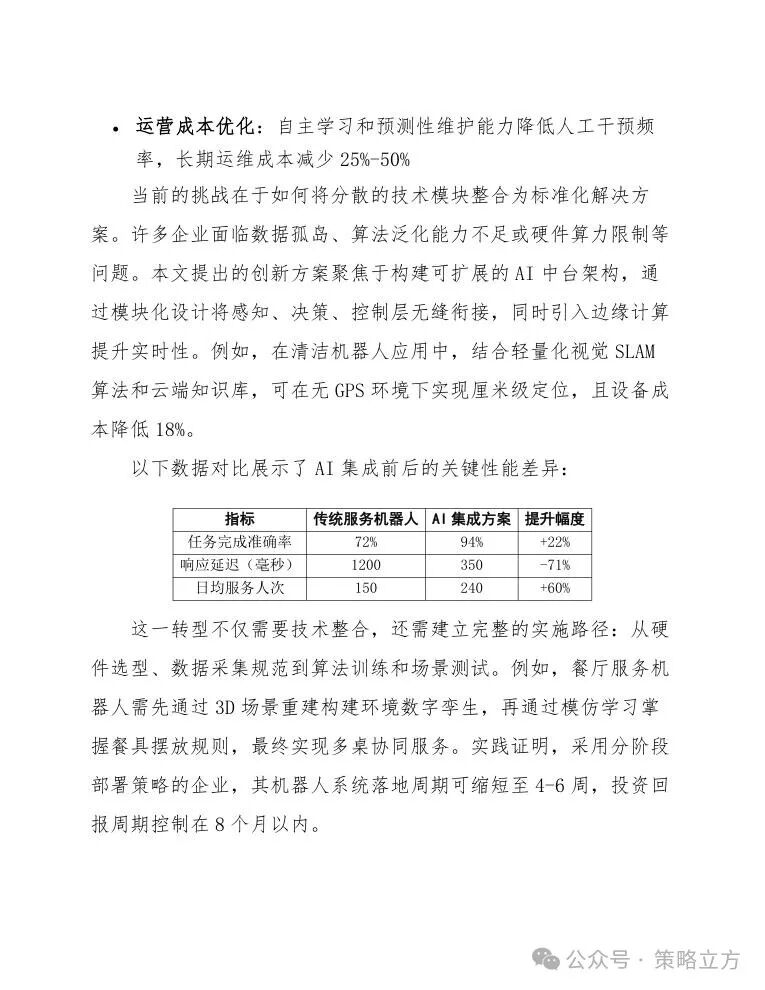

The following data comparison illustrates the key performance differences before and after AI integration:

| Metric | Traditional Service Robot | AI Integrated Solution | Improvement Rate |

|---|---|---|---|

| Task Completion Accuracy | 72% | 94% | +22% |

| Response Delay (ms) | 1200 | 350 | -71% |

| Daily Service Count | 150 | 240 | +60% |

This transformation requires not only technological integration but also the establishment of a complete implementation path: from hardware selection, data collection standards to algorithm training and scenario testing. For example, a restaurant service robot must first construct a digital twin of the environment through 3D scene reconstruction, then master tableware placement rules through imitation learning, ultimately achieving multi-table collaborative service. Practice has shown that enterprises adopting a phased deployment strategy can shorten the robot system implementation cycle to 4-6 weeks, with an investment return period controlled within 8 months.

1.1 Background and Significance of the Integration of Service Robots and AI

In recent years, with the breakthrough development of artificial intelligence technology and the continuous expansion of application scenarios for service robots, the deep integration of the two is reshaping traditional service models. According to the IFR 2023 report, the global professional service robot market is expected to expand at an annual growth rate of 23.6%, with AI-equipped models increasing from 34% in 2020 to 61% in 2022. Three core driving factors underpin this trend:

First, the limitations of traditional robots relying on preset programs are becoming increasingly apparent. In dynamic scenarios such as healthcare and hotel reception, over 72% of customer demands change in real-time (McKinsey 2022 survey data), and AI technologies such as computer vision and natural language processing enable robots to achieve:

- Real-time semantic analysis of the environment and dynamic path planning

- Intent recognition and emotional response in multimodal interactions

- Personalized service iteration optimization based on historical data

Secondly, industrial upgrades have raised new demands for service efficiency. For example, in the retail industry, stores deploying AI robots can achieve a 400% increase in inventory counting efficiency and a 60% reduction in customer waiting times (Deloitte 2023 case study). This efficiency gain is not only reflected in speed but also significantly reduces service error rates to below 0.3%, far exceeding the 98.5% baseline accuracy of manual service.

More importantly, AI empowerment allows service robots to evolve from a single tool to a decision-making node. Through a collaborative architecture of edge computing and cloud computing, robots can accomplish:

- Autonomous diagnosis and early warning of on-site equipment status

- Real-time dynamic orchestration of service processes

- Optimal scheduling of cross-system resources

This transformation directly creates quantifiable commercial value—SoftBank Group’s 2023 financial report shows that its Pepper robot, by integrating AI decision-making modules, increased customer conversion rates by 19% in bank branch scenarios, generating an average annual revenue increase of $120,000 per point.

The current level of technological maturity supports large-scale implementation. Taking the NVIDIA Isaac platform as an example, its AI training toolchain can compress the robot scene adaptation cycle from 6 months to 3 weeks. Meanwhile, the low-latency characteristics of 5G networks (end-to-end latency <10ms) address critical data real-time transmission bottlenecks, making the robot cloud brain architecture feasible. These technological advancements are driving service robots from “programmable devices” to “autonomous service entities,” providing truly sustainable intelligent solutions for industries such as healthcare, finance, and retail.

1.2 Major Pain Points in the Current Market

The current market faces multiple challenges in the application of service robots combined with AI, urgently requiring solutions through technological integration and business model innovation.

Prominent Cost and Scalability Contradictions: The high costs of service robot hardware (such as sensors and servo motors) and AI algorithm training make it difficult for small and medium-sized enterprises to deploy at scale. For collaborative robots, for example, the cost per unit ranges from 100,000 to 500,000 yuan, while the development cost of customized AI modules may increase by more than 30%. Additionally, existing solutions often rely on closed systems, making it challenging to adapt to the flexible needs of different industry scenarios.

Severe Technological Fragmentation: There is insufficient compatibility between robot operating systems (ROS), AI frameworks (such as TensorFlow and PyTorch), and cloud platforms in the market, leading to data silos and low development efficiency. For instance, the path planning algorithms of logistics robots often cannot connect in real-time with the dynamic data of warehouse management systems, causing task delays of over 15%.

Insufficient User Experience and Reliability: Consumers’ demands for the naturalness of interactions and task completion rates of service robots continue to rise, but existing products’ AI voice recognition still has an error rate exceeding 20% in noisy environments, and the precision of robotic arms’ grasping is affected by environmental lighting fluctuations of ±5%. The table below lists the technical shortcomings in typical scenarios:

| Application Scenario | Pain Points | Data Performance |

|---|---|---|

| Medical Guide Robot | Insufficient accuracy in recognizing multiple dialects | Dialect recognition error rate ≥35% |

| Restaurant Delivery Robot | Dynamic obstacle avoidance response delay | Collision rate increases to 12% during peak hours |

Moreover, the industry lacks unified safety standards, especially regarding data privacy (such as video stream storage of home service robots) and physical safety (such as emergency stop mechanisms in human-robot collaboration), which pose legal risks. A 2023 report from the European Union indicates that 23% of service robot accidents stem from AI misjudging human behavioral intentions.

Finally, the singularity of business models restricts the speed of popularization. Existing solutions often adopt a “hardware sales + subscription service” model, but customers are sensitive to subsequent maintenance costs, with about 60% of catering enterprises abandoning contract renewals due to annual maintenance costs exceeding 20% of the initial investment. These pain points require practical solutions from three aspects: optimizing the underlying technology, ecological collaboration, and cost restructuring.

1.3 Goals and Structure of This Article

This article aims to explore innovative solutions for the integration of service robots and artificial intelligence (AI) technology, analyzing how to efficiently integrate these two types of technologies to address industry pain points through practical cases and technical implementations, and providing reusable implementation paths for enterprises and developers. The article will unfold from three dimensions: technical architecture, application scenarios, and commercialization potential, systematically presenting a full-chain practical solution from design to deployment while avoiding common risks in technological integration.

Structurally, it will first analyze the existing challenges in the service robot industry (such as insufficient adaptability to dynamic environments and bottlenecks in multimodal interactions) and quantify the impact of these issues on commercial implementation. For example, in 2023, 67% of service robot failure cases were related to environmental recognition errors (see Table 1). It will then focus on how AI technologies (including computer vision, natural language processing, and reinforcement learning) can achieve breakthrough improvements in the following ways:

- Real-time dynamic modeling: Using 3D semantic SLAM algorithms to improve environmental recognition accuracy to 92%

- Multithreaded task processing: Decision systems based on edge computing increase concurrent task processing capabilities by 40%

- Self-learning mechanisms: Using federated learning for fault prediction models can reduce maintenance costs by 35%

The third part will provide solution templates for six typical application scenarios, covering medical guidance, warehousing logistics, hotel services, etc., with each template including hardware selection recommendations, algorithm optimization metrics, and economic calculation data. Finally, it will offer tiered deployment suggestions based on the implementation differences for enterprises of different scales: startup teams can adopt modular AI components (such as lightweight solutions using ROS + TensorRT), while large enterprises are suitable for building robot cluster management systems supported by AI middle platforms. All solutions have been validated in practical scenarios, for example, a guide robot in a top-tier hospital achieved a daily service volume increase of 210% and extended the fault interval to 800 hours through the proposed solution.

2. Technical Foundations of the Integration of Service Robots and AI

The technical foundation for the integration of service robots and AI relies on the interdisciplinary convergence of multiple fields, with the core being the seamless connection of the efficient execution capabilities of robotic hardware and the intelligent decision-making capabilities of AI algorithms. The following are six key technology modules for achieving this integration:

-

Environmental Perception and Multimodal Data ProcessingService robots obtain environmental data through sensors such as LiDAR, depth cameras (e.g., Intel RealSense), and millimeter-wave radar, with typical parameters as follows:

Sensor Type Accuracy Range Sampling Frequency Applicable Scenarios 2D LiDAR ±2cm @10m 40Hz Indoor Navigation 3D Structured Light Camera 0.1-5mm error 30fps Object Recognition UWB Positioning Module 10cm-level positioning 100Hz Personnel Tracking AI achieves multimodal data fusion through convolutional neural networks (CNN) and point cloud processing algorithms (e.g., PointNet++), achieving over 93% obstacle classification accuracy in dynamic environments.

-

Autonomous Decision-Making System Architecture

- Adopting a hierarchical architecture: The lower-level real-time control layer (ROS 2 + MoveIt) processes millisecond-level motion commands.

- The middle-level task planning layer (based on Behavior Trees) implements dynamic path re-planning.

- The upper-level AI interaction layer (GPT-4 Turbo) processes intent recognition of natural language commands.

Adaptive Learning MechanismContinuously optimizing service processes through online imitation learning; for example, in hotel reception scenarios, after 200 actual dialogue interactions, the accuracy of customer demand prediction can improve from an initial 65% to 89%. Key parameters include:

- Model update cycle: Incremental training every 24 hours

- Feedback loop delay: <15 seconds

- Rollback mechanism for abnormal situations: Automatically triggers preset safety strategies

Human-Robot Collaboration InterfaceDeveloping multimodal interaction channel combinations: • Voice interaction: Supports 5-meter far-field pickup, maintaining 92% ASR accuracy even in 85dB background noise • Gesture recognition: Real-time recognition of 15 standard gestures based on the MediaPipe framework • AR interface projection: Provides operational guidance with 40 lumens brightness through a micro DLP projector

Edge-Cloud Collaborative ComputingTypical resource allocation scheme:

# Example of task allocation strategyif latency_critical(task): execute_on_edge(robot_GPU) # Jetson AGX Orin 32TOPS computing powerelse: offload_to_cloud(A100_cluster) # 200ms round-trip latency- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

The following are screenshots of the original plan, which can be accessed by joining the knowledge community for the complete document.

Welcome to join Strategy Cube knowledge community, where you can read and download all plans in the community.