This article is reprinted from the WeChat official account Goose Factory Technology.

This article is shared for academic and technical purposes only. In case of any infringement, please contact us and we will remove it.

To MCP or not to MCP? After OpenAI announced support for MCP, Google did not hesitate for long: on April 4, Gemini added MCP usage examples to its official API documentation. With that, OpenAI, Google, and Anthropic have all embraced this “USB-C for large models”.

As an attempt to standardize how large models interact with external systems, MCP is widely expected to become the “HTTP of the AI world”. Yet the AI field has never been short of supposedly “nuclear-level” technologies. Is MCP’s explosive popularity a step toward consensus, or a passing fad? For technical decision-makers, the bigger question may be whether MCP can truly make the leap from concept to production. One month into MCP’s rise, this article examines the key questions: Why has this technology set off a race among the giants? And how far is it from becoming the de facto standard for interaction in the AI era?

Chapter Overview
• How did MCP become popular?
• What is MCP, and what core contradictions does it fundamentally resolve?
• Can MCP shake or even overturn the status of Function Call?
• What similar large model protocols currently exist? How far is MCP from becoming a “de facto standard”?
• What impact does MCP have on the existing technology ecosystem?

01

How did MCP become popular?

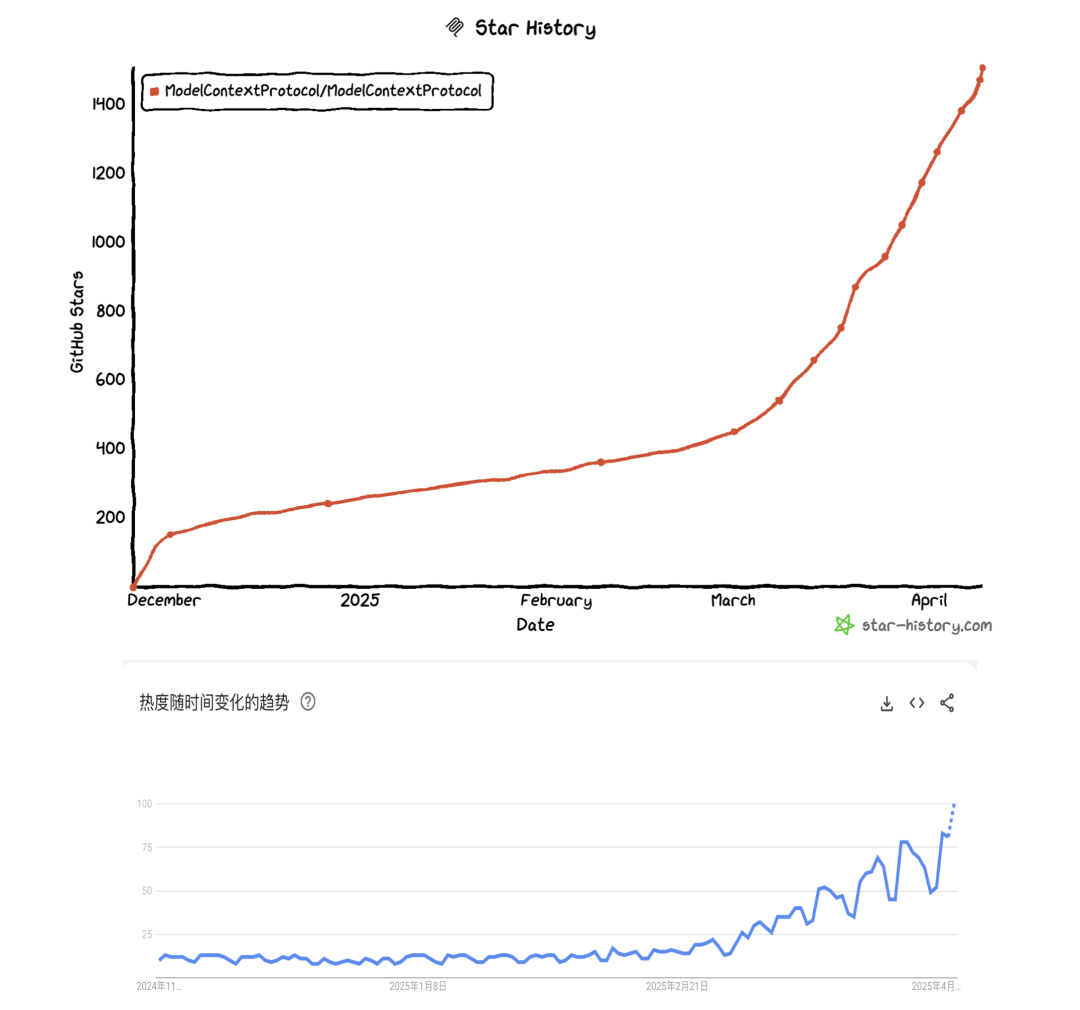

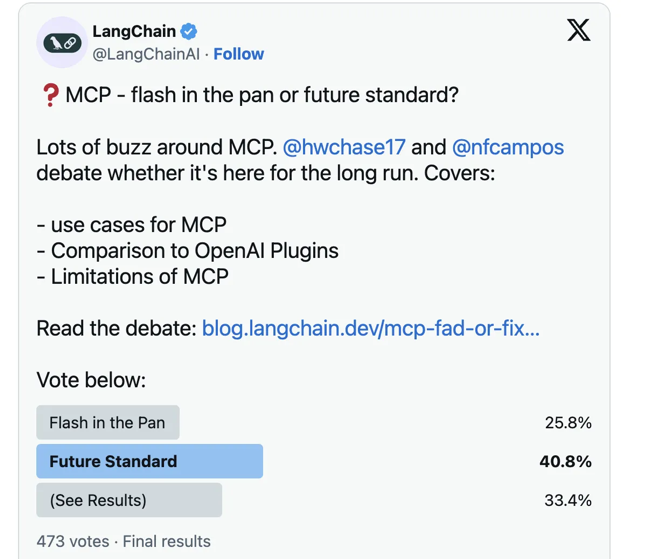

Judging by GitHub Star History and Google search trends, MCP is clearly the new global AI darling. Notably, the two metrics follow strikingly similar growth curves, suggesting that MCP is drawing attention from both insiders and the general public.

The explosive rise of MCP can be divided into three stages.

Since Anthropic released it last November, MCP has quickly attracted tech geeks and open-source developers; its core value is solving the “last mile” problem of AI tool integration. Developers built MCP Servers wrapping tools such as Slack and Notion, validating the protocol’s feasibility across many scenarios. The limitation of this stage was that most practice focused on personal productivity tools and had not yet reached complex enterprise scenarios. For example, the BlenderMCP project, which lets users drive the Blender 3D modeling tool with natural language, gained 3.8k GitHub stars in just three days, but it mainly served the independent developer community.

The first breakthrough came in early March, driven mainly by the “debate on standards” and the “Manus release.” On March 11, LangChain co-founder Harrison Chase and LangGraph lead Nuno Campos held a heated debate over whether MCP would become the de facto standard for future AI interactions. Although it reached no conclusion, it greatly stirred the imagination around MCP. At the same time, LangChain ran an online poll in which 40% of participants backed MCP as the future standard.

The next day, Manus was released. Although Manus did not directly adopt MCP, the “3-hour open-source replication” it triggered pushed more teams to recognize the value of protocol standardization. At the same time, the multi-agent collaboration Manus demonstrated matched users’ ultimate vision of AI productivity.
Today, LLMs are still used mainly through chatbots. Function Call has shown that models can connect to external data, but real-world use has always been held back by complex technical integration. When MCP delivers a “conversation as operation” experience in a chat interface, where a command typed into the input box directly triggers system-level operations such as file management and data retrieval, the realization that “AI can really get things done for me” finally takes hold. It is this disruptive experience, and the way it in turn boosted interest in MCP, that made the Manus release a key driver of MCP’s popularity.

Then came OpenAI’s official announcement, which suggested that an “HTTP for AI” could become reality. When a giant holding 40% of the global model market announced support for the protocol, MCP began to take on HTTP-like infrastructure characteristics and officially entered the public eye, and its popularity kept rising exponentially.

02

What is MCP, and what core contradictions does it fundamentally resolve?

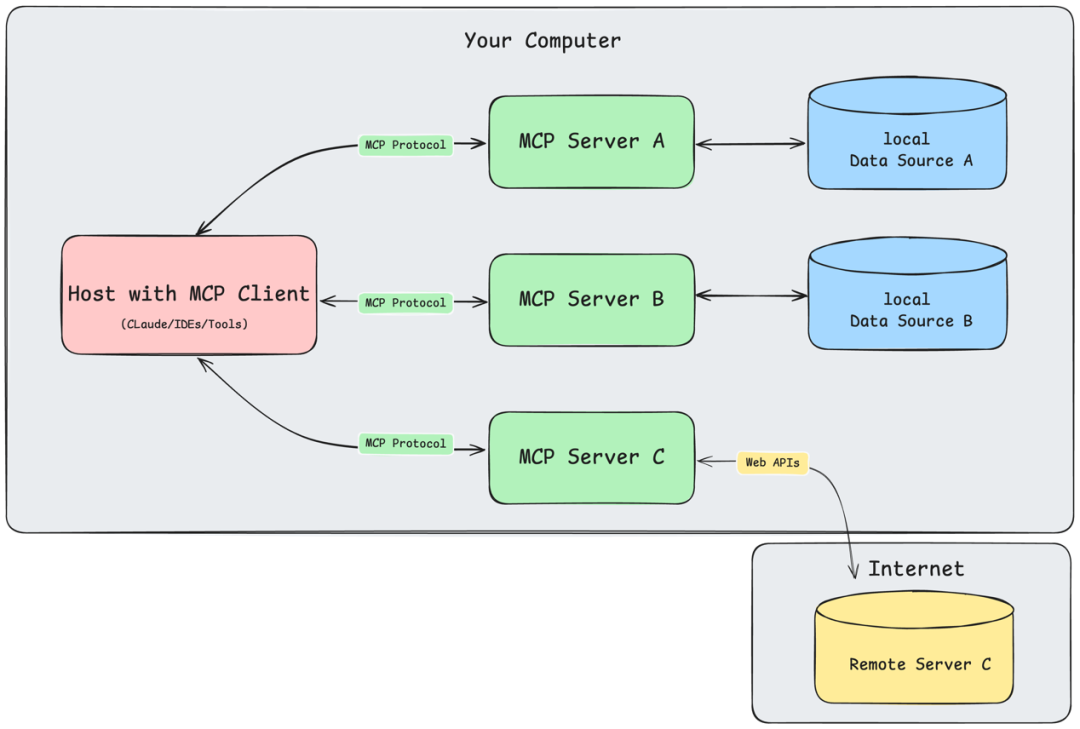

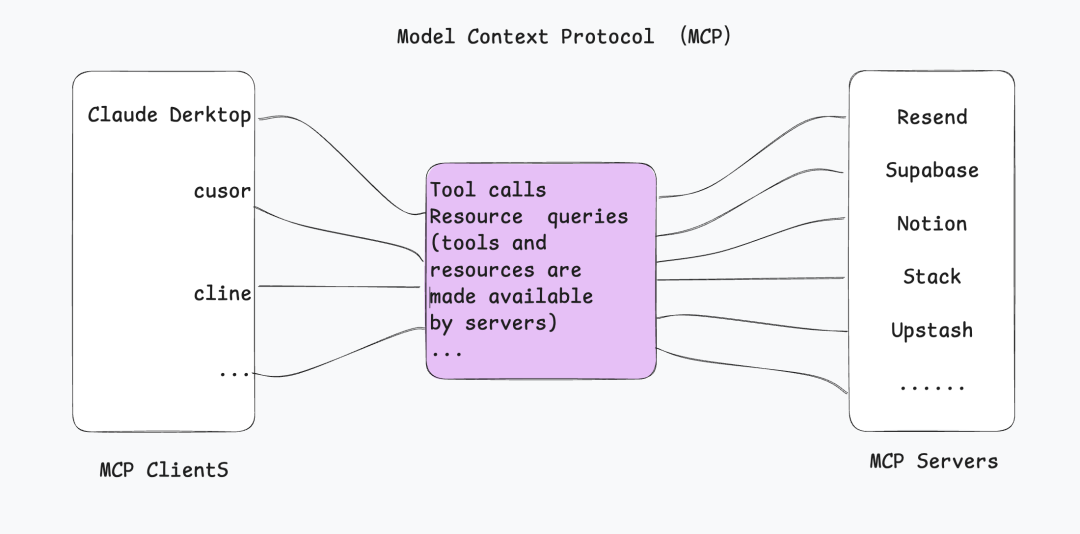

MCP abstracts the interaction between large models and external systems into a client-server architecture made up of Hosts, Clients, and Servers. Any AI application that supports MCP (an MCP Host) can be configured to use MCP Servers from an official or third-party marketplace directly, without writing adaptation code in advance, much like plugging in a USB device. When an LLM needs to perform a specific task, it can call these modules on demand and receive precise contextual support in real time, extending its capabilities elastically.

From a broader perspective, MCP is a product of the evolution of Prompt Engineering. Large models are the brains of AI applications, while prompts supply them with guidance and reference material. Using Prompt Engineering to speed up the deployment of large model applications is currently the mainstream approach. Specifically, structured prompts can provide large models with:
• Additional reference material, for example through RAG and web search, to improve the model’s answers.
• The ability to call tools, which is what enables Agents: for example, file operation tools, web scraping tools, and browser operation tools (such as the Browser Use library used by Manus).

Looking back at Function Calls or RAG, developers have to run tool retrieval manually, insert the results into the prompt by hand, and carefully design the prompt itself. Worse, different large models’ Function Calls use different calling structures and parameter formats, so they are essentially incompatible with one another.
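To make the Host/Client/Server split more concrete, here is a minimal sketch of how an MCP client discovers a server’s tools at runtime instead of having them hard-coded into a prompt. It assumes the JSON-RPC 2.0 envelope, the `tools/list` method, and the tool definition fields (`name`, `description`, `inputSchema`) described in the MCP specification; the in-process `ToyServer` class and its `read_file` tool are hypothetical stand-ins for a real server reached over stdio or HTTP, and the `initialize` handshake a real session starts with is omitted.

```python
from __future__ import annotations

import json
from typing import Any


def make_request(req_id: int, method: str, params: dict[str, Any] | None = None) -> str:
    """Serialize a JSON-RPC 2.0 request in the envelope MCP uses on the wire."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


class ToyServer:
    """Hypothetical in-process stand-in for an MCP Server (no transport, no I/O)."""

    # Tool definitions follow the MCP shape: name, description, inputSchema.
    TOOLS = [
        {
            "name": "read_file",  # hypothetical tool
            "description": "Read a UTF-8 text file and return its contents.",
            "inputSchema": {
                "type": "object",
                "properties": {"path": {"type": "string"}},
                "required": ["path"],
            },
        }
    ]

    def handle(self, raw: str) -> str:
        """Answer `tools/list`; reject any other method with a JSON-RPC error."""
        req = json.loads(raw)
        if req.get("method") == "tools/list":
            reply = {"jsonrpc": "2.0", "id": req["id"], "result": {"tools": self.TOOLS}}
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            reply = {"jsonrpc": "2.0", "id": req["id"],
                     "error": {"code": -32601, "message": "Method not found"}}
        return json.dumps(reply)


# The Host's MCP client discovers tools at runtime instead of hard-coding them.
server = ToyServer()
reply = json.loads(server.handle(make_request(1, "tools/list")))
tool_names = [tool["name"] for tool in reply["result"]["tools"]]
print(tool_names)  # ['read_file']
```

Because the tool list arrives as data, the Host can hand it to any model without anyone rewriting prompt templates when a server adds or changes a tool.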

MCP’s explosive growth stems from the fact that it addresses a core contradiction of Prompt Engineering: the gap between dynamic intent understanding and static tool invocation. In the traditional development model, Function Calls require developers to write tool invocation logic in advance, design prompt templates, and manage context manually. This process is not only inefficient but also makes AI applications hard to scale.

03

Can MCP shake or even overturn the status of Function Call?

In short, it is hard to say whether Function Call will be overturned, but MCP will certainly shake it up.

Function Calls are essentially proprietary capabilities provided by certain large models (such as GPT-4) that let the AI call external tools through structured requests (for example, querying the weather or performing a calculation). The host application receives the request, executes the operation, and returns the result. At its core, this is a capability-extension interface defined inside each model vendor, with no unified standard and a dependency on that specific vendor.

MCP’s core advantage is that it unifies the differing Function Calling conventions of various large models into one universal protocol. It supports not only Claude but also nearly every mainstream large model on the market, making it the “USB-C port” of the AI field. Built on a standardized communication specification (JSON-RPC 2.0), MCP resolves compatibility issues between models and external tools and data sources: developers implement an interface once according to the protocol, and multiple models can then call it.

Because both approaches let models interact with external data, when MCP first appeared developers often wondered: “Is it a simplified Function Call, or the HTTP standard for AI interaction?” As the ecosystem develops, however, MCP’s openness advantage over Function Calls is becoming clearer.

The “private protocol dilemma” of Function Calls resembles phone makers’ proprietary fast-charging protocols: mainstream AI vendors each define their own closed calling formats (built on JSON Schema, Protobuf, etc.), forcing developers to write adaptation logic again and again for each platform. When switching AI providers, the tool invocation layer has to be “rebuilt from scratch,” and these high cross-platform costs slow the scaling of AI capabilities.
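The duplication described above shows up even in how a single tool is declared. Below is a minimal sketch that derives two vendor-specific declarations from one MCP-style definition. It assumes the publicly documented tool shapes of OpenAI’s Chat Completions API (`type`, `function`, `parameters`) and Anthropic’s Messages API (`input_schema`), plus MCP’s `inputSchema`; the `get_weather` tool is hypothetical. In practice an MCP-capable Host or SDK performs translation of roughly this kind, so the tool author maintains only one definition.

```python
from __future__ import annotations

import copy
from typing import Any

# A single tool definition in MCP form (the `get_weather` tool is hypothetical).
MCP_TOOL: dict[str, Any] = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def to_openai_tool(tool: dict[str, Any]) -> dict[str, Any]:
    """Wrap the tool in the Chat Completions `tools` entry shape."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": copy.deepcopy(tool["inputSchema"]),
        },
    }


def to_anthropic_tool(tool: dict[str, Any]) -> dict[str, Any]:
    """Rename `inputSchema` to the Messages API's `input_schema` key."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": copy.deepcopy(tool["inputSchema"]),
    }


openai_tool = to_openai_tool(MCP_TOOL)
anthropic_tool = to_anthropic_tool(MCP_TOOL)
# Both vendor formats carry the same JSON Schema; only the envelope differs.
print(openai_tool["function"]["parameters"] == anthropic_tool["input_schema"])  # True
```

The schema is deep-copied so that a vendor-specific tweak to one declaration cannot silently change the shared MCP definition.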
By unifying communication specifications and resource definitions, MCP lets developers “develop once and use everywhere”: the same tool can plug into different models such as GPT and Claude. It is the AI world’s version of a common script and a common track gauge, ending the need to reinvent the wheel.

However, Function Call remains king for high-frequency, lightweight tasks. It acts as the model’s “personal assistant” and is also the foundation on which calls through MCP are linked together, executing calls directly (for quick calculations or simple queries) with very fast responses. MCP, by contrast, excels at outsourcing complex tasks: the model acts as a “commander” issuing requests (such as web scraping), while the MCP Server acts as a “delivery person” responding on demand, delivering results over HTTP/SSE without manual intervention from developers.

MCP is unlikely to overturn Function Calls in the short term, but it will force them to evolve. Once the tools built into models catch up with MCP in richness, will developers still need to painstakingly build dedicated Servers? Not necessarily. At the very least, though, MCP has pushed Function Calls to “step up their game” toward more standardized and convenient tool invocation.

If Function Calls are AI’s “instant little helpers,” MCP is its “on-demand delivery service,” and the better path is for the two to develop together. Function Calls reflect a “code control” mindset, in which developers finely control every tool detail; MCP shifts to an “intent-driven” model, in which developers only define capability boundaries and the large model decides the specific execution dynamically. Their coexistence lets developers keep the efficiency of high-frequency tasks while unlocking flexibility for complex scenarios.
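The “commander and delivery person” division of labor can be sketched as a single `tools/call` round trip: the model only picks a tool name and arguments, and the MCP Server does the work and returns content. This is a minimal in-process sketch, assuming the request and result shapes from the MCP specification (`params.name`, `params.arguments`, `content`, `isError`); the `add` tool and the `HANDLERS` registry are hypothetical, and the transport (stdio or HTTP/SSE) is left out. The split between a protocol error for an unknown tool and an `isError` result for a failing tool roughly mirrors the error-handling guidance in the specification.

```python
from __future__ import annotations

import json
from typing import Any, Callable

# Hypothetical registry mapping tool names to ordinary Python functions.
HANDLERS: dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
}


def handle_tools_call(raw: str) -> dict[str, Any]:
    """Execute a JSON-RPC `tools/call` request and build an MCP-style reply."""
    req = json.loads(raw)
    name = req["params"]["name"]
    args = req["params"].get("arguments", {})
    if name not in HANDLERS:
        # Unknown tool: reported as a protocol-level error (-32602, invalid params).
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    try:
        text, is_error = str(HANDLERS[name](**args)), False
    except Exception as exc:  # a failure inside the tool itself
        # Tool failures go into the result, so the model can see and react to them.
        text, is_error = f"Tool failed: {exc}", True
    result = {"content": [{"type": "text", "text": text}], "isError": is_error}
    return {"jsonrpc": "2.0", "id": req["id"], "result": result}


# What a Host might send once the model has chosen to call `add`.
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
})
response = handle_tools_call(request)
print(response["result"]["content"][0]["text"])  # 5
```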

04

What similar large model protocols exist currently?

How far is MCP from becoming a “de facto standard”?

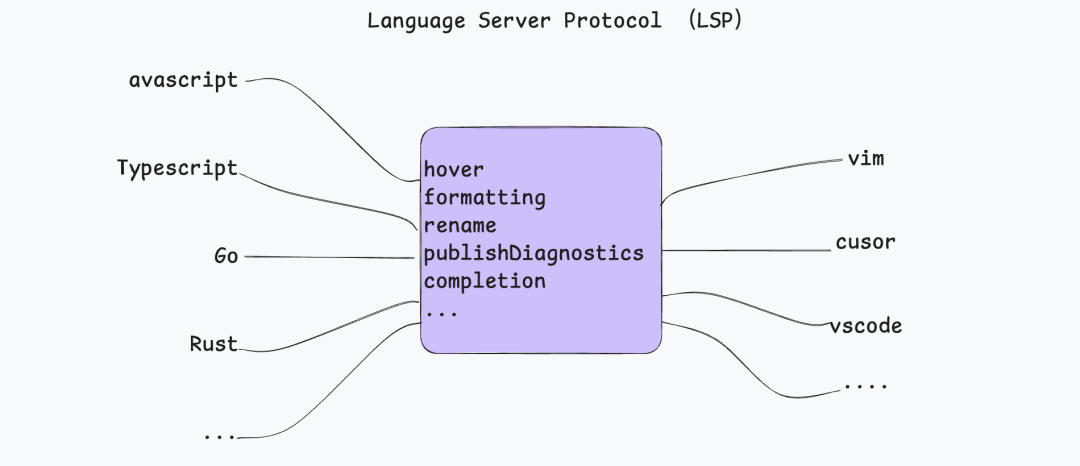

MCP is often likened to the HTTP of its era; in fact, the earlier protocol that most resembles MCP is LSP, the Language Server Protocol. Before LSP was released in 2016, the developer tools ecosystem was fragmented, with everyone going their own way. In the traditional paradigm, integrated development environments (IDEs) and mainstream code editors (such as VSCode, Sublime, and Vim) each had to reimplement core features such as syntax parsing, code completion, and debugging support for every programming language (Java, Python, C++, and so on), wasting enormous resources and fragmenting the developer experience.

LSP’s breakthrough was a standardized communication architecture that decouples the editor front end from the language back end: it defines a cross-process protocol on top of JSON-RPC, so language intelligence services can plug into any editor. Doesn’t that sound like MCP? MCP’s design draws heavily on LSP, and the two share a very similar philosophy: turning an M×N integration problem (every editor adapting to every language) into an M+N one (each editor and each language implementing the protocol once).
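The arithmetic behind the shared-protocol argument is simple but worth spelling out: without a common protocol, M clients and N back ends need M×N pairwise integrations; with one, each side implements the protocol once, for M+N. The counts below are illustrative only and do not come from the article. The same counting applies to MCP if M is read as AI applications (Hosts) and N as tools (Servers).

```python
def integrations_without_protocol(m: int, n: int) -> int:
    """Every client must be adapted to every back end separately."""
    return m * n


def integrations_with_protocol(m: int, n: int) -> int:
    """Each client and each back end implements the shared protocol once."""
    return m + n


# Illustrative numbers: 5 editors (or AI Hosts) and 20 languages (or tools).
editors, languages = 5, 20
print(integrations_without_protocol(editors, languages))  # 100
print(integrations_with_protocol(editors, languages))  # 25
```

The gap widens as either side grows, which is why a protocol pays off most in ecosystems with many clients and many back ends.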

LSP addresses the interaction between programming languages and development environments. Other protocols similar to MCP fall roughly into two camps, each representing a different technical path and each showing certain disadvantages compared with MCP.

Traditional API specifications
• OpenAPI/Swagger: a universal API description standard that requires developers to define interfaces and logic manually and lacks AI-native design.
• GraphQL: a flexible data query protocol, but it requires predefined schemas and lacks dynamic context extension.
• Enterprise proprietary protocols: such as OpenAI Plugins and the Google Vertex AI toolchain, which are highly closed, fragmented ecosystems.

AI-specific frameworks
• LangChain tool library: provides over 500 tool integrations but relies on developers to write adaptation code, leading to high maintenance costs.
• Low-code platforms such as Zapier: connect tools through visual workflows but offer limited functional depth, making complex scenarios hard to handle.

Of these, OpenAPI looks like the most likely competitor. In fact, as the de facto standard for API definitions, OpenAPI provides infrastructure for MCP rather than competing with it. As Sagar Batchu, CEO of the API tooling company Speakeasy, put it: “The leap from the OpenAPI specification to MCP is very small. The former is essentially a superset of the information MCP requires; we just need to package it with LLM-specific parameters (such as semantic descriptions and calling examples) into a real-time service.”

This design difference reveals the essential distinction between the two: OpenAPI is a static interface specification, while MCP is a dynamic execution engine. When AI agents make requests through MCP servers, the real-time interaction can adapt to changes in context, for example by automatically filling in missing parameters in API calls.
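Batchu’s point that OpenAPI already holds most of what MCP needs can be illustrated with a small converter. This is a minimal sketch, not Speakeasy’s tooling: it assumes an OpenAPI 3 operation object (`operationId`, `summary`, and `parameters` entries with `name`, `in`, `required`, `schema`) and turns it into an MCP-style tool definition with an `inputSchema`. Request bodies, `$ref` resolution, and the LLM-oriented descriptions and calling examples mentioned in the quote are left out; the `getOrder` operation is hypothetical.

```python
from __future__ import annotations

from typing import Any

# A hypothetical OpenAPI 3 operation (GET /orders/{orderId}).
OPERATION: dict[str, Any] = {
    "operationId": "getOrder",
    "summary": "Fetch an order by its ID.",
    "parameters": [
        {"name": "orderId", "in": "path", "required": True, "schema": {"type": "string"}},
        {"name": "verbose", "in": "query", "required": False, "schema": {"type": "boolean"}},
    ],
}


def openapi_operation_to_mcp_tool(op: dict[str, Any]) -> dict[str, Any]:
    """Flatten path/query parameters into one JSON Schema object for MCP."""
    properties: dict[str, Any] = {}
    required: list[str] = []
    for param in op.get("parameters", []):
        properties[param["name"]] = dict(param.get("schema", {}))
        if param.get("required", False):
            required.append(param["name"])
    return {
        "name": op["operationId"],
        # Prefer a full description; fall back to the one-line summary.
        "description": op.get("description") or op.get("summary", ""),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


tool = openapi_operation_to_mcp_tool(OPERATION)
print(tool["name"], tool["inputSchema"]["required"])  # getOrder ['orderId']
```

The mechanical part really is small; the harder work, as the quote suggests, is writing descriptions and examples that help a model choose the tool and fill its arguments correctly.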
MCP’s “living specification” quality addresses a fatal flaw of traditional integrations: models cannot understand API architecture information.

The “debate on standards” mentioned earlier explored these possibilities in depth.

The proponents argued: “The core value of MCP lies in letting users add tools to Agents they don’t control. For example, when using applications like Claude Desktop or Cursor, ordinary users cannot modify the underlying Agent’s code, but through the MCP protocol they can give it new tools.” The technical underpinnings: MCP provides a standardized tool description framework, supports guiding tool invocation through prompts, and the tool-calling capabilities of foundation models themselves keep improving.

The opponents argued: “Even in Agents optimized for specific toolsets, models achieve a tool invocation accuracy of only 50%. Forcibly injecting new tools through MCP may make the results even worse.” Real-world challenges include:
• Tool descriptions need to be tightly coupled with the Agent’s system prompt.
• MCP currently requires running services locally, which raises the barrier to use.
• The lack of server-side deployment support makes it hard to meet scaling demands.
• Security issues such as permission verification remain unresolved (MCP plans to address this in the first half of the year).

The open debate produced no answer, and neither did the poll LangChain ran on X: nearly 500 people voted, and 40% backed MCP as the future standard, short of an overwhelming win.

Batchu also weighed in on this: “I believe that for a while there will be some disputes over patterns, until a standard like OpenAPI eventually takes shape.” At the time, he had no idea that just a few days later, both OpenAI and Google would announce support for MCP.

05

What impact does MCP have on the existing technology ecosystem?

The “universal plug” advantage of MCP further decouples AI application development and sharply lowers the technical barrier, making “everyone an AI developer” a tangible possibility.

For AI vendors, the technical focus shifts from tool adaptation to protocol compatibility. MCP acts like a “universal socket” for AI: once a model vendor supports the protocol standard, it can connect to every tool in the MCP ecosystem. For example, by supporting MCP, OpenAI’s models can call thousands of tool services such as GitHub and Slack without building separate interfaces. Large model vendors can then focus on core algorithm optimization instead of repeatedly building tool adaptation layers.

For tool developers, MCP delivers “develop once, use across the ecosystem.” Once a developer packages functionality as an MCP Server, any AI application compatible with the protocol can call it. For instance, the PostgreSQL database MCP Server has been integrated into over 500 AI applications without per-model adaptation. This gives every application a fast path to AI integration: just as a decade ago “every industry deserved to be redone with the internet,” today every product deserves an MCP makeover.
(A few days ago, the total number of MCP servers was still 6,800)

For application developers, MCP breaks through the limits of technical capability and accelerates the shift in interaction paradigm from GUI (Graphical User Interface) to LUI (Language User Interface). Thanks to protocol standardization, developers can combine resources without understanding the underlying technical details: educational institutions can use natural language commands to query multilingual databases and generate customized lesson plans, while retailers can manage inventory by voice by connecting ERP systems to AI models.

MCP’s protocol compatibility lets natural language interactions map directly onto specific functions. For example, the Tencent Maps MCP Server lets users run complex searches with colloquial commands like “find nearby Sichuan restaurants averaging 200 yuan per person,” replacing the multi-level menus of traditional GUIs. The shift is especially significant in manufacturing: a factory engineer can dispatch a cluster of MCP-connected devices by voice, with responses five times faster than through traditional industrial control interfaces.

The leap in LUI development efficiency also comes from MCP’s decoupling of the interaction layer:
■ The traditional GUI dilemma: separate interface components must be built for each platform (Web/iOS/Android), and maintenance consumes 60% of development resources.
■ The MCP+LUI advantage: developers only describe the functional requirement in natural language (such as generating weekly report charts), and MCP automatically matches database queries, visualization tools, and so on, returning results through standardized protocols.

This transformation may be rebuilding the underlying logic of human-computer interaction.
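To show what “mapping natural language onto functions” means mechanically, here is a sketch of the structured call a model might emit for the restaurant query above, checked against a tool’s input schema before the Host forwards it. The `search_places`-style schema, its parameter names, and the argument values are all hypothetical; they are not the actual interface of the Tencent Maps MCP Server. The checker covers only required fields and basic JSON types, whereas a production Host would typically rely on a full JSON Schema validator.

```python
from __future__ import annotations

from typing import Any

# Hypothetical input schema for a place-search tool (not Tencent Maps' real API).
SEARCH_SCHEMA: dict[str, Any] = {
    "type": "object",
    "properties": {
        "keyword": {"type": "string"},
        "near": {"type": "string"},
        "avg_price_cny": {"type": "number"},
    },
    "required": ["keyword", "near"],
}

# JSON Schema type names mapped to the Python types that json.loads produces.
JSON_TYPES: dict[str, Any] = {"string": str, "number": (int, float), "boolean": bool}


def check_arguments(args: dict[str, Any], schema: dict[str, Any]) -> list[str]:
    """Return problems found in `args`; an empty list means the call looks valid."""
    problems = [f"missing '{key}'" for key in schema["required"] if key not in args]
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected '{key}'")
            continue
        # bool is a subclass of int in Python, so reject it unless a boolean is expected.
        wrong_bool = isinstance(value, bool) and spec["type"] != "boolean"
        if wrong_bool or not isinstance(value, JSON_TYPES[spec["type"]]):
            problems.append(f"'{key}' should be {spec['type']}")
    return problems


# Arguments a model might emit for the restaurant request quoted in the text.
call_args = {"keyword": "Sichuan restaurant", "near": "current_location", "avg_price_cny": 200}
print(check_arguments(call_args, SEARCH_SCHEMA))  # []
print(check_arguments({"keyword": "Sichuan restaurant"}, SEARCH_SCHEMA))  # ["missing 'near'"]
```

Validating before dispatch lets the Host send a precise error back to the model, which can then ask the user for the missing detail instead of failing silently.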
Just as the iPhone replaced keyboards with touchscreens, MCP, through a unified function-calling standard, makes natural language the “ultimate interface” between user intent and system capabilities.

The rise of MCP marks a new phase of ecosystem competition in AI development. Just as HTTP laid the foundation of the internet, MCP is building the “digital nervous system” of the intelligent era. Its value may lie not only in the technical specification itself but also in pioneering a new paradigm of open collaboration, one in which models, tools, and data flow freely under a unified protocol. Whether MCP will dominate remains to be seen, but it clearly brings us one step closer to AGI.

END
