Will agents become the key to unlocking AGI? The Fudan NLP team comprehensively explores LLM-based Agents.

Recently, the Fudan University Natural Language Processing team (FudanNLP) released a survey paper on LLM-based Agents, spanning 86 pages and citing over 600 references! Starting from the history of AI Agents, the authors give a comprehensive overview of the current state of intelligent agents based on large language models, covering their background, composition, application scenarios, and the increasingly important agent society. The authors also discuss forward-looking open issues related to Agents, which are valuable for the future development of related fields.

The team members will also add a “one-sentence summary” for each related paper, and you are welcome to star the repository.

For a long time, researchers have been pursuing artificial general intelligence (AGI) that matches or even surpasses human levels. As early as the 1950s, Alan Turing extended the concept of “intelligence” to artificial entities and proposed the famous Turing test. These artificial intelligence entities are commonly referred to as agents (Agent*). The concept of “agent” originates from philosophy, where it describes an entity that possesses desires, beliefs, intentions, and the ability to take action. In the field of artificial intelligence, the term has been given a new meaning: intelligent entities characterized by autonomy, reactivity, proactivity, and social abilities.

*There is no consensus on the Chinese translation of the term Agent; some scholars translate it as intelligent entity, behavioral entity, agent, or intelligent agent. In this article, “agent” and “intelligent agent” both refer to Agent.

Since then, the design of agents has become a focal point in the artificial intelligence community. However, past work has primarily focused on enhancing specific capabilities of agents, such as symbolic reasoning or mastery of specific tasks (e.g., chess, Go). These studies have placed greater emphasis on algorithm design and training strategies, while neglecting the development of the model’s inherent general capabilities, such as knowledge memory, long-term planning, effective generalization, and efficient interaction. It has been shown that enhancing the inherent capabilities of models is a key factor in advancing intelligent agents.

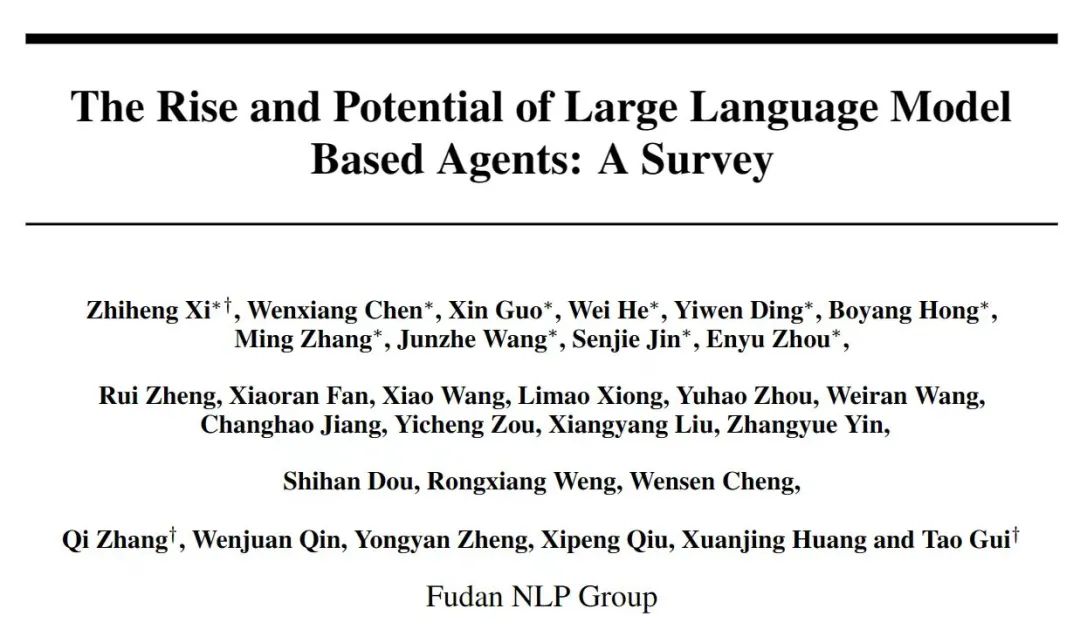

The emergence of large language models (LLMs) has brought hope for the further development of intelligent agents. If we divide the development path from NLP to AGI into five levels: corpus, internet, perception, embodiment, and social attributes, the current large language models have reached the second level, with text input and output at the scale of the internet. On this basis, if LLM-based Agents are given perceptual and action spaces, they will reach the third and fourth levels. Furthermore, multiple agents solving more complex tasks through interaction and cooperation, or reflecting social behaviors in the real world, have the potential to reach the fifth level – the agent society.

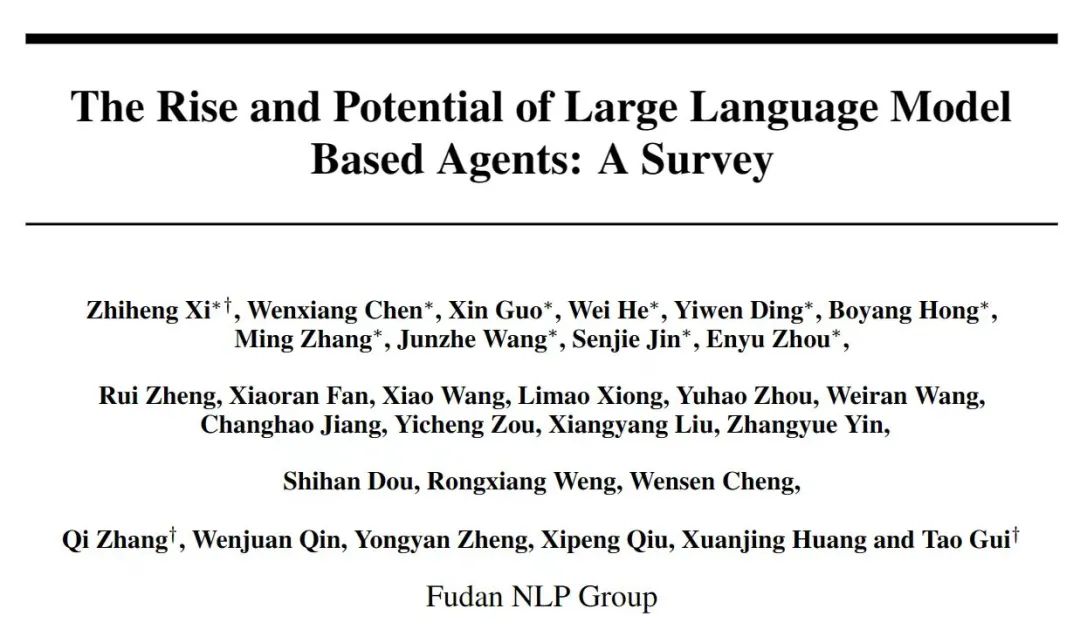

A harmonious society composed of intelligent agents envisioned by the authors, in which humans can also participate. The scene is inspired by the Lantern Festival in “Genshin Impact”.

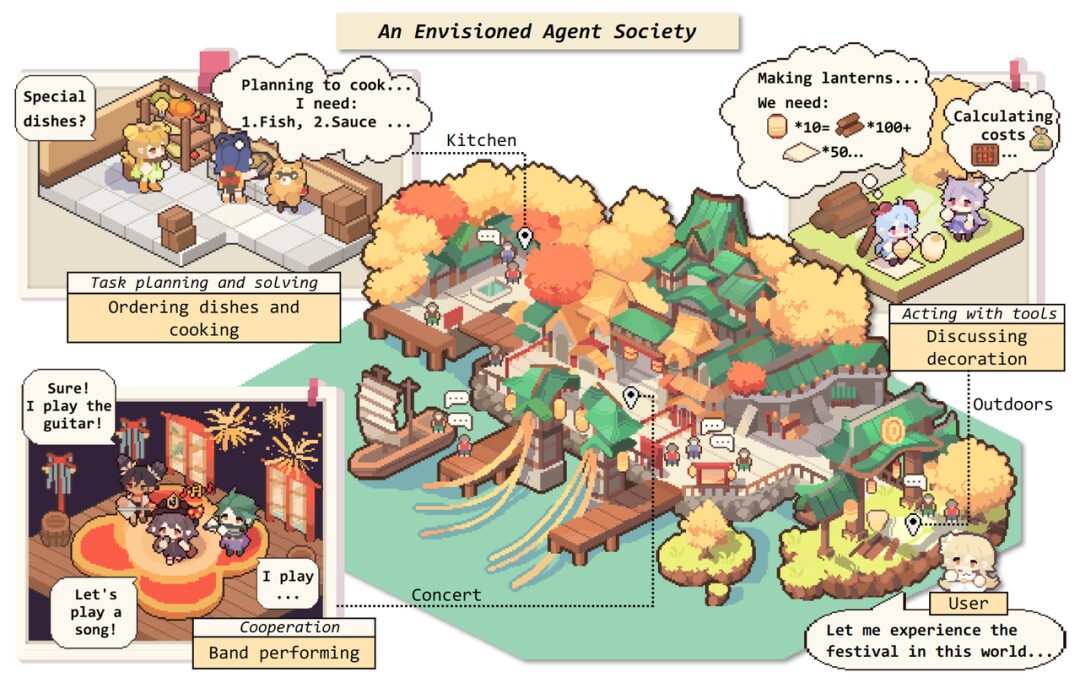

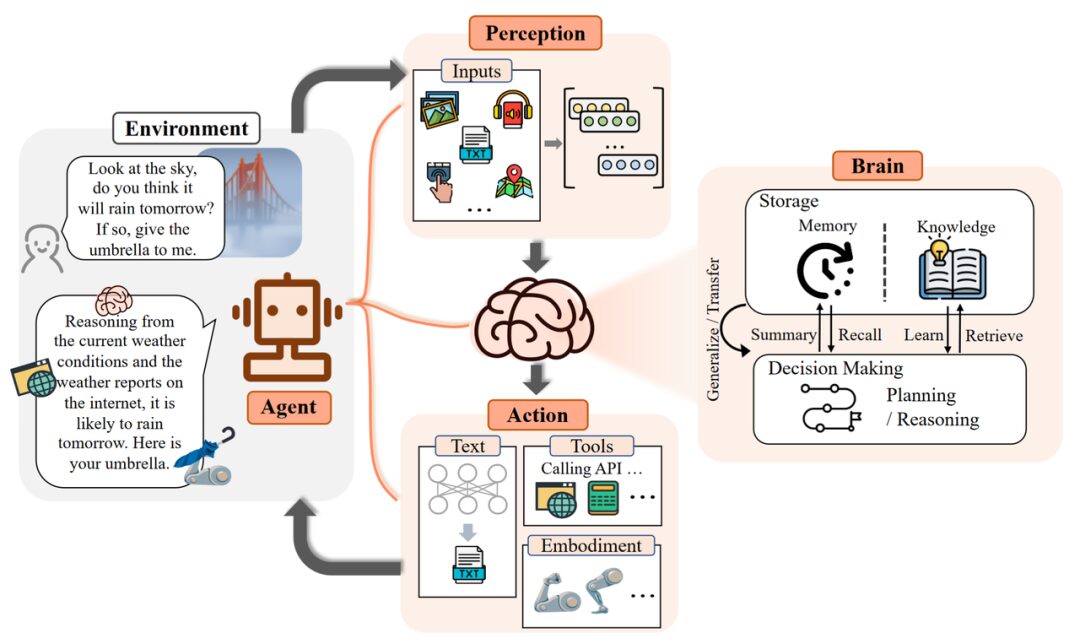

What will an intelligent agent powered by a large model look like? Inspired by Darwin’s “survival of the fittest” principle, the authors propose a general framework for intelligent agents based on large models. If a person wants to survive in society, they must learn to adapt to their environment, which requires cognitive abilities and the ability to perceive and respond to external changes. Similarly, the framework of intelligent agents consists of three parts: Control (Brain), Perception, and Action.

- Control: Typically composed of LLMs, it is the core of the intelligent agent. It can not only store memories and knowledge but also undertake essential functions such as information processing and decision-making. It can present the processes of reasoning and planning and respond well to unknown tasks, reflecting the generalization and transferability of intelligent agents.

- Perception: Expands the perceptual space of intelligent agents from pure text to include multimodal fields such as text, vision, and hearing, allowing agents to more effectively acquire and utilize information from their surroundings.

- Action: In addition to conventional text output, it endows agents with embodied capabilities and the ability to use tools, enabling them to better adapt to environmental changes, interact with the environment through feedback, and even shape the environment.

The conceptual framework of LLM-based Agents includes three components: Control (Brain), Perception, and Action.

The authors illustrate the workflow of LLM-based Agents with an example: When a human asks whether it will rain, the perception component converts the instruction into a representation that LLMs can understand. Then the control component begins reasoning and planning actions based on the current weather and weather forecasts available online. Finally, the action component responds and hands an umbrella to the human.

By repeating the above process, intelligent agents can continuously obtain feedback and interact with the environment.
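As an illustration only (this code is not from the survey), the rain example can be sketched as one pass through perception, control, and action. The function names `perceive`, `think`, and `act`, the `forecast` dictionary, and the keyword matching are all assumptions made for this sketch; in a real agent the control step would call an LLM and the forecast would come from an online source.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Structured form of a raw input, produced by the perception step."""
    intent: str
    text: str


def perceive(raw_text: str) -> Observation:
    # Perception: turn raw input into a representation the brain can use.
    # Keyword matching is a stand-in for a real encoder or LLM.
    intent = "ask_rain" if "rain" in raw_text.lower() else "unknown"
    return Observation(intent=intent, text=raw_text)


def think(obs: Observation, forecast: dict, memory: list) -> dict:
    # Control (brain): store the observation, then reason over it together
    # with external knowledge. `forecast` is hypothetical weather data.
    memory.append(obs.text)
    if obs.intent == "ask_rain":
        will_rain = forecast.get("rain_probability", 0.0) >= 0.5
        reply = "Yes, it will likely rain." if will_rain else "No rain expected."
        return {"reply": reply, "hand_umbrella": will_rain}
    return {"reply": "Sorry, I did not understand.", "hand_umbrella": False}


def act(decision: dict) -> list:
    # Action: text output plus an optional (here simulated) physical action.
    actions = [("say", decision["reply"])]
    if decision["hand_umbrella"]:
        actions.append(("hand_over", "umbrella"))
    return actions


def agent_step(raw_text: str, forecast: dict, memory: list) -> list:
    """One pass through perception -> control -> action."""
    return act(think(perceive(raw_text), forecast, memory))


memory = []
actions = agent_step("Will it rain today?", {"rain_probability": 0.8}, memory)
print(actions)  # [('say', 'Yes, it will likely rain.'), ('hand_over', 'umbrella')]
```

Calling `agent_step` repeatedly with the same `memory` list mirrors the feedback loop described above: each pass adds to what the agent has observed.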

As the core component of intelligent agents, the control (brain) module is introduced by the authors in terms of five capabilities:

Natural Language Interaction: Language is the medium of communication, containing rich information. Thanks to the powerful natural language generation and understanding capabilities of LLMs, intelligent agents can engage in multi-turn interactions with the external world through natural language to achieve their goals. Specifically, this can be divided into two aspects:

- High-Quality Text Generation: A large number of evaluation experiments have shown that LLMs can generate fluent, diverse, novel, and controllable text. Although performance may be lacking in certain languages, they generally possess good multilingual capabilities.

- Understanding Implications: Beyond the content explicitly presented, language may also convey the speaker’s intentions, preferences, and other information. Understanding implications helps agents communicate and cooperate more efficiently, and large models have already shown potential in this area.

Knowledge: LLMs trained on vast corpora can store massive amounts of knowledge. In addition to linguistic knowledge, commonsense knowledge and domain-specific skills are important components of LLM-based Agents.

Although LLMs themselves still face issues such as knowledge obsolescence and hallucination, some existing research can alleviate these issues to some extent through knowledge editing or external knowledge base calls.

Memory: In this framework, the memory module stores the agent’s past observations, thoughts, and action sequences. Through specific memory mechanisms, agents can effectively reflect on and apply previous strategies, drawing on past experiences to adapt to unfamiliar environments.

Three common methods to enhance memory capabilities include:

- Extending the length limit of the backbone architecture: Improvements that address the inherent sequence length limitations of Transformers.

- Summarizing Memory: Summarizing memory to enhance the agent’s ability to extract key details from memory.

- Compressing Memory: Using vectors or appropriate data structures to compress memory can improve memory retrieval efficiency.

Additionally, the method of memory retrieval is crucial; only by retrieving suitable content can agents access the most relevant and accurate information.
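A minimal sketch of vector-based memory retrieval, assuming a toy bag-of-words "embedding" and cosine similarity. The `MemoryStore` class and its methods are hypothetical names for illustration, not an API from the survey; a practical system would more likely use a learned text encoder and a vector index.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": lowercase word counts. A stand-in for a learned encoder.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    def __init__(self):
        self.items = []  # list of (text, vector) pairs

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 1) -> list:
        # Rank stored memories by similarity to the query and keep the top k.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = MemoryStore()
store.add("Observed: the kitchen door is locked")
store.add("Thought: the key is probably in the drawer")
store.add("Action: picked up a bowl from the table")
print(store.retrieve("where is the key", k=1))
# ['Thought: the key is probably in the drawer']
```

Only the retrieved entries would then be placed in the model's context, which is why the quality of the retrieval step matters as much as what is stored.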

Reasoning & Planning: Reasoning ability is critical for intelligent agents to perform decision-making and analysis in complex tasks. Specifically for LLMs, this involves a series of prompting methods represented by Chain-of-Thought (CoT). Planning is a commonly used strategy when facing large challenges, helping agents organize thoughts, set goals, and determine the steps to achieve those goals. In practical implementation, planning can include two steps:

- Plan Formulation: Agents decompose complex tasks into more manageable sub-tasks. For example: decompose and execute sequentially, plan and execute step by step, or plan multiple paths and select the optimal one. In scenarios requiring specialized knowledge, agents can integrate with specific domain Planner modules to enhance their capabilities.

- Plan Reflection: After formulating a plan, it can be reflected upon and evaluated for strengths and weaknesses. This reflection generally derives from three aspects: leveraging internal feedback mechanisms; interacting with humans for feedback; and obtaining feedback from the environment.
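The two planning steps can be illustrated with a small sketch of the "decompose and execute sequentially" variant, with reflection driven by environment feedback. Everything here is an assumption for illustration: the lookup tables stand in for LLM calls, and `formulate_plan`, `execute`, `reflect`, and `run` are hypothetical names.

```python
def formulate_plan(task: str) -> list:
    # Plan formulation: stand-in for an LLM call that decomposes a task.
    known_plans = {
        "make tea": ["boil water", "add tea leaves", "pour water", "serve"],
    }
    return list(known_plans.get(task, [task]))


def execute(step: str, world: dict) -> bool:
    # Environment feedback: report whether the step succeeded.
    if step == "fill kettle":
        world["kettle_filled"] = True
        return True
    if step == "boil water":
        return world.get("kettle_filled", False)
    return True


def reflect(failed_step: str) -> list:
    # Plan reflection: propose repair steps for a failed step.
    repairs = {"boil water": ["fill kettle"]}
    return list(repairs.get(failed_step, []))


def run(task: str, world: dict, max_repairs: int = 3) -> list:
    plan = formulate_plan(task)
    done, repairs_used = [], 0
    while plan:
        step = plan.pop(0)
        if execute(step, world):
            done.append(step)
            continue
        fixes = reflect(step)
        if not fixes or repairs_used >= max_repairs:
            raise RuntimeError(f"cannot complete step: {step}")
        repairs_used += 1
        plan = fixes + [step] + plan  # apply the fix, then retry the step
    return done


print(run("make tea", {}))
# ['fill kettle', 'boil water', 'add tea leaves', 'pour water', 'serve']
```

The `max_repairs` cap is a design choice of this sketch: it keeps a plan that cannot be repaired from looping forever.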

Transferability & Generalization: LLMs with world knowledge endow intelligent agents with strong transfer and generalization capabilities. A good agent is not a static knowledge base but should also possess dynamic learning abilities:

- Generalization to Unknown Tasks: As model scale and training data increase, LLMs have exhibited astonishing capabilities in solving unknown tasks. Large models fine-tuned with instructions perform well in zero-shot tests, achieving results comparable to expert models in many tasks.

- In-context Learning: Large models can not only learn by analogy from a few examples in context, but this capability can also be extended to multimodal scenarios beyond text, providing more possibilities for agent applications in the real world.

- Continual Learning: The main challenge of continual learning is catastrophic forgetting, where models tend to lose knowledge from previous tasks when learning new ones. Domain-specific intelligent agents should strive to avoid losing general domain knowledge.

Humans perceive the world through multimodal means, so researchers have similar expectations for LLM-based Agents. Multimodal perception deepens agents’ understanding of the working environment and significantly enhances their generality.

Text Input: As the most fundamental capability of LLMs, this will not be elaborated further.

Visual Input: LLMs themselves do not possess visual perception capabilities and can only understand discrete text content. Visual input typically contains a wealth of information about the world, including object properties, spatial relationships, scene layouts, etc. Common methods include:

- Converting visual input into corresponding text descriptions (Image Captioning): This can be directly understood by LLMs and is highly interpretable.

- Encoding representations of visual information: Composing the perception module with visual foundational models + LLMs, allowing the model to understand content from different modalities through alignment operations, which can be trained end-to-end.

Auditory Input: Hearing is also an important component of human perception. Given the excellent tool-calling capabilities of LLMs, an intuitive idea is for agents to use LLMs as a control hub that cascades existing toolsets or expert models to perceive audio information. Audio can also be represented visually as a spectrogram, which shows how the frequency content of a signal changes over time as a 2D image, allowing some visual processing methods to be transferred to the speech domain.

Other Inputs: Information in the real world goes far beyond text, vision, and hearing. The authors hope that in the future, intelligent agents will be equipped with richer perceptual modules, such as touch and smell, to acquire more attributes of target objects. Agents should also clearly perceive the temperature, humidity, and light levels of their surroundings so that they can take more environment-aware actions.

Furthermore, perception of the broader overall environment can be introduced for agents: using lidar, GPS, inertial measurement units, and other mature perception modules.

After the brain makes analyses and decisions, agents still need to act to adapt to or change their environment:

Text Output: As the most fundamental capability of LLMs, this will not be elaborated further.

Tool Usage: Although LLMs possess excellent knowledge reserves and professional capabilities, they may encounter robustness issues, hallucinations, and other challenges when facing specific problems. Meanwhile, tools can extend the capabilities of users, providing assistance in professionalism, factuality, interpretability, etc. For example, using calculators to solve mathematical problems or search engines to find real-time information.

Additionally, tools can expand the action space of intelligent agents. For instance, by calling expert models for speech generation, image generation, etc., to obtain multimodal action methods. Therefore, how to enable agents to become proficient tool users, i.e., learning how to effectively utilize tools, is a very important and promising direction.

Currently, the main methods for tool learning include learning from demonstrations and learning from feedback. Furthermore, meta-learning, curriculum learning, and other approaches can enable agent programs to generalize across various tools. Even further, intelligent agents can learn how to “self-sufficiently” create tools, enhancing their autonomy and independence.
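The calculator example can be sketched as a small tool registry. This is an illustrative assumption, not a method from the survey: the request format (`{"tool": ..., "input": ...}`), the `TOOLS` registry, and `call_tool` are hypothetical. The calculator walks the parsed expression with Python's standard `ast` module rather than calling `eval()`, so only basic arithmetic is accepted.

```python
import ast
import operator

# Supported binary operators for the calculator tool.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Evaluate +, -, *, / arithmetic without using eval()."""
    def _eval(node):
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("unsupported expression")
    return _eval(ast.parse(expression, mode="eval").body)


# Registry mapping tool names to callables; more tools could be added here.
TOOLS = {"calculator": calculator}


def call_tool(request: dict):
    # `request` mimics a structured tool call emitted by the model.
    tool = TOOLS.get(request["tool"])
    if tool is None:
        return f"error: unknown tool {request['tool']!r}"
    return tool(request["input"])


print(call_tool({"tool": "calculator", "input": "12 * (3 + 4)"}))  # 84
```

Returning an error string for an unknown tool, instead of raising, lets the result be fed back to the model as feedback, which is one way "learning from feedback" could be wired in.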

Embodied Action: Embodiment refers to the ability of agents to understand and modify the environment and to update their own states during interactions with it. Embodied Action is seen as a bridge between virtual intelligence and physical reality.

Traditional reinforcement learning-based agents have limitations in sample efficiency, generalization, and complex problem reasoning. However, LLM-based Agents, by introducing the rich internal knowledge of large models, enable Embodied Agents to perceive and influence the physical environment proactively, much like humans. Depending on the level of autonomy of agents in tasks or the complexity of actions, there are the following atomic actions:

- Observation helps intelligent agents locate their position in the environment, perceive objects, and obtain other environmental information;

- Manipulation involves completing specific tasks such as grabbing and pushing;

- Navigation requires intelligent agents to change their position based on task objectives and update their states according to environmental information.

By combining these atomic actions, agents can complete more complex tasks. For example, a task like “Is the watermelon in the kitchen bigger than the bowl?” requires the agent to navigate to the kitchen and observe the sizes of both to arrive at an answer.
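The watermelon question can be sketched as a composition of two atomic actions. The toy `World` class, the object sizes, and the function names are assumptions for illustration; a real embodied agent would obtain sizes from its perception module rather than a dictionary.

```python
from dataclasses import dataclass


@dataclass
class World:
    # Hypothetical toy world: each room maps object names to sizes in cm.
    rooms: dict
    agent_room: str = "hall"


def navigate(world: World, room: str) -> None:
    # Navigation: change the agent's position and update its state.
    if room not in world.rooms:
        raise ValueError(f"unknown room: {room}")
    world.agent_room = room


def observe(world: World, obj: str) -> float:
    # Observation: only objects in the agent's current room are visible.
    visible = world.rooms[world.agent_room]
    if obj not in visible:
        raise LookupError(f"{obj} is not visible from {world.agent_room}")
    return visible[obj]


def is_bigger(world: World, room: str, a: str, b: str) -> bool:
    # Composite task: navigate first, then compare two observations.
    navigate(world, room)
    return observe(world, a) > observe(world, b)


world = World(rooms={"hall": {}, "kitchen": {"watermelon": 25.0, "bowl": 15.0}})
print(is_bigger(world, "kitchen", "watermelon", "bowl"))  # True
```

Because `observe` fails for objects outside the current room, the answer cannot be produced without the navigation step, which is the point of the example.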

Due to the high cost of physical world hardware and the lack of embodied datasets, research on embodied actions is still primarily focused on virtual sandbox environments such as the game “Minecraft.” Therefore, on one hand, the authors hope for a more realistic task paradigm and evaluation standards; on the other hand, more exploration is needed in efficiently constructing relevant datasets.

Agents in Practice: Diverse Application Scenarios

Currently, LLM-based Agents have demonstrated remarkable diversity and strong performance. Well-known applications such as AutoGPT, MetaGPT, CAMEL, and GPT Engineer are flourishing at an unprecedented pace.

Before introducing specific applications, the authors discuss the design principles of Agents in Practice:

1. Help users free themselves from daily tasks and repetitive labor, reduce human work pressure, and improve task-solving efficiency;

2. No longer requiring users to provide explicit low-level instructions, agents can autonomously analyze, plan, and solve problems;

3. After freeing users’ hands, try to free their minds: fully harness agents’ potential in cutting-edge scientific fields to complete innovative and exploratory work.

Based on this, the applications of agents can take three paradigms:

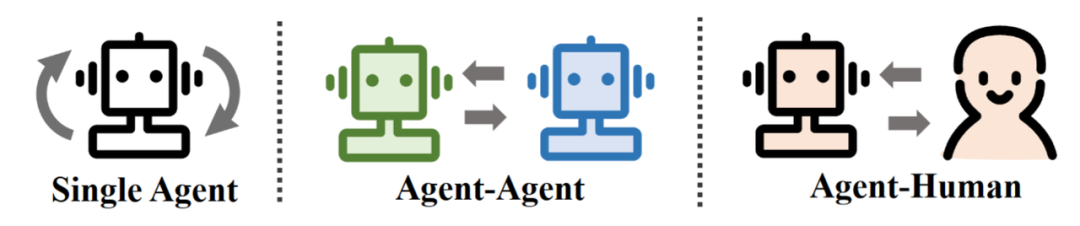

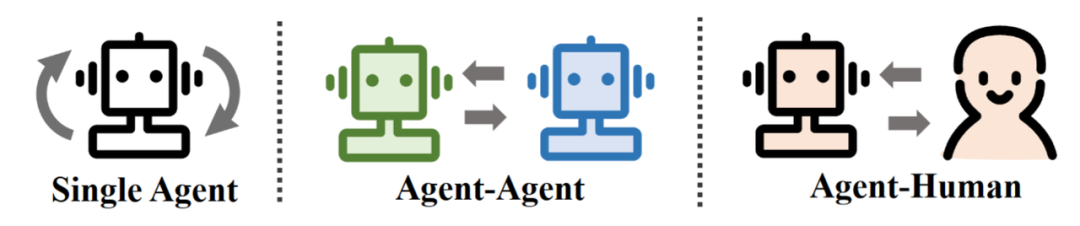

Three application paradigms of LLM-based Agents: Single Agent, Multi-Agent, Human-Agent Interaction.

Intelligent agents that can accept natural language commands from humans and perform daily tasks are currently highly favored by users and have significant practical value. The authors first elaborate on the diverse application scenarios and corresponding capabilities of single intelligent agents.

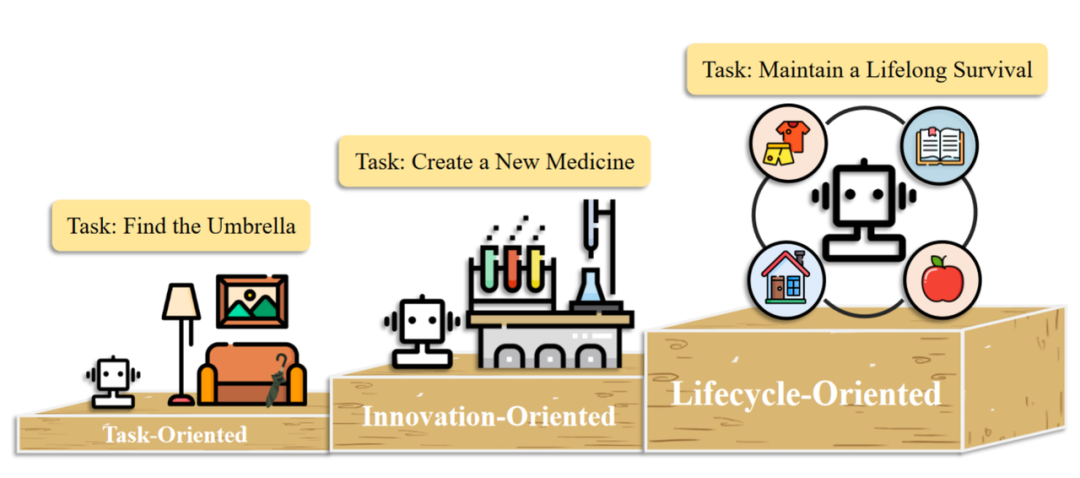

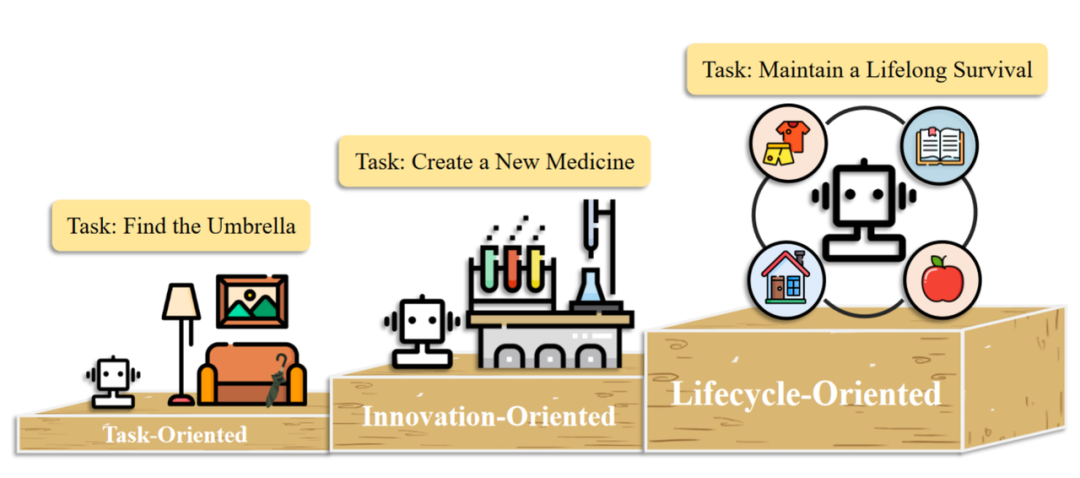

In this article, the applications of single intelligent agents are divided into the following three levels:

Three levels of single agent application scenarios: Task-oriented, Innovation-oriented, Lifecycle-oriented.

- In Task-oriented deployments, agents assist human users in handling basic daily tasks. They need to possess basic capabilities in instruction understanding, task decomposition, and interaction with the environment. Specifically, based on existing task types, the actual applications of agents can be further divided into simulating online environments and simulating life scenarios.

- In Innovation-oriented deployments, agents can demonstrate the potential for autonomous exploration in cutting-edge scientific fields. Although inherent complexities from specialized fields and the lack of training data pose challenges to the construction of intelligent agents, significant progress has been made in fields such as chemistry, materials, and computer science.

- In Lifecycle-oriented deployments, agents possess the ability to continuously explore, learn, and use new skills in an open world, ensuring long-term survival. In this section, the authors use the game “Minecraft” as an example. The survival challenges in the game can be considered a microcosm of the real world, and many researchers have used it as a unique platform to develop and test agents’ comprehensive abilities.

As early as 1986, Marvin Minsky made a prescient prediction. In his book “The Society of Mind,” he proposed a novel theory of intelligence, suggesting that intelligence arises from the interactions of many smaller, functionally specific agents. For instance, some agents may be responsible for pattern recognition, while others may be responsible for decision-making or solution generation.

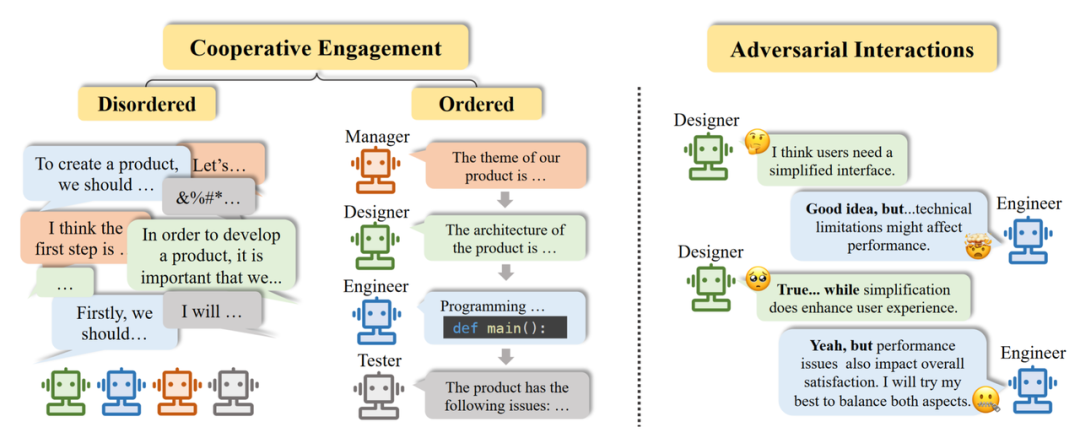

This idea has been concretely practiced with the rise of distributed artificial intelligence. Multi-Agent Systems, as one of the main research issues, primarily focus on how agents can effectively coordinate and collaborate to solve problems. The authors categorize interactions between multi-agents into the following two forms:

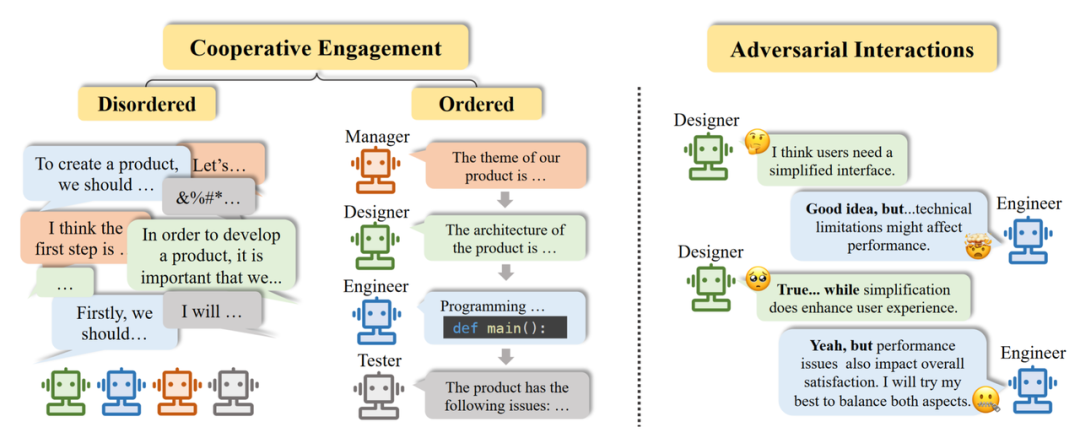

Two interaction forms in multi-agent application scenarios: Cooperative Interaction and Adversarial Interaction.

Cooperative Interaction: As the most widely deployed type in practical applications, cooperative agent systems can effectively improve task efficiency and jointly enhance decision-making. Specifically, based on different forms of cooperation, the authors further categorize cooperative interactions into unordered and ordered cooperation.

- When all agents freely express their opinions and cooperate in a disordered manner, it is referred to as unordered cooperation.

- When all agents follow certain rules, for example, expressing their opinions one by one in a pipeline manner, the entire cooperation process is orderly, referred to as ordered cooperation.
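The difference between the two forms of cooperation can be sketched with agents modeled as plain functions from text to text. This is a simplified illustration under stated assumptions: the majority vote used to settle unordered cooperation is one possible aggregation rule chosen for brevity, and the lambda "agents" stand in for LLM-backed ones.

```python
from collections import Counter


def ordered_cooperation(agents: list, task: str) -> str:
    # Pipeline: each agent receives the previous agent's output in turn.
    result = task
    for agent in agents:
        result = agent(result)
    return result


def unordered_cooperation(agents: list, task: str) -> str:
    # Free discussion: every agent answers independently; a majority vote
    # (an assumption of this sketch) then picks the shared answer.
    votes = Counter(agent(task) for agent in agents)
    return votes.most_common(1)[0][0]


# Hypothetical role agents for the ordered case.
planner = lambda text: f"plan({text})"
coder = lambda text: f"code({text})"
reviewer = lambda text: f"review({text})"
print(ordered_cooperation([planner, coder, reviewer], "build a parser"))
# review(code(plan(build a parser)))

# Hypothetical answering agents for the unordered case.
solvers = [lambda q: "4", lambda q: "4", lambda q: "5"]
print(unordered_cooperation(solvers, "2 + 2 = ?"))  # 4
```

In the ordered case the order of the list changes the outcome, while in the unordered case any permutation of `solvers` gives the same vote counts.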

Adversarial Interaction: Intelligent agents interact in a tit-for-tat manner. Through competition, negotiation, and debate, agents discard previously erroneous beliefs, meaningfully reflect on their actions or reasoning processes, ultimately improving the quality of responses from the entire system.

Human-Agent Interaction Scenarios

Human-Agent Interaction, as the name suggests, involves intelligent agents collaborating with humans to complete tasks. On one hand, the dynamic learning capabilities of agents require communication and interaction for support; on the other hand, current agent systems still exhibit insufficient performance in interpretability, which may pose issues related to safety and legality, necessitating human participation for regulation and supervision.

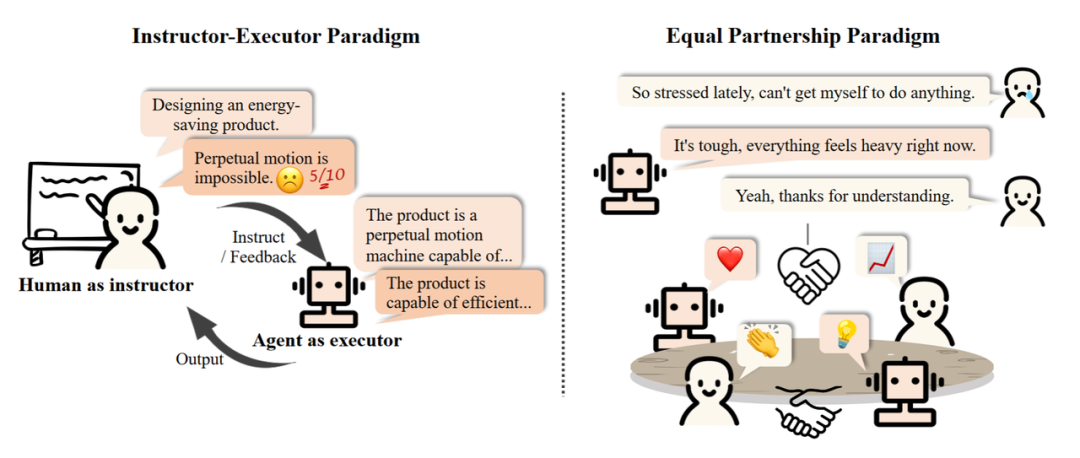

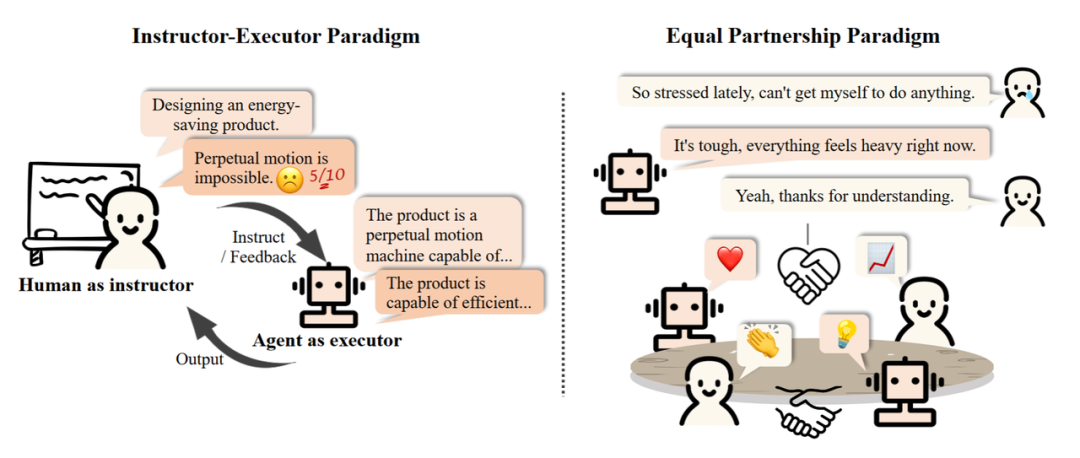

The authors categorize Human-Agent interactions into the following two modes:

Two modes of human-agent interaction scenarios: Instructor-Executor Mode vs. Equal Partnership Mode.

- Instructor-Executor Mode: In this mode, humans act as instructors, providing instructions and feedback, while agents act as executors, gradually adjusting and optimizing according to the instructions. This mode has been widely applied in fields such as education, healthcare, and business.

- Equal Partnership Mode: Some studies have observed that agents can demonstrate empathetic abilities in interactions with humans or participate in task execution as equals. Intelligent agents exhibit potential for applications in daily life, with prospects for integration into human society in the future.

Agent Society: From Individuality to Sociality

For a long time, researchers have envisioned constructing “interactive artificial societies,” from sandbox games like “The Sims” to the “metaverse.” The definition of simulated societies can be summarized as: environment + individuals surviving and interacting within that environment.

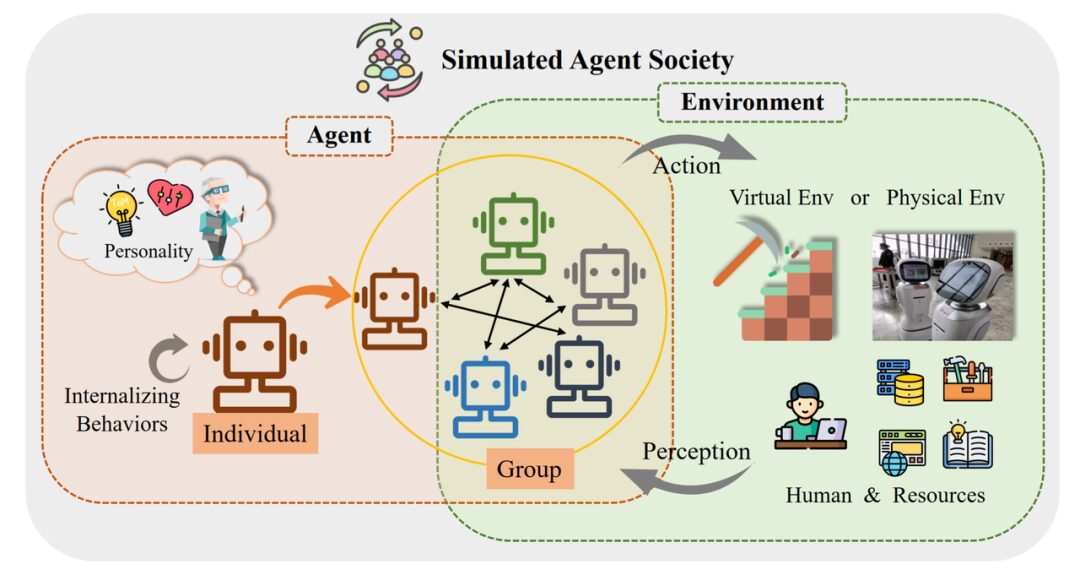

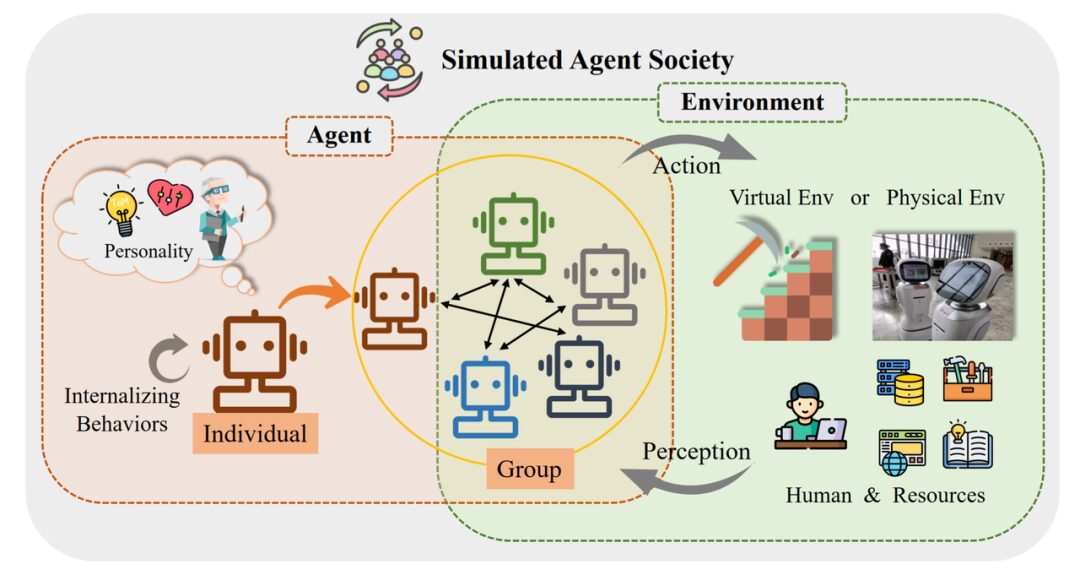

In the article, the authors describe the conceptual framework of Agent society with an illustration:

The conceptual framework of agent society is divided into two key parts: agents and environment.

In this framework, we can see:

- Left Part: At the individual level, agents exhibit various internalized behaviors, such as planning, reasoning, and reflection. Additionally, agents also display inherent personality traits, encompassing cognitive, emotional, and personality aspects.

- Middle Part: A single agent can form groups with other agent individuals, collectively exhibiting group behaviors such as cooperation.

- Right Part: The forms of the environment can be virtual sandbox environments or real physical worlds. Elements within the environment include human participants and various available resources. For a single agent, other agents also constitute part of the environment.

- Overall Interaction: Agents actively participate in the entire interaction process through perceiving the external environment and taking actions.

Social Behavior and Personality of Agents

The article examines the performance of agents in society from external behaviors and internal personalities:

Social Behavior: From a social perspective, behaviors can be divided into individual and collective levels:

- Individual behaviors form the basis of the agent’s operation and development. This includes inputs represented by perception, outputs represented by actions, and the agent’s own internalized behaviors.

- Collective behaviors refer to behaviors that arise when two or more agents interact spontaneously. This includes positive behaviors represented by cooperation, negative behaviors represented by conflict, and neutral behaviors such as conformity and bystander behavior.

Personality: Includes cognitive, emotional, and character traits. Just as humans gradually form their characteristics during socialization, agents also exhibit what is known as “human-like intelligence,” gradually shaping their personalities through interactions with groups and environments.

- Cognitive abilities: Encompass the processes by which agents acquire and understand knowledge. Research indicates that LLM-based agents can exhibit levels of thoughtfulness and intelligence comparable to humans in certain aspects.

- Emotional intelligence: Involves subjective feelings and emotional states, such as joy, anger, sorrow, and the ability to show sympathy and empathy.

- Character portrayal: To understand and analyze the personality traits of LLMs, researchers have utilized established assessment methods, such as the Big Five personality traits and MBTI tests, to explore the diversity and complexity of personalities.

Operating Environment of Simulated Societies

The agent society is composed not only of independent individuals but also includes the environment with which they interact. The environment influences agents’ perceptions, actions, and interactions. Conversely, agents also change the state of the environment through their behaviors and decisions. For a single agent, the environment includes other autonomous agents, humans, and available resources.

Here, the authors explore three types of environments:

Text-Based Environment: Since LLMs primarily rely on language as their input and output format, text-based environments are the most natural operating platforms for agents. Describing social phenomena and interactions through text provides semantic and contextual knowledge. Agents exist in such a textual world, relying on textual resources to perceive, reason, and take actions.

Virtual Sandbox Environment: In the computing field, a sandbox refers to a controlled and isolated environment commonly used for software testing and virus analysis. The virtual sandbox environment of agent society serves as a platform for simulating social interactions and behavior. Its main characteristics include:

- Visualization: The world can be presented using simple 2D graphical interfaces or complex 3D models to intuitively depict all aspects of the simulated society.

- Scalability: Various different scenarios (web, games, etc.) can be constructed and deployed for various experiments, providing agents with ample exploration space.

Real Physical Environment: The physical environment consists of tangible environments made up of actual objects and spaces where agents observe and act. This environment introduces rich sensory inputs (visual, auditory, and spatial awareness). Unlike virtual environments, physical spaces impose more demands on agent behaviors, requiring agents to be adaptive and generate executable motion controls.

The authors provide an example to explain the complexity of the physical environment: Imagine a scenario where intelligent agents operate robotic arms in a factory; precise control of force is needed to avoid damaging objects of different materials; additionally, agents must navigate through the physical workspace, timely adjusting movement paths to avoid obstacles and optimize the robotic arm’s motion trajectory.

These requirements add complexity and challenges to agents operating in physical environments.

In the article, the authors argue that a simulated society should possess openness, persistence, contextuality, and organization. Openness allows agents to enter and exit the simulated society autonomously; persistence refers to the society having a coherent trajectory that evolves over time; contextuality emphasizes the existence and operation of subjects in specific environments; and organization ensures that the simulated society has rules and constraints similar to the physical world.

As for the significance of simulated societies, Stanford University’s Generative Agents town provides a vivid example – the Agent society can be used to explore the boundaries of collective intelligence, such as agents collectively organizing a Valentine’s Day party; it can also accelerate research in social sciences, such as observing communication phenomena through simulating social networks. Additionally, some studies have simulated moral decision-making scenarios to explore the values behind agents and simulated the impact of policies on society to assist decision-making.

Furthermore, the authors point out that these simulations may also pose certain risks, including but not limited to: harmful social phenomena; stereotypes and biases; privacy and security issues; over-reliance and addiction.

Forward-looking Open Issues

At the end of the paper, the authors also discuss some forward-looking open issues, inviting readers to ponder:

How should research on intelligent agents and large language models promote each other and develop together? Large models demonstrate strong potential in language understanding, decision-making, and generalization, and play a key role in the construction of agents, while the progress of agents also places higher demands on large models.

What challenges and concerns will LLM-based Agents bring? Whether intelligent agents can truly be deployed depends on rigorous safety assessments to avoid harm to the real world. The authors summarize further potential threats, such as illegal abuse, unemployment risks, and impacts on human well-being.

What opportunities and challenges will arise from scaling up the number of agents? In simulated societies, increasing the number of individuals can significantly enhance the credibility and authenticity of the simulation. However, as the number of agents rises, communication and message propagation become quite complex, and information distortion, misunderstandings, or hallucination phenomena can significantly reduce the overall efficiency of the simulation system.

The debate on whether LLM-based Agents are the right path to AGI. Some researchers believe that large models represented by GPT-4 have been trained on sufficient corpora, and agents built on this foundation have the potential to become the key to unlocking AGI. However, other researchers argue that autoregressive language models do not exhibit true intelligence, as they merely respond. A more complete modeling approach, such as world modeling, is needed to achieve AGI.

The evolutionary history of collective intelligence. Collective intelligence is a process of gathering the opinions of many and converting them into decisions. However, will simply increasing the number of agents produce true “intelligence”? And how should individual agents be coordinated so that the intelligent agent society can overcome “groupthink” and individual cognitive biases?

Agent as a Service (AaaS). Because LLM-based Agents are more complex than large models themselves, it is more challenging for small and medium-sized enterprises or individuals to build them locally. Therefore, cloud vendors might consider offering intelligent agents in the form of services, namely Agent-as-a-Service. Like other cloud services, AaaS has the potential to provide users with high flexibility and on-demand self-service.

Source: WeChat Official Account [Machine Heart]