Machine learning (ML) is a vibrant and powerful field of computer science that now permeates almost every digital product we interact with, from social media and mobile phones to cars and even household appliances.

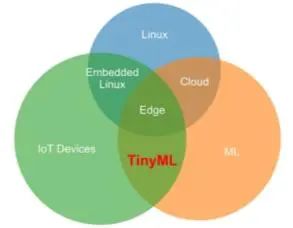

Artificial intelligence (AI) is rapidly moving from the “cloud” to the “edge,” finding its way into ever smaller IoT devices. Machine learning that runs on the microcontrollers of end devices and edge devices is called Tiny Machine Learning, or TinyML.

01

What is TinyML?

TinyML stands for “Tiny Machine Learning.” It refers to the methods, tools, and techniques engineers use to run machine learning on devices that operate at power levels in the milliwatt (mW) range and below.

As a result, it can serve battery-powered devices and applications that must be always on. These include smart cameras, remote monitoring devices, wearables, audio capture hardware, and various sensors.

TinyML sits at the intersection of several technical fields, namely IoT devices, machine learning, and edge computing, and it is advancing rapidly as the forces driving each of them converge.

02

Working Mechanism of TinyML

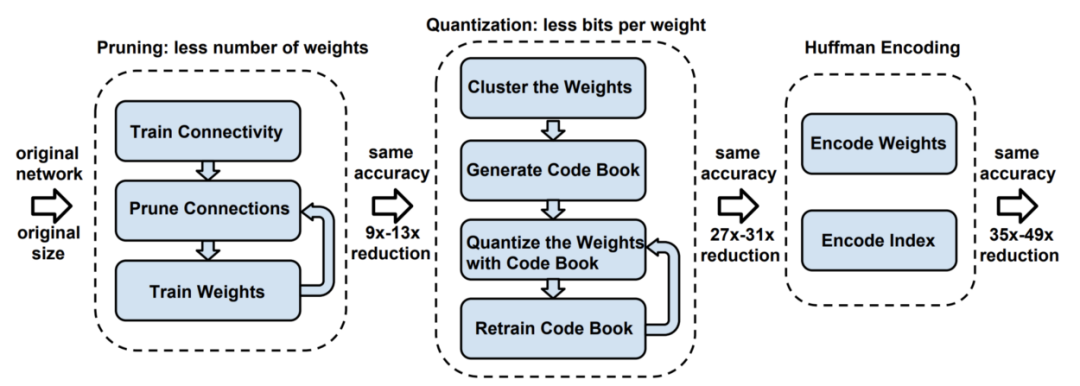

Illustration of deep compression. Source: arXiv paper.

TinyML models work almost the same way as traditional machine learning models, which are typically trained on a user's computer or in the cloud. Where TinyML really shines is the processing after training, commonly referred to as deep compression: the trained model is shrunk, for example by pruning unimportant weights and quantizing the rest, so that it fits on a microcontroller.
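To make the first stage of deep compression concrete, here is a minimal sketch of magnitude pruning in plain Python. It rests on simplifying assumptions: the weights are a flat list, and a fixed fraction of the smallest-magnitude weights is zeroed in one shot. The function names `magnitude_prune` and `sparsity_of` are hypothetical. Real pipelines usually prune gradually while fine-tuning, then quantize and encode the surviving weights.

```python
from typing import List, Sequence


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    `sparsity` is the fraction of weights to remove, e.g. 0.5 zeroes half of them.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Rank weight indices by absolute value, smallest first. Sorting indices
    # (not values) guarantees exactly n_prune weights are zeroed even with ties.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else float(w) for i, w in enumerate(weights)]


def sparsity_of(weights: Sequence[float]) -> float:
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


if __name__ == "__main__":
    w = [0.5, -0.1, 0.05, -0.8, 0.2, 0.01, -0.3, 0.9]
    p = magnitude_prune(w, 0.5)
    print(p)
    print(f"sparsity: {sparsity_of(p):.2f}")
```

The zeroed weights can then be stored in a sparse format or skipped at inference time, which is one source of the size savings that deep compression delivers.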

How TinyML Builds Intelligent IoT Devices

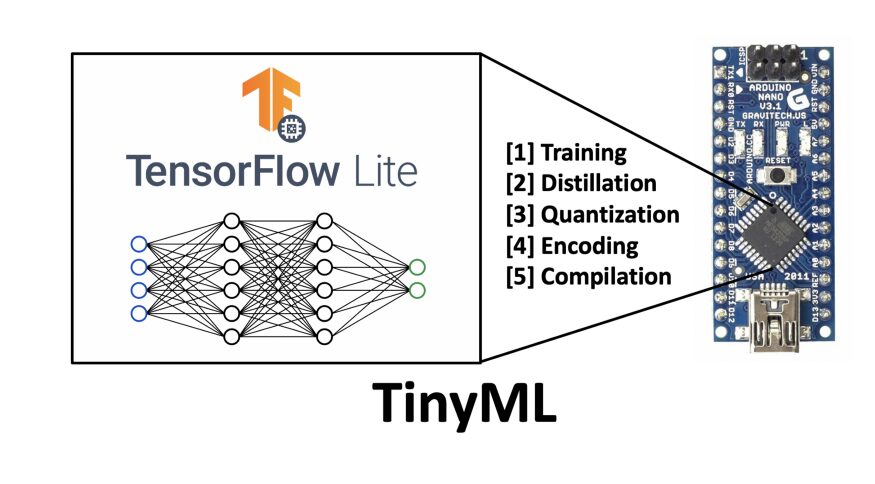

Deploying and running TensorFlow models on microcontrollers requires three steps:

Step 1: Build and train a small TensorFlow model.

Keras

Keras is a high-level neural network API written in Python. Early versions could run on TensorFlow, CNTK, or Theano as a backend; today it ships with TensorFlow as `tf.keras`. Here, we use Keras to build and train a TensorFlow model.
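As a minimal sketch of Step 1, the code below trains a tiny fully connected network on a toy task: predicting sin(x). The task is similar in spirit to the sine-wave model in TensorFlow Lite Micro's hello_world example. The dataset, layer sizes, and training settings are illustrative assumptions, not values from this article.

```python
import numpy as np
import tensorflow as tf


def make_dataset(n: int = 1000, seed: int = 0):
    """Hypothetical toy data: noisy samples of y = sin(x) for x in [0, 2*pi)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 2.0 * np.pi, size=(n, 1)).astype(np.float32)
    y = (np.sin(x) + 0.1 * rng.standard_normal((n, 1))).astype(np.float32)
    return x, y


def build_model() -> tf.keras.Model:
    """Two 16-unit hidden layers: 321 parameters in total, small enough for an MCU."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(1,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse", metrics=["mae"])
    return model


if __name__ == "__main__":
    x, y = make_dataset()
    model = build_model()
    # Hold out 20% of the samples to check that the model generalizes.
    model.fit(x, y, epochs=50, batch_size=32, validation_split=0.2, verbose=0)
    model.summary()
```

Keeping the network this small matters more than squeezing out the last bit of accuracy: every parameter must later fit in the microcontroller's flash, and every activation must fit in its RAM.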

Step 2: Convert the model to the TensorFlow Lite format with the TensorFlow Lite converter.

TensorFlow Lite

TensorFlow Lite is TensorFlow's lightweight solution for mobile and embedded devices. The TensorFlow Lite converter turns a TensorFlow model into a compact FlatBuffer file (`.tflite`). It can also quantize the model, converting 32-bit floating-point values into 8-bit integers, which shrinks the model and reduces its computational requirements.
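The sketch below shows full-integer (int8) post-training quantization with `tf.lite.TFLiteConverter`. Some details are placeholders: the small stand-in model and the random representative inputs should be replaced by your trained model and real sample data. The converter uses the representative dataset to calibrate the value range of each tensor. Each int8 value `q` then maps back to a real number through the affine rule real = scale × (q − zero_point). The helper `predict_int8` is a hypothetical desktop sanity check that applies this rule before the model is deployed.

```python
import numpy as np
import tensorflow as tf


def build_model() -> tf.keras.Model:
    """Tiny stand-in model (hypothetical); in practice, use your trained Keras model."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(1,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(1),
    ])


def representative_dataset():
    """Yields sample inputs so the converter can calibrate int8 ranges."""
    rng = np.random.default_rng(0)
    for _ in range(100):
        yield [rng.uniform(0.0, 2.0 * np.pi, size=(1, 1)).astype(np.float32)]


def convert_to_int8(model: tf.keras.Model) -> bytes:
    """Full-integer quantization: weights, activations, inputs and outputs in int8."""
    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.int8
    converter.inference_output_type = tf.int8
    return converter.convert()


def predict_int8(tflite_model: bytes, x: float) -> float:
    """Runs the quantized model on the desktop to sanity-check it before deployment."""
    interpreter = tf.lite.Interpreter(model_content=tflite_model)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]
    in_scale, in_zero = inp["quantization"]
    out_scale, out_zero = out["quantization"]
    # Affine mapping: real = scale * (q - zero_point), so q = real / scale + zero_point.
    q = int(np.clip(np.round(x / in_scale + in_zero), -128, 127))
    interpreter.set_tensor(inp["index"], np.full(tuple(inp["shape"]), q, dtype=np.int8))
    interpreter.invoke()
    y_q = interpreter.get_tensor(out["index"]).astype(np.float32)
    return float((y_q.reshape(-1)[0] - out_zero) * out_scale)


if __name__ == "__main__":
    tflite_model = convert_to_int8(build_model())
    print(f"model size: {len(tflite_model)} bytes")
    print(f"f(1.0) ~ {predict_int8(tflite_model, 1.0):.3f}")
```

Forcing int8 inputs and outputs suits microcontrollers without a floating-point unit. If your target has one, leaving `inference_input_type` and `inference_output_type` at their float defaults is a simpler option.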

Step 3: Run inference on the device with the C++ library and process the results.

TensorFlow Lite Micro

TensorFlow Lite Micro (TensorFlow Lite for Microcontrollers) is a lightweight inference engine for AIoT, designed to run machine learning models on microcontrollers and other resource-constrained devices.
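Step 3 usually begins by embedding the `.tflite` file in the firmware as a byte array, because many microcontrollers have no file system. The TensorFlow Lite Micro documentation does this with `xxd -i`. The sketch below is a plain-Python equivalent. The names `to_c_array`, `write_model_source`, and `g_model` are hypothetical. The 16-byte `alignas` is a conservative assumption, since FlatBuffer data should be suitably aligned in memory. The generated C++ source is compiled into the firmware, and the application hands the array to the TensorFlow Lite Micro interpreter.

```python
from pathlib import Path


def to_c_array(data: bytes, var_name: str = "g_model", bytes_per_line: int = 12) -> str:
    """Render raw bytes as C++ source, similar in spirit to `xxd -i`."""
    lines = [f"alignas(16) const unsigned char {var_name}[] = {{"]
    for start in range(0, len(data), bytes_per_line):
        chunk = data[start:start + bytes_per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    # Length symbol, so the firmware knows the size of the model blob.
    lines.append(f"const unsigned int {var_name}_len = {len(data)};")
    return "\n".join(lines) + "\n"


def write_model_source(tflite_path: str, out_path: str, var_name: str = "g_model") -> None:
    """Reads a .tflite file and writes it out as a C++ source file."""
    data = Path(tflite_path).read_bytes()
    Path(out_path).write_text(to_c_array(data, var_name))
```

For example, `write_model_source("sine_int8.tflite", "model_data.cc")` (hypothetical file names) produces a source file that can be added to the firmware build.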

03

Characteristics and Importance of TinyML

TinyML aims to ease several problems that are hard to solve with cloud ML or edge ML: data privacy, network bandwidth, latency, reliability, and energy efficiency.

Data Privacy:

Many end users are highly concerned about data privacy and are wary of opening up or sharing their data. They are often reluctant to let third-party cloud platforms or edge service providers store and manage it. Instead, they prefer to keep critical production and operational data within clearly defined “local” physical boundaries. TinyML addresses this by processing and analyzing sensitive data directly on the IoT device, so the raw data does not have to leave it.

Network Bandwidth:

Many IoT devices connect to networks through Narrowband IoT (NB-IoT) or other low-power wide-area network (LPWAN) protocols, which offer very limited bandwidth and data rates. Such devices need to process data locally for several reasons: to cut the volume they transmit, ease the load on the network, and reduce transmission power. Local processing also avoids bandwidth bottlenecks between end devices and the edge that could degrade the performance of the entire IoT solution.
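A back-of-the-envelope calculation shows the scale of the savings. Assume, purely for illustration, a device with a 16 kHz, 16-bit mono microphone. It either streams raw audio, or it runs an on-device keyword spotter and uploads a hypothetical 16-byte event record about 100 times a day:

```python
SECONDS_PER_DAY = 86_400


def raw_stream_bytes_per_day(sample_rate_hz: int, bytes_per_sample: int, channels: int = 1) -> int:
    """Bytes per day if the device uploads every raw sample."""
    return sample_rate_hz * bytes_per_sample * channels * SECONDS_PER_DAY


def event_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Bytes per day if the device uploads only inference results."""
    return events_per_day * bytes_per_event


if __name__ == "__main__":
    raw = raw_stream_bytes_per_day(16_000, 2)  # illustrative microphone settings
    events = event_bytes_per_day(100, 16)      # illustrative event rate and size
    print(f"raw audio: {raw:,} bytes/day ({raw * 8 / SECONDS_PER_DAY / 1000:.0f} kbit/s)")
    print(f"events only: {events:,} bytes/day")
    print(f"reduction: {raw // events:,}x")
```

Under these assumptions, streaming raw audio would need 256 kbit/s around the clock, about 2.76 GB per day. The event-only design sends 1,600 bytes per day, a reduction of more than six orders of magnitude.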

Latency:

With the development of technologies such as 5G, massive numbers of IoT devices will be deployed, and many applications are highly latency-sensitive and require real-time responses. By moving certain machine learning tasks onto the device itself, TinyML removes the network round trip from those tasks, and with it the network latency.

Reliability:

In remote areas, on offshore platforms and space stations, and in other extreme environments, network connectivity cannot always be guaranteed. In these settings, on-device machine learning becomes a necessity. TinyML moves some machine learning capabilities from the edge and cloud onto the device itself, improving reliability.

Energy Efficiency:

Many IoT devices are battery-powered and operate under strict power budgets. Analyzing data locally with ultra-low-power TinyML reduces the amount of data sent over the network. This in turn lowers the energy consumption of IoT end devices, since radio transmission is often one of the most power-hungry operations they perform.

Because it can address several of these issues at once and overcome limits on cost, bandwidth, and power consumption, TinyML has attracted wide attention and high expectations since it emerged.

04

Applications of TinyML

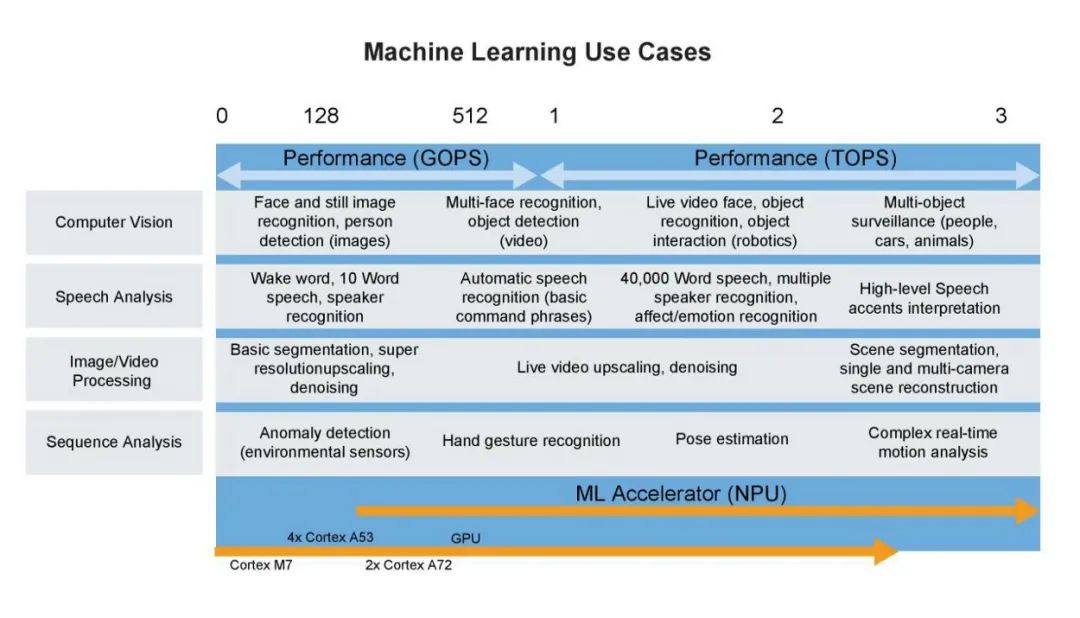

Machine learning use cases for TinyML. Image source: NXP

TinyML may well become part of your daily life in some form.

Applications of TinyML include:

Keyword detection

Object recognition and classification

Gesture recognition

Audio detection

Machine monitoring

Two major examples:

1) In-vehicle applications

Swim.AI applies TinyML to streaming data, making sensors better at processing real-time traffic data. This helps reduce passenger wait times and traffic congestion, lower vehicle emissions, and improve ride safety.

2) Smart factories

In manufacturing, TinyML enables real-time decision-making on the factory floor, reducing unplanned downtime caused by equipment failures. Based on the condition of the equipment, it can alert workers when preventive maintenance is needed.
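The alerting loop can be sketched as follows. This minimal example uses a rolling z-score as a stand-in for a trained model. It computes the RMS vibration level of each window of accelerometer samples and flags values far outside the recent baseline. The class name, window size, and threshold are illustrative assumptions. A TinyML deployment would typically replace the statistical test with a small learned model (for instance, an anomaly-detection network), but the surrounding on-device flow stays the same.

```python
import math
from collections import deque
from typing import Deque, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of accelerometer samples."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


class VibrationMonitor:
    """Flags readings that deviate strongly from a rolling baseline (z-score test)."""

    def __init__(self, window: int = 50, threshold: float = 4.0,
                 min_samples: int = 10, min_std: float = 1e-6) -> None:
        self.history: Deque[float] = deque(maxlen=window)  # recent normal readings
        self.threshold = threshold      # z-score above which we raise an alert
        self.min_samples = min_samples  # readings needed before alerts are possible
        self.min_std = min_std          # floor that avoids dividing by zero

    def update(self, value: float) -> bool:
        """Return True if `value` looks anomalous; otherwise add it to the baseline."""
        if len(self.history) >= self.min_samples:
            n = len(self.history)
            mean = sum(self.history) / n
            std = math.sqrt(sum((v - mean) ** 2 for v in self.history) / n)
            z = abs(value - mean) / max(std, self.min_std)
            if z > self.threshold:
                return True  # keep anomalies out of the baseline
        self.history.append(value)
        return False


if __name__ == "__main__":
    monitor = VibrationMonitor(window=20)
    for i in range(20):
        monitor.update(1.0 if i % 2 == 0 else 1.1)  # normal operation
    print(monitor.update(5.0))  # a sudden vibration spike
```

Because anomalous readings are never added to the history, a persistent fault keeps triggering alerts instead of slowly becoming the new “normal.”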

Talk Outline

What problems does machine learning solve?

Differences between traditional machine learning and today's machine learning technologies

Case studies

TinyML principles and applications

Guest Speaker

Ouyang Jun Light

Technical Director at an industrial automation software company

Has long worked in technical R&D positions across telecommunications, software, and internet companies, accumulating extensive project experience spanning hardware, software, and the internet, with full-stack technical integration capabilities. Proficient in multiple mainstream programming languages, including C++, C#, JavaScript, and Python. Experienced in development for various MCUs and familiar with PCB and FPGA design. Has wide-ranging interests, keeps up with new technologies, and enjoys sharing technical experience with peers.

If you are interested, join our online live broadcast on

Thursday, May 5

19:30-21:00

See you in the live studio!

Co-organizers

Mushroom Cloud Makerspace is dedicated to providing an innovative and open communication platform. If you love creating and enjoy innovation, come to Mushroom Cloud to realize your creative ideas!
