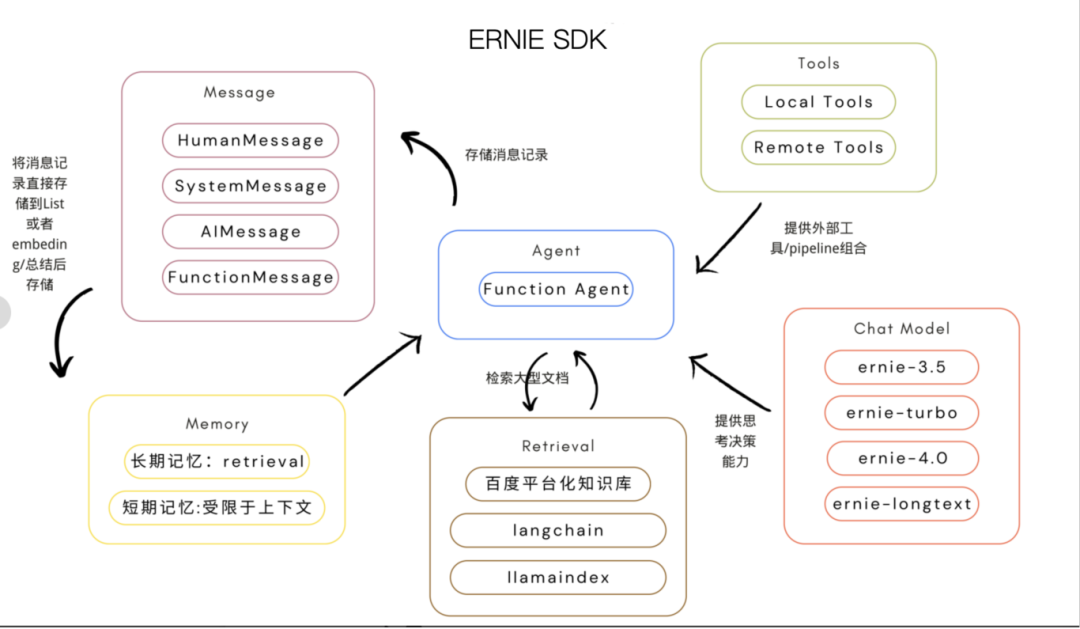

ERNIE SDK

In some complex scenarios, an application needs to call LLMs and a series of tools flexibly, depending on user input. Agents make such applications possible. The ERNIE SDK supports Agent development driven by the Function Calling capability of the Wenxin large model. Developers can use preset Agents directly, instantiating them from a Chat Model, Tools, and Memory, or build their own Agents by inheriting from the base class erniebot_agent.agents.Agent.

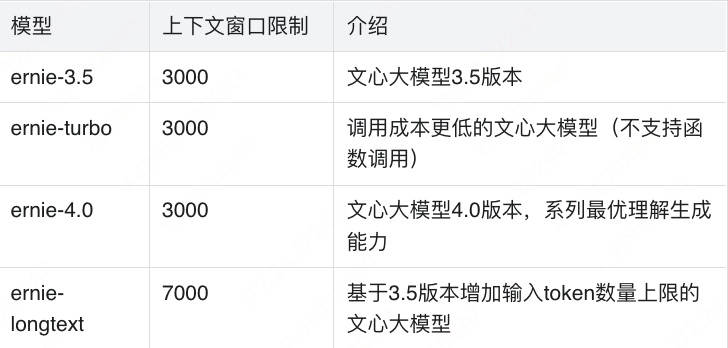

The Chat Model module is the ERNIE SDK's core scheduler for decision-making. It is backed by the Wenxin large model, the knowledge-enhanced large language model developed by Baidu.

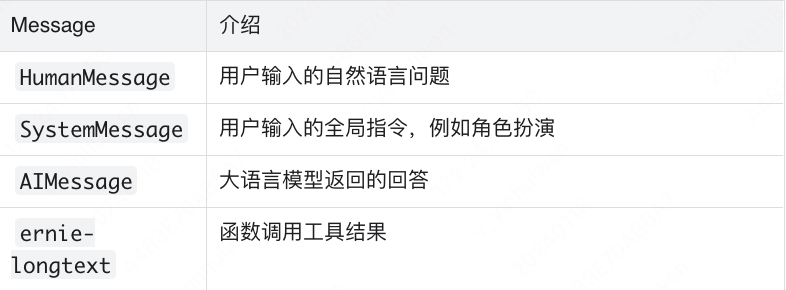

This module standardizes user input and the responses returned by the Wenxin large model as messages, which makes them easier to store in the Memory module described next.

A large language model has no memory of its own, so giving the Agent memory is an important part of building large model applications. The ERNIE SDK provides a quick memory function that stores the messages from multiple rounds of dialogue in a list and passes them into the context window of the Chat Model. This memory mode is limited by the input token limit of the Wenxin large model. The ERNIE SDK also lets developers build more complex memory modules, with the following approaches available:
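The list-based memory and its token limit can be illustrated with a minimal sketch. It is not the ERNIE SDK's implementation: `Message`, `WindowMemory`, and `estimate_tokens` are hypothetical names, and the word count only stands in for a real tokenizer.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    """A standardized chat message: who said it and what was said."""
    role: str      # "user" or "assistant"
    content: str


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())


class WindowMemory:
    """Stores every message in a list, but only hands back the most
    recent ones that fit inside a token budget."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages: List[Message] = []

    def add(self, message: Message) -> None:
        self.messages.append(message)

    def context(self) -> List[Message]:
        """Walk backwards from the newest message until the budget is spent."""
        selected: List[Message] = []
        used = 0
        for message in reversed(self.messages):
            cost = estimate_tokens(message.content)
            if used + cost > self.max_tokens:
                break
            selected.append(message)
            used += cost
        selected.reverse()  # restore chronological order
        return selected


memory = WindowMemory(max_tokens=8)
memory.add(Message("user", "Please review my manuscript"))   # 4 words
memory.add(Message("assistant", "Sure, send it over"))       # 4 words
memory.add(Message("user", "Here it is"))                    # 3 words
window = memory.context()
print([m.content for m in window])
```

With a budget of 8, the oldest message no longer fits, which is the limitation the more complex memory modules are meant to address.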

1. Vector store-backed memory: the Messages from each round of dialogue are embedded and stored in a vector database. In later rounds, approximate semantic vector retrieval finds the stored memory segments that best match the user's natural language input. This method provides long-term memory that is no longer limited by the context window of the Wenxin large model.

2. Conversation summary memory: after each round of dialogue, the Chat Model summarizes the dialogue, and only the brief summary is stored, which reduces storage pressure.

3. LangChain/LlamaIndex: the ERNIE SDK lets developers freely integrate frameworks such as LlamaIndex to implement customized memory modules, using LlamaIndex's document retrieval capabilities to achieve longer-term memory.
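As an illustration of the second approach, here is a minimal sketch of conversation summary memory. The class and function names are hypothetical, and `keyword_summarizer` is only a truncating stand-in for the Chat Model call that would produce a real summary.

```python
from typing import Callable


def keyword_summarizer(previous_summary: str, new_round: str) -> str:
    """Hypothetical stand-in for a Chat Model call: keep only the first
    five words of the new round and append them to the running summary."""
    short = " ".join(new_round.split()[:5])
    return f"{previous_summary}; {short}" if previous_summary else short


class SummaryMemory:
    """Keeps one running summary instead of the full message history."""

    def __init__(self, summarizer: Callable[[str, str], str]) -> None:
        self.summarizer = summarizer
        self.summary = ""
        self.rounds_seen = 0

    def add_round(self, user_text: str, reply_text: str) -> None:
        """Fold one round of dialogue into the stored summary."""
        new_round = f"{user_text} -> {reply_text}"
        self.summary = self.summarizer(self.summary, new_round)
        self.rounds_seen += 1

    def context(self) -> str:
        """Text to place in the Chat Model's context window."""
        return self.summary


memory = SummaryMemory(keyword_summarizer)
memory.add_round("Check my draft for banned words", "Two banned words were found")
memory.add_round("Rewrite the second paragraph please", "Done, the paragraph is rewritten")
print(memory.context())
```

Passing the summarizer in as a callable keeps the memory independent of any particular model, so the stand-in can be swapped for a real Chat Model call.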

Letting Agents autonomously combine and use external tools to solve more complex problems is key to the widespread adoption of AI applications. The ERNIE SDK lets developers quickly build complex applications with the more than 30 tools already available in the PaddlePaddle Star Community, and customize local tools for their own business needs.
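To make the idea of a custom local tool concrete, the sketch below pairs a tool description with a Python function and dispatches a function-call payload to it. The registry layout, the `count_words` tool, and the payload shape are assumptions for illustration, not the ERNIE SDK's tool interface.

```python
import json
from typing import Any, Dict


def count_words(text: str) -> Dict[str, int]:
    """A hypothetical local tool: count the words in a piece of text."""
    return {"word_count": len(text.split())}


# Each entry pairs a machine-readable description (what the model sees)
# with the Python callable (what the agent actually runs).
TOOLS: Dict[str, Dict[str, Any]] = {
    "count_words": {
        "description": "Count the words in a manuscript.",
        "parameters": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "func": count_words,
    }
}


def dispatch(function_call: Dict[str, str]) -> str:
    """Run the tool named in a function-call payload and return JSON text."""
    name = function_call["name"]
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    arguments = json.loads(function_call["arguments"])
    required = TOOLS[name]["parameters"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"Missing arguments for {name}: {missing}")
    result = TOOLS[name]["func"](**arguments)
    return json.dumps(result)


call = {"name": "count_words", "arguments": json.dumps({"text": "AI agents call tools"})}
output = dispatch(call)
print(output)
```

The description is what allows a model with Function Calling to choose the tool and fill in its arguments; the agent side only has to validate and execute the call.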

Although general large models absorb extensive knowledge during training, they have limited understanding of specific fields and proprietary business knowledge. Fine-tuning a large model on domain data is costly, which is why RAG (Retrieval Augmented Generation) was introduced: it connects external knowledge bases to the large model so that it can draw on specialized knowledge in a specific field. Key functions of the Retrieval module include:

- Loading data sources, covering various data types:
  - Structured data, such as SQL and Excel
  - Unstructured data, such as PDF and PPT documents
  - Semi-structured data, such as Notion documents
- Chunking data transformation.
- Embedding processing of data.
- Storing processed data in the vector database.
- Quickly locating relevant information through approximate vector retrieval.

The ERNIE SDK's Retrieval module not only supports Baidu's Wenxin Baizhong search but is also compatible with the Retrieval components of LangChain and LlamaIndex, significantly improving the efficiency and accuracy of data processing.
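The key functions listed above (load, chunk, embed, store, retrieve) can be sketched end to end in a few lines. Everything here is a toy under stated assumptions: the embedding is a bag-of-words count rather than a real embedding model, `VectorStore` is an in-memory list rather than a vector database, and the search is exact brute force rather than approximate.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str, size: int = 8) -> List[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.rows: List[Tuple[str, Counter]] = []

    def add(self, texts: List[str]) -> None:
        for text in texts:
            self.rows.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored chunks most similar to the query."""
        query_vector = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(query_vector, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


documents = [
    "Refunds are processed within seven days of the request.",
    "Manuscripts must avoid medical claims and misleading advertising.",
]
store = VectorStore()
for document in documents:          # load
    store.add(chunk(document))      # chunk, embed, store
top = store.search("Which claims must manuscripts avoid?")  # retrieve
print(top)
```

In a RAG application, the retrieved chunks would be placed into the prompt so that the model answers with the external knowledge in view.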

Now let's walk through how to develop an Agent: a manuscript review assistant. Its main function is to check whether manuscripts published on various platforms comply with the standards.

First step, log in to the PaddlePaddle Star Community and create a new personal project. The free computing resources provided by the community are sufficient.

Second step, manage your sensitive token securely. We recommend Dotenv: install it, then save your token in a newly created .env file. Note that this file is hidden by default in the file directory; to view it, you must change the display settings.

Example .env file content:

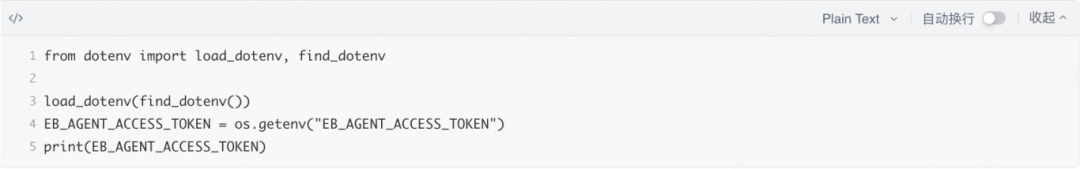

Third step, verify that your access token loads correctly.
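A minimal sketch of such a check follows. The variable name `EB_AGENT_ACCESS_TOKEN` is an assumption; use whatever name you wrote in your own .env file. The sketch uses `load_dotenv` from the python-dotenv package when it is installed and otherwise falls back to a small hand-written parser that handles only simple `KEY=VALUE` lines.

```python
import os
from pathlib import Path
from typing import Optional

TOKEN_VAR = "EB_AGENT_ACCESS_TOKEN"  # assumed variable name; match your .env file


def load_env_file(path: str = ".env") -> None:
    """Load KEY=VALUE pairs from a .env file into os.environ."""
    try:
        from dotenv import load_dotenv  # provided by python-dotenv
    except ImportError:
        load_dotenv = None
    if load_dotenv is not None:
        load_dotenv(dotenv_path=path)
        return
    # Fallback: a minimal parser that skips blank lines and comments.
    env_file = Path(path)
    if not env_file.exists():
        return
    for line in env_file.read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Like load_dotenv's default, do not overwrite existing variables.
        os.environ.setdefault(key.strip(), value.strip().strip("'\""))


def check_token(var_name: str = TOKEN_VAR) -> Optional[str]:
    """Print the token if it is set, or a hint if it is missing."""
    token = os.getenv(var_name)
    if token:
        print(token)
    else:
        print(f"{var_name} is not set; check your .env file")
    return token


load_env_file()
check_token()
```

Printing the full token is convenient for a one-off check in a private notebook, but avoid it anywhere the output is logged or shared.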

If everything is working, the check prints your access token. Then create a new text file, manuscript.txt, containing the text you want to review for compliance.

Fourth step, build a basic Agent using the pre-built tools provided by the PaddlePaddle Star Community Tool Center.
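The sketch below does not use the ERNIE SDK. It is a self-contained simulation of the function-calling loop such an Agent runs, so the flow can be followed without a token. `fake_chat_model`, `text_moderation`, `run_agent`, and the banned-word list are all hypothetical stand-ins for the Wenxin large model and the hosted text moderation tool.

```python
import json
from typing import Any, Callable, Dict, List

BANNED_WORDS = {"miracle", "guaranteed"}  # hypothetical policy, illustration only


def text_moderation(text: str) -> Dict[str, Any]:
    """Local stand-in for a hosted text moderation tool."""
    hits = sorted(word for word in BANNED_WORDS if word in text.lower())
    return {"compliant": not hits, "flagged_terms": hits}


def fake_chat_model(messages: List[Dict[str, str]]) -> Dict[str, Any]:
    """Stand-in for the chat model: request the tool first, then turn
    the tool result into a final answer."""
    last = messages[-1]
    if last["role"] == "function":
        result = json.loads(last["content"])
        if result["compliant"]:
            verdict = "compliant"
        else:
            verdict = "not compliant: " + ", ".join(result["flagged_terms"])
        return {"content": f"The manuscript is {verdict}."}
    arguments = json.dumps({"text": last["content"]})
    return {"function_call": {"name": "text_moderation", "arguments": arguments}}


def run_agent(prompt: str, tools: Dict[str, Callable[..., Any]], max_steps: int = 3) -> str:
    """Loop: ask the model, run any tool it requests, feed the result back."""
    messages = [{"role": "user", "content": prompt}]  # this list doubles as memory
    for _ in range(max_steps):
        reply = fake_chat_model(messages)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]
        result = tools[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "function", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("Agent did not finish within max_steps")


manuscript = "Our miracle cream gives guaranteed results."
answer = run_agent(manuscript, {"text_moderation": text_moderation})
print(answer)
```

In the real project, the manuscript text would come from manuscript.txt, the model's decision would come from the Wenxin large model, and the tool would be the pre-built one from the Tool Center.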

When you run the Agent, you will see it use the [text-moderation/v1.2/text_moderation] tool to review the manuscript content and output the review results. That completes a simple manuscript review assistant Agent, and shows how quickly a practical Agent can be developed with the ERNIE SDK.

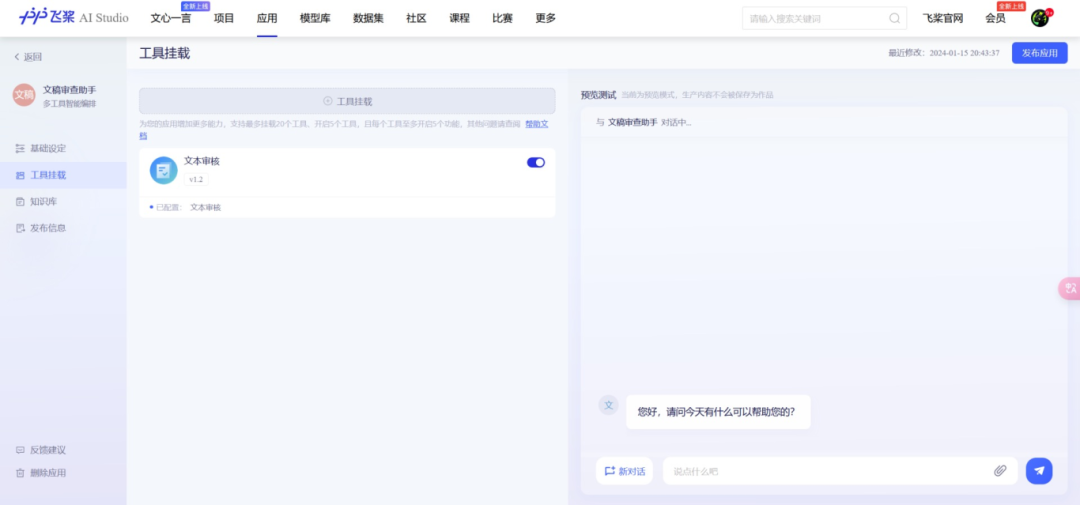

After exploring the ERNIE SDK in depth, let's look at the multi-tool intelligent orchestration feature of the PaddlePaddle Star Community. Besides the fine-grained SDK for technical developers who need detailed control, the community offers multi-tool intelligent orchestration, which lets developers integrate external tools on top of the Wenxin large model to create personalized AI applications. Compared with using the ERNIE SDK alone, this approach is faster and more convenient and greatly simplifies development. We will use it to recreate the manuscript review assistant.

First, create an application using low-code development and select intelligent orchestration.

Second, in the tool mounting section of the sidebar, click to mount the “text review tool”, one of the more than 30 pre-built tools provided by the PaddlePaddle Star Community Tool Center; you can also create your own tools.

Then, set the role identity for the manuscript assistant in the basic settings. After clicking to apply all settings, you can experience it in the sidebar.

It is worth mentioning that multi-tool intelligent orchestration in the PaddlePaddle Star Community is very friendly to team members without a technical background. Even without in-depth programming knowledge, they can get started quickly and build their own AI applications. The manuscript assistant above takes only a few minutes to create, which speeds up product iteration and promotes collaboration and innovation within the team.

Baidu PaddlePaddle is currently accepting applications for this feature; visit the PaddlePaddle Star Community to learn more and apply.

With the development of general large language models and the rise of intelligent Agent technology, we are entering a new era of AI application development. From the in-depth exploration of the ERNIE SDK to multi-tool intelligent orchestration in the PaddlePaddle Star Community, we have seen how AI frameworks such as the Baidu PaddlePaddle ERNIE SDK break traditional boundaries and give developers new convenience and development potential. Both developers with a strong technical background and non-technical personnel can find their place in this new era and jointly promote the progress of AI technology and the popularization of AI applications. The future of AI is full of potential, and the vast world of AI applications awaits our exploration and creation.

Related Links

Multi-tool intelligent orchestration application registration: https://aistudio.baidu.com/activitydetail/1503017298
