01

Reinforcement learning (RL), also known as evaluative learning or enhanced learning, is a machine learning paradigm for describing and solving problems in which an agent learns a strategy through interaction with its environment in order to maximize reward or achieve a specific goal. It is inspired by behaviorist theory in psychology, which explains how organisms, given rewards or punishments from the environment, gradually form expectations about stimuli and develop habitual behaviors that maximize benefit. Because the approach is so general, it is also studied in many other fields, such as game theory, control theory, operations research, information theory, simulation-based optimization, multi-agent systems, swarm intelligence, statistics, and genetic algorithms.

The usual model for reinforcement learning is the standard Markov decision process (MDP). Depending on the given conditions, reinforcement learning can be divided into model-based and model-free reinforcement learning, and into active and passive reinforcement learning. Variants include inverse reinforcement learning, hierarchical reinforcement learning, and reinforcement learning in partially observable systems. The algorithms used to solve reinforcement learning problems fall into two groups: policy search algorithms and value function algorithms. Deep learning models can be used within reinforcement learning, which gives deep reinforcement learning.

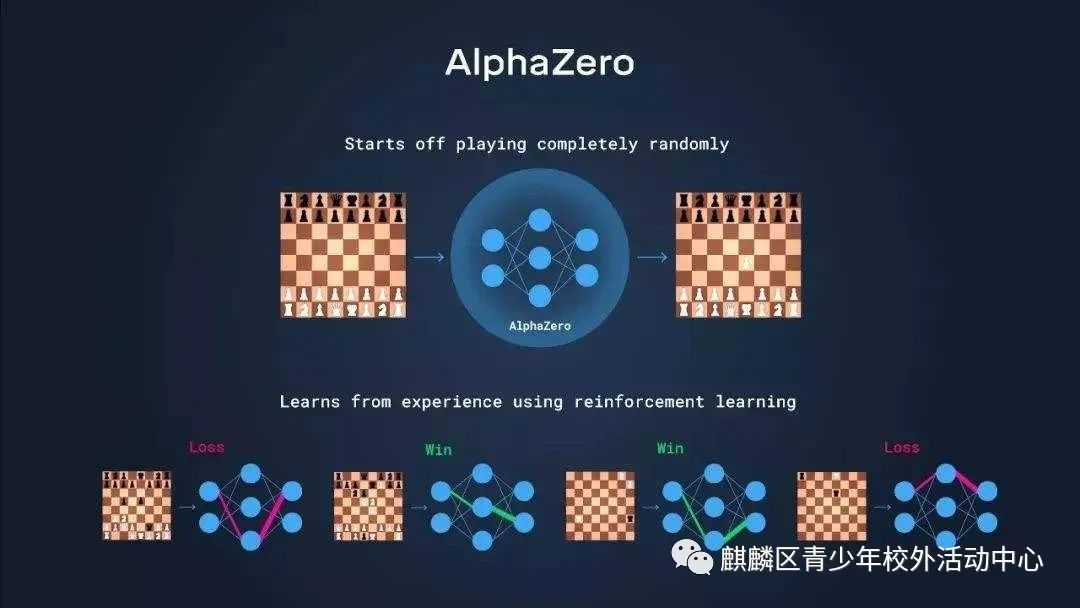

Reinforcement learning problems have been discussed in fields such as information theory, game theory, and automatic control. Reinforcement learning has been used to explain equilibria under bounded rationality and to design recommendation systems and robot interaction systems. Some sophisticated reinforcement learning algorithms are general enough to solve complex problems and have reached human-level performance in Go and in video games.

02

Reinforcement learning has developed from theories such as animal learning and parameter perturbation adaptive control, with its basic principle being:

If an action strategy of the agent leads to a positive reward (reinforcement signal) from the environment, the agent's tendency to produce this action strategy in the future is strengthened. The agent's goal is to find, for each discrete state, the optimal strategy that maximizes the expected discounted reward.

Reinforcement learning views learning as a trial-and-error evaluation process, where the agent selects an action to interact with the environment. After the environment accepts the action, the state changes, and a reinforcement signal (reward or punishment) is generated and fed back to the agent. The agent then selects the next action based on the reinforcement signal and the current state of the environment, with the principle of increasing the probability of receiving positive reinforcement (reward). The chosen action not only affects the immediate reinforcement value but also influences the state of the environment at the next moment and the final reinforcement value.
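The trial-and-error loop described above can be sketched in Python. This is a minimal illustration under stated assumptions, not a reference implementation: `CorridorEnv`, `run_episode`, the `reset`/`step` method names, and the reward values 1.0 and -0.1 are all hypothetical choices made for the example.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: positions 0..4 on a line, goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); the position is clipped to the corridor.
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # scalar reinforcement signal (assumed values)
        return self.state, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Run one episode and return the list of rewards the agent received."""
    state = env.reset()
    rewards = []
    for _ in range(max_steps):
        action = choose_action(state)           # agent selects an action
        state, reward, done = env.step(action)  # environment changes state and emits a reward
        rewards.append(reward)
        if done:
            break
    return rewards


rng = random.Random(0)
random_rewards = run_episode(CorridorEnv(), lambda s: rng.choice([-1, 1]))
always_right = run_episode(CorridorEnv(), lambda s: 1)
```

The action chosen at each step changes both the immediate reward and the next state, which is why `always_right` finishes in fewer steps than a random walk typically does.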

Reinforcement learning differs from the supervised learning used in connectionist learning mainly in the teacher signal. In reinforcement learning, the reinforcement signal provided by the environment is an evaluation (usually a scalar) of how good the agent's action was, not an instruction telling the agent how to produce the correct action. Because the external environment provides so little information, the agent must learn from its own experience. In this way the agent acquires knowledge in an environment that evaluates its actions, and improves its action plan to adapt to that environment.

03

Design Considerations

1. How to represent the state space and action space.

2. How to choose and define the reinforcement signal, and how to adjust the values of different state-action pairs through learning.

3. How to select suitable actions based on these values.

When reinforcement learning is used to study robot navigation in unknown environments, these issues become more complex because of the complexity and uncertainty of the environment.
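As one illustration of point 2, the value of a state-action pair can be adjusted with the tabular Q-learning rule. The text does not name a specific algorithm, so Q-learning is an assumed choice here, as are the learning rate `alpha`, the discount factor `gamma`, and the state and action labels.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value."""
    # Value of the best action available in the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    # Move the old estimate a fraction alpha of the way toward the target.
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)           # unseen state-action pairs start at 0.0
actions = ["left", "right"]      # hypothetical action labels
q[("s1", "right")] = 1.0
new_value = q_learning_update(q, "s0", "right", reward=0.0,
                              next_state="s1", actions=actions)
```

This sketch does not treat terminal states specially; a fuller version would use the reward alone as the target when the episode ends.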

04

Goals

The goal is to learn a mapping from environment states to actions such that the actions the agent chooses obtain the maximum reward from the environment, so that the external environment's evaluation of the learning system (or the overall system performance) is optimal in some sense.

Reinforcement learning learns while it collects samples: it updates its model after obtaining a sample and uses the current model to guide the next action. After the next action receives a reward, the model is updated again, and this repeats until the model converges. A key question in this process is "given the current model, which action should be chosen next to best improve it?" This involves two very important concepts in RL: exploration and exploitation. Exploration means selecting actions that have not been tried before in order to discover more possibilities; exploitation means selecting actions that have already been tried and are known to pay off, which also refines the model of those known actions.
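A common way to balance the two is the epsilon-greedy rule, sketched below. The text does not prescribe this rule; it is an assumed example, and `epsilon_greedy`, `estimates`, and the value of `epsilon` are hypothetical names and numbers.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index: random with probability epsilon, otherwise the best-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                       # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])   # exploit


estimates = [0.2, 0.8, 0.5]  # current value estimate for each of three actions
greedy_choice = epsilon_greedy(estimates, epsilon=0.0)  # never explores
rng = random.Random(0)
explore_choices = [epsilon_greedy(estimates, epsilon=1.0, rng=rng) for _ in range(200)]
```

With `epsilon=0.0` the rule always exploits the highest estimate; with `epsilon=1.0` it always explores. Values in between trade one off against the other.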

The three most important characteristics of reinforcement learning are:

(1) It is fundamentally a closed-loop form;

(2) It does not directly instruct which actions to choose;

(3) A series of actions and reward signals will affect subsequent outcomes over a longer period.

05

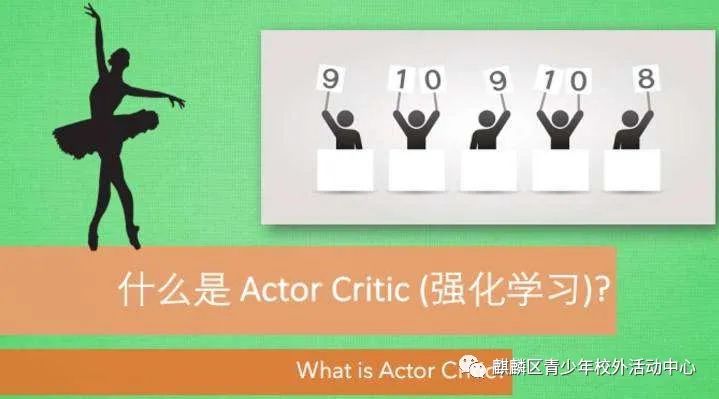

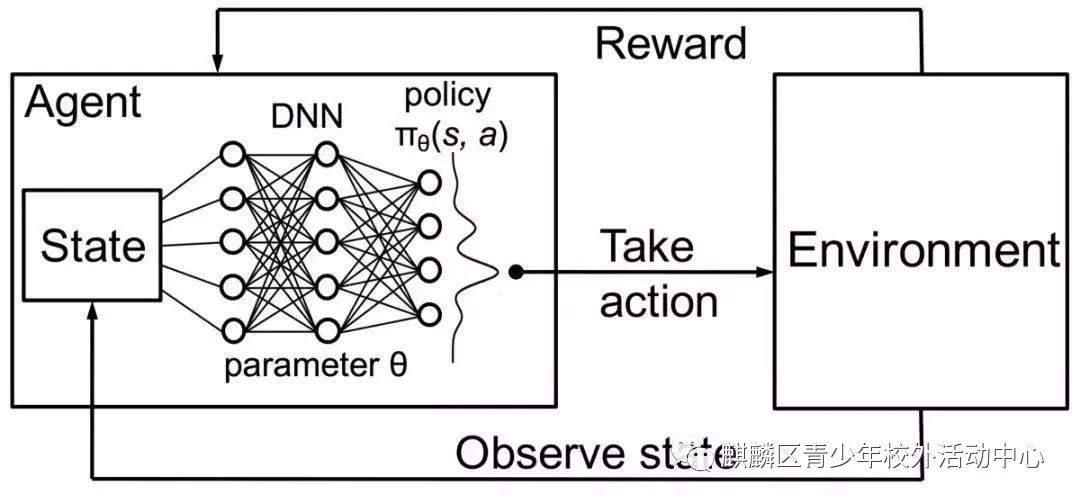

The decision-making process of reinforcement learning requires an agent (the brain in the diagram) that receives an observation of the current environment and, after it performs an action, a reward. The environment is what the agent interacts with, and the agent cannot control it; initially, the agent does not know how the environment will respond to different actions. The environment tells the agent its current state through the observation, and it returns a reward based on the outcome, indicating how good or bad the agent's decision was. The overall optimization goal of reinforcement learning is to maximize cumulative reward.

There are four very important concepts in reinforcement learning:

(1) Policy

The policy defines the behavior of agents in a specific environment at a specific time and can be viewed as a mapping from environmental states to actions, commonly denoted by π. Policies can be divided into two categories:

Deterministic policy: a=π(s)

Stochastic policy: π(a|s)=P[At=a|St=s]

where t is the time point, t=0,1,2,3,…

St∈S, S is the set of environmental states, and St represents the state at time t, while s represents a specific state within it;

At∈A(St), A(St) is the set of actions in state St, and At represents the action at time t, while a represents a specific action within it.
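The two kinds of policy can be illustrated with a short Python sketch. The integer states, the action labels "left" and "right", and the probabilities 0.9 and 0.1 are all assumptions made for the example.

```python
import random


def deterministic_policy(state):
    """a = pi(s): the same state always yields the same action."""
    return "right" if state < 3 else "left"


def stochastic_policy(state):
    """pi(a|s): a probability for each action in the given state."""
    p_right = 0.9 if state < 3 else 0.1
    return {"left": 1.0 - p_right, "right": p_right}


def sample_action(policy, state, rng=random):
    """Draw the action At from the distribution pi(.|St = state)."""
    probs = policy(state)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


fixed = deterministic_policy(0)
sampled = sample_action(stochastic_policy, 0, random.Random(0))
```

A deterministic policy is the special case of a stochastic one in which a single action has probability 1.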

(2) Reward signal

The reward is a scalar value returned to the agent by the environment based on the agent’s actions at each time step, defining the quality of performing that action in that context. The reward is commonly denoted by R.

(3) Value function

The reward defines the immediate gain, while the value function defines the long-term gain: it can be viewed as the expected cumulative reward from a state onward, and is commonly denoted by v.
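To make the difference between immediate and long-term gain concrete, the sketch below computes the discounted cumulative reward of one observed reward sequence. The discount factor `gamma` and the function name `discounted_return` are assumptions; a value function would be the expectation of this quantity over the sequences a policy can generate.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum the rewards, weighting the reward received k steps later by gamma**k."""
    total = 0.0
    # Work backwards so each step is one multiply and one add.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [0.0, 0.0, 1.0]  # the only reward arrives two steps after the first
g = discounted_return(rewards, gamma=0.5)
undiscounted = discounted_return([1.0, 2.0, 3.0], gamma=1.0)
```

With `gamma=1.0` the result is the plain sum of rewards; smaller values make distant rewards count for less.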

(4) Model of the environment

The model predicts how the environment will change next, and therefore which state the agent will reach or which reward it will receive.
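A model of the environment can be as simple as a table of what has been observed so far. The count-based sketch below is one hypothetical form; `TabularModel`, `observe`, and `predict` are names invented for this example, and the state and action labels are placeholders.

```python
from collections import Counter, defaultdict


class TabularModel:
    """Hypothetical count-based model built from observed transitions and rewards."""

    def __init__(self):
        self.next_state_counts = defaultdict(Counter)
        self.reward_sums = defaultdict(float)
        self.visits = Counter()

    def observe(self, state, action, reward, next_state):
        """Record one transition the agent experienced."""
        key = (state, action)
        self.next_state_counts[key][next_state] += 1
        self.reward_sums[key] += reward
        self.visits[key] += 1

    def predict(self, state, action):
        """Return (most frequently seen next state, average reward), or None if unseen."""
        key = (state, action)
        if self.visits[key] == 0:
            return None
        likely_next = self.next_state_counts[key].most_common(1)[0][0]
        return likely_next, self.reward_sums[key] / self.visits[key]


model = TabularModel()
model.observe("s0", "right", 0.0, "s1")
model.observe("s0", "right", 1.0, "s1")
model.observe("s0", "right", 0.5, "s2")
prediction = model.predict("s0", "right")
```

Model-based methods use predictions of this kind to plan ahead, while model-free methods learn values or policies directly from experience.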

06

In summary, reinforcement learning is a sequential decision-making problem: the agent must keep selecting actions so as to reach the best outcome, the one with maximum reward. There are no labels telling the algorithm what to do. Instead, the agent first tries some actions, then receives a result, and uses the evaluation of whether that result was good or bad as feedback on its previous actions.
