Thanks to steadily improving embedded processors, the arrival of ultra-miniaturized hardware accelerators, and the emergence of both vendor-supplied and commercial development environments and tools, embedded artificial intelligence/machine learning (AI/ML) technologies have developed rapidly in recent years. Because these technologies map closely onto a wide variety of application needs, they are also entering a new phase of differentiated development. Their growth may eventually rival or even exceed that of cloud-based AI applications, which depend on abundant computing resources and good network connectivity.

Artificial intelligence is not a new term, but it has drawn wide public attention in recent years thanks to events such as Google’s AlphaGo defeating the human world champion at Go, which brought technologies such as convolutional neural networks, deep learning, and machine learning into the public eye. This also popularized the formula “artificial intelligence = data + algorithms + computing power”.

As a result, many people came to see artificial intelligence and machine learning as massive new algorithmic workloads running on Intel’s latest server processors or Nvidia’s GPU accelerators. AI training in particular has turned into a resource-intensive arms race and a high-barrier field, one that seemed to have little to do with traditional chip companies designing MCUs or SoCs.

However, it soon became clear that the solutions offered by leading AI companies cover not only complex functions such as traffic analysis for autonomous driving, natural language processing, rapid medical image recognition, and high-frequency financial trading, but also a far larger number of lightweight applications such as license plate recognition, wake-word detection in smart speakers, portable health monitors, face-recognition unlock, and smart home security.

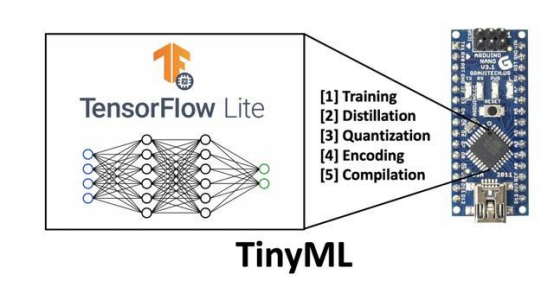

Driven by strong market demand, a steady stream of low-power, cost-effective embedded AI/ML solutions has appeared. This was helped by the launch of Google’s open-source TensorFlow Lite framework for embedded machine learning and similar products, and by the commercial deployment of hardware accelerators such as Imagination’s PowerVR Neural Network Accelerator (NNA) in mobile and embedded devices.

Based on its analysis, Beijing Huaxing Wanbang Management Consulting Co., Ltd. believes that the broad rise of embedded AI/ML has brought a new model that differs from the traditional AI development paradigm built around “artificial intelligence = data + algorithms + computing power”. For embedded AI/ML that targets specific applications and functions, the focus has shifted to “ecology + integration + customization”. The sections below examine three aspects: integration into the Internet of Things ecosystem, integration of hardware with commercial development tools, and customized processors based on RISC-V.

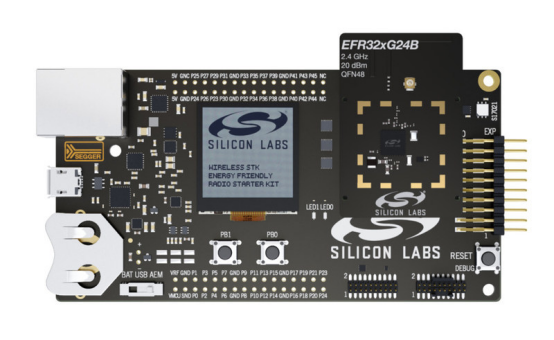

Silicon Labs (known in China as “芯科科技”) is a global leader in IoT chips, software, and solutions. It is known in the industry for supporting one of the most comprehensive sets of IoT communication protocols and for strong product performance, and its customers include leading companies in the smart home, smart city, industrial and commercial, smart healthcare, and energy sectors.

Earlier this year, the company launched its BG24 and MG24 families of 2.4 GHz wireless SoCs. They support the new Matter IoT protocol as well as Bluetooth and multiprotocol operation, and they bring AI/ML capabilities, high-performance wireless connectivity, and IoT ecosystem support to battery-powered edge devices and applications.

The BG24 and MG24 wireless SoCs combine ecosystem support, functionality, and technology at the leading edge of the industry, including multiprotocol wireless, long battery life (low power consumption), machine learning, and security for IoT edge applications. Silicon Labs provides a new software toolkit for them that lets developers quickly build and deploy AI/ML algorithms with popular tools such as TensorFlow.

First, to deliver AI/ML compute, the BG24 and MG24 families integrate a dedicated AI/ML hardware accelerator that helps developers deploy artificial intelligence and machine learning functions while addressing the power-consumption challenge. This dedicated hardware is designed to handle complex calculations quickly and efficiently; internal tests show up to 4x higher performance and up to 6x better energy efficiency. Because the machine learning computation runs on the local device rather than in the cloud, network latency is eliminated, so decisions and actions happen faster.
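The two “up to” figures can be related with a short calculation. Assuming, for illustration only, that both figures apply to the same inference workload and the same baseline (the announcement as summarized here does not say so), write the energy of one inference as average power $P$ times execution time $t$:

$$
E = P\,t,\qquad t_{\text{acc}} = \frac{t}{4},\qquad E_{\text{acc}} = \frac{E}{6}
$$

$$
P_{\text{acc}} = \frac{E_{\text{acc}}}{t_{\text{acc}}} = \frac{E/6}{t/4} = \frac{4}{6}\cdot\frac{E}{t} = \frac{2}{3}\,P
$$

Under that assumption, the accelerated inference finishes in a quarter of the time while drawing about two-thirds of the baseline average power, and the product of the two gives the sixfold reduction in energy per inference.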

Additionally, the BG24 and MG24 families have the largest flash and RAM capacities in the Silicon Labs portfolio, leaving room for multiprotocol support, Matter, and ML models trained on large datasets. The chips also feature PSA Certified Level 3 Secure Vault™ IoT security technology, which provides the high level of protection required by security-sensitive products such as door locks and medical devices.

IAR Systems is a global leader in embedded development software and services, and its IAR Embedded Workbench® toolchain is widely used around the world. IAR Systems’ development tools support Alif Semiconductor™’s highly integrated Ensemble™ and Crescendo™ families, AI-capable, power-efficient microcontrollers (MCUs) and fusion processors aimed at the next generation of connected embedded applications.

Integrating more functions on a single chip is one direction in which embedded AI/ML is developing. Alif Semiconductor’s power-efficient product lines offer up to four processing cores, plus AI/ML acceleration, multi-layer security, integrated LTE Cat-M1 and NB-IoT connectivity, and global navigation satellite system (GNSS) positioning, which greatly broadens their range of applications.

Making full use of these functions calls for proven compiler technology, such as IAR Systems’ Arm development tools, that optimizes both code size and speed and also offers high-performance debugging, giving companies a solid development platform.

In November 2021, IAR Systems announced that the latest version of IAR Embedded Workbench for Arm® added support for the Arm Cortex®-M55, an AI-capable Cortex-M processor that brings energy-efficient digital signal processing (DSP) and machine learning capabilities.

This collaboration lets developers of Ensemble and Crescendo applications use the IAR Embedded Workbench® for Arm toolchain for high performance and strong code optimization, unlocking the devices’ full AI/ML potential while keeping energy consumption as low as possible.

Diverse requirements are a hallmark of embedded applications, and MCU suppliers have long met customers’ individual needs by offering different combinations of processor cores and peripherals. The rise of RISC-V has started a new trend toward custom processors, which will continue to extend into embedded AI/ML and is gaining support from leading industry players.

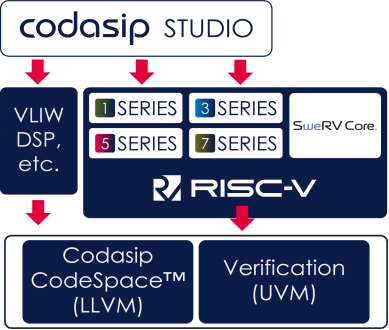

Codasip is a leading provider of RISC-V processor IP and advanced processor design tools, offering IC designers all the advantages of the open RISC-V ISA along with unique capabilities for customizing processor IP. In February this year, Codasip launched two new low-power embedded RISC-V processor cores, the L31 and L11, optimized for customization.

Starting from these cores, customers can use the Codasip Studio tools to tailor processor designs for demanding workloads such as neural networks and other AI/ML tasks, as well as for ultra-small, power-constrained applications such as IoT edge computing. Core customization is the cornerstone of Codasip’s success, and more than 2 billion processors worldwide currently use Codasip IP.

The Codasip L31 and L11 embedded cores run Google’s TensorFlow Lite for Microcontrollers (TFLite Micro), and when customized with Codasip Studio they form a new class of embedded AI cores that deliver enough performance for compute-intensive AI/ML workloads on resource-constrained embedded systems. This matters because device requirements vary enormously from one application to another, and existing off-the-shelf processors do not handle AI/ML workloads well.

Codasip promotes a “create differentiated designs” approach: customers use its Studio tools to tailor processors to their specific system, software, and application requirements. Combining TFLite Micro, RISC-V custom instructions, and Codasip’s processor design tools can bring low latency, high security, fast communication, and low power consumption to efficient neural network processing at the embedded edge.
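To make the idea of accelerating neural network code with custom instructions more concrete, the sketch below shows the kind of int8 multiply-accumulate loop that accounts for much of the work in quantized inference, in the style of frameworks such as TFLite Micro, where inputs and weights carry zero-point offsets. It is a minimal, hypothetical illustration: the function name `dot_s8` and its parameters are assumptions, not TFLite Micro or Codasip APIs. A custom RISC-V instruction would typically target the loop body, for example by performing several 8-bit multiply-accumulates per instruction.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical reference kernel (not a real TFLite Micro or Codasip API):
 * int8 dot product with zero-point offsets, the inner loop of a quantized
 * fully connected or convolution layer. */
int32_t dot_s8(const int8_t *input, const int8_t *weights, size_t n,
               int32_t input_offset, int32_t weight_offset)
{
    int32_t acc = 0; /* 32-bit accumulator, as is usual for int8 MACs */

    for (size_t i = 0; i < n; ++i) {
        /* One multiply-accumulate per element. This is the operation a
         * custom instruction (for example a packed 4 x int8 MAC) could
         * replace, handling several elements per instruction. */
        acc += ((int32_t)input[i] + input_offset) *
               ((int32_t)weights[i] + weight_offset);
    }
    return acc;
}
```

For instance, with inputs {1, 2, 3}, weights {4, 5, 6}, and both offsets set to 0, `dot_s8` returns 32. On a customized core, a portable C loop like this could remain as the fallback path while an optimized path uses the new instruction.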

As the industry develops, embedded AI/ML technologies and applications will keep evolving. Given the new “ecology + integration + customization” paradigm proposed by Huaxing Wanbang and the steady stream of new edge applications, several technologies deserve close attention in the future, such as new hardware accelerators and security technologies suited to edge applications.

For example, xPUs, which have spread widely and attracted great attention in recent years, will move from the cloud into embedded applications. In some scenarios, hardware must be reprogrammable to keep up with evolving algorithms and standards, so IP such as Achronix’s Speedcore embedded FPGA (eFPGA) will also move from the server and data center market into embedded AI/ML applications, advancing heterogeneous computing models that combine different hardware accelerators.

As a firm providing industry research and marketing services to the global semiconductor ecosystem, Huaxing Wanbang has always treated recruiting and developing outstanding talent as a company strategy and welcomes contact from industry professionals. The company is actively seeking people with an electronics industry or technology background who have deep insight and can communicate their vision to the industry and society in a variety of ways. It can also offer partnership and cooperation opportunities to people with special expertise or client resources. Those interested can send their expectations and résumés to: [email protected]


If you wish to learn more about Beijing Huaxing Wanbang Management Consulting Co., Ltd., please follow and read the “Huaxing Wanbang Technology Economics” WeChat public account.