1. Machine Vision Hardware Can Collect Environmental Information

Commonly used visual sensors include cameras, ToF cameras, and LiDAR.

Machine vision cameras. A machine vision camera captures the image that its lens projects onto the sensor and transmits it to a machine that can store, analyze, and/or display it. A simple terminal is enough to display the images, while a computer system can display, store, and analyze them.

LiDAR technology. LiDAR is a scanning sensor based on non-contact laser ranging. It works much like a conventional radar: it emits laser beams toward targets and collects the reflected beams to form a point cloud. After optoelectronic processing, this data can be turned into accurate three-dimensional images. LiDAR can capture high-precision information about the physical environment, with ranging accuracy at the centimeter level.
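To illustrate how individual range returns become a point cloud, here is a minimal Python sketch. It assumes a pulsed time-of-flight LiDAR that scans in a single plane and reports one range per beam angle; the function names and the 30 m cutoff are hypothetical choices, not any particular sensor's interface. A 3D LiDAR adds an elevation angle per beam, and the same polar-to-Cartesian conversion extends to (x, y, z).

```python
import math
from typing import List, Sequence, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def range_from_round_trip(t_round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path length."""
    return SPEED_OF_LIGHT * t_round_trip_s / 2.0

def scan_to_points(
    angles_rad: Sequence[float],
    ranges_m: Sequence[float],
    max_range_m: float = 30.0,  # hypothetical sensor limit
) -> List[Tuple[float, float]]:
    """Convert one planar scan from polar (angle, range) to Cartesian (x, y) in the sensor frame.

    Returns that are non-positive or beyond max_range_m are treated as "no echo" and dropped.
    """
    points = []
    for theta, r in zip(angles_rad, ranges_m):
        if 0.0 < r <= max_range_m:
            points.append((r * math.cos(theta), r * math.sin(theta)))
    return points

if __name__ == "__main__":
    # A 20 ns round trip corresponds to a target roughly 3 m away.
    print(f"{range_from_round_trip(20e-9):.3f} m")
    # Three beams; the last one returned no echo (range 0).
    print(scan_to_points([0.0, math.pi / 2, math.pi], [1.0, 2.0, 0.0]))
```

Dropping invalid returns before conversion keeps "no echo" readings from appearing as spurious points at the sensor origin.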

ToF camera technology. ToF stands for Time of Flight. The sensor emits modulated near-infrared light, which is reflected when it hits an object. By measuring the time difference or phase difference between the emitted and reflected light, the sensor calculates the distance to the object and produces depth information. Combined with a conventional camera image, the object's three-dimensional outline can be presented as a topographic-style map in which different colors represent different distances.
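The phase-difference method can be made concrete with a small Python sketch, assuming a continuous-wave ToF sensor with a single modulation frequency. The 20 MHz frequency, the 0.5 to 4 m display range, and the color palette are illustrative assumptions. Because the phase wraps every full cycle, a single frequency has a limited unambiguous range; real sensors often combine several modulation frequencies to extend it.

```python
import math
from typing import Sequence

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def depth_from_phase(phase_shift_rad: float, mod_freq_hz: float) -> float:
    """Continuous-wave ToF depth from the measured phase shift.

    Round-trip time t = phase / (2*pi*f), and one-way depth d = c*t/2 = c*phase / (4*pi*f).
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * mod_freq_hz)

def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest depth before the phase wraps past 2*pi and distances alias: c / (2*f)."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)

def depth_to_color(
    depth_m: float,
    near_m: float = 0.5,
    far_m: float = 4.0,
    palette: Sequence[str] = ("red", "orange", "yellow", "green", "blue"),
) -> str:
    """Pseudo-color one depth value for a topographic-style view: near is warm, far is cool."""
    clamped = min(max(depth_m, near_m), far_m)
    fraction = (clamped - near_m) / (far_m - near_m)
    index = min(int(fraction * len(palette)), len(palette) - 1)
    return palette[index]

if __name__ == "__main__":
    f_mod = 20e6  # hypothetical 20 MHz modulation frequency
    print(f"unambiguous range: {unambiguous_range(f_mod):.2f} m")
    d = depth_from_phase(math.pi / 2, f_mod)  # quarter-cycle phase shift
    print(f"depth: {d:.3f} m -> {depth_to_color(d)}")
```

Applying `depth_to_color` to every pixel of a depth frame yields the color-coded distance map described above.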

2. AI Vision Algorithms Help Robots Recognize Their Surroundings

Vision technologies include facial recognition, object detection, visual question answering, image description, and visual embedded technologies.

Facial recognition technology: Face detection quickly locates faces in an image, returns the bounding box of each face, and accurately identifies various facial attributes. Face comparison extracts facial features, calculates the similarity between two faces, and returns a similarity percentage. Face search finds similar faces in a designated face database: given a photo, it compares the face against the N faces in the database and returns the most similar face or faces, together with the matching user information and matching score. This is known as 1:N face retrieval.
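The comparison and 1:N search steps can be sketched in Python as follows, assuming each face has already been converted into a feature vector (embedding) by some face-recognition model. The toy 3-dimensional vectors, the cosine-to-percentage mapping, and the 80 % match threshold are all hypothetical; production systems use much longer embeddings and thresholds tuned on real data.

```python
import math
from typing import Dict, List, Sequence, Tuple

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def compare_faces(a: Sequence[float], b: Sequence[float]) -> float:
    """1:1 comparison: map cosine similarity from [-1, 1] onto a 0-100 % score (hypothetical scale)."""
    return (cosine_similarity(a, b) + 1.0) / 2.0 * 100.0

def search_faces(
    query: Sequence[float],
    database: Dict[str, List[float]],
    top_k: int = 1,
    threshold: float = 80.0,  # hypothetical minimum score to count as a match
) -> List[Tuple[str, float]]:
    """1:N retrieval: score the query against all N enrolled faces and return the best matches."""
    scored = [(user_id, compare_faces(query, emb)) for user_id, emb in database.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(user_id, score) for user_id, score in scored[:top_k] if score >= threshold]

if __name__ == "__main__":
    # Toy "embeddings"; a real system would obtain these from a face-recognition model.
    db = {
        "alice": [0.9, 0.1, 0.0],
        "bob": [0.0, 1.0, 0.0],
        "carol": [0.1, 0.0, 0.9],
    }
    print(search_faces([0.8, 0.2, 0.1], db, top_k=2))
```

The linear scan over all N entries is the simplest form of 1:N search; large databases typically replace it with an approximate nearest-neighbor index.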

Object detection: Built on deep learning and trained on large-scale image datasets, object detection can accurately identify the category, location, and confidence level of each object in an image.
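A detector's raw output is usually a list of (category, bounding box, confidence) candidates that still needs filtering. The Python sketch below shows one common post-processing approach, confidence thresholding followed by per-class non-maximum suppression (NMS). It is a generic illustration, not the procedure of any specific detector; the thresholds and the hand-made detections are assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

@dataclass
class Detection:
    label: str         # object category
    box: Box           # object location
    confidence: float  # detector score in [0, 1]

def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def postprocess(
    detections: List[Detection],
    conf_threshold: float = 0.5,  # hypothetical cutoff
    iou_threshold: float = 0.5,   # hypothetical overlap limit
) -> List[Detection]:
    """Drop low-confidence boxes, then keep only the best box among same-class overlaps."""
    candidates = sorted(
        (d for d in detections if d.confidence >= conf_threshold),
        key=lambda d: d.confidence,
        reverse=True,
    )
    kept: List[Detection] = []
    for det in candidates:
        if all(k.label != det.label or iou(k.box, det.box) < iou_threshold for k in kept):
            kept.append(det)
    return kept

if __name__ == "__main__":
    raw = [
        Detection("shoe", (10, 10, 50, 50), 0.92),
        Detection("shoe", (12, 12, 52, 52), 0.85),     # duplicate of the first shoe
        Detection("sock", (100, 100, 140, 130), 0.70),
        Detection("sock", (200, 200, 220, 220), 0.30),  # below the confidence cutoff
    ]
    for d in postprocess(raw):
        print(d.label, d.box, d.confidence)
```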

Visual question answering: A visual question answering (VQA) system takes an image and a question about it as input and generates an answer in human-readable language.

Image description: The system must capture the semantic information in an image and generate human-readable sentences that describe it.

Visual embedded technologies: These include human detection and tracking, scene recognition, and similar functions.

3. SLAM Technology Grants Robots Better Planning and Mobility Capabilities

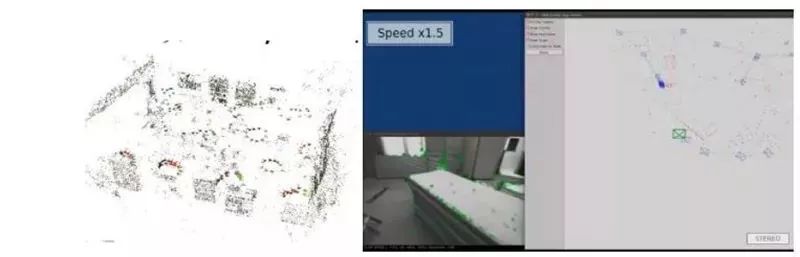

SLAM (Simultaneous Localization and Mapping) refers to determining a robot's location while building a map of its environment at the same time. SLAM theory deals with three problems: the first is localization, the second is mapping, and the third is the path planning that follows. Using machine vision mapping, a robot runs complex algorithms to localize itself and map its surroundings simultaneously. For cleaning robots, SLAM effectively solves problems such as poorly planned routes and paths that fail to cover every area, both of which lead to mediocre cleaning results.

▲SLAM Technology

Without SLAM, there is no map and no path planning, so when a cleaning robot encounters an obstacle it turns off in a random direction and cannot cover every area. With SLAM, it can plan a route that covers every reachable area. In addition, cleaning robots equipped with cameras can identify shoes, socks, pet waste, and other items and avoid them intelligently.
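The contrast between map-less bouncing and map-based coverage can be sketched on a small occupancy grid in Python. The sketch assumes SLAM has already produced the grid (0 = free floor, 1 = obstacle); the boustrophedon (lawn-mower) ordering and the random-bounce baseline are simplified illustrations chosen here, not the planner of any particular robot, and all names are hypothetical.

```python
import random
from typing import List, Tuple

Cell = Tuple[int, int]
Grid = List[List[int]]  # 0 = free floor, 1 = obstacle (assumed to come from SLAM)

def boustrophedon_order(grid: Grid) -> List[Cell]:
    """Map-based sweep: list every free cell row by row, alternating direction.

    Simplification: cells are only ordered; transit moves around obstacles between
    consecutive cells are not planned here.
    """
    path: List[Cell] = []
    for r, row in enumerate(grid):
        cols = range(len(row)) if r % 2 == 0 else range(len(row) - 1, -1, -1)
        path.extend((r, c) for c in cols if row[c] == 0)
    return path

def random_bounce_path(grid: Grid, start: Cell, steps: int, seed: int = 0) -> List[Cell]:
    """Map-less baseline: drive straight until blocked, then choose a random new heading."""
    rng = random.Random(seed)
    rows, cols = len(grid), len(grid[0])
    headings = [(0, 1), (1, 0), (0, -1), (-1, 0)]
    r, c = start
    dr, dc = rng.choice(headings)
    path = [start]
    for _ in range(steps):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
            r, c = nr, nc
            path.append((r, c))
        else:
            dr, dc = rng.choice(headings)  # "bump": pick a new direction at random
    return path

def coverage(path: List[Cell], grid: Grid) -> float:
    """Fraction of free cells visited at least once."""
    free = {(r, c) for r, row in enumerate(grid) for c, v in enumerate(row) if v == 0}
    return len(free & set(path)) / len(free) if free else 1.0

if __name__ == "__main__":
    grid = [
        [0, 0, 0, 0, 0],
        [0, 1, 1, 0, 0],
        [0, 0, 0, 0, 1],
        [0, 0, 0, 0, 0],
    ]
    print("sweep coverage:", coverage(boustrophedon_order(grid), grid))
    print("random-bounce coverage:", coverage(random_bounce_path(grid, (0, 0), steps=30), grid))
```

The sweep visits every free cell by construction because it reads them from the map, while the random-bounce robot's coverage depends on luck and on how many steps it is given.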

4. Ultra-Wideband Positioning Technology Based on ToF Machine Vision

In robotics, ToF technology is mainly used for high-precision ranging and positioning, and ultra-wideband (UWB) positioning is its most common application.

UWB (Ultra-Wideband) is a wireless communication technology used for high-precision ranging and positioning. UWB devices fall into two types: tags and base stations (anchors). The basic approach is to measure distances wirelessly with the ToF (Time of Flight) method and then quickly and accurately calculate positions from those ranges.
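The two steps, ToF ranging and then position calculation, can be sketched in Python. The sketch assumes single-sided two-way ranging (the tag's known reply delay is subtracted from the measured round trip) and a 2D least-squares trilateration over three or more base stations at known positions. Function names and anchor layout are hypothetical; real UWB systems often use double-sided two-way ranging or time-difference-of-arrival schemes to cancel clock drift.

```python
import math
from typing import List, Sequence, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def twr_distance(t_round_s: float, t_reply_s: float) -> float:
    """Single-sided two-way ranging: remove the tag's reply delay, leaving two flight times."""
    return SPEED_OF_LIGHT * (t_round_s - t_reply_s) / 2.0

def trilaterate(
    anchors: Sequence[Tuple[float, float]], distances: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares 2D tag position from >= 3 base-station ranges.

    Subtracting the first circle (x-x1)^2 + (y-y1)^2 = d1^2 from each other circle gives
    linear equations 2(xi-x1)x + 2(yi-y1)y = d1^2 - di^2 + xi^2 - x1^2 + yi^2 - y1^2.
    """
    (x1, y1), d1 = anchors[0], distances[0]
    rows: List[Tuple[float, float]] = []
    rhs: List[float] = []
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        rows.append((2.0 * (xi - x1), 2.0 * (yi - y1)))
        rhs.append(d1**2 - di**2 + xi**2 - x1**2 + yi**2 - y1**2)
    # Normal equations (A^T A) p = A^T b for the two unknowns (x, y).
    a11 = sum(r[0] * r[0] for r in rows)
    a12 = sum(r[0] * r[1] for r in rows)
    a22 = sum(r[1] * r[1] for r in rows)
    b1 = sum(r[0] * v for r, v in zip(rows, rhs))
    b2 = sum(r[1] * v for r, v in zip(rows, rhs))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("base stations are collinear; position is ambiguous")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det

if __name__ == "__main__":
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]  # hypothetical base stations (m)
    true_tag = (3.0, 4.0)
    reply = 1e-6  # hypothetical 1 microsecond reply delay at the tag
    ranges = []
    for ax, ay in anchors:
        flight = math.hypot(true_tag[0] - ax, true_tag[1] - ay) / SPEED_OF_LIGHT
        ranges.append(twr_distance(reply + 2.0 * flight, reply))
    print(trilaterate(anchors, ranges))
```

Using least squares rather than an exact three-circle intersection lets extra base stations average out ranging noise.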

5. AI Natural Language Processing is an Important Technology for Human-Robot Interaction

Of the information humans acquire, 90% comes through vision, while 90% of human expression relies on language. Language is the most natural mode of human-robot interaction. However, natural language processing (NLP) is challenging because of differences in grammar, semantics, culture, and dialect, as well as non-standard language use. As NLP matures, voice interaction between humans and robots is becoming more convenient, pushing robots toward more “intelligent” capabilities.

Microphone array and speaker technology for robots is now relatively mature: with the rapid growth of smart speakers and voice assistants in recent years, microphone arrays and mini speakers have become widespread. In companion robots such as Iron Man, voice interaction with users relies on microphone arrays and speakers, which effectively makes these robots mobile “smart speakers” and expands the boundaries of the smart speaker form.

Chatbots can currently be divided into general-purpose chatbots and domain-specific chatbots. Advances in natural language processing will improve the interaction experience between robots and humans, making robots appear more “intelligent.”

6. AI Deep Learning Algorithms Help Robots Evolve Towards Self-Awareness

Hardware: Advances in AI chip technology have given robots more computing power. Following Moore’s Law, the number of transistors that fit on a given chip area keeps growing, which enables chip miniaturization and raises AI computing power. In addition, the emergence of heterogeneous chips, along with new architectures such as RISC-V, provides hardware support for further increases in AI computing power.

Algorithms: AI deep learning algorithms are the future of robotics. They give robots the ability to learn from input data. Whether future robots can possess self-awareness depends on the continued development of AI technology, and deep learning offers one possible path toward it. Through trained neural network models, some algorithms have already surpassed humans in narrow domains. The success of AlphaGo shows that AI can achieve self-learning within a single domain, and in some fields, such as Go, poker, and quiz competitions, AI systems can rival or even defeat humans.

AI deep learning algorithms give robots intelligent decision-making capabilities, freeing them from the earlier programming logic in which each input maps to one fixed output and making robots more “intelligent.” However, in “multimodal” perception robots still cannot match humans, especially for hard-to-quantify signals such as smell, taste, touch, and psychological states, for which no reasonable quantification methods have yet been found.

7. AI + 5G Expands Robots’ Activity Boundaries, Provides Greater Computing Power and More Storage Space, and Enables Knowledge Sharing

In the 4G era, mobile robots faced four major pain points:

1) Limited working range: Tasks can only be executed within a fixed area, and the maps robots build are hard to share, which makes it difficult to work in large-scale environments.

2) Limited business coverage: Computing power is limited and recognition performance needs improvement; robots can only detect problems, and rapid large-scale deployment is difficult.

3) Limited service provision: Robots handle complex business tasks poorly, their interaction capabilities need improvement, and deploying specialized services is inefficient.

4) High operation and maintenance costs: Deployment efficiency is low, because each scenario requires its own map construction, path planning, inspection-task setup, and so on.

These four pain points limited the adoption of mobile robots in the 4G era. Overall, robots still need more storage space and stronger computing power. The low latency, high data rates, and massive connectivity of 5G can address these pain points.

How 5G empowers mobile robots:

1) Expanding the working range of robots. The greatest contribution of 5G to robots is the expansion of their physical boundaries. 5G support for TSN (Time-Sensitive Networking) allows robots’ activity to extend from the home into every part of society. It is easy to imagine a future in which humans and robots coexist: in logistics, retail, inspection, security, firefighting, traffic management, and healthcare, 5G and AI together can empower robots to help humans build smart cities.

2) Providing greater computing power and more storage space, and enabling knowledge sharing. By promoting cloud robotics, 5G gives robots greater computing power and more storage space: computing resources can be allocated flexibly to support simultaneous localization and mapping in complex environments; robots can access large databases for object recognition and retrieval; and they can achieve long-term localization using maps stored off-board. 5G also enables knowledge sharing among multiple robots.