Editor’s Note: Registration is open for the Strata Data Conference, held by O’Reilly in Beijing from July 12-15, 2017.

Use Amazon Echo and Raspberry Pi to make your own doorbell: identify thousands of visitors at your doorstep for just a few cents a month.

Recently, while preparing to install a doorbell in my new house, I thought: why not let my doorbell tell me who is at the door?

The cost of most DIY projects I undertake tends to be higher than other equivalent products, even when I value my time at $0 per hour. I think this might be related to supply chain and economies of scale. However, I derive more enjoyment from the process of creating these things myself. In this project, I built a door camera that not only costs less than my Dropcam but also has some genuinely useful features that I have not seen on the market for some reason.

Figure 1: My front door has a doorbell, an August smart lock, and a Raspberry Pi for face recognition. Image courtesy of Lukas Biewald.

We will build a Raspberry Pi-based security camera device costing $60 to capture photos and upload them to the cloud for face recognition. You can also upload the data stream to Amazon S3, making it a complete Dropcam replacement. Nest charges $100 per year to save the last 10 days of video, but you can keep a year’s worth of video files in S3 for about $20. If you use Amazon Glacier, this cost can drop to around $4.

Using Amazon Rekognition for Machine Learning

This tutorial will focus on the machine learning aspect—using Amazon’s new Rekognition service to recognize your visitors’ faces and then send the recognition results to your Amazon Echo, so you always know who is at your door. To build a reliable service, we will also use one of Amazon’s coolest and most useful products: Lambda.

Components:

Amazon Echo Dot ($50)

Raspberry Pi 3 ($38) (This project can also use a Raspberry Pi 2 with a wireless USB adapter)

Raspberry Pi compatible camera ($16)

Raspberry Pi case ($6)

16GB SD card ($8)

Total: $118

We will use Amazon’s S3, Lambda, and Rekognition services for face matching. These services start free, and afterwards, you only spend a few cents a month to recognize thousands of visitors at your door.

Setting Up the Raspberry Pi System

If you have completed any of my Raspberry Pi tutorials, you will be familiar with most of the content in this tutorial.

First, download Noobs from the Raspberry Pi Foundation and follow the installation instructions. This mainly involves copying Noobs to the SD card, inserting the SD card into your board, then connecting the mouse, keyboard, and display to your board and following the installation instructions. These operations have become easier since the new desktop environment, Pixel, was launched.

Figure 2: My Raspberry Pi connected to a mini display and keyboard. Image courtesy of Lukas Biewald.

Next, name your Raspberry Pi system something memorable so you can SSH into it. There’s a great guide on howtogeek that explains how to modify the /etc/hosts and /etc/hostname files to name your Raspberry Pi system. I like to name all my security camera Raspberry Pis after characters from my favorite TV show, “It’s Always Sunny in Philadelphia,” so I named the front door camera “Dennis.” This means I can SSH into dennis.local without needing to remember an IP address, even if I reset the router.

Next, you should connect the camera to the Raspberry Pi board. Remember that the ribbon cable should face the Ethernet port—this is a mistake I might have Googled a hundred times. Note: If you want a wider field of view, you can buy a wide-angle camera; if you want to add night vision, you can buy an infrared camera.

Figure 3: The Raspberry Pi with camera and case ready for installation. Image courtesy of Lukas Biewald.

You may also want to put the whole device inside a protective case to protect it from the weather. You will also need to connect the Raspberry Pi to power via a micro USB cable. (I have drilled a small hole in the wall to connect my Dropcam to the indoor power outlet, so I already have a USB cable in the right place.)

So far, I have installed several such devices around the house. The camera’s ribbon cable is thin, allowing you to install the Raspberry Pi inside the room and run the cable through the door as I have done in my lab (garage).

Figure 4: The camera on the Raspberry Pi protruding from my garage door. Image courtesy of Lukas Biewald.

Next, you need to install the RPi-Cam-Web interface. This is very useful software that provides a continuous data stream from the camera via HTTP. Please follow the installation instructions and choose NGINX as the web server. There is a very useful configuration file in /etc/raspimjpeg that can be used to configure many options.

Configuring Amazon S3 and Amazon Rekognition

If you haven’t created an AWS account yet, you need to do so now. You should first create an IAM user and allow that user to access S3, Rekognition, and Lambda (which we will use later).

Install the AWS command line interface:

sudo apt install awscli

Run aws configure on the Raspberry Pi to enter your IAM user’s credentials, and set the default region to US East (as of this writing, Rekognition is only available in this region).

Create a Rekognition face collection:

aws rekognition create-collection --collection-id friends

You can use the Unix shell script I wrote to quickly add your friends’ face images:

aws s3 cp $1 s3://doorcamera > output
aws rekognition index-faces \
  --image "{\"S3Object\":{\"Bucket\":\"doorcamera\",\"Name\":\"$1\"}}" \
  --collection-id "friends" --detection-attributes "ALL" \
  --external-image-id "$2"

Save it to a file as a shell script, or type the commands at the command line, replacing $1 with the local file name of your friend’s image and $2 with your friend’s name.
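If you would rather do the same thing from Python, the two commands above map onto two boto3 calls. What follows is only a minimal sketch, not the author's code: the function name index_friend is made up for illustration, the bucket and collection names are taken from the shell script, and the S3 and Rekognition clients are passed in as parameters so the function can be tried out without touching AWS.

```python
import os


def index_friend(s3, rekognition, image_path, name,
                 bucket="doorcamera", collection="friends"):
    """Upload one photo to S3 and index the face in it under `name`.

    `s3` and `rekognition` are assumed to be boto3 clients, e.g.
    boto3.client("s3") and boto3.client("rekognition").
    Returns the list of face IDs that Rekognition assigned.
    """
    # Use the bare file name as the S3 key, like the shell script does.
    key = os.path.basename(image_path)
    s3.upload_file(image_path, bucket, key)

    response = rekognition.index_faces(
        CollectionId=collection,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        ExternalImageId=name,          # the friend's name, returned on a match
        DetectionAttributes=["ALL"],   # also return gender, emotions, etc.
    )
    return [record["Face"]["FaceId"] for record in response["FaceRecords"]]
```

With real clients, a call would look like index_friend(boto3.client("s3"), boto3.client("rekognition"), "charlie.jpg", "charlie").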

Amazon’s Rekognition service uses machine learning to measure distances between points on a face image and then uses these points to match against face images in its index. So you can get good results by training the system with just one image of your friend.

Now you can use a similar script to test this face recognition system:

aws s3 cp $1 s3://doorcamera > output
aws rekognition search-faces-by-image --collection-id "friends" \
  --image "{\"S3Object\":{\"Bucket\":\"doorcamera\",\"Name\":\"$1\"}}"

You will receive a large JSON file in return, which includes not only the matching results but also other aspects of the image, including gender, emotion, facial hair, and a bunch of other interesting things.

{
    "FaceRecords": [
        {
            "FaceDetail": {
                "Confidence": 99.99991607666016,
                "Eyeglasses": {
                    "Confidence": 99.99878692626953,
                    "Value": false
                },
                "Sunglasses": {
                    …
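To give a feel for working with this JSON, here is a small sketch that reduces one FaceDetail entry to the few attributes this project cares about. It is not the parsing code from the author's repository. The helper name summarize_face_detail is hypothetical, and it assumes the usual Rekognition layout in which Gender, Eyeglasses, and Beard each carry a Value field and Emotions is a list of Type/Confidence pairs.

```python
def summarize_face_detail(face_detail):
    """Pick gender, strongest emotion, and a few flags out of a FaceDetail dict."""
    emotions = face_detail.get("Emotions", [])
    if emotions:
        # Rekognition reports several candidate emotions; keep the most confident.
        emotion = max(emotions, key=lambda e: e["Confidence"])["Type"]
    else:
        emotion = ""
    return {
        "gender": face_detail.get("Gender", {}).get("Value", ""),
        "emotion": emotion,
        "eyeglasses": face_detail.get("Eyeglasses", {}).get("Value", False),
        "beard": face_detail.get("Beard", {}).get("Value", False),
    }


# Made-up sample values, shaped like the response excerpt above.
sample = {
    "Gender": {"Value": "Male", "Confidence": 99.9},
    "Emotions": [
        {"Type": "CALM", "Confidence": 10.0},
        {"Type": "HAPPY", "Confidence": 85.0},
    ],
    "Eyeglasses": {"Value": False, "Confidence": 99.9},
    "Beard": {"Value": True, "Confidence": 90.0},
}
summary = summarize_face_detail(sample)
```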

Next, we can write a Python script to grab an image from our Raspberry Pi camera and check it for faces. I actually used a second Raspberry Pi for this, but running it on the same machine is easier: the RPi-Cam-Web interface keeps the camera’s latest frame in /dev/shm/mjpeg/cam.jpg, so the script only needs to read that file.
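One way such a script could look is sketched below. It differs from the shell script in one respect that should be stated plainly: instead of uploading the frame to S3 first, it sends the image bytes directly to Rekognition, which the search_faces_by_image call also accepts. The function name who_is_it and the 80 percent similarity threshold are assumptions for illustration, and the Rekognition client is passed in so the logic can be exercised without AWS. Note that the real service raises an error when the image contains no face at all, which production code would need to catch.

```python
def who_is_it(rekognition, image_bytes, collection="friends", threshold=80.0):
    """Return the name of the best-matching friend, or None if nobody matches.

    `rekognition` is assumed to be a boto3 Rekognition client.
    """
    response = rekognition.search_faces_by_image(
        CollectionId=collection,
        Image={"Bytes": image_bytes},
        MaxFaces=1,                      # only the single best match is needed
        FaceMatchThreshold=threshold,    # ignore matches below this similarity
    )
    matches = response.get("FaceMatches", [])
    if not matches:
        return None
    best = max(matches, key=lambda m: m["Similarity"])
    # ExternalImageId is the name supplied when the face was indexed.
    return best["Face"].get("ExternalImageId")


def read_latest_frame(path="/dev/shm/mjpeg/cam.jpg"):
    """Read the most recent camera frame written by the RPi-Cam-Web interface."""
    with open(path, "rb") as f:
        return f.read()
```

On the Raspberry Pi this would be called as who_is_it(boto3.client("rekognition"), read_latest_frame()).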

Whichever way, we need to expose this interface functionality in the web server for later use. I used Flask as my web server.

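from flask import Flask, request
import cameras as c

app = Flask(__name__)

@app.route('/faces/<camera>')
def face_camera(camera):
    # Look up the named camera and return its results as a comma-separated string.
    data = c.face_camera(camera)
    return ",".join(data)

if __name__ == '__main__':
    # Listen on all interfaces so other machines can reach the server.
    app.run(host='0.0.0.0', port=5000)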

I have put the parsing code (and all other code mentioned in this article) on github.com/lukas/facerec.

If you have made it this far, you have something very interesting to play with. I found Amazon’s service excellent at recognizing my friends. The only part that seemed a bit troublesome was recognizing my emotions (though that might be more my issue than Amazon’s).

In fact, I sewed one of the Raspberry Pi cameras into a plush toy and set up a very creepy face-recognizing teddy bear sentry on my desk.

Figure 5: The face-recognizing teddy bear Freya. Image courtesy of Lukas Biewald.

Using the Face Recognition Camera with Amazon Echo

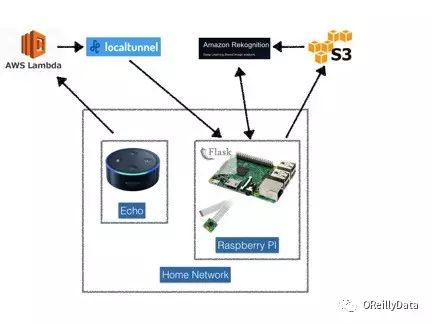

Amazon’s Echo makes high-quality voice commands very easy and provides a perfect interface for this type of project. Unfortunately, the best way to use Echo is to have it communicate directly with a stable web service, but we want to place the Raspberry Pi camera behind the router’s firewall on a local network—this makes configuration a bit tricky.

We will connect the Echo to an AWS Lambda service, which will communicate with our Raspberry Pi system through an SSH tunnel. This may be a bit complex, but it’s the simplest way.

Figure 6: Architecture diagram. Image courtesy of Lukas Biewald.

Exposing the HTTP Face Recognition API via SSH Tunnel

So far, we have built a small web application for face recognition, and we need to make it accessible to the outside world. As long as we have a web server somewhere, we can configure an SSH tunnel. However, there is a sweet little application called localtunnel that does all this for us, and you can easily install it:

npm install -g localtunnel

I like to wrap it in a small script that restarts it whenever it crashes. Change MYDOMAIN to something meaningful to you:

until lt --port 5000 -s MYDOMAIN; do
    echo 'lt crashed... respawning...'
    sleep 1
done

Now you can ping your server by visiting http://MYDOMAIN.localtunnel.me.

Creating an Alexa Skill

To use our Echo, we need to create a new Alexa Skill. Amazon has a great getting started guide, or you can go directly to the Alexa developer portal.

First, we need to set an intent:

{
  "intents": [
    {
      "intent": "PersonCameraIntent",
      "slots": [
        {
          "name": "camera",
          "type": "LIST_OF_CAMERAS"
        }
      ]
    }
  ]
}

Then we give Alexa some sample utterances:

PersonCameraIntent tell me who {camera} is seeing

PersonCameraIntent who is {camera} seeing

PersonCameraIntent who is {camera} looking at

PersonCameraIntent who does {camera} see

Next, we need to provide Alexa with an endpoint, for which we will use a Lambda function.

Configuring a Lambda Function

If you have never used a Lambda function, you are in for a treat! Lambda functions are a simple way to put a single function behind an API on Amazon’s servers, and you only pay when it is called.

An Alexa Skill is a perfect use case for Lambda functions, so Amazon already provides a template for Alexa Skills. When Alexa matches one of the PersonCameraIntent utterances we listed, it will call our Lambda function. Change MYDOMAIN to the domain name used in your local tunnel script, and everything should run smoothly.

You can also use other interesting features in the metadata sent by Amazon Rekognition. For example, it can guess facial expressions, so I use it to determine whether a visitor at my door is happy or angry. You can also have Echo tell you whether the visitor has a beard, is wearing sunglasses, and other features:

import socket
from urllib.error import URLError
from urllib.request import urlopen

# build_response and build_speechlet_response come from Amazon's Alexa Skill template.

def face_camera(intent, session):
    card_title = "Face Camera"
    if 'camera' in intent['slots']:
        robot = intent['slots']['camera']['value']
        try:
            # Ask the Raspberry Pi (through the tunnel) what this camera sees.
            response = urlopen('http://MYDOMAIN.localtunnel.me/faces/%s' % robot)
            data = response.read().decode('utf-8')
            if data == "Not Found":
                speech_output = "%s didn't see a face" % robot
            else:
                person, gender, emotion = data.split(",")
                if person == "" or person is None:
                    speech_output = "%s didn't recognize the person, but " % robot
                else:
                    speech_output = "%s recognized %s and " % (robot, person)
                if gender == "Male":
                    speech_output += "he "
                else:
                    speech_output += "she "
                speech_output += "seems %s" % emotion.lower()
        except URLError as e:
            speech_output = "Strange, I couldn't wake up %s" % robot
        except socket.timeout as e:
            speech_output = "The Optics Lab Timed out"
    else:
        speech_output = "I don't know what robot you're talking about"
    should_end_session = False
    return build_response({}, build_speechlet_response(
        card_title, speech_output, None, should_end_session))
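The face_camera function above relies on two helpers and an entry point that Amazon's template supplies. For readers who want to see roughly what surrounds it, here is a sketch written from the shape of Alexa's JSON request and response format, not copied from the template. The demo_intent handler and the INTENT_HANDLERS table are hypothetical stand-ins; in the real skill you would register face_camera under "PersonCameraIntent" instead.

```python
def build_speechlet_response(title, output, reprompt_text, should_end_session):
    """Wrap the spoken text in the structure Alexa expects."""
    return {
        "outputSpeech": {"type": "PlainText", "text": output},
        "card": {"type": "Simple", "title": title, "content": output},
        "reprompt": {"outputSpeech": {"type": "PlainText", "text": reprompt_text}},
        "shouldEndSession": should_end_session,
    }


def build_response(session_attributes, speechlet_response):
    """Build the top-level response object returned from the Lambda function."""
    return {
        "version": "1.0",
        "sessionAttributes": session_attributes,
        "response": speechlet_response,
    }


def demo_intent(intent, session):
    # Hypothetical stand-in for face_camera: just echoes the camera slot.
    camera = intent["slots"]["camera"].get("value", "unknown")
    return build_response({}, build_speechlet_response(
        "Face Camera", "You asked about %s" % camera, None, False))


# Maps intent names from the intent schema to handler functions.
INTENT_HANDLERS = {"PersonCameraIntent": demo_intent}


def lambda_handler(event, context):
    """Entry point that AWS Lambda calls with the Alexa request."""
    request = event["request"]
    if request["type"] == "IntentRequest":
        handler = INTENT_HANDLERS.get(request["intent"]["name"])
        if handler is not None:
            return handler(request["intent"], event.get("session", {}))
    # Launch requests and unknown intents get a short prompt instead.
    return build_response({}, build_speechlet_response(
        "Face Camera", "Ask me who a camera is seeing.", None, False))
```

Keeping the intent-to-function mapping in a dictionary makes it easy to add more intents later without touching the dispatcher.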

When you talk to Alexa, it parses your speech to find an intent and runs the Lambda function. This function calls an external server, which forwards the request to your Raspberry Pi through the tunnel. The Raspberry Pi grabs an image from the camera, uploads it to S3, has Rekognition run deep learning inference to match it against your friends’ face images, and sends the parsed results back to your Echo, which then speaks them to you. Despite all these steps, the entire process happens very quickly! To see it for yourself, check out my video.

You can apply or extend the same technique in many cool ways. For example, I have put the code from my “Raspberry Pi/TensorFlow project” onto my robots. Now they can talk to me and tell me what they are looking at. I am also considering using this GitHub project to connect the Raspberry Pi to my August lock, so my door will automatically open for my friends or lock automatically for an angry visitor at the door.

This article originally appeared in English: “Build a talking, face-recognizing doorbell for about $100”.

Lukas Biewald

Lukas Biewald is the founder and CEO of CrowdFlower. Founded in 2009, CrowdFlower is a data enrichment platform that helps businesses obtain on-demand human labor to collect, generate training data, and participate in the human-machine learning loop. After earning a Bachelor’s degree in Mathematics and a Master’s degree in Computer Science from Stanford University, Lukas led the search relevance team at Yahoo Japan. He then went to Powerset, working as a senior data scientist. Powerset was acquired by Microsoft in 2008. Lukas was also named one of Forbes’ 30 Under 30. He is also an expert-level Go player.
