Imagine a scenario: ChatGPT, Bard, and Claude2 are gathered around a virtual round table, arguing fiercely over a math problem.

The ReConcile framework, proposed by a UNC team, simulates a round-table conference in which different LLM agents persuade one another, revise their answers, and reach a better consensus through confidence-weighted voting.

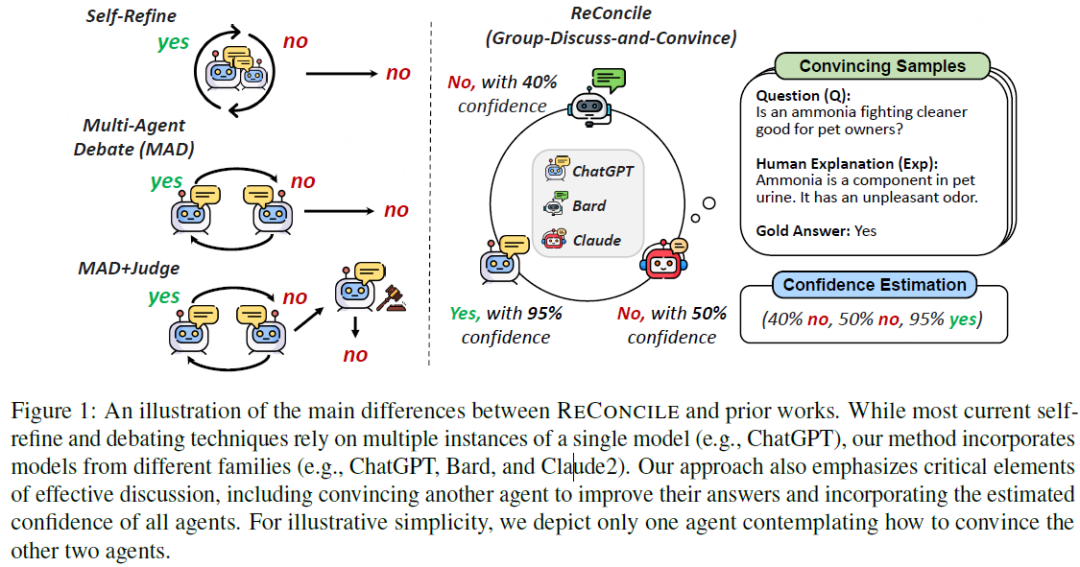

Traditional multi-agent debate (left) uses homogeneous models, which are easily limited by their inherent biases. ReConcile's innovation (right) is collaboration among heterogeneous models, which improves robustness through diverse inputs and weighted voting.

The core idea of ReConcile

- Diversity-driven: Integrating models of different architectures (such as ChatGPT, Bard, Claude) to complement knowledge blind spots.

- Dynamic discussion mechanism: Guiding agents to adjust answers through multiple rounds of interaction and human correction examples.

- Lightweight design: Requires only a few examples to enhance performance, adaptable to open-source and domain-specific models.

Collaborative discussion framework

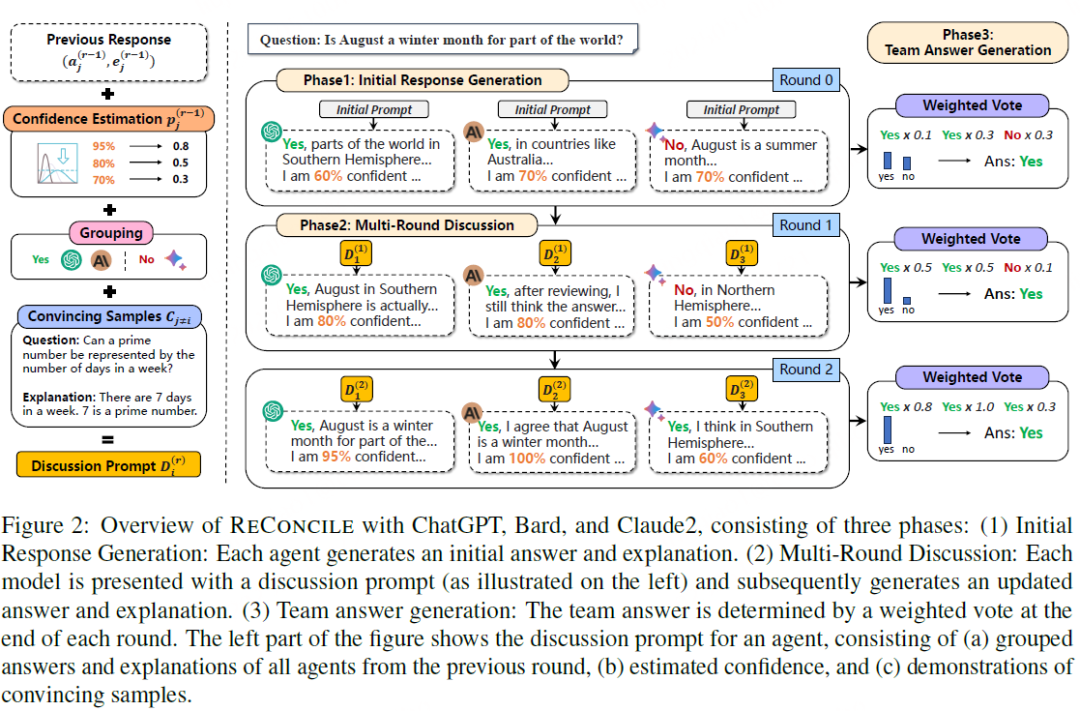

ReConcile operates in three stages: initial response generation, multi-round discussion, and team answer generation.

Stage 1: Initial response generation

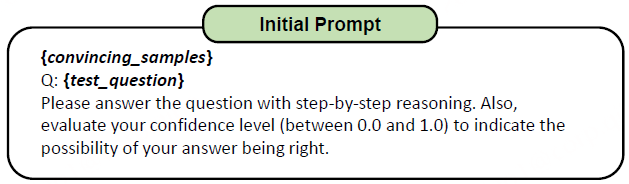

ReConcile first has each agent generate an initial answer and explanation and assign a confidence p ∈ [0, 1] (see the “Phase 1” section of Figure 2), with the following prompt:

Convincing samples resemble few-shot examples, with one difference: they include not only the question and answer but also the explanation behind the answer. The explanation in each convincing sample comes from human annotation and is meant to correct an erroneous answer, and each agent has its own set of convincing samples.

Stage 2: Multi-round discussion

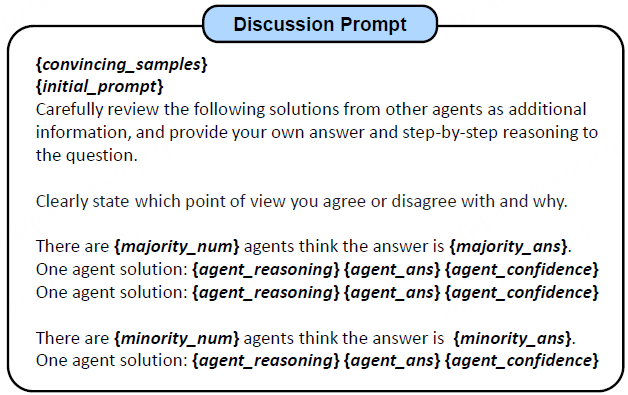

The discussion stage consists of R rounds. In the r-th round, each agent's prompt includes four parts: the answers, explanations, and confidence values from all agents in the previous round, plus convincing samples. Note that in the convincing samples part, each agent keeps only the convincing samples of the other agents and excludes its own. This is the opposite of Stage 1, where each agent sees only its own convincing samples.
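The sample-selection rule described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: the `Response` type, the function names, and the prompt layout are all hypothetical, and the actual prompt wording used by ReConcile is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One agent's output for a round (field names are illustrative)."""
    agent: str
    answer: str
    explanation: str
    confidence: float  # expected to lie in [0, 1]


def samples_for_round(agent, samples_by_agent, round_index):
    """Pick the convincing samples shown to `agent`.

    round_index 0 stands for the initial response stage, where the agent
    sees only its own samples. In any later (discussion) round it sees
    only the samples belonging to the other agents.
    """
    if round_index == 0:
        return list(samples_by_agent.get(agent, []))
    return [
        sample
        for other, samples in samples_by_agent.items()
        if other != agent
        for sample in samples
    ]


def build_discussion_prompt(agent, question, previous, samples_by_agent, round_index):
    """Assemble the four parts: answers, explanations, confidence, samples."""
    lines = [f"Question: {question}", "Responses from the previous round:"]
    for r in previous:
        lines.append(
            f"- {r.agent}: answer={r.answer}; "
            f"confidence={r.confidence:.2f}; explanation={r.explanation}"
        )
    lines.append("Convincing samples:")
    for sample in samples_for_round(agent, samples_by_agent, round_index):
        lines.append(f"- {sample}")
    return "\n".join(lines)
```

Keeping the selection rule in its own function makes the asymmetry between the two stages explicit and easy to check in isolation.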

Stage 3: Team answer generation

Stage 2 ends after a maximum of R rounds or when consensus is reached. Finally, a weighted voting scheme is used to generate the team answer for that round (see the far right of Figure 2).
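A minimal sketch of the stopping rule and the confidence-weighted vote is shown below. It assumes each agent's raw confidence is used directly as its vote weight; the paper may transform confidences before voting, and that step is not modeled here. The names `weighted_vote`, `has_consensus`, `discuss`, and the `step` callback are hypothetical.

```python
from collections import defaultdict


def weighted_vote(votes):
    """Return the answer with the largest total confidence.

    `votes` is a list of (answer, confidence) pairs, confidence in [0, 1].
    """
    if not votes:
        raise ValueError("need at least one vote")
    totals = defaultdict(float)
    for answer, confidence in votes:
        totals[answer] += confidence
    # max() returns the first maximal key, so ties go to the answer seen first.
    return max(totals, key=totals.get)


def has_consensus(votes):
    """True when every agent gives the same answer."""
    return len({answer for answer, _ in votes}) == 1


def discuss(step, initial_votes, max_rounds):
    """Run at most `max_rounds` discussion rounds, stopping early on consensus.

    `step` is a hypothetical callable that takes the previous round's votes
    and returns the next round's votes (in practice, one LLM call per agent).
    """
    votes = initial_votes
    for _ in range(max_rounds):
        if has_consensus(votes):
            break
        votes = step(votes)
    return weighted_vote(votes)
```

For example, with votes `[("A", 0.9), ("B", 0.5), ("B", 0.3)]` a plain majority vote would pick B, while the weighted vote picks A because its total confidence (0.9) exceeds B's (0.8).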

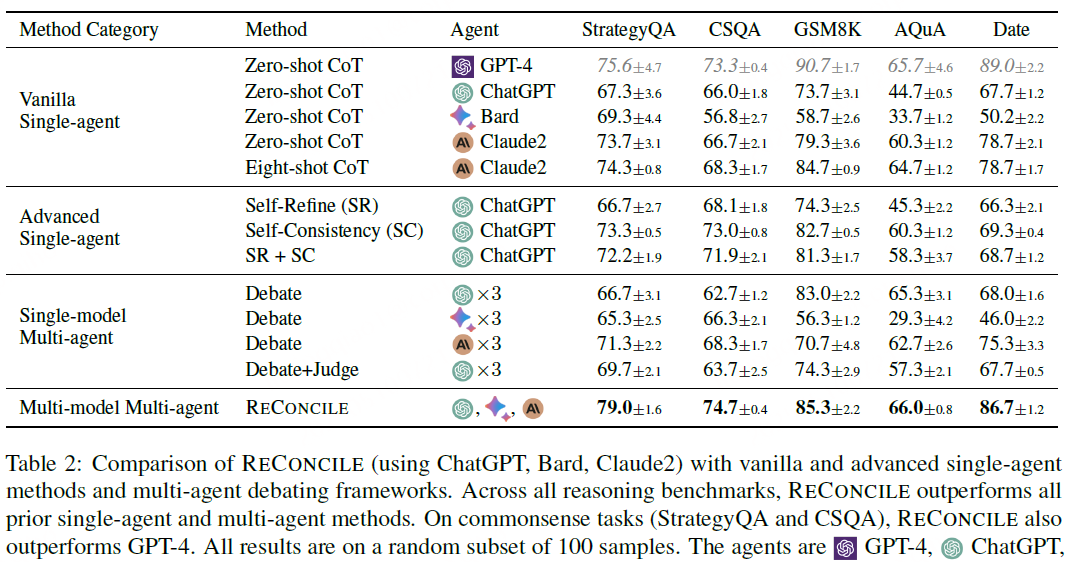

The reported experimental results are strong, with an average improvement of over 10 percentage points.

Summary

ReConcile optimizes answers through multiple rounds of discussion, in which each agent adjusts its view based on the explanations and confidence of the other agents. Essentially, it is a method for integrating the reasoning results of multiple large models. Key mechanisms:

- Grouped feedback: Organizing the previous round’s answers and explanations by category to avoid information redundancy.

- Confidence weighting: Each agent provides the confidence of its answer for subsequent voting (higher confidence opinions carry more weight).

- Convincing samples: Providing human correction examples to teach agents how to persuade others through explanations (e.g., correcting erroneous answers).
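As an illustration of grouped feedback, the sketch below groups the previous round's responses by answer so that each distinct answer appears once, followed by the explanations supporting it. The dictionary keys, the function name `group_feedback`, and the output layout are assumptions made for this example, not the format used in the paper.

```python
from collections import defaultdict


def group_feedback(responses):
    """Group responses by answer and render them as text.

    `responses` is a list of dicts with the (hypothetical) keys
    'agent', 'answer', 'explanation', and 'confidence'.
    """
    groups = defaultdict(list)
    for r in responses:
        groups[r["answer"]].append(r)

    lines = []
    # Dicts preserve insertion order, so answers appear in first-seen order.
    for answer, members in groups.items():
        lines.append(f"Answer {answer} ({len(members)} agent(s)):")
        for r in members:
            lines.append(
                f"  {r['agent']} (confidence {r['confidence']:.2f}): {r['explanation']}"
            )
    return "\n".join(lines)
```

When several agents agree, their shared answer is stated once, which keeps the next round's prompt shorter than listing every response independently.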

References

RECONCILE: Round-Table Conference Improves Reasoning via Consensus among Diverse LLMs