Efficient ML Systems: TinyChat and Edge AI 2.0

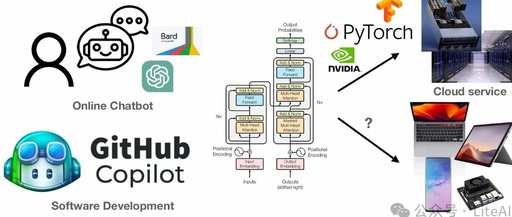

Hi everyone, I am Lite. I recently shared Parts 1 to 19 of the Efficient Large Model Full Stack Technology series, which covers large model quantization and fine-tuning, efficient LLM inference, quantum computing, generative AI acceleration, and more. The content links are as follows: Efficient Large Model Full …