At CES Asia, Dr. Huang Chang, co-founder and vice president of Horizon, was recently invited to give a keynote titled “Trends in Edge AI Computing” at the main forum. In the one-hour speech, Dr. Huang shared his view of edge computing trends in the AI era: the industry changes driven by edge computing, AI efficiency and corporate responsibility, the definition of effective computing power, and Horizon’s open empowerment strategy and developer platform. Together these explain Horizon’s strategy of “AI on Horizon, Journey Together.” Dr. Huang stated that Horizon is willing to be a platform company, serving as a technology foundation that supports customers and walks alongside them to create customer value.

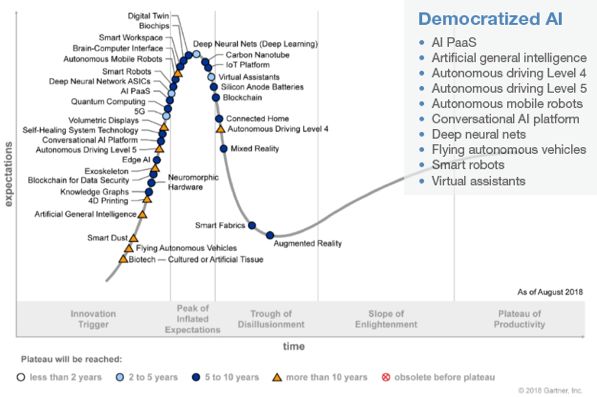

According to Gartner’s technology maturity curve, the era of AI democratization has begun, creating new value across industries. What challenges will we face along the way? As AI is commercialized, the most significant challenge is the exponential growth of data volume, and that data has two defining requirements: real-time processing and security. The International Data Corporation (IDC) white paper “Data Era 2025” predicts that by 2025 over 25% of data will be real-time data, 95% of it generated by IoT terminals; the vast majority will not create value directly and will need computation to extract it. Furthermore, 20% of global data in 2025 will directly affect people’s daily lives and even their safety.

On the 2018 Gartner technology maturity curve, multiple AI technologies and applications appeared, and Gartner explicitly proposed the idea of AI democratization for the first time, which means we will face more severe data challenges.

This presents a severe challenge for data computation: how can we efficiently process massive amounts of data and extract their value, keep processing latency at the level of seconds or even milliseconds, and protect data privacy at the same time? With the commercial launch of 5G, terminal access networks have expanded greatly, raising the requirements for real-time processing. Because expanding the backbone network is costly and lags behind, data piles up on the edge side like water behind a dam, making edge computing imperative. Intel has stated that MEC (Multi-Access Edge Computing) does not necessarily require 5G, but 5G certainly requires MEC. Only with the support of edge computing can the commercial value of 5G truly be realized.

To address the challenges faced by IoT data computation, edge computing offers five major advantages: 1. High reliability, capable of normal operation even in offline states; 2. Security and compliance, meeting privacy requirements; 3. Reduced data transmission and storage costs; 4. High real-time performance, minimizing response delays; 5. Flexible deployment of computing devices, enabling efficient collaboration.

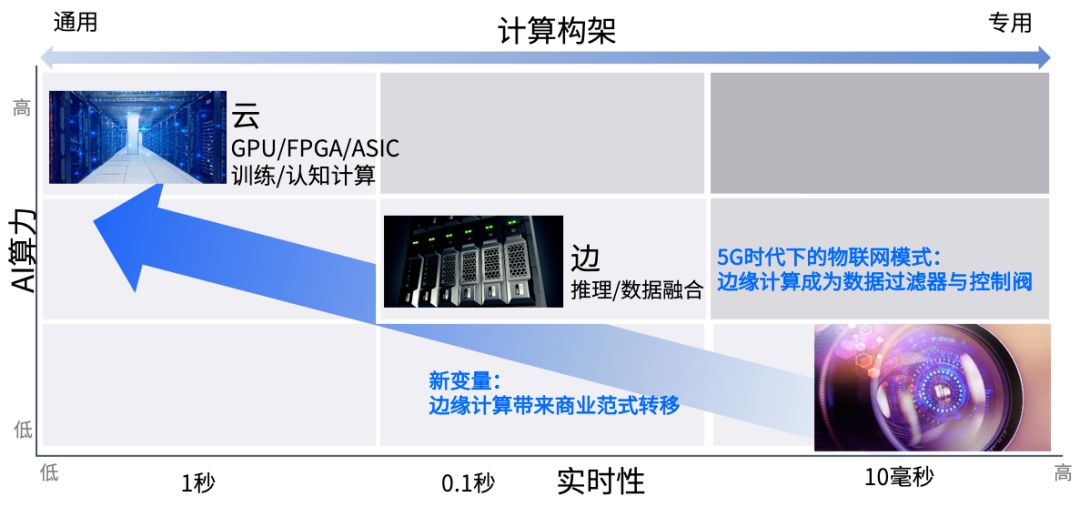

AI computation can be divided into three levels: cloud computing, edge computing, and end computing. Each has its own strengths in AI computing power, real-time performance, and universality of computation:

- Cloud computing targets the most universal computation, with the largest spatiotemporal scope, the strongest diversity, and the highest required computing power, but with poorer real-time performance and weaker scene relevance; the types of data in the cloud are also the richest, spanning multiple dimensions, thus enabling complex cognitive computing and model training.

- End computing is another extreme, with the strongest scene relevance, very high specificity of computation, and a pursuit of extreme efficiency, mainly targeting inference.

- Edge computing, positioned in the middle, is a new species that connects our brain (cloud) and nerve endings (end) like our spine. Its computing power far surpasses that of the end while having a much higher tolerance for power consumption than the end; compared to the cloud, it offers better real-time performance and can be specifically optimized for particular scenes. The application of 5G technology can significantly improve the data bandwidth and transmission latency between the edge and the end, allowing it to combine the advantages of both cloud and end, changing the existing network interconnection pattern.

The IoT in the 5G era requires collaboration between the end, edge, and cloud to find the optimal AI solution across a larger scope.

The IoT model in the 5G era will also change, with edge computing becoming a data filter and control valve. After edge processing, only the effective data, as little as one ten-thousandth of the total, needs to be uploaded to the cloud, which significantly reduces the data transmission pressure on the backbone network.
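The "data filter" role can be pictured with a toy sketch. The names `Reading` and `edge_filter`, the `score` field, and the threshold are all hypothetical; the score stands in for whatever on-edge inference would produce, and the data is synthetic, chosen so that one reading in ten thousand passes the filter.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Reading:
    sensor_id: int
    score: float  # hypothetical relevance score produced by on-edge inference


def edge_filter(readings: Iterable[Reading], threshold: float) -> Iterator[Reading]:
    """Yield only the readings worth sending upstream; the rest stay at the edge."""
    for reading in readings:
        if reading.score >= threshold:
            yield reading


# Synthetic data: 10,000 readings, exactly one of which is "interesting".
readings = [Reading(sensor_id=i, score=0.0) for i in range(10_000)]
readings[1234].score = 0.99

uploaded = list(edge_filter(readings, threshold=0.9))
upload_fraction = len(uploaded) / len(readings)
print(f"uploaded {len(uploaded)} of {len(readings)} readings ({upload_fraction:.4%})")
```

In a real deployment the filter criterion would be the hard part; the sketch only shows where the reduction in backbone traffic comes from.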

In the traditional internet era, computing followed a binary architecture of end and cloud: data was continuously transferred to the cloud for processing, and the end merely served as a traffic entry point. Edge computing introduces a new variable and opens up an entirely new possibility between the end and the cloud, and its control over data will shift business paradigms. From a technical perspective, edge computing has the potential to transform the traditional internet computing architecture, leading to a new structural transformation from software to hardware.

While the democratization of AI brings new value, it also brings new energy crises.

Today, data centers consume an astonishing amount of electricity. According to the “White Paper on the Current Situation of Energy Consumption in Chinese Data Centers,” there are 400,000 data centers in China, each consuming an average of 250,000 kWh, totaling over 100 billion kWh, equivalent to the total annual power generation of the Three Gorges and Gezhouba hydropower stations. If converted to carbon emissions, this amounts to approximately 96 million tons, which is nearly three times the annual carbon emissions of China’s civil aviation sector.
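The quoted figures can be cross-checked with simple arithmetic. The sketch below uses only the numbers in the paragraph above; the emission factor it prints is merely what those two totals imply (assuming metric tons), not a value taken from the white paper.

```python
# Figures quoted in the paragraph above.
data_centers = 400_000
avg_kwh_per_center = 250_000  # average consumption per data center
co2_tonnes = 96_000_000       # quoted carbon-emission equivalent (assumed metric tons)

total_kwh = data_centers * avg_kwh_per_center

# Emission factor implied by the two quoted totals (derived here, not sourced).
implied_kg_co2_per_kwh = co2_tonnes * 1_000 / total_kwh

print(f"total consumption: {total_kwh / 1e9:.0f} billion kWh")
print(f"implied emission factor: {implied_kg_co2_per_kwh:.2f} kg CO2 per kWh")
```

The product of the two averages lands on 100 billion kWh, consistent with the "over 100 billion kWh" total given in the text.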

The average power consumption of data centers is over 100 times that of large commercial office buildings. Powering and cooling the servers accounts for 40% of a data center’s total operating costs, so the energy inefficiency of data centers cannot be ignored.

To reduce power consumption, Alibaba located its data center in Zhangbei, where the wind is strong and the average annual temperature is below 3 degrees Celsius. The site acts as a natural cooling field and is expected to cut cooling energy consumption alone by 45%. Tencent’s data center is built in the mountains of Guizhou Province, also for cooling purposes.

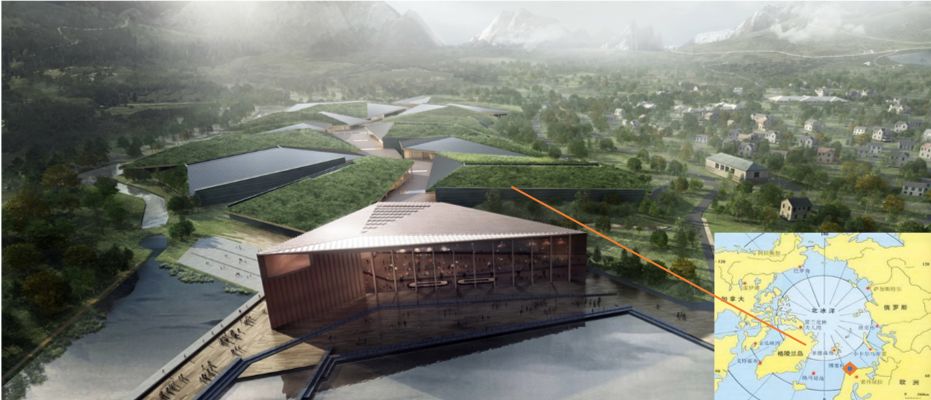

To reduce power consumption, the world’s largest data center will be built within the Arctic Circle, with a total power exceeding 1000 megawatts (Source: KOLOS Company Official Website)

It is foreseeable that the amount of data computation we need will grow exponentially, and this pattern of energy consumption is unsustainable.

Therefore, reducing carbon emissions through extreme improvements in AI energy efficiency has become a new social responsibility for AI companies: making full use of limited energy to create greater value.

In the traditional chip industry, PPA (Power, Performance, Area) is the classic performance metric. However, in the AI era, we need a new paradigm to define performance.

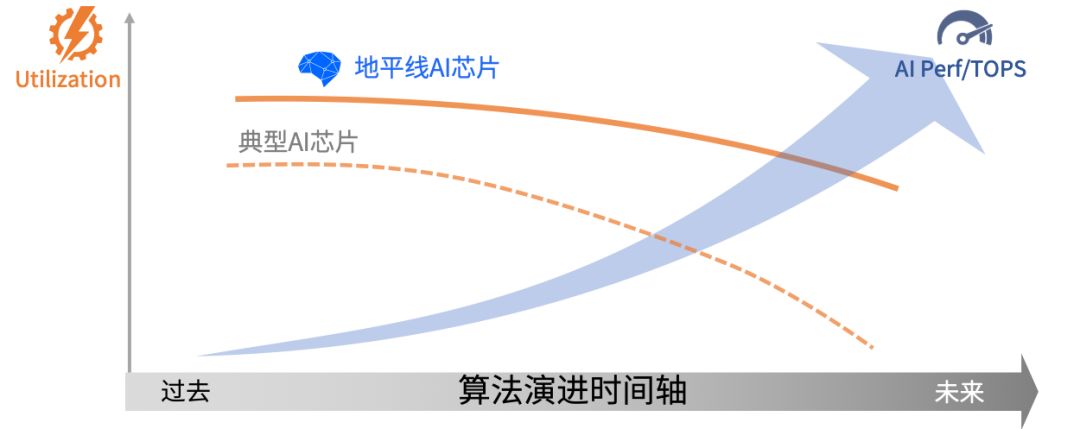

Currently, a significant misconception in the industry is that peak computing power is the primary metric for measuring AI chips. What we truly need is effective computing power and the algorithm performance it delivers. This needs to be measured along four dimensions: peak computing power per watt and peak computing power per dollar (both determined by chip architecture, frontend and backend design, and chip technology), the effective utilization rate of peak computing power (determined by algorithms and chip architecture), and the ratio of effective computing power to AI performance (mainly speed and accuracy, determined by algorithms). The industry used to rely on models like ResNet, but today smaller models like MobileNet can achieve the same accuracy and speed with only 1/10 of the computing power. These cleverly designed algorithms, however, pose significant challenges to computing architectures: on traditional designs they often cause a substantial drop in the effective utilization rate, and ultimately a loss in AI performance.
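A minimal sketch of how these quantities interact is given here, assuming that delivered speed is effective computing power divided by a model's per-frame cost. The function names, the chip's peak figure, the utilization rates, and the model costs are all hypothetical, chosen only to mirror the "1/10 of the computing power" example; none are measured values.

```python
def effective_tops(peak_tops: float, utilization: float) -> float:
    """Effective computing power = peak computing power x effective utilization rate."""
    return peak_tops * utilization


def frames_per_second(peak_tops: float, utilization: float,
                      tera_ops_per_frame: float) -> float:
    """Delivered speed: effective computing power divided by the model's cost per frame."""
    return effective_tops(peak_tops, utilization) / tera_ops_per_frame


# Hypothetical chip and models, for illustration only.
PEAK_TOPS = 4.0
HEAVY_MODEL_COST = 0.010    # tera-ops per frame, standing in for a ResNet-like model
COMPACT_MODEL_COST = 0.001  # one tenth of the compute, a MobileNet-like model

heavy = frames_per_second(PEAK_TOPS, 0.8, HEAVY_MODEL_COST)
# Same chip and peak power, but utilization drops on a design not built for the model.
compact_poor_fit = frames_per_second(PEAK_TOPS, 0.2, COMPACT_MODEL_COST)
# A design that keeps utilization high captures the full algorithmic gain.
compact_good_fit = frames_per_second(PEAK_TOPS, 0.8, COMPACT_MODEL_COST)

print(f"heavy model:               {heavy:.0f} frames/s")
print(f"compact model, poor fit:   {compact_poor_fit:.0f} frames/s")
print(f"compact model, good fit:   {compact_good_fit:.0f} frames/s")
```

With these made-up numbers, the compact model runs only 2.5 times faster when utilization falls from 80% to 20%, and 10 times faster when utilization is preserved, even though peak computing power is identical in all three cases.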

Horizon’s defining strength is its ability to predict how key algorithms in important application scenarios will develop and to build their computational characteristics into its computing architectures ahead of time. This allows Horizon’s AI processors to adapt well to the latest mainstream algorithms even after one or two years of R&D. Compared to other typical AI processors, Horizon’s AI processors therefore maintain a high effective utilization rate as algorithms evolve, and truly benefit from algorithm innovation.

The core capability of Horizon AI chips: joint optimization of algorithms and chips with flexibility, efficiently serving classic and future algorithm designs

Mastering both the algorithms and the computing architecture gives us tremendous room for optimization, and the compiler unites the two to unlock it. For example, without optimization, the effective utilization rate of peak computing power is 34%; after the compiler optimizes the instruction sequence, it rises to 85%. This makes the chip 2.5 times faster, or cuts power consumption to 40% for the same number of tasks.
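The two figures follow directly from the utilization rates. The sketch below assumes that throughput scales linearly with utilization and that power draw stays constant, so energy for a fixed workload scales with run time; the function names are illustrative.

```python
def speedup(util_before: float, util_after: float) -> float:
    """Throughput gain when utilization rises at unchanged peak computing power."""
    return util_after / util_before


def relative_energy_for_same_work(util_before: float, util_after: float) -> float:
    """Energy for a fixed workload relative to before.

    Assumes constant power draw, so energy is proportional to run time.
    """
    return util_before / util_after


before, after = 0.34, 0.85  # utilization rates quoted in the text

print(f"speedup: {speedup(before, after):.1f}x")
print(f"energy for the same tasks: {relative_energy_for_same_work(before, after):.0%}")
```

Under those assumptions 0.85 / 0.34 gives the 2.5 times speedup and 0.34 / 0.85 gives the 40% power figure.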

Guided by the development trends of key algorithms in important future application scenarios, Horizon AI chip architecture design prioritizes efficiency while ensuring flexibility. This principle of extreme AI energy efficiency is Horizon’s unwavering ideal and pursuit in its products.

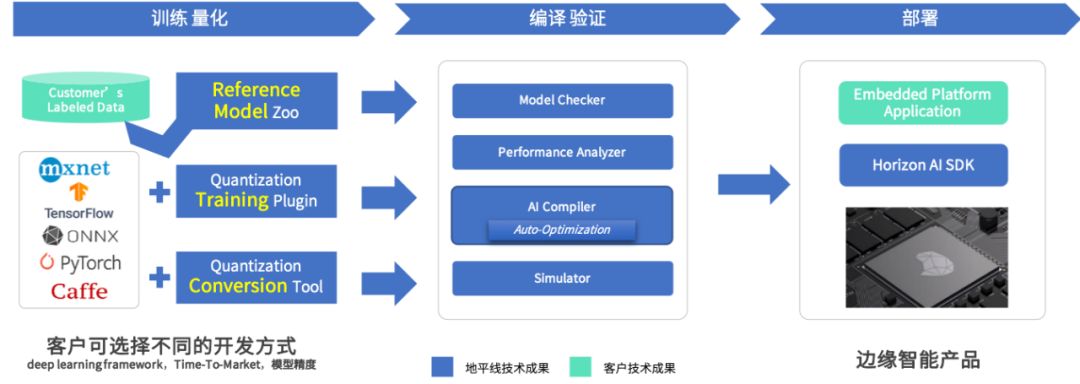

Over our years as a startup, we have come to feel deeply that the path from AI technology demonstration to commercial implementation is very long. Integrating AI solutions into customers’ products, so that those products succeed, is a significant barrier for most companies. We are therefore constantly working to make our solutions easier to integrate. To this end, Horizon has designed a complete algorithm development process covering data, training, and deployment, and has developed a full-stack AI platform tool to support that process efficiently. We also provide numerous algorithm models and prototype systems as reference examples. With these tools and examples, customers can quickly develop the algorithms their products need and keep iterating, gradually optimizing and discovering the unique value in their own data and algorithms.

Horizon development tools support a wide range of open-source frameworks.

Horizon’s full-stack AI platform toolchain includes data, training, and device deployment tools. They form an efficient closed loop: data generates models, models are deployed to devices, and operation on the device guides model tuning and even the collection of new data. This development model improves development speed, lowers development barriers, and ensures development quality. Our current evaluation results show that it reduces development manpower by about 30% and development time by 50%. More importantly, because the development threshold is lower, the developer base can grow by an order of magnitude.

Horizon AI development platform: Accelerating Software 2.0 full-process R&D.

Horizon will continue to upgrade its development tools, providing customers with semi-automated processing flows, including closed-loop iteration between data tools and models and between models and devices; a wealth of model and system reference prototypes; user-friendly and intuitive interaction methods; and standardized development processes with continuous testing, integration, and deployment mechanisms.

Empowering customers through openness and quickly meeting their diverse needs is the mission of Horizon’s AI platform service. We aim to save development time, reduce the number of developers a project requires, and enable more enterprises to take part in AI development, building a solid foundation platform for the ecosystem.

In an AI era marked by enormous energy consumption, Horizon draws on its unique advantages and service philosophy to provide customers with cost-effective, easy-to-integrate products, friendly development tools and reference examples, advanced computing architectures, cutting-edge algorithm support, and high-quality services.

We have already helped partners develop many successful products, such as construction site safety helmet detection, the Xiao Ai speaker, a multi-zone voice interaction solution for the Li Auto One, and crowdsourced high-precision semantic mapping. We have also joined the 96Boards open-source community, embracing a broader development ecosystem.

We are willing to be a platform company, serving as a technological foundation and empowering customers with AI on Horizon. We never view entrepreneurship as a party but as a journey taken together with customers: walking alongside them to create value, fulfilling our mission to empower all things, and making everyone’s life safer and better. Thank you all!