Selected from arstechnica

Author: Jason Torchinsky. Translated by: Machine Heart. Edited by: Panda, Egg Sauce.

Apple’s M1 chip delivers strong performance and shows how much potential ARM processors have in the desktop market, at a time when ARM already dominates smartphones. Where did this world-conquering chip come from? You might not believe it, but the story begins with a television program.

Most of 2020 brought a relentless stream of bad news and tragic events, to the point that carrying on felt impossible. Most of us persevered anyway, and we did it with the small handheld computers we carry everywhere. We still call this device a “phone,” which is a ridiculously simplistic name for it.

Fortunately, for those of us endlessly scrolling through bad news, there is an inspiring story close at hand: the phones we scroll on, along with a large share of our other digital activity, run on the same family of CPUs, the ARM series. Now that Apple has launched its highly praised Macs based on the new M1 CPU (an ARM-architecture processor), it is time to talk about the origins of these chips that have taken over the world.

Suppose you were writing a script for a realistic story and, for some reason, had to specify who makes the CPU most commonly found in phones, game consoles, ATMs, and countless other devices. If you picked one of today’s major manufacturers, such as Intel, it would fit the world you are depicting, because audiences would accept it. An industry giant with established market dominance would not strike viewers as strange or unfamiliar.

But what if you attributed these CPUs to an almost unknown company, based in a country not usually regarded as a global leader in high-tech innovation? And what if the existence of these CPUs could be traced, at least indirectly, to an educational television program? The producer would probably ask you to rework the script: “Come on, be serious!”

Yet, in a way, that is the reality.

The story begins with a television program

ARM processors control over 13 billion devices worldwide. Without them, modern life as we know it would not exist.
However, the origin story of ARM processors is quite bizarre. Its development was full of apparent bad luck that turned into key opportunities, and of unplanned technical advantages that first appeared in failed devices but ultimately proved crucial.

What set everything in motion was a television program: the BBC’s “The Computer Programme,” which aired in 1982. It was a popular science program meant to teach the British public about these fancy new machines, which at the time looked like poorly designed typewriters connected to televisions.

The program was part of the “Computer Literacy Project” launched by the British government and the BBC out of concern that the UK was unaware of, and unprepared for, the personal computing revolution under way in the United States. Unlike most television programs, this one was to have its own computer, which would be used to explain basic computing concepts and teach some BASIC programming. Those concepts included graphics and sound, the ability to connect to teletext networks, speech synthesis, and even some rudimentary artificial intelligence. The computer therefore had to be quite capable, so much so that nothing on the market met the BBC’s requirements.

As a result, the BBC put out a call to the British computer industry. At that time the leading company in that industry was Sinclair, which had made its name with calculators and small televisions. However, a smaller but ambitious startup won the lucrative contract: Acorn Computers.

Acorn’s Development

Acorn was a Cambridge-based company, founded in 1979 after developing a computer system originally intended for running fruit machines. Acorn later turned that system into a small hobbyist computer built around the 6502 processor.
This CPU belonged to the same family used in many computers, such as the Apple II, Atari 2600, and Commodore 64 (this CPU design will become very important, so don’t forget it).

By then Acorn had already developed a home computer called the Atom, and when the BBC got in touch, the company was beginning to plan the Atom’s successor, which would become the BBC Micro.

The BBC’s requirements made the resulting machine quite powerful for its time, though not as powerful as Acorn’s original design for the Atom successor. That design called for two CPUs: a tried-and-tested 6502 and a yet-to-be-determined 16-bit CPU.

Acorn later dropped the second CPU but kept an interface called the Tube, which allowed the machine to connect to additional CPUs (this, too, will become very important).

Engineering the BBC Micro pushed Acorn to its limits, as it was a cutting-edge computer for its era. The work involved some clever, if half-baked, design decisions that nonetheless worked, such as using a resistor pack to reproduce the effect of an engineer’s finger pressed on a particular spot on the motherboard, without which the machine would not start.

No one ever figured out why the machine worked only when a finger was placed on that spot, but once the engineers could mimic the touch with resistors, they were satisfied that the machine worked and moved on.

The BBC Micro turned out to be a major success for Acorn, becoming the dominant educational computer in the UK during the 1980s.

As readers may know, the 1980s were a very important period in computing history. The IBM PC was launched in 1981, setting the standard for personal computing for decades to come. In 1983, Apple introduced the Mac’s predecessor, the Apple Lisa.
With it, the window-icon-mouse graphical user interface revolution began to dominate personal computing.

Acorn saw these developments and realized that staying competitive would take a more powerful processor than the reliable but aging 6502. Acorn experimented with a variety of 16-bit CPUs: the 16-bit variant of the 6502, the Motorola 68000 used in the Apple Macintosh, and the relatively rare National Semiconductor 32016.

None of them met Acorn’s requirements, so Acorn contacted Intel to ask about using the Intel 80286 CPU in its new architecture. Intel completely ignored them.

RISC-Related Business

Here’s a spoiler: this would prove to be a very bad decision for Intel.

Acorn then made a fateful decision: to design its own CPU. Inspired by the lean operation of the Western Design Center (which was developing a new version of the 6502 at the time), and after studying a new processor design concept called Reduced Instruction Set Computing (RISC), Acorn decided to go ahead, with engineers Steve Furber and Sophie Wilson at the core of the project.

RISC processors stand in contrast to Complex Instruction Set Computing (CISC) processors. Here is a very simple explanation of the two concepts:

CPUs have a set of operations they can perform, known as their instruction set. CISC CPUs have a large and complex instruction set, with individual instructions that carry out complex tasks over multiple CPU “clock cycles.” The complexity is built into the chip hardware itself, which lets software be simpler. Code for CISC machines therefore needs fewer instructions, but the CPU spends more cycles executing each one.

As you might have guessed, RISC is the opposite: the chip itself has fewer instructions and less hardware, and each instruction can be completed in a single clock cycle.
The result is longer code, which seems less efficient because it requires more memory, but the chip itself is simpler and executes its simple instructions faster.

Acorn was well placed to design a RISC CPU because the 6502, the chip its engineers knew best, is often considered a proto-RISC design. There are (inevitably) many opinions about this on the internet, and this is not an attempt to argue with anyone, but it is fair to say that the 6502 had some RISC-like characteristics.

The resemblance was strong enough that Sophie Wilson clearly drew on several 6502 design concepts when designing the instruction set for Acorn’s new processor.

Thanks to the internet, the full “Archimedes High-Performance Computer System” manual can still be found online: http://chrisacorns.computinghistory.org.uk/Computers/Archimedes.html
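
As a rough illustration of the CISC/RISC trade-off described earlier, the following Python sketch runs the same multiplication once as a single complex instruction and once as four simple ones. The opcodes (`MULM`, `LOAD`, `MUL`, `STORE`) and the cycle costs in `CYCLES` are invented for this example; they do not correspond to any real instruction set, including ARM or x86.

```python
# Toy comparison of a CISC-style and a RISC-style program.
# All opcodes and cycle costs are hypothetical, chosen only for illustration.

# Assumed cost of each opcode in clock cycles (made-up numbers).
CYCLES = {"MULM": 5, "LOAD": 1, "MUL": 1, "STORE": 1}

# CISC style: one complex instruction multiplies two memory cells in place.
CISC_PROGRAM = [("MULM", "a", "b")]

# RISC style: the same work as four simple register-based instructions.
RISC_PROGRAM = [
    ("LOAD", "r1", "a"),
    ("LOAD", "r2", "b"),
    ("MUL", "r1", "r1", "r2"),
    ("STORE", "a", "r1"),
]


def run(program, memory):
    """Run a toy program; return (final memory, instruction count, cycles)."""
    mem = dict(memory)  # copy so the caller's memory is left untouched
    regs = {}
    cycles = 0
    for op, *args in program:
        if op == "MULM":      # mem[dst] = mem[dst] * mem[src]
            dst, src = args
            mem[dst] = mem[dst] * mem[src]
        elif op == "LOAD":    # reg = mem[addr]
            reg, addr = args
            regs[reg] = mem[addr]
        elif op == "MUL":     # dst = a * b, registers only
            dst, a, b = args
            regs[dst] = regs[a] * regs[b]
        elif op == "STORE":   # mem[addr] = reg
            addr, reg = args
            mem[addr] = regs[reg]
        else:
            raise ValueError(f"unknown opcode: {op}")
        cycles += CYCLES[op]
    return mem, len(program), cycles


if __name__ == "__main__":
    start = {"a": 6, "b": 7}
    for label, program in (("CISC", CISC_PROGRAM), ("RISC", RISC_PROGRAM)):
        mem, count, cycles = run(program, start)
        print(f"{label}: result={mem['a']} instructions={count} cycles={cycles}")
```

Both programs leave the same value in memory; the CISC-style version needs one instruction, while the RISC-style version needs four one-cycle instructions and therefore more code. Which one finishes in fewer cycles depends entirely on the invented numbers in `CYCLES`, so the sketch illustrates the trade-off rather than measuring it.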

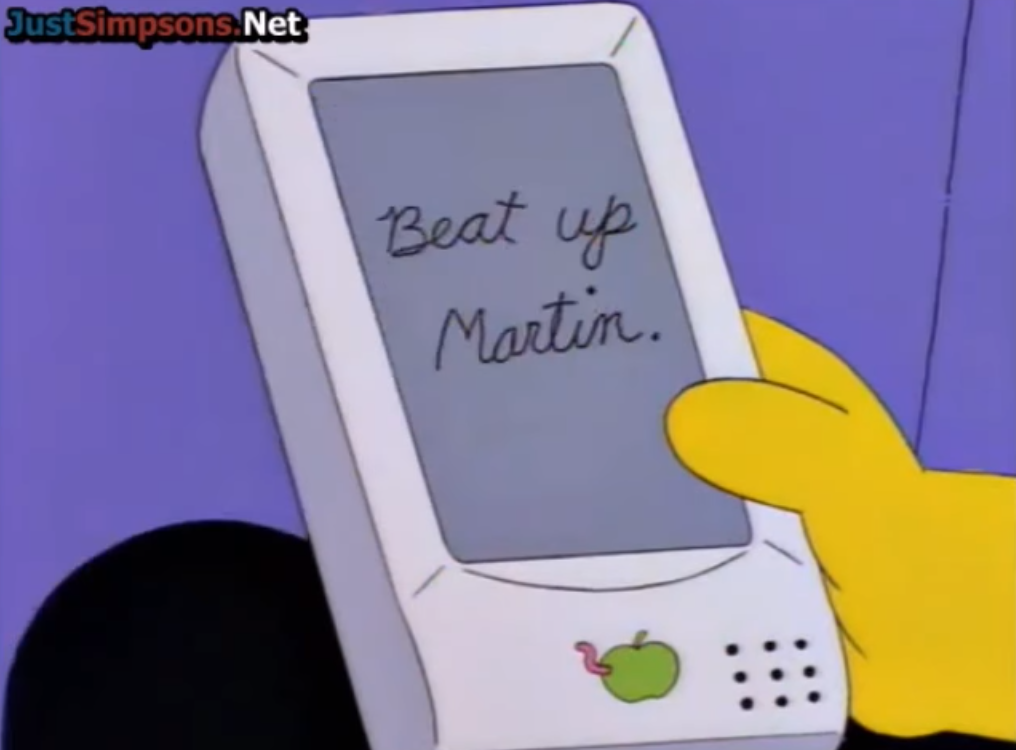

Cover of the “Archimedes High-Performance Computer System” manual.

Acorn named its new RISC-based CPU the Acorn RISC Machine, or ARM for short, and used the BBC Micro’s Tube interface as its test platform. Acorn’s chip manufacturing supplier, VLSI, began producing ARM CPUs for Acorn’s internal development work. Before long, a usable ARM2 version was ready.

In 1987, the first production RISC-based personal computer, the Acorn Archimedes, was released with the ARM2 CPU. Despite having far fewer transistors than Intel’s 286 chip (roughly 30,000 versus about 134,000), the ARM processor outperformed it.

The Archimedes, with its Arthur OS in ROM, proved to be a flexible, fast, and powerful machine. It offered excellent graphics for its era, a graphical user interface, and some cool, fast, low-polygon demos and games that showed off the speed it owed to its streamlined CPU.

This first ARM-based computer was claimed to be the fastest personal computer of its time, several times faster than Intel’s 80286.

“Less is More”

ARM’s lower transistor count reflects its relative simplicity, and as a result ARM chips consume much less power and generate much less heat for the same computing performance.

Low power consumption and low heat were not part of the original design goals, since Acorn set out to build a CPU for desktop computers, but they would prove to be among the most fortunate byproducts in computing history.

These characteristics made ARM the natural choice for mobile devices. That is why ARM appealed to Apple, which in the late 1980s began searching for a CPU that could run on AA batteries without burning the user’s hand. Apple wanted this CPU to be powerful enough to translate handwriting into computer text and run a GUI, which was somewhat fanciful at the time.
The handheld device Apple hoped to power was the notoriously unsuccessful Newton, and only a fast, lean ARM core could drive it.

Apple and Acorn’s chip partner VLSI worked with Acorn to spin off the ARM division into a new company called Advanced RISC Machines, which let the ARM abbreviation live on. Under this alliance, and with a substantial injection of resources from Apple, ARM developed the ARM6 core and the first production chip based on it, the ARM610 CPU. The 20 MHz version of this chip powered the Apple Newton in 1993.

Although the Newton met a sad end, in hindsight it was a far-reaching product: a handheld, battery-powered touchscreen device driven by an ARM CPU. Today, billions of smartphones fit that description. For many people, though, its best-known trial was an episode of “The Simpsons,” in which a Newton misread the handwritten “Beat up Martin” as “Eat up Martha.”

Apple Newton depicted in “The Simpsons.”

The ARM610 went on to power the next generation of Acorn Archimedes computers and a quirky Newton-based laptop called the eMate. In 2001, ARM7-core CPUs appeared in Apple’s iPod and Nintendo’s Game Boy Advance. In 2004, the dual-screen Nintendo DS console launched with two ARM processors.

Then, in 2007, Apple launched the first iPhone, powered by an ARM11-core CPU. From then on, ARM spread rapidly.

ARM CPUs became the default choice for smartphones, whether from Apple or from other companies. Nearly every device that is not an Intel-based desktop, laptop, or server now runs on an ARM CPU. And with ARM Chromebooks and Apple’s new ARM-based desktops and laptops running macOS, ARM seems to have come full circle, back to desktop computing.

Years later, ARM’s origin story is still worth telling because it is made up of a chain of improbable, strange, and unplanned events, starting from the unlikeliest of sources. And although ARM undoubtedly dominates today’s world, its humble beginnings make it feel less like a faceless colossus than industry giants such as Intel and AMD.

Take a moment to reflect:

Because the British felt they were falling behind in the computer revolution, they decided to make a television program about computers. To make the program they needed a computer, so a small British company designed a great one. When that small company needed a faster CPU, it designed its own, because Intel would not give it the time of day. That in-house CPU happened to be low-power and low-heat, which caught Apple’s attention, and it then ended up in what many consider Apple’s biggest failure.
Since then, of course, it has gone on to take over the world.

If I had made this up, you would say the plot was too bizarre or too cluttered, like a Wes Anderson film. But it is a true story.

And if reality is a simulation, I bet it, too, is powered by ARM.
