This article is selected from the “IC Design” column of the Jishu Community, written by Lao Qin Talks about Chips, and is reposted with permission from the WeChat official account Lao Qin Talks about Chips. This article introduces what virtualization is.

Today, we will explore an interesting topic: virtualization.

Before we start, let’s briefly touch on a trending term, the “Metaverse”. According to Wikipedia, the Metaverse is “a collective virtual shared space, created by the convergence of virtually enhanced physical reality and physically persistent virtual space, including the sum of all virtual worlds, augmented reality, and the Internet.” So what is virtualization? Simply put, something virtual is “fake”: it behaves like the real thing to whoever uses it, but it is not a physically real, dedicated entity.

Virtualization technology is widely used, especially in cloud computing and data centers. Why do processors need virtualization, and what benefits can it bring?

First, in processor design and application, we need to introduce the concept of the “Virtual Machine (VM)”. Those who have used VMware probably already have some idea of what a virtual machine is. Its counterpart is the “Real Machine”, also called the physical machine. A physical machine is easy to understand: if you buy a computer for home use, all of its physical devices, such as the hard drive and network card, are for your own exclusive use. For servers, however, this kind of exclusive use is very inefficient. Efficiency can be greatly improved by dividing a server’s large pool of resources into blocks and allocating them to different users, or by letting users take turns on the same resources through time slicing. This is where virtualization technology comes into play: it allows applications to run on virtual machines just as they would on real machines. In summary, the benefits of virtualization technology include the following:

- Isolation: Mutually untrusted computing environments can share one physical system. For example, two competitors can share the same physical machine in a data center without being able to access each other’s data.

- High availability: Workloads can be migrated seamlessly and transparently between physical machines. This is often used to move workloads off hardware platforms that need maintenance or replacement.

- Load balancing: Each hardware platform can be used to the fullest, either by migrating virtual machines or by co-hosting suitable workloads on the same physical machine.

- Sandboxing: A virtual machine can serve as a sandbox for applications that might interfere with other parts of the machine. Running such an application in a virtual machine prevents its faulty or malicious parts from affecting other applications or data on the physical computer.

Of course, the benefits of virtualization technology are not limited to these; we won’t enumerate them all here.

How do we isolate virtual machines from the real physical devices, or in other words, how is virtualization achieved? Industry pioneers came up with a method: add a hypervisor layer above the hardware. Baidu Encyclopedia defines a hypervisor as “a software layer that runs between the physical server and the operating system, allowing multiple operating systems and applications to share hardware. It is also called a VMM (Virtual Machine Monitor). A hypervisor is a ‘meta’ operating system in a virtualized environment. It can access all physical devices on the server, including disks and memory. The hypervisor not only coordinates access to these hardware resources but also enforces protection between virtual machines. When the server starts and executes the hypervisor, the hypervisor loads the operating systems of all guest virtual machines and allocates an appropriate amount of memory, CPU, network, and disk to each virtual machine.”
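To make the “blocks” and “time slices” idea and the allocation step in this definition a little more concrete, here is a minimal Python sketch of a toy hypervisor. Everything in it (`ToyHypervisor`, `VM`, `boot_guest`, `schedule`) is hypothetical and not taken from any real hypervisor. It assumes a single physical CPU, carves memory into fixed contiguous blocks when each guest boots, and shares the CPU among all virtual CPUs (vCPUs) with simple round-robin time slicing. Real hypervisors use far more elaborate memory management and schedulers.

```python
from collections import deque
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class VM:
    name: str
    mem_mb: int
    vcpus: int
    # Set by the (hypothetical) hypervisor at boot: [start, end) of this VM's memory block, in MB
    mem_range: Optional[Tuple[int, int]] = None


class ToyHypervisor:
    """Hypothetical toy model: static memory blocks plus one time-sliced physical CPU."""

    def __init__(self, total_mem_mb: int) -> None:
        self.total_mem_mb = total_mem_mb
        self.next_free = 0
        self.vms: List[VM] = []

    def boot_guest(self, vm: VM) -> None:
        # Give each guest its own contiguous block of memory; never overcommit.
        if self.next_free + vm.mem_mb > self.total_mem_mb:
            raise MemoryError(f"not enough memory for {vm.name}")
        vm.mem_range = (self.next_free, self.next_free + vm.mem_mb)
        self.next_free += vm.mem_mb
        self.vms.append(vm)

    def schedule(self, slices: int) -> List[str]:
        # Round-robin: each vCPU of each guest gets the physical CPU for one slice.
        run_queue = deque(
            f"{vm.name}.vcpu{i}" for vm in self.vms for i in range(vm.vcpus)
        )
        if not run_queue:
            return []
        timeline = []
        for _ in range(slices):
            vcpu = run_queue.popleft()  # this vCPU runs for one time slice
            timeline.append(vcpu)
            run_queue.append(vcpu)  # then waits at the back of the queue
        return timeline


hv = ToyHypervisor(total_mem_mb=4096)
hv.boot_guest(VM("vm0", mem_mb=1024, vcpus=1))
hv.boot_guest(VM("vm1", mem_mb=2048, vcpus=2))
print(hv.vms[1].mem_range)
print(hv.schedule(4))
```

Running it prints `(1024, 3072)` and then `['vm0.vcpu0', 'vm1.vcpu0', 'vm1.vcpu1', 'vm0.vcpu0']`. Memory is divided up once, while the single CPU is shared over time. A third guest asking for 2048 MB would be refused, because only 1024 MB remain.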

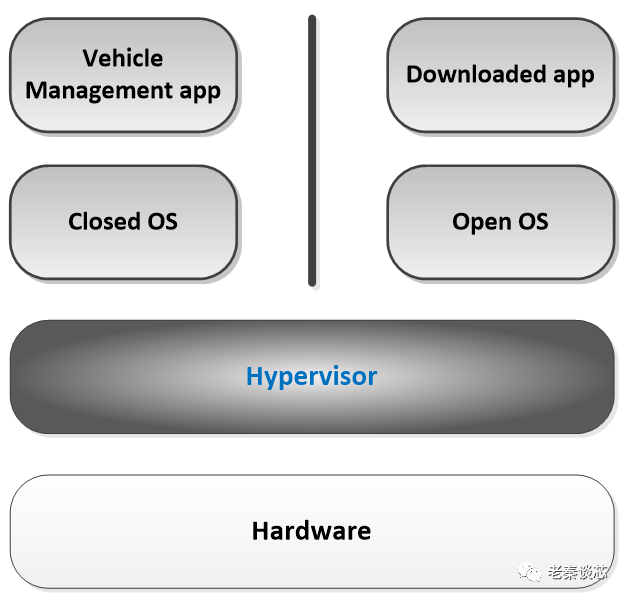

With the hypervisor in place, let’s take a look at how the system is structured. Taking automotive chips as an example, as shown in the figure below. The hypervisor is responsible for coordinating and controlling all hardware resources. Above the hypervisor, parts related to automotive driving and safety are isolated into one part, running a closed operating system (Closed OS); parts related to entertainment and so on are isolated into another part, running an open operating system (Open OS). Corresponding applications (APP) run on their respective operating systems. This achieves a good isolation effect, ensuring that user-installed apps do not affect automotive driving safety.

Figure 1: Virtualization Diagram in Automotive Chips

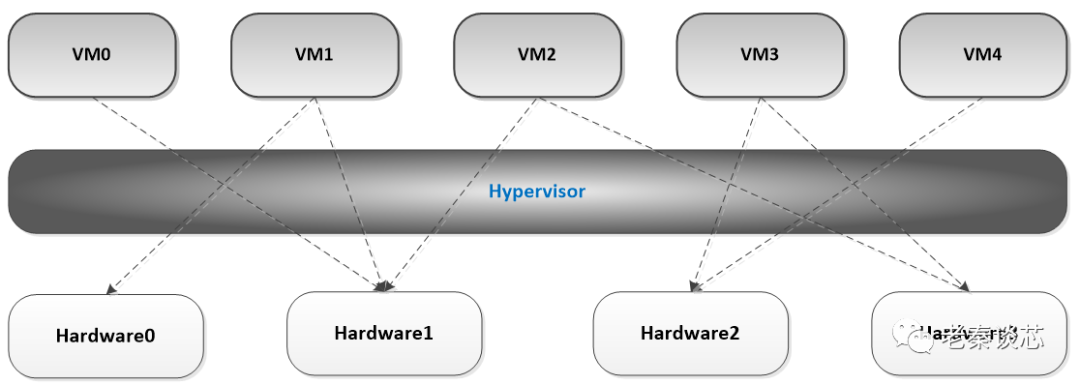

Now let’s look at an example from a server, as shown in the figure below. A server may have many processors, hard disks, etc. Through the hypervisor, different virtual machines can be allocated different hardware resources. For example, for VM1, which may run some high-security tasks, we can allocate the resources of Hardware0 exclusively to VM1, while other virtual machines cannot access it. For Hardware1, resources can be shared among multiple virtual machines like VM0, VM1, and VM2, fully utilizing its resources.

Figure 2: Virtualization Diagram in Server Chips
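One way to picture the exclusive-versus-shared allocation in Figure 2 is as an access-control table that the hypervisor consults before it lets a guest touch a device. The Python sketch below illustrates only that idea. `ResourceTable` and its methods are hypothetical names, and real systems typically enforce this kind of isolation with hardware mechanisms such as second-stage address translation and IOMMUs, not a dictionary lookup.

```python
from typing import Dict, Set


class ResourceTable:
    """Hypothetical hypervisor-side record of which VMs may access which hardware."""

    def __init__(self) -> None:
        self.owners: Dict[str, Set[str]] = {}
        self.exclusive: Set[str] = set()

    def assign_exclusive(self, hw: str, vm: str) -> None:
        # Dedicated: exactly one VM may ever access this hardware.
        if self.owners.get(hw):
            raise ValueError(f"{hw} is already assigned")
        self.owners[hw] = {vm}
        self.exclusive.add(hw)

    def share(self, hw: str, vms: Set[str]) -> None:
        # Shared: several VMs take turns on the same hardware.
        if hw in self.exclusive:
            raise ValueError(f"{hw} is dedicated and cannot be shared")
        self.owners.setdefault(hw, set()).update(vms)

    def check_access(self, vm: str, hw: str) -> bool:
        # Consulted before a guest is allowed to touch the hardware.
        return vm in self.owners.get(hw, set())


table = ResourceTable()
table.assign_exclusive("Hardware0", "VM1")
table.share("Hardware1", {"VM0", "VM1", "VM2"})
print(table.check_access("VM1", "Hardware0"))  # True
print(table.check_access("VM0", "Hardware0"))  # False: VM1's hardware is isolated
print(table.check_access("VM2", "Hardware1"))  # True
```

The sketch refuses to share hardware that was dedicated to one VM. That refusal is exactly what lets VM1 trust that no other guest can reach Hardware0.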

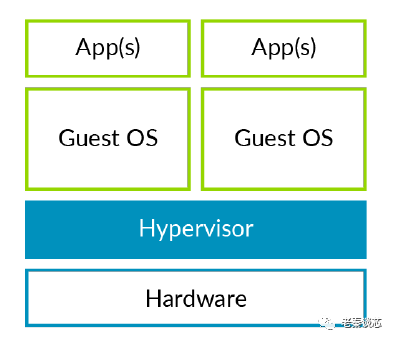

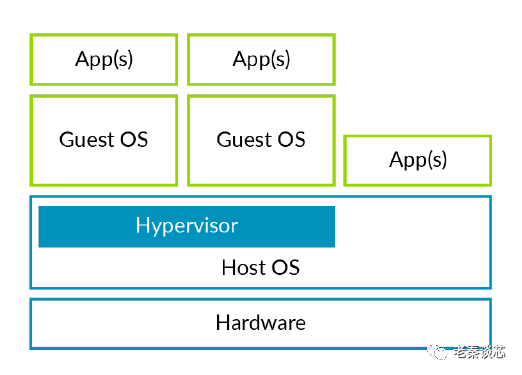

Hypervisors are divided into two main categories: standalone hypervisors, also called type 1; and hosted hypervisors, also known as type 2.

Figure 3: Type 1 Hypervisor (Standalone)

Figure 4: Type 2 Hypervisor (Hosted)

The difference between the two is that a type 1 hypervisor runs directly on the hardware, while a type 2 hypervisor runs within a host operating system (Host OS). Type 1 acts like a lightweight operating system running directly on the host machine’s hardware, whereas type 2 acts like application software running within the host machine’s operating system. What they have in common is that guest operating systems (Guest OS) run on top of the hypervisor. Type 1 hypervisors generally offer better performance and stronger security than type 2. Type 2 suffers from higher latency because communication between the hypervisor and the hardware has to pass through the host operating system. Hosted hypervisors are most commonly used as client hypervisors on end-user computers, where latency is usually less of a concern. Most enterprises choose type 1 hypervisors for their data center computing needs.

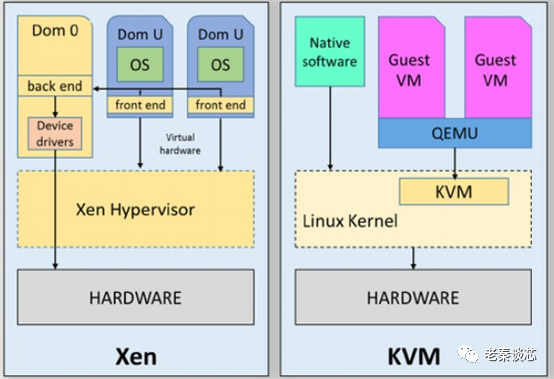

On the Arm platform, a typical representative of type 1 hypervisors is Xen. Xen is an open-source project developed by the Computer Laboratory at the University of Cambridge. It is a software layer that runs directly on computer hardware as a replacement for the operating system, allowing multiple Guest OS to run concurrently on the computer hardware. Xen supports various processors including x86, x86-64, Power PC, and Arm. On March 11, 2014, Xen released version 4.4, which provided better support for the Arm architecture. Xen is a typical representative of para-virtualization technology. Para-virtualization mainly addresses how to capture sensitive non-privileged instructions. The x86 architecture is a significant reason for the emergence of para-virtualization technology, as some sensitive instructions in the x86 architecture are not privileged instructions and cannot automatically generate exceptions. Therefore, to ensure the system runs correctly, these instructions must be captured. Xen replaces these defective instructions by modifying the Guest OS kernel. Intuitively, since there is para-virtualization, there must be full virtualization, right? Yes, you are very clever; there is indeed “full virtualization”, also known as “hardware virtualization”. In simple terms, the difference between the two is that in para-virtualization, the Guest OS knows it is running on a hypervisor rather than on hardware, and can also recognize other guest virtual machines running in the same environment. In full virtualization, the Guest OS runs directly on hardware and cannot perceive other Guest OS.

On the Arm platform, a typical representative of type 2 hypervisors is KVM. KVM (Kernel-based Virtual Machine) is an open-source virtualization solution based on the Linux environment, initially developed by the Israeli company Qumranet, and integrated into the Linux 2.6.20 kernel in February 2007, becoming part of the kernel. Unlike virtualization products such as VMware ESX/ESXi, Microsoft Hyper-V, and Xen, KVM’s idea is to add a virtual machine management module on top of the Linux kernel, reusing the well-established process scheduling, memory management, IO management, and other codes in the Linux kernel to create a hypervisor capable of supporting virtual machines.

Figure 5: Comparison Diagram of Xen and KVM
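The para-virtualization problem described for Xen can be illustrated with a toy trap-and-emulate model. In the Python sketch below, the guest runs deprivileged. A privileged instruction traps into the hypervisor, which emulates it on the guest’s virtual CPU state. A sensitive but non-privileged instruction does not trap, so its intended effect is silently lost. On real x86, POPF executed outside ring 0 behaves roughly like this with respect to the interrupt flag. A para-virtualized guest avoids the problem by issuing a hypercall instead. The instruction kinds, `VirtualCPU`, `hypervisor_emulate`, and `run_guest` are all hypothetical simplifications, not Xen’s actual interface.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class VirtualCPU:
    # Per-guest CPU state kept by the hypervisor (hypothetical, heavily simplified)
    interrupts_enabled: bool = True
    log: List[str] = field(default_factory=list)


def hypervisor_emulate(vcpu: VirtualCPU, op: str) -> None:
    # Runs inside the hypervisor: apply the effect to the guest's *virtual* state.
    if op == "disable_irq":
        vcpu.interrupts_enabled = False
    elif op == "enable_irq":
        vcpu.interrupts_enabled = True
    vcpu.log.append(f"emulated {op}")


def run_guest(program: List[Tuple[str, str]], vcpu: VirtualCPU) -> VirtualCPU:
    """Run a toy instruction stream for a deprivileged guest.

    kind == "privileged":   traps, so the hypervisor emulates it
    kind == "sensitive_np": sensitive but non-privileged; no trap, so the
                            hypervisor never sees it and the effect is lost
    kind == "hypercall":    para-virtualized guest calls the hypervisor directly
    """
    for kind, op in program:
        if kind in ("privileged", "hypercall"):
            hypervisor_emulate(vcpu, op)  # control transfers to the hypervisor
        elif kind == "sensitive_np":
            vcpu.log.append(f"{op} ignored")  # executes without trapping
        else:
            raise ValueError(f"unknown instruction kind: {kind}")
    return vcpu


# Unmodified guest kernel: its attempt to disable interrupts silently fails.
unmodified = run_guest([("sensitive_np", "disable_irq")], VirtualCPU())
print(unmodified.interrupts_enabled)  # True

# Para-virtualized kernel: the same operation is rewritten as a hypercall.
paravirt = run_guest([("hypercall", "disable_irq")], VirtualCPU())
print(paravirt.interrupts_enabled)  # False
```

The key point is the `sensitive_np` branch. Because nothing traps, the hypervisor has no chance to step in. That gap is what Xen closes by modifying the guest kernel.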

For those interested in hypervisors, there are plenty of articles online; I won’t go into further detail here (actually, my own understanding is limited, haha). What we care about more is what work a chip needs to do to support virtualization.
