Xinzhe Xin from the Communication Research Group at Beijing Institute of Technology successfully ported the deep learning gait recognition algorithm GaitSet to the Allwinner V853 development board as part of his undergraduate thesis titled “Research on Gait Recognition Based on Embedded Systems.” This study was tested on the CASIA-B dataset, achieving a gait recognition accuracy of 94.9% under normal walking conditions, with recognition accuracies of 87.9% and 71.0% under backpack and coat walking conditions, respectively.

Gait recognition, as an emerging biometric method, has advantages over face recognition and fingerprint recognition, such as adaptability to environments and resistance to disguise.

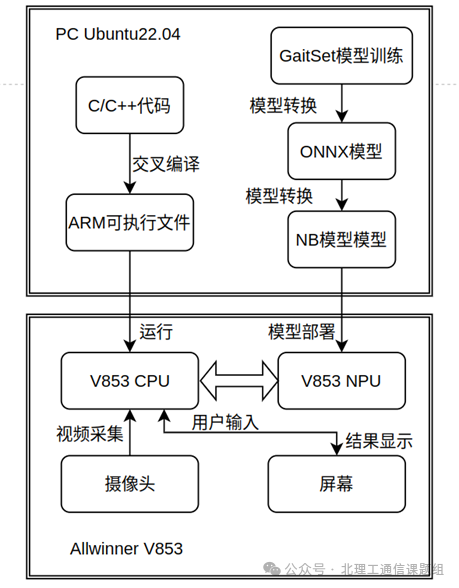

The gait recognition system designed in this paper is built on the Allwinner V853 development board, fully utilizing the onboard peripherals, CPU, and NPU to achieve a real-time gait recognition system on an embedded platform.

Allwinner V853 development board used in the experiment

Specifically, the deep learning algorithm used in the system was trained on a PC, and the resulting PyTorch model was converted with a model conversion tool into an NB model that can run on the V853 NPU, where model inference is performed. The overall operation of the system is divided into four main parts: preprocessing, model inference, post-processing, and UI display.

Process diagram of the gait recognition system setup

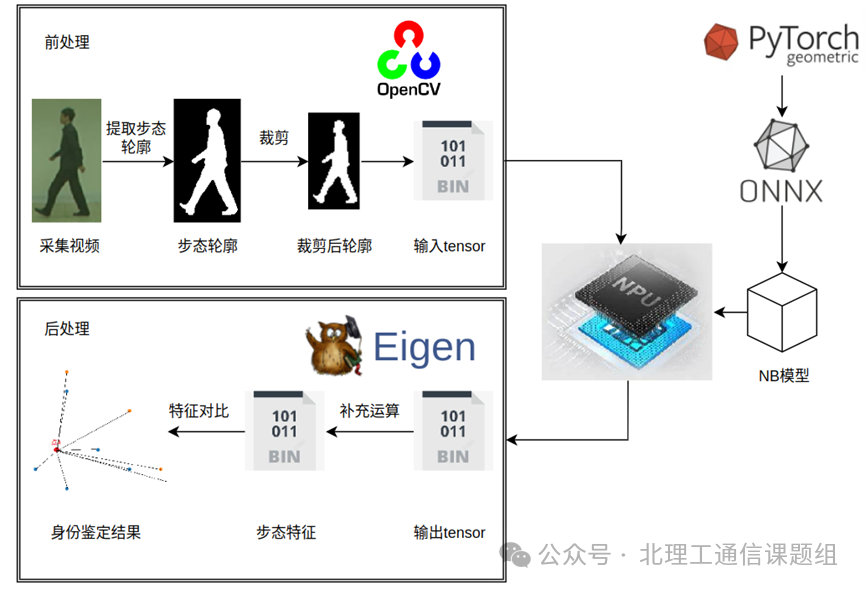

The majority of the operators in the deep learning algorithm used in this system perform inference on the onboard NPU, while a small number of operators compute results on the onboard CPU. Both preprocessing and post-processing are carried out on the onboard CPU, utilizing the OpenCV and Eigen computation libraries. The preprocessing extracts gait contours from the video captured by the onboard camera, cropping and stitching them to serve as input data for the NPU model, while the post-processing performs supplementary calculations on the output data obtained from the NPU model and conducts feature comparisons for identity verification. The UI display is implemented through an application generated by Qt.
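The silhouette cropping and stitching step described above can be sketched as follows. This is a minimal illustration, not the system's actual code: the source uses OpenCV and Eigen on the board, whereas this sketch uses only NumPy, and the function names `normalize_silhouette` and `stack_sequence`, the 64x64 output size, and the nearest-neighbour resize are all assumptions made for the example.

```python
import numpy as np

def normalize_silhouette(mask: np.ndarray, out_h: int = 64, out_w: int = 64) -> np.ndarray:
    """Crop a binary silhouette to its bounding box, scale it to out_h rows,
    and center it horizontally in an out_h x out_w frame (sizes are assumed)."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        # No foreground pixels: return an empty frame.
        return np.zeros((out_h, out_w), dtype=np.uint8)

    # Tight bounding box around the silhouette.
    crop = mask[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    h, w = crop.shape

    # Keep the aspect ratio; clamp the width so it fits the output frame.
    new_w = max(1, min(out_w, round(w * out_h / h)))

    # Nearest-neighbour resize using integer index maps.
    row_idx = np.arange(out_h) * h // out_h
    col_idx = np.arange(new_w) * w // new_w
    resized = crop[row_idx][:, col_idx]

    # Paste the resized silhouette into the center of the output frame.
    out = np.zeros((out_h, out_w), dtype=np.uint8)
    left = (out_w - new_w) // 2
    out[:, left:left + new_w] = (resized > 0).astype(np.uint8)
    return out

def stack_sequence(masks) -> np.ndarray:
    """Stitch per-frame silhouettes into one (frames, height, width) array."""
    return np.stack([normalize_silhouette(m) for m in masks], axis=0)
```

Calling `stack_sequence` on a list of per-frame masks yields a single array that could serve as the model input; the real input layout expected by the NB model is not stated in the source.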

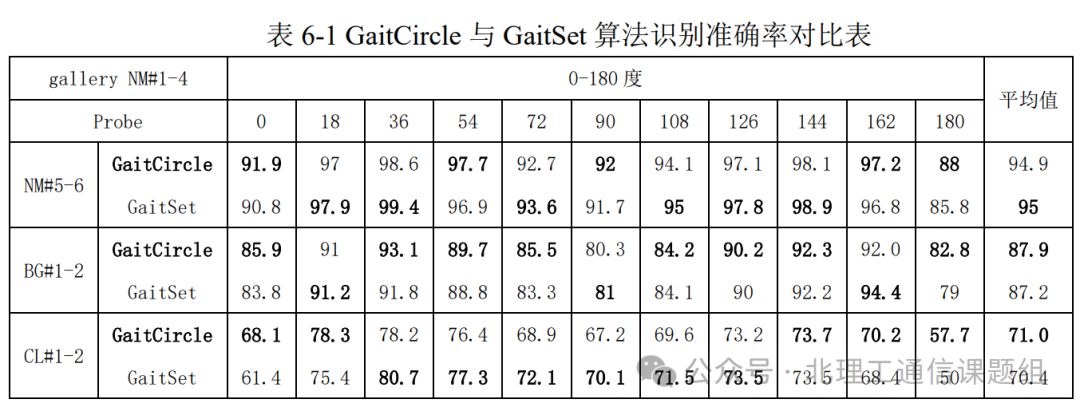

This study tested the gait recognition accuracy of the NB model on the CASIA-B dataset. CASIA-B is a large-scale, multi-view gait recognition dataset containing 124 subjects (called samples below), each with 10 gait sequences: 6 normal walking sequences (NM), 2 sequences of walking in a long coat (CL), and 2 sequences of walking with a backpack (BG). CASIA-B emphasizes changes in viewing angle, with each walking sequence recorded from 11 different angles. 74 samples from the dataset were used for training, while the remaining 50 samples were used for testing.

Overall process of the gait recognition algorithm

In the test set, the first four normal walking sequences of each sample were used as the gallery set. To study the system’s performance under different human silhouettes, three probe sets were defined: the last two sequences of normal walking, two sequences of walking in a long coat, and two sequences of walking with a backpack. Considering the impact of angles on recognition performance, this study conducted separate tests at each angle to verify the recognition accuracy.
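The gallery and probe evaluation described above can be illustrated with a small rank-1 accuracy sketch. It assumes each sequence has already been reduced to a feature vector and that matching uses Euclidean distance to the nearest gallery entry; the source does not state the distance metric, and `rank1_accuracy` is a hypothetical helper written for this example.

```python
import numpy as np

def rank1_accuracy(gallery_feats, gallery_ids, probe_feats, probe_ids) -> float:
    """Fraction of probe sequences whose nearest gallery feature
    (Euclidean distance, an assumption) has the same identity."""
    g = np.asarray(gallery_feats, dtype=np.float64)
    p = np.asarray(probe_feats, dtype=np.float64)

    # Pairwise distances with shape (num_probes, num_gallery).
    dists = np.linalg.norm(p[:, None, :] - g[None, :, :], axis=2)

    # Identity of the closest gallery entry for every probe.
    nearest = np.argmin(dists, axis=1)
    predicted = np.asarray(gallery_ids)[nearest]

    return float(np.mean(predicted == np.asarray(probe_ids)))
```

To test each angle separately, the same function could be called once per probe angle, passing only the probe features recorded at that angle.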

The following table was created based on the above test data, containing recognition accuracy data for the NB model used in the gait recognition system GaitCircle designed in this paper, compared with the GaitSet model under the same conditions. NM indicates normal walking, BG indicates walking with a backpack, and CL indicates walking in a coat.

In addition to testing the gait recognition accuracy, this study also evaluated the real-time performance of gait recognition. For single-person gait recognition, preprocessing took 58 ms per frame, model inference took 77 ms, and post-processing took 0.73 s.
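As a rough worked example of what these timings imply, the sketch below converts the per-frame preprocessing time into a frame rate and adds up a latency budget for one sequence. It assumes that inference and post-processing each run once per sequence and that the stages run one after another; the source does not say either, and the 30-frame sequence length in the usage note is purely hypothetical.

```python
# Timings reported in the article, in milliseconds.
PREPROCESS_MS_PER_FRAME = 58.0
INFERENCE_MS = 77.0
POSTPROCESS_MS = 730.0  # 0.73 s

def preprocessing_fps(ms_per_frame: float = PREPROCESS_MS_PER_FRAME) -> float:
    """Frames per second that preprocessing alone can sustain."""
    return 1000.0 / ms_per_frame

def end_to_end_ms(num_frames: int) -> float:
    """Sequential latency for one sequence, assuming inference and
    post-processing each run once per sequence (an assumption)."""
    return num_frames * PREPROCESS_MS_PER_FRAME + INFERENCE_MS + POSTPROCESS_MS
```

Under these assumptions, `preprocessing_fps()` is about 17.2 frames per second, and `end_to_end_ms(30)` gives 2547 ms for a hypothetical 30-frame sequence, with preprocessing as the dominant cost.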

Finally, this study also conducted real-time recognition tests, where the gait recognition process involved capturing video with the camera on the V853 development board and performing real-time gait recognition to output pedestrian identities. Before testing, the gait recognition program was set to auto-start in the V853 Tina Linux environment, primarily by adding the command to run the gait recognition program in the /etc/profile file. The following video shows the process of real-time gait recognition testing, where gait features of 20 individuals have been pre-entered into the gait information database.

All content in this article is reproduced from https://mp.weixin.qq.com/s/AxUeqC-8ogck7qd2Lgo92A