Compared to large model applications such as prompt engineering and fine-tuning, AI agents are distinctive in that they not only advise users but also directly participate in decision-making and execution. The core of this advancement is that the critical task-planning step is entrusted entirely to the large AI model. This rests on a premise: large AI models possess deep insight into and perception of the world, rich memory storage, efficient task decomposition and strategy optimization, continuous self-reflection and internal contemplation, and the skill to use various tools flexibly.

Humans today communicate with large models through dialogue, which is equivalent to the large model having only ears and a mouth: it can receive and produce textual information, but it lacks “eyes and limbs”. Under this limitation, the large model is more like a “brain in a vat”. In many scenarios, it can only serve as an advisor and cannot play a decisive role in how events unfold. Any discussion of the unique value of AI agents therefore has to start from this essential difference from large language models.

For large AI models to simulate human intelligence in complex real-world interactions, they must not only process information but also perceive the environment, make decisions, and execute tasks. Large AI models need to combine real-world interaction with sensory-motor prediction to reach a higher level of artificial intelligence.

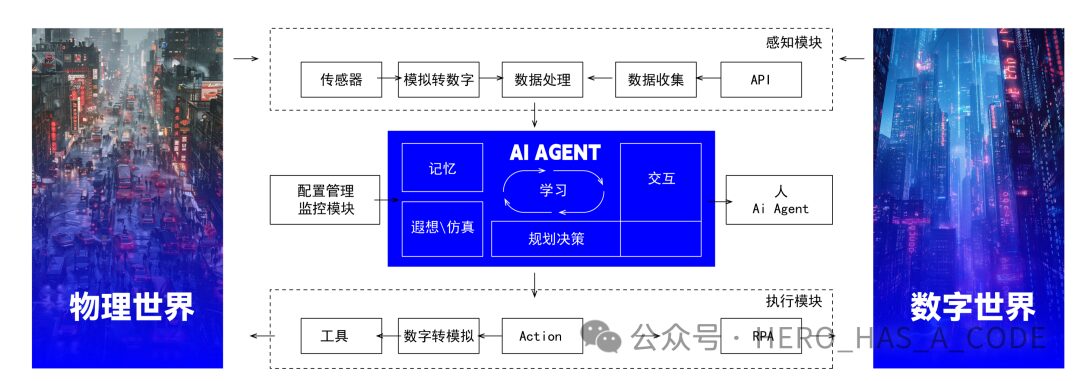

First, the AI agent perceives its environment by receiving data from the external world (such as environmental sensing, user input, etc.). Through various sensors and IoT devices, AI can obtain information from the physical world, and through API interfaces, AI can acquire information from the digital world. This is analogous to human sensory organs and forms the foundation of the agent’s connection with the world.

After processing and analyzing this data, AI needs to have a certain memory capacity to compare the current environmental information with historical decisions. The AI agent needs to have decision-making capabilities, able to plan the next actions based on the current environment and its built-in goals, and simulate the possible outcomes of decisions in a simulated environment. This is similar to the thought process of the human brain, involving understanding, planning, and problem-solving abilities.

After making a decision, the AI agent needs to translate that decision into actual actions, which may involve controlling physical devices through mechanical actions or interacting with other systems through APIs and RPA. The results of the execution will be used as new inputs, forming a closed-loop feedback system to ensure that the agent can adapt and optimize its behavior.
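The perceive, decide, act loop described above can be sketched in a few lines. This is a minimal illustration, not a real agent: the `ThermostatAgent` class, its thresholds, and the dictionary-based environment are all hypothetical stand-ins chosen to keep the closed feedback loop visible.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent (hypothetical): perceives a temperature, decides, acts, remembers."""
    target: float
    history: list = field(default_factory=list)  # memory of (observation, action)

    def perceive(self, environment: dict) -> float:
        # Perception: read one value from the environment.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Decision: compare the observation with the built-in goal.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Execution: the action changes the environment.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta

    def step(self, environment: dict) -> str:
        observation = self.perceive(environment)
        action = self.decide(observation)
        self.act(action, environment)
        self.history.append((observation, action))
        return action


def run(agent: ThermostatAgent, environment: dict, max_steps: int = 20) -> dict:
    # Closed loop: the result of each action becomes the next observation.
    for _ in range(max_steps):
        if agent.step(environment) == "idle":
            break
    return environment


final_env = run(ThermostatAgent(target=21.0), {"temperature": 17.0})
```

In a real agent, `decide` would be backed by a large model and `act` by API or device calls; the loop structure is the part this sketch is meant to show.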

The AI agent is not just a tool for processing information but an intelligent entity with autonomous learning, adaptation, and innovation capabilities, able to self-optimize in complex and dynamic environments and effectively achieve its goals.

Next, we will break down the main modules of the AI agent: the perception module, configuration management and monitoring module, memory module, planning module, imagination/simulation module, native interaction module, learning module, and execution module.

1. Perception Function

In artificial intelligence systems, the perception module plays a crucial role. It serves as the bridge between AI and the external world, responsible for capturing, processing, and interpreting signals in the environment. This module simulates human senses such as vision, hearing, and touch, enabling AI to “perceive” the surrounding world, understand the environment, and respond accordingly.

The perception module collects information through various sensors and data interfaces. These sensors can include cameras, microphones, temperature sensors, humidity sensors, GPS locators, etc., used to capture images, sounds, temperature, location, and other information. In the digital environment, data acquisition interfaces may involve web crawlers, API calls, database queries, etc., to obtain text, numbers, and other types of data.

The raw data collected usually needs to undergo preprocessing before it can be used for subsequent analysis and understanding. Preprocessing steps may include noise removal, data normalization, feature extraction, etc. For example, preprocessing in image recognition may include resizing images, adjusting contrast, edge detection, etc., to better identify objects in the images. In natural language processing (NLP), preprocessing may involve tokenization, removing stop words, part-of-speech tagging, etc., to extract useful information.
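As a rough illustration of the preprocessing steps mentioned above, the sketch below shows tokenization with stop-word removal for text and min-max normalization for numeric sensor readings. The function names and the tiny stop-word list are assumptions made for this example; production systems typically rely on dedicated NLP or signal-processing libraries.

```python
import re

# Illustrative stop-word list only; real lists are much longer.
STOP_WORDS = {"the", "a", "an", "is", "of", "to", "and", "in"}


def preprocess_text(text: str) -> list[str]:
    """Lowercase, split into alphanumeric tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def min_max_normalize(values: list[float]) -> list[float]:
    """Rescale readings to the range [0, 1]; constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


tokens = preprocess_text("The temperature of the room is rising.")
scaled = min_max_normalize([10.0, 20.0, 30.0])
```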

After preprocessing, the data needs to be parsed and understood through more advanced analysis. This step may involve machine learning models and algorithms, such as deep learning, pattern recognition, etc. Through these techniques, AI can identify objects in images, understand the meaning of voice commands, analyze the sentiment of texts, etc. These capabilities enable AI to extract meaningful information from raw data and convert it into knowledge that can be used for decision-making and action.

For example, in autonomous driving, artificial intelligence can use cameras, lidar, and microphones to collect information about the surrounding environment, utilizing image recognition and object detection technologies to identify vehicles, pedestrians, traffic signs, etc., to achieve safe driving.

2. Configuration Management and Monitoring Module

Core functions:

- Agent generation strategy: Combining random combination strategies and utilizing real-world personality statistics, psychology, and behavioral analysis system data to create diverse AI agent profiles. These methods ensure the authenticity and diversity of agents while enhancing the system’s ability to simulate complex social interactions.
- Definition and management of agent roles: Setting and managing the characteristics of AI agent roles, including their goals, capabilities, knowledge base, and behavior patterns. This allows each AI agent to play a role in specific environments based on its unique profile, closely aligning its thoughts and actions with the user’s real needs, while also increasing the system’s flexibility and diversity.
- Evaluation testing and AI value alignment: Through continuous testing and feedback loops, ensure that the behavior of AI agents aligns with human values and goals, avoiding outcomes that are detrimental to users or society. By continuously evaluating performance, the AI system is fine-tuned to enhance its adaptability, accuracy, and user satisfaction.
- Manual fine-tuning: The manual fine-tuning function allows administrators to directly intervene and adjust the AI agent’s neural network and knowledge system, enabling detailed adjustments and optimizations of the AI’s behavior and decision logic for specific problems or scenarios.
- Performance monitoring and anomaly handling: Real-time monitoring of the AI agent’s operational status, promptly identifying and resolving performance declines, erroneous behavior, or anomalies to ensure stable system operation. This includes tracking key performance indicators such as the AI agent’s response time, accuracy, and resource consumption.
- Security management: Ensuring the security of the AI agent during data processing and decision-making, preventing risks such as data leakage, malicious attacks, and abuse.

3. Memory Module

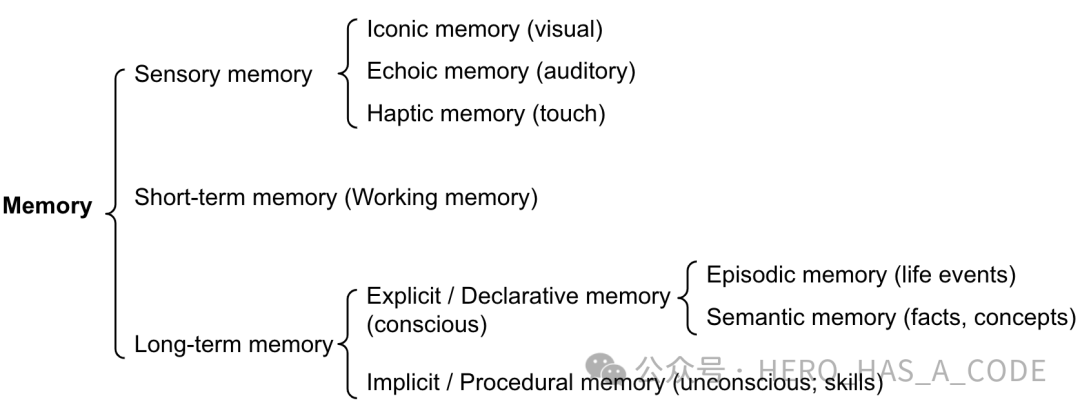

Sensory memory is the first stop for AI agents processing raw input data, similar to human sensory information processing. It can briefly retain sensory data from the external environment, such as visual, auditory, or tactile information. Although the duration of this type of memory is very short, only a few seconds, it forms the basis for the agent’s rapid response to complex environments.

Short-term memory or working memory in AI is equivalent to the model’s internal memory, processing the current flow of information. This type of memory is similar to human consciousness processing, with a limited capacity, often considered to revolve around seven items of information (according to Miller’s theory), and can last for 20 to 30 seconds. In large language models (such as Transformer models), the capacity of working memory is limited by its finite context window, which determines the amount of information the AI can directly “remember” and process.
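To make the context-window limit concrete, here is a minimal sketch of a working memory that evicts the oldest messages once a token budget is exceeded. The `WorkingMemory` class is hypothetical, and counting whitespace-separated words is only a stand-in for a real tokenizer.

```python
from collections import deque


class WorkingMemory:
    """Bounded buffer of recent messages, loosely mimicking a context window."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.messages = deque()
        self.used = 0

    @staticmethod
    def count_tokens(text: str) -> int:
        # Assumption: whitespace-separated words approximate tokens.
        return len(text.split())

    def add(self, text: str) -> None:
        self.messages.append(text)
        self.used += self.count_tokens(text)
        # Evict the oldest messages until the budget is respected,
        # but always keep the most recent message.
        while self.used > self.max_tokens and len(self.messages) > 1:
            oldest = self.messages.popleft()
            self.used -= self.count_tokens(oldest)

    def context(self) -> list[str]:
        return list(self.messages)


memory = WorkingMemory(max_tokens=5)
for message in ["a b c", "d e", "f g"]:
    memory.add(message)
```

After the third message the budget of five is exceeded, so the oldest message is dropped; anything the agent must keep beyond this window has to live in long-term memory.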

Long-term memory provides the agent with nearly unlimited information storage space, allowing it to store and recall knowledge and experiences over extended periods. Long-term memory is divided into explicit memory and implicit memory subtypes. Explicit memory encompasses memories of facts and events that can be consciously recalled, including semantic memory (facts and concepts) and episodic memory (events and experiences). Implicit memory includes skills and habits, such as riding a bike or typing, which are results of unconscious learning.

The long-term memory of AI agents is typically implemented through external databases or knowledge bases, enabling the agent to quickly retrieve relevant information when needed. The challenge of this external vector storage implementation lies in how to efficiently organize and retrieve stored information. For this purpose, approximate nearest neighbor search (ANN) algorithms are widely used to optimize the information retrieval process, significantly improving retrieval speed even at the cost of some accuracy.
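The retrieval step can be sketched with a tiny in-memory vector store. Note that this example performs an exact, brute-force cosine-similarity search; an ANN index would replace the `search` method in a large store and trade a little accuracy for speed. The `VectorMemory` class and the hand-written two-dimensional vectors are assumptions for illustration; real systems obtain vectors from an embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Hypothetical long-term memory: stores (vector, text) pairs."""

    def __init__(self):
        self.items = []

    def add(self, vector: list[float], text: str) -> None:
        self.items.append((vector, text))

    def search(self, query: list[float], k: int = 1) -> list[str]:
        # Exact search: score every stored item, return the k most similar texts.
        ranked = sorted(
            self.items,
            key=lambda item: cosine_similarity(query, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


store = VectorMemory()
store.add([1.0, 0.0], "user prefers metric units")
store.add([0.0, 1.0], "meeting moved to Friday")
closest = store.search([0.9, 0.1], k=1)
```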

The design of the memory module has a decisive impact on the performance of the AI agent. An effective memory system not only enhances the agent’s ability to process and store information but also enables it to learn from past experiences, thus adapting to new environments and challenges. Additionally, research on memory modules raises deep questions, such as how to balance memory capacity with retrieval efficiency and how to achieve memory persistence and reliability. In the future, with the continuous advancement of AI technology, we can expect more efficient and flexible memory modules that provide agents with stronger learning and adaptation capabilities, thus unleashing greater potential in various complex environments.

4. Planning Function

Goal Setting and Analysis

Before formulating any action plan, it is essential to clarify the goals of the AI system. These goals may be predetermined or dynamically generated based on real-time data and environmental changes. Once the goals are established, the decision and planning module will analyze the information provided by the cognitive module, including the state of the environment, goal conditions, available resources, etc., to devise the optimal path to achieve the goals.

Understanding and Predicting the Environment

The decision and planning module must have a deep understanding of the environment, including the current state and possible changes of the environment. In uncertain and dynamically changing environments, the module needs to assess external changes and how various factors impact future states. This challenge requires AI systems to utilize advanced data analysis techniques, machine learning models, and algorithms to conduct in-depth analyses of vast historical data to predict potential changes in future environmental states. This capability is particularly critical in highly uncertain areas such as climate change and stock market fluctuations. By deeply understanding the environment and accurately predicting it, AI can consider potential risks and opportunities when making decisions and planning, thereby formulating more robust action strategies.

Resource Consumption and Tool Evaluation

Sound planning always operates under resource constraints. In the decision-making process, the AI agent must comprehensively evaluate multiple factors, including resource consumption, tool performance, and the costs required to execute tasks.

The AI agent needs to conduct a detailed analysis of available resources, similar to how humans compare prices, performance, and functions before purchasing goods. Before executing tasks, AI must evaluate the resource consumption of different options. For example, when performing mathematical operations, AI needs to consider whether to use a local calculator, write Python code to perform calculations, or directly leverage the computational power of neural networks; these methods may differ significantly in resource consumption and execution time. Choosing the most suitable tool not only affects the speed and efficiency of calculations but also relates to the overall system’s energy consumption and cost-effectiveness.

Additionally, the AI agent needs to evaluate different AI models to understand their performance and resource consumption levels in various scenarios. The AI agent should be familiar with the characteristics of each model, such as their performance in specific tests, their ability to solve particular problems, and the memory and energy consumption required during reasoning, thus treating AI large models as commonly used tools.
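One simple way to express this kind of comparison is a weighted cost over the candidate tools, restricted to those that meet an accuracy requirement. The sketch below is an assumption-laden illustration: the `ToolOption` fields, the weights, and every number in the example list are made up for demonstration, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolOption:
    name: str
    cost: float       # hypothetical monetary or energy cost per call
    latency_s: float  # hypothetical expected latency in seconds
    accuracy: float   # hypothetical expected accuracy in [0, 1]


def choose_tool(options, min_accuracy, cost_weight=1.0, latency_weight=1.0):
    """Pick the cheapest option (weighted cost + latency) that is accurate enough."""
    feasible = [o for o in options if o.accuracy >= min_accuracy]
    if not feasible:
        raise ValueError("no tool meets the accuracy requirement")
    return min(
        feasible,
        key=lambda o: cost_weight * o.cost + latency_weight * o.latency_s,
    )


options = [
    ToolOption("local_calculator", cost=0.0, latency_s=0.01, accuracy=1.0),
    ToolOption("python_code", cost=0.1, latency_s=0.5, accuracy=1.0),
    ToolOption("neural_network", cost=1.0, latency_s=2.0, accuracy=0.8),
]
best = choose_tool(options, min_accuracy=0.99)
```

With these illustrative numbers the local calculator wins for exact arithmetic; changing the weights or the accuracy threshold changes the choice, which is the point of evaluating tools before execution.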

Decision-Making

Based on the understanding of goals and the environment, the decision and planning module will evaluate different action plans. This process involves weighing the pros and cons of various options, risks and benefits, and their likelihood of achieving the goals. In many cases, optimization algorithms are required to find the optimal or near-optimal solution, which may include heuristic search, dynamic programming, Monte Carlo tree search, and other methods.

The diversity of AI planning capabilities is key to its ability to tackle complex tasks. We can roughly divide it into two categories: feedback-independent planning and feedback-based planning.

- Feedback-independent planning is typically used when the environment is relatively stable and predictable. For example, single-path reasoning executes tasks along a predetermined path, suitable for scenarios where outcomes are foreseeable. In contrast, multi-path reasoning constructs a decision tree or graph, providing alternative options for different situations, increasing the flexibility of decisions and the ability to respond to unexpected events.
- Feedback-based planning is suitable for scenarios that require dynamic adjustments based on environmental feedback. This type of planning utilizes real-time data and feedback to reassess and adjust planning strategies to adapt to environmental changes. Feedback can come from objective data of task execution results or subjective assessments or be provided by auxiliary models.
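The difference between the two categories can be illustrated with a toy grid world. In the sketch below the agent first plans a path without knowing about obstacles (feedback-independent), then replans whenever a step fails (feedback-based). The grid, the breadth-first planner, and the function names are all assumptions made for this example.

```python
from collections import deque


def plan_path(start, goal, blocked, size):
    """Breadth-first search on a size x size grid; returns a list of cells or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        x, y = path[-1]
        if (x, y) == goal:
            return path
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if inside and nxt not in blocked and nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def execute_with_feedback(start, goal, hidden_obstacles, size=4):
    """Follow the plan step by step; replan when the environment reports a failure."""
    known = set()  # obstacles discovered so far
    position = start
    replans = 0
    path = plan_path(position, goal, known, size)
    while path is not None and position != goal:
        next_cell = path[1]
        if next_cell in hidden_obstacles:
            # Feedback: the step failed, so update knowledge and replan.
            known.add(next_cell)
            replans += 1
            path = plan_path(position, goal, known, size)
        else:
            position = next_cell
            path = path[1:]
    return position, replans


result = execute_with_feedback((0, 0), (3, 0), hidden_obstacles={(1, 0)})
```

Here the first plan runs straight into the hidden obstacle, and a single replan routes around it. If every route turns out to be blocked, the planner returns `None` and the agent stops where it is.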

Planning and Task Allocation

After determining the optimal action plan, the decision and planning module needs to translate this plan into specific planning and task allocation. This step is particularly important, especially in multi-agent systems, where careful consideration must be given to how to efficiently coordinate the behaviors of various agents to ensure collective actions are coordinated and efficient. The task allocation process considers individual capabilities, resource distribution, and timing arrangements, ensuring the smooth implementation of the plan.

Chain of Thought (CoT) and Tree of Thoughts (ToT) are advanced approaches for solving complex problems with AI. They simulate the human thought process by breaking a large task into multiple smaller tasks and then solving these smaller tasks step by step to reach the final goal; Tree of Thoughts additionally explores several candidate reasoning steps at each stage instead of a single chain. This approach not only improves the efficiency of problem-solving but also increases the innovativeness of solutions.
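As a rough sketch of the tree-style search, the code below expands several candidate “thoughts” per step, scores them, and keeps only the best few (a breadth-first search with a beam). In an actual Tree of Thoughts setup, `expand` and `score` would be calls to a large model; here they are plain functions over integers, chosen only so the example runs on its own.

```python
def tree_of_thoughts(root, expand, score, is_goal, beam_width=2, max_depth=5):
    """Breadth-first search over partial solutions, keeping the top candidates."""
    frontier = [[root]]
    for _ in range(max_depth):
        candidates = []
        for path in frontier:
            for thought in expand(path[-1]):
                new_path = path + [thought]
                if is_goal(thought):
                    return new_path
                candidates.append(new_path)
        # Evaluate the newest thought of each path and prune to the beam width.
        candidates.sort(key=lambda p: score(p[-1]), reverse=True)
        frontier = candidates[:beam_width]
        if not frontier:
            break
    return None


# Toy problem (an assumption for illustration): reach 14 from 1 using +3 or *2.
TARGET = 14
solution = tree_of_thoughts(
    root=1,
    expand=lambda n: [n + 3, n * 2],
    score=lambda n: -abs(TARGET - n),
    is_goal=lambda n: n == TARGET,
)
```

A chain-of-thought run corresponds to `beam_width=1`: a single line of reasoning with no alternatives kept in reserve.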

Additionally, the strategy of combining large models with planning demonstrates a new approach to integrating AI technology with traditional planning methods. By translating complex problems into PDDL (Planning Domain Definition Language) and then solving them with classical planners, this strategy can significantly enhance the efficiency and feasibility of planning while ensuring the quality of solutions.

Dealing with Uncertainty and Dynamic Adjustments

The decision and planning module must also possess the ability to cope with environmental uncertainty and dynamic changes. This means that the AI system must be able to monitor environmental changes and adjust its action plans based on real-time information. In certain cases, this may involve real-time decision adjustments or replanning when encountering unexpected situations. The self-reflection and dynamic adjustment capabilities of AI are central to its adaptability.

ReAct and Reflexion technologies demonstrate how AI can evaluate results after actions and self-optimize based on these evaluations by integrating feedback loops into the planning process. Chain of Hindsight (CoH) fine-tunes future planning strategies by analyzing past actions and results, enhancing the precision and efficiency of decision-making.
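The feedback loop behind these techniques can be sketched generically: act, evaluate the result, store a reflection, and let the next attempt use all reflections so far. This is only a loose illustration in the spirit of Reflexion, not the published method; the number-guessing task and the structured (rather than verbal) reflections are assumptions that keep the example runnable without a model.

```python
def reflexion_loop(actor, evaluator, reflect, max_trials=5):
    """Try, evaluate, reflect, and retry with the accumulated reflections."""
    reflections = []
    for trial in range(1, max_trials + 1):
        attempt = actor(reflections)
        ok, feedback = evaluator(attempt)
        if ok:
            return attempt, trial
        reflections.append(reflect(attempt, feedback))
    return None, max_trials


SECRET = 7  # hidden from the actor; only the evaluator sees it


def actor(reflections, low=1, high=10):
    # Narrow the search range using lessons from earlier failed attempts.
    for kind, value in reflections:
        if kind == "too_low":
            low = max(low, value + 1)
        else:
            high = min(high, value - 1)
    return (low + high) // 2


def evaluator(guess):
    if guess == SECRET:
        return True, "correct"
    return False, "too_low" if guess < SECRET else "too_high"


def reflect(attempt, feedback):
    # A real system would store a natural-language lesson here.
    return (feedback, attempt)


answer, trials = reflexion_loop(actor, evaluator, reflect)
```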

With the integration and application of more cutting-edge technologies, AI agents will make greater strides in complexity management, decision optimization, and adaptive adjustments, bringing revolutionary changes to various industries.

5. Imagination/Simulation Module

The imagination/simulation module lets the AI agent “dream”, that is, run internal simulations detached from the real world. In these “dreams”, the AI agent may simulate a series of previously unencountered challenge scenarios, such as the entire process of establishing a base on Mars or designing an ecosystem entirely managed by AI. It may also “dream” of interactions with new technologies or unknown life forms it may encounter in the future. In this process, the AI will not only attempt to find solutions but also predict potential problems and explore how to optimize existing action plans.

Through this approach, “Imagination/Simulation” becomes a powerful learning tool. AI can test and improve its decision-making algorithms in dreams without worrying about the consequences of failures in the real world. This internal simulation process allows AI to be prepared to respond to actual situations before they occur. Furthermore, by exploring various possibilities in dreams, AI can discover new solutions and innovative methods that may never be reached in traditional learning environments.

Models like Sora, which generate videos from text, provide the foundation for the AI “Imagination/Simulation” module. They support the development of high-performance simulators for both physical and digital worlds and offer foundational support in applications such as game development, AR, and VR, marking a significant step in the evolution of AI towards higher levels of intelligence.

It not only allows AI to self-improve and evolve in a safe environment but also enables AI to understand and predict the behaviors of complex systems more profoundly. Future AIs will not merely be tools for executing tasks; they will become intelligent entities capable of self-reflection, innovation, and dreaming, interacting and coexisting with human society in entirely new ways.

6. Native Interaction Module

The interaction module serves as the native communication tool for the AI agent, akin to human speech, eye contact, and body language in natural communication. It primarily handles direct communication between AI and users or other systems, ensuring both parties can effectively and accurately understand each other’s intentions and needs. This module typically encompasses natural language processing technology to parse the meanings of human language and generate responsive language output; it may also include visual and auditory recognition technologies, enabling AI to understand non-verbal communication signals.

Through natural language processing, AI can understand and generate human language, including written and spoken forms, allowing for natural communication with users. Computer vision enables AI to “see” and comprehend visual information, recognizing users’ gestures, expressions, and other non-verbal signals. Speech recognition and generation technologies provide users with intuitive and convenient interaction methods. Multimodal interaction design integrates text, speech, and visual information, enhancing the naturalness and flexibility of interactions. Contextual understanding capabilities allow AI to make more precise and personalized responses based on dialogue history and user preferences. The interaction module enables AI to communicate naturally and directly with humans or other AIs, gaining more information during communication, achieving a fuller understanding of tasks, and making better judgments and plans.

7. Learning Module

By combining the functions of the planning module and the learning module, a highly flexible and adaptive system can be formed. In such a system, the planning module not only formulates action plans based on the current learning model but also adjusts plans based on actual results and feedback during execution. Meanwhile, the learning module analyzes the effectiveness of planning execution, adjusting its learning algorithms and internal models to optimize future planning and decision-making processes.

On the path to a general agent, it is essential first to establish stable performance in specific scenarios, and then continuously expand the interaction between the learning module and the planning module so that the agent can adapt to a broader range of environments and tasks. For instance, learning mathematics often begins with memorizing the multiplication table. If every math problem requires calculation from scratch, it is akin to activating the planning module in the brain each time, which consumes considerable energy. Through memorization, we can store common mathematical operations in the memory module for quick recall when needed, saving energy. With continued practice, these operations become ingrained in our neural pathways, allowing quick answers without complex thought. For the AI agent, this process is equivalent to fine-tuning its internal model through experiential learning and repeated practice, so that it executes tasks more efficiently and solidifies commonly used planning capabilities as internal tools.

The learning of AI agents also involves learning to use external tools to complete specific tasks with lower energy consumption. When AI first encounters a new tool or another AI agent, it must understand the basic functionalities and operational methods of this new “object”. This step is similar to the exploratory phase humans undergo when first learning to use a tool. AI learns through observation, experimentation, and drawing lessons from past experiences, gradually establishing a preliminary understanding of the behaviors of tools or partners. This process may involve considerable trial and error, but it is these trials that provide valuable learning opportunities for AI. Through continuous practice and environmental feedback, AI begins to form more complex strategies to efficiently utilize tools or collaborate with other AIs. It may discover that certain combinations of tools can solve previously insurmountable problems, or that collaboration with specific AI agents can significantly enhance the efficiency and quality of task completion.

AI’s learning extends beyond single tasks or environments, demonstrating an understanding of the learning strategies themselves, learning how to learn effectively. They begin to identify which learning methods are most effective and which need adjustment, and this self-reflective capability allows AI to optimize in response to ever-changing challenges. Furthermore, when AI can share the knowledge and experience it has acquired, the progress of the entire AI community will accelerate significantly. This knowledge-sharing mechanism not only speeds up the growth of individual AIs but also propels the advancement of the entire field. When AI systems master how to flexibly utilize various tools and resources, as well as how to collaborate efficiently with other intelligent entities, they will be able to tackle more complex problems and tasks, exhibiting unprecedented innovation and problem-solving capabilities.

8. Execution Module

The tool usage and collaboration capabilities of AI agents are a topic of great concern. Humans are unique because we can create, modify, and utilize external tools to complete tasks that exceed our physiological capabilities. The use of tools may be the most significant feature that distinguishes humans from animals. Nowadays, researchers are dedicated to endowing AI agents with similar capabilities to expand the application scope and intelligence level of models.

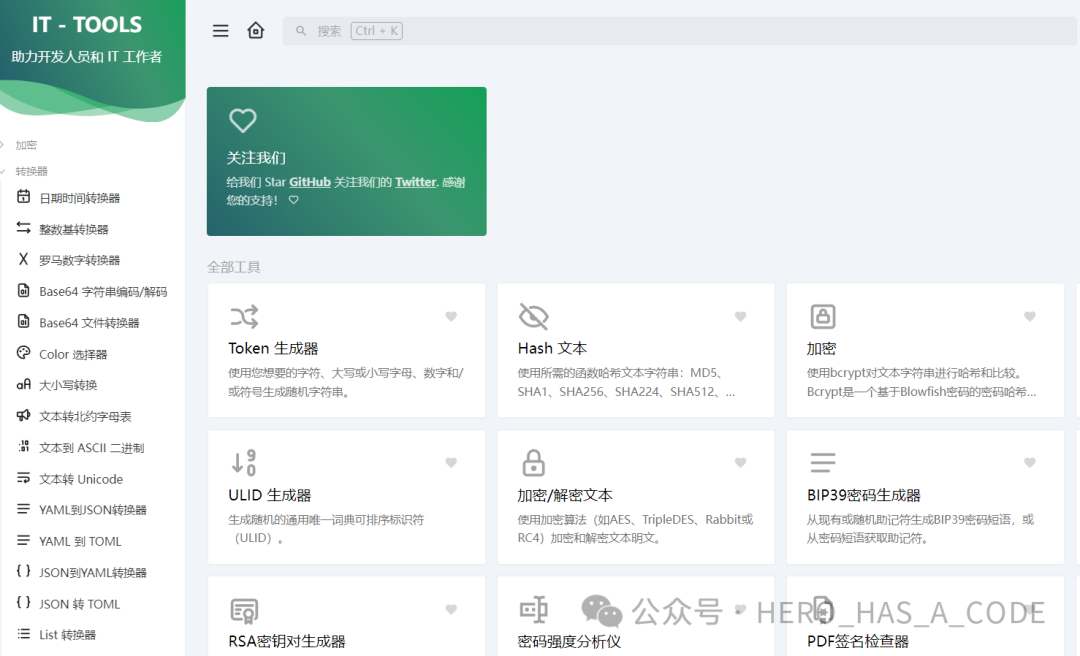

Recent studies have shown that granting large language models (LLMs) the ability to use external tools can significantly enhance their performance. For instance, some research teams have used the “Modular Reasoning, Knowledge and Language” (MRKL) system, which combines LLMs with various expert modules and enables them to call external tools such as mathematical calculators, currency converters, and weather APIs. These modules can be neural network models or symbolic models, giving LLMs more tool options to meet task requirements in different fields. For example, the following open-source project provides a range of IT tools for large models to call.

https://github.com/CorentinTh/it-tools
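The routing idea behind a MRKL-style system can be sketched as follows: a router inspects the query and either dispatches it to an expert module or falls back to the language model. Everything here is a simplified assumption. The router is a regular expression rather than a learned or LLM-based component, `fallback_llm` is a placeholder that returns a fixed string, and only one expert module (a symbolic calculator) is shown.

```python
import ast
import operator
import re

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Symbolic expert module: evaluates +, -, *, / on numbers without eval()."""

    def evaluate(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")

    return evaluate(ast.parse(expression.strip(), mode="eval").body)


def fallback_llm(query: str) -> str:
    # Placeholder for a call to a language model (hypothetical).
    return f"[LLM answer for: {query}]"


def route(query: str):
    """Send arithmetic questions to the calculator, everything else to the LLM."""
    match = re.fullmatch(r"\s*what is ([0-9+\-*/(). ]+)\?\s*", query, flags=re.IGNORECASE)
    if match:
        return "calculator", calculator(match.group(1))
    return "llm", fallback_llm(query)


math_result = route("What is 12 * (3 + 4)?")
text_result = route("Who wrote Hamlet?")
```

The design choice worth noting is that exact arithmetic is delegated to a symbolic module, so the answer does not depend on the model's own ability to calculate.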

However, despite the enormous potential that external tools bring to AI agents, practical applications also face challenges. Some studies have found that LLMs struggle with verbal math problems, which highlights the importance of knowing when and how to use external tools. Researchers have therefore proposed methods such as “Tool Augmented Language Models” (TALM) and “Toolformer” to help LLMs learn to use external tool APIs. These methods expand the training dataset with newly added API call annotations, keeping an annotation when it improves the quality of model outputs.

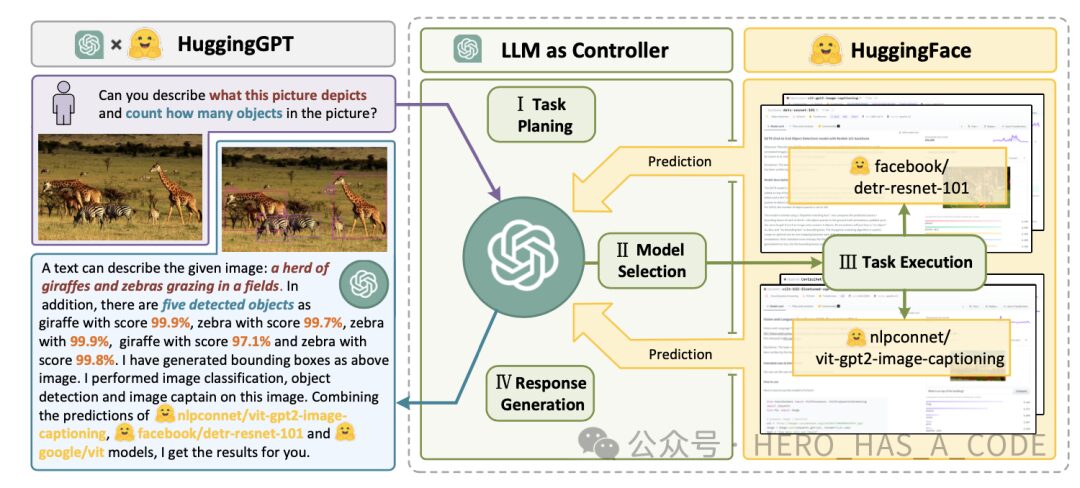

On the other hand, practical applications continue to emerge, such as ChatGPT plugins and OpenAI API function calls, which fully demonstrate the excellent potential of LLMs in utilizing external tools. For example, in April 2023, a joint team from Zhejiang University and Microsoft released HuggingGPT, which utilizes ChatGPT as a task planner to select the most suitable model based on descriptions of models on the HuggingFace platform and summarizes responses based on execution results.

Paper link: https://arxiv.org/abs/2303.17580

- Task planning: Use ChatGPT to parse the user request and break it into tasks;
- Model selection: Choose a model for each task based on the function descriptions in HuggingFace;
- Task execution: Run each task with the model chosen in the previous step and return the results to ChatGPT;
- Response generation: Use ChatGPT to fuse the results of all models and generate a response to return to the user.
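The four stages above can be sketched as a small pipeline. This is not HuggingGPT's code: the planner and responder are keyword rules and string joins standing in for ChatGPT, the model names and descriptions in `MODEL_REGISTRY` are invented, and `execute` only returns a status string instead of running a model.

```python
# Hypothetical registry: model name -> function description.
MODEL_REGISTRY = {
    "caption-model": "image captioning describe image",
    "translate-model": "translation translate text english french",
}


def plan_tasks(request: str) -> list[str]:
    """Stage 1 (stand-in for the LLM planner): split the request into tasks."""
    tasks = []
    lowered = request.lower()
    if "image" in lowered:
        tasks.append("describe image")
    if "translate" in lowered:
        tasks.append("translate text")
    return tasks


def select_model(task: str) -> str:
    """Stage 2: pick the model whose description overlaps most with the task."""
    words = set(task.split())
    return max(
        MODEL_REGISTRY,
        key=lambda name: len(words & set(MODEL_REGISTRY[name].split())),
    )


def execute(model: str, task: str) -> str:
    """Stage 3: run the task with the selected model (stubbed)."""
    return f"{model} finished '{task}'"


def respond(results: list[str]) -> str:
    """Stage 4 (stand-in for the LLM): merge all results into one reply."""
    return " | ".join(results)


def handle(request: str) -> str:
    tasks = plan_tasks(request)
    results = [execute(select_model(task), task) for task in tasks]
    return respond(results)


reply = handle("Describe this image and translate the caption")
```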

To better evaluate the performance of tool-augmented LLMs, researchers have proposed the API-Bank benchmark, which includes 53 commonly used API tools and 264 annotated dialogues containing 568 API calls. API-Bank evaluates the tool usage capabilities of agents at three levels: the ability to call APIs, the ability to retrieve APIs, and the ability to plan API calls. This benchmark provides an effective method for assessing LLMs’ tool usage abilities at different levels. Separately, ToolLLM has collected over 16,000 real-world APIs, generated corresponding tool usage evaluation benchmarks, and open-sourced a LLaMA model trained on this dataset.

Paper link: https://arxiv.org/pdf/2304.08244.pdf

In the future, the tool usage capabilities and collaboration of AI agents will become important research directions in the field of artificial intelligence. Through continuous exploration and innovation, we hope to endow AI agents with more intelligent and flexible tool usage capabilities, thereby achieving broader applications and higher levels of intelligent performance.

Summary and Reflection

From 2017 to 2021, the SaaS product market rapidly developed, with many excellent SaaS products focusing on specific functions emerging one after another. However, the integration of these outstanding single-point SaaS products with traditional private deployment applications of large enterprises has become a significant challenge faced by companies. To address this pain point, companies have begun adopting API (Application Programming Interface) and RPA (Robotic Process Automation) technologies, which enable different SaaS products to quickly connect, forming a unified IT architecture and avoiding the formation of application and data silos.

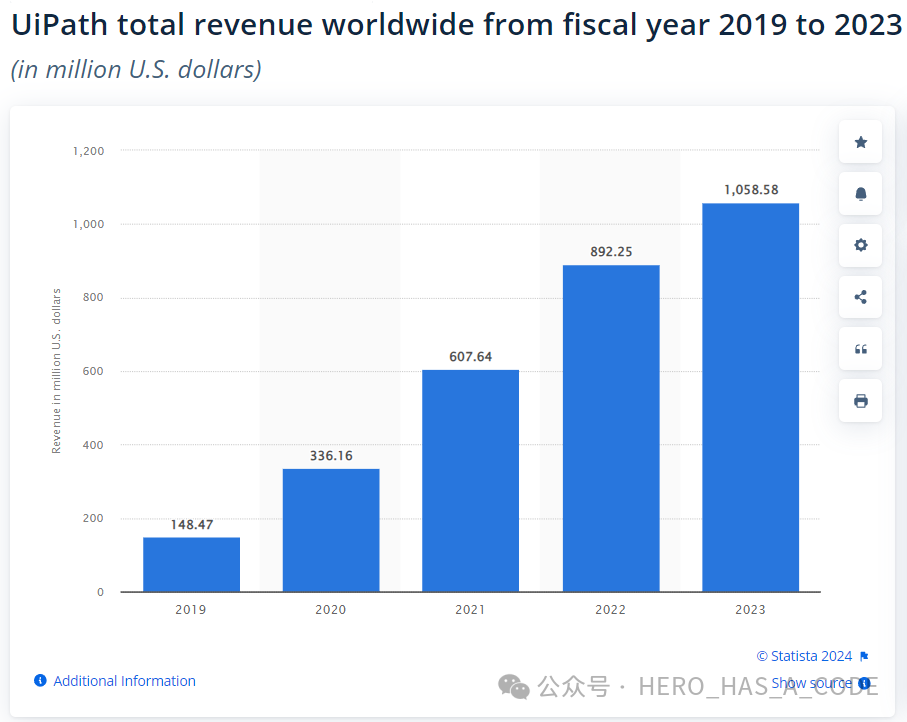

During the SaaS boom, API and RPA were not just technical tools but became a focus of the market. For example, in the API field, Salesforce acquired MuleSoft for $6.5 billion in 2018, while Zapier developed into an industry star with a valuation of over $4 billion based on just $1.3 million in financing. In the RPA field, companies like UiPath and Appian achieved rapid growth through successful IPOs. Although these companies’ revenues are still increasing significantly, their valuations have seen substantial corrections as the SaaS wave gradually recedes.

Today, in the era of large models, API and RPA technologies are endowed with deeper missions. They are no longer just bridges for connecting systems but have transformed into the “hands and feet” of AI large models, playing a more critical role in data integration, process automation, and intelligent decision support. API and RPA technologies allow AI large models to effectively utilize various existing human software and systems, such as ERP systems, enterprise chat systems, and SaaS systems, creating a new collaborative and productive system driven by agents without requiring enterprises to reinvest substantial funds to rebuild all previous software.

Through deep integration with AI technology, API and RPA can not only enhance operational efficiency for enterprises but also significantly drive innovation, bringing unprecedented competitive advantages to companies. Will the next spring for API and RPA arrive soon?