Source: Global Semiconductor Observation

Author: Wang Kaiqi

Rising storage costs, together with the more pressing imbalance between compute and bandwidth, have left PCIe (PCI Express), the interconnect technology Intel introduced two decades ago, increasingly inadequate. The industry has been waiting for a transformative, memory-centric technology, and CXL, which builds on PCIe, emerged in this context.

In 2019, Intel launched CXL, which quickly became the industry's recognized advanced interconnect standard. Its strongest competitors, Gen-Z and OpenCAPI, have since withdrawn from the race and transferred their protocols to CXL.

What exactly is this highly anticipated technology? And what do the industry's currently popular concepts of memory pooling and memory sharing mean?

Key Points of This Article:

- CXL is a brand-new, industry-recognized interconnect standard that brings a revolutionary change to server architecture.
- CXL effectively addresses the memory wall and IO wall bottlenecks.
- PCIe is the underlying foundation of CXL and is iterated and upgraded first, ahead of CXL. CXL can be seen as an enhanced version of PCIe that adds more transformative functionality.
- The pooling function of CXL 2.0 puts the memory-centric concept into practice, while CXL 3.0 adds memory sharing, enabling multiple machines to access the same memory address at the hardware level.

1

What is CXL?

CXL stands for Compute Express Link. As a new interconnect standard, it provides high-speed, efficient interconnection between CPUs and GPUs, FPGAs, or other accelerators, meeting the demands of high-performance heterogeneous computing while keeping the CPU's memory space coherent with the memory on the attached device. In short, its main advantages are very high compatibility and memory coherence.

The reason why CXL technology is worthy of anticipation is that it proposes an effective way to address the memory wall and IO wall issues that are currently troubling the industry.

△Common storage system architecture and the memory wall (Illustrated by Global Semiconductor Observation)

The memory wall and IO wall arise from the multi-level storage hierarchy of today's computing architecture. As shown in the figure, mainstream computing systems process data using an architecture that separates data storage from data processing (the von Neumann architecture). To meet speed and capacity demands, modern computing systems typically adopt a three-tier storage structure consisting of cache (SRAM), main memory (DRAM), and external storage (NAND Flash).

Whenever an application runs, data must be shuttled back and forth between these tiers, which costs considerable time and energy. The closer a memory tier sits to the computing unit, the faster it is; however, power, heat-dissipation, and chip-area constraints also make it smaller. For instance, SRAM typically responds within nanoseconds, DRAM in around 100 nanoseconds, and NAND Flash in as much as 100 microseconds. When data moves between these three tiers, the latency and bandwidth of the slower tiers drag down overall performance, creating the “memory wall” (also called the storage wall).
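To make the effect of the slower tiers concrete, here is a minimal sketch of an average-access-time estimate. The latencies are the order-of-magnitude figures quoted above (with SRAM taken as 1 ns); the hit rates and the function name `average_access_ns` are illustrative assumptions, not measurements from the article.

```python
# Order-of-magnitude latencies quoted in the article, in nanoseconds.
# SRAM = 1 ns is an assumption standing in for "the nanosecond range".
LATENCY_NS = {"SRAM": 1.0, "DRAM": 100.0, "NAND": 100_000.0}


def average_access_ns(sram_hit: float, dram_hit: float) -> float:
    """Expected latency when each miss falls through to the next tier.

    sram_hit: fraction of accesses served by the SRAM cache.
    dram_hit: fraction of SRAM misses served by DRAM; the rest go to NAND.
    """
    miss_sram = 1.0 - sram_hit
    miss_dram = 1.0 - dram_hit
    return LATENCY_NS["SRAM"] + miss_sram * (
        LATENCY_NS["DRAM"] + miss_dram * LATENCY_NS["NAND"]
    )


# Hypothetical hit rates, chosen only to show the sensitivity.
everything_fits_in_dram = average_access_ns(0.95, 1.00)
one_percent_spills_to_nand = average_access_ns(0.95, 0.99)
print(f"{everything_fits_in_dram:.0f} ns vs {one_percent_spills_to_nand:.0f} ns")
```

Under these assumed hit rates, letting just 1% of cache misses spill to NAND raises the average from about 6 ns to about 56 ns, which is the kind of drag the memory wall describes.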

The IO wall arises from external storage. When a data set is too large to fit in memory, it must be kept on external storage and fetched over network IO. Such accesses can be several orders of magnitude slower, severely hampering overall performance; this is the IO wall.

While processor performance continues to improve, the gap between memory and compute keeps widening. Industry data shows that over the past 20 years, processor performance has grown by about 55% per year, while memory performance has improved by only around 10% per year. Moreover, memory capacity scaling is under pressure as Moore’s Law slows year by year, making additional capacity increasingly expensive. With the explosion of big data and AI/ML applications, these issues have become major constraints on the performance of computing systems.
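As a rough illustration of how quickly these two growth rates diverge, the sketch below compounds them over 20 years. It assumes both rates hold constant for the whole period, which is a simplification; `relative_gap` is a hypothetical helper name.

```python
def relative_gap(cpu_rate: float, mem_rate: float, years: int) -> float:
    """Ratio of compounded processor growth to compounded memory growth."""
    return ((1.0 + cpu_rate) / (1.0 + mem_rate)) ** years


# 55% and 10% per year are the figures quoted in the article.
gap_20y = relative_gap(0.55, 0.10, 20)
print(f"processor/memory performance gap after 20 years: ~{gap_20y:.0f}x")
```

With the article's figures, the relative gap comes out on the order of 950x, so even a modest yearly difference compounds into roughly three orders of magnitude.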

2

From CXL1 to CXL3: The Wonderful Concepts of Memory Pooling and Memory Sharing

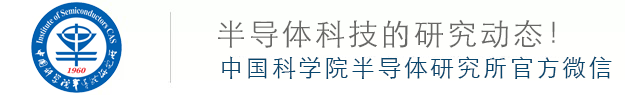

As a brand-new technology, CXL has developed rapidly. In the past four years, three versions have been published (1.0/1.1, 2.0, and 3.0), and the technology roadmap is very clear, leaving the industry full of expectations for its future.

△Image source: CXL Alliance

CXL 1.1

Direct Connection for Capacity and Bandwidth Expansion

In 2019, the first version of CXL, CXL 1.0/1.1, was released, and its direct approach to the memory wall and IO wall bottlenecks left a deep impression on the industry. Initially, CXL 1.1 addressed only the single-machine case, primarily expanding memory capacity and bandwidth within one server (Memory Expansion).

From its inception, CXL has supported three protocols: CXL.io, CXL.cache, and CXL.mem. CXL.io is an enhanced version of PCIe 5.0 that runs over the PCIe physical layer; CXL.cache lets a device coherently access and cache host memory; and CXL.mem lets the host access device-attached memory. As defined in the CXL 1.0 and 1.1 standards, these three protocols form the basis of a new way to connect hosts and devices.
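One way to see how the three protocols combine is the device classification used in the CXL specification (Type 1, Type 2, Type 3). That classification comes from the specification rather than from this article, and the names `Protocol` and `device_type` below are purely illustrative. A minimal sketch:

```python
from enum import Flag, auto


class Protocol(Flag):
    IO = auto()     # CXL.io: PCIe-based discovery, configuration, and I/O
    CACHE = auto()  # CXL.cache: device coherently caches host memory
    MEM = auto()    # CXL.mem: host accesses device-attached memory


# Protocol combinations and the device type each one corresponds to.
DEVICE_TYPES = {
    Protocol.IO | Protocol.CACHE: "Type 1 (caching accelerator, e.g. a Smart NIC)",
    Protocol.IO | Protocol.CACHE | Protocol.MEM: "Type 2 (accelerator with its own memory)",
    Protocol.IO | Protocol.MEM: "Type 3 (memory expander)",
}


def device_type(protocols: Protocol) -> str:
    """Map a set of supported protocols to a CXL device type label."""
    if Protocol.IO not in protocols:
        raise ValueError("CXL.io is required by every CXL device")
    return DEVICE_TYPES.get(protocols, "not a standard CXL device type")


print(device_type(Protocol.IO | Protocol.MEM))
```

The memory expanders discussed later in the article fall into the last category: they need only CXL.io and CXL.mem.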

In terms of innovation, CXL introduced the Flex Bus port, which can flexibly negotiate, when the link is set up, whether it runs the PCIe protocol or the CXL protocol. This helps clarify the relationship between CXL and PCIe. Because of this compatibility, CXL is easily adopted by processors that already have PCIe ports (the vast majority of general-purpose CPUs, GPUs, and FPGAs). Intel therefore treats CXL as an optional protocol running on top of the PCIe physical layer, meaning the PCIe interconnect protocol has not been discarded. Intel also plans to vigorously promote CXL on the sixth generation of the PCIe standard.

The development sequence of CXL 1.0/1.1, PCIe 5.0, CXL 2.0, PCIe 6.0, and CXL 3.0 makes the relationship between CXL and PCIe clear. PCIe is the underlying foundation of CXL and is iterated and upgraded first. CXL can be seen as an enhanced version of PCIe that adds more transformative functionality.

Starting with CXL 2.0, the technology expanded beyond single-machine scenarios and gave rise to the concept of memory pooling.

CXL 2.0

Revolution Brought by Memory Pooling

Because the CXL standard is still young, CXL 2.0 is currently the focus of market attention.

Building on CXL 1.0 and 1.1, version 2.0 added support for hot plugging, secure upgrades, persistent memory, telemetry, and RAS. Its most important additions, however, are switching, a capability that could reshape the server industry ecosystem, and the pooling of memory and accelerator cards (AI, ML, and Smart NIC).

The industry has long experimented, conceptually and technically, with device pooling: placing resources or data into a shared pool from which they can be drawn on demand. For instance, Facebook has worked on disaggregating and pooling memory, and other explorations include IBM’s CAPI and OpenCAPI, NVIDIA’s NVLink and NVSwitch, AMD’s Infinity Fabric, Xilinx’s CCIX, and Gen-Z, backed by HPE and Dell. For various reasons, these technologies did not develop well. Only when CXL 2.0 made pooling a reality did the industry regain hope.

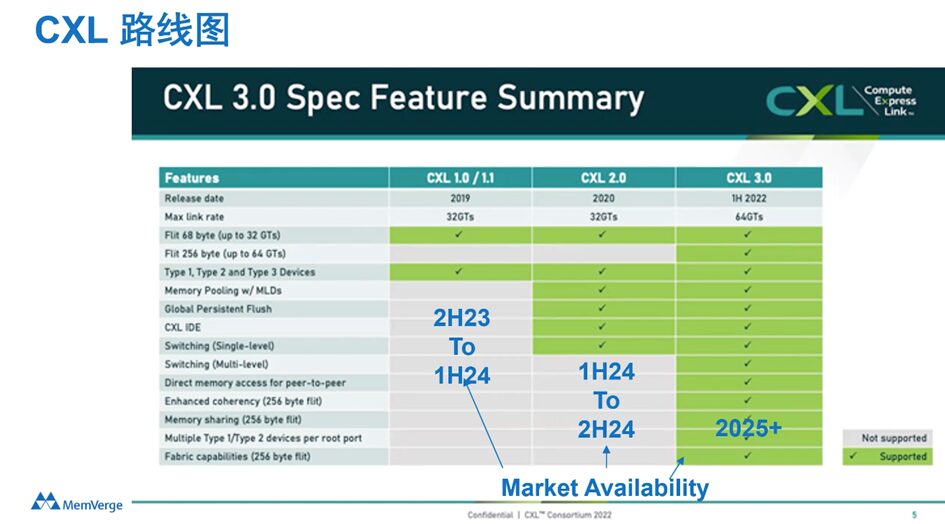

CXL 1.1 was still limited to a single node, serving as a cache-coherent interconnect between CPUs or between a CPU and PCIe devices. Version 2.0 added a single-level switch (similar to a PCIe switch), enabling multiple devices to connect to a single root port and laying the technical foundation for pooling.

△Image source: CXL Alliance

In the diagram above, H1 through Hn represent different hosts, that is, different servers, each of which can connect to multiple devices through the CXL switch. D1, D2, D3, and D4 at the bottom represent different memory devices, which are likewise connected to the hosts above through the CXL switch. The colors in the diagram indicate device ownership: for example, D1 belongs to H2, while D2 and D3 belong to H1. Each SLD (single logical device) can be assigned to only one host.
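The ownership rule in the diagram can be expressed as a small toy model. This is only a sketch of the "one SLD, one host" constraint described above; the class `CxlSwitch` and its methods are hypothetical names and do not correspond to any real CXL management API.

```python
class CxlSwitch:
    """Toy model of a single-level CXL 2.0 switch binding SLDs to hosts."""

    def __init__(self) -> None:
        self._owner: dict[str, str] = {}  # device name -> owning host name

    def bind(self, device: str, host: str) -> None:
        """Assign a device to a host; an SLD may have only one owner."""
        current = self._owner.get(device)
        if current is not None and current != host:
            raise ValueError(f"{device} is already assigned to {current}")
        self._owner[device] = host

    def unbind(self, device: str) -> None:
        """Release a device so that another host can claim it."""
        self._owner.pop(device, None)

    def devices_of(self, host: str) -> list[str]:
        return sorted(d for d, h in self._owner.items() if h == host)


# The assignments described for the diagram: D1 -> H2, D2 and D3 -> H1.
switch = CxlSwitch()
switch.bind("D1", "H2")
switch.bind("D2", "H1")
switch.bind("D3", "H1")
print(switch.devices_of("H1"))
```

Reassigning D1 to H1 would first require `switch.unbind("D1")`; binding it while H2 still owns it raises an error, mirroring the single-owner rule.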

This framework makes memory pooling possible: a memory pool can be shared across systems and devices, which greatly increases flexibility. For instance, if one machine runs short of memory, it can request space from the pool, and when it no longer needs that memory, it can return it at any time. In other words, memory close to the CPU (such as local DRAM) can be reserved for more urgent tasks, which should greatly increase memory utilization or reduce memory costs.
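The borrow-and-return behavior described above can be sketched as simple capacity accounting. This is an illustrative model only: the class `MemoryPool`, its method names, and the 1024 GB capacity are assumptions made for the example, not part of the CXL standard.

```python
class MemoryPool:
    """Toy model of a shared pool that hosts borrow from and return to."""

    def __init__(self, capacity_gb: int) -> None:
        self.capacity_gb = capacity_gb
        self.borrowed: dict[str, int] = {}  # host name -> GB currently held

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.borrowed.values())

    def borrow(self, host: str, size_gb: int) -> bool:
        """Grant the request only if the pool has enough free capacity."""
        if size_gb <= 0 or size_gb > self.free_gb:
            return False
        self.borrowed[host] = self.borrowed.get(host, 0) + size_gb
        return True

    def give_back(self, host: str, size_gb: int) -> None:
        """Return capacity to the pool, never more than the host holds."""
        remaining = max(self.borrowed.get(host, 0) - size_gb, 0)
        if remaining:
            self.borrowed[host] = remaining
        else:
            self.borrowed.pop(host, None)


pool = MemoryPool(capacity_gb=1024)  # hypothetical pool size
pool.borrow("H1", 256)               # H1 runs short and takes 256 GB
pool.borrow("H2", 512)
print(pool.free_gb)
pool.give_back("H1", 256)            # H1 no longer needs the memory
print(pool.free_gb)
```

Because capacity returns to the pool as soon as a host releases it, the same physical memory can serve different hosts at different times, which is where the utilization gain comes from.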

CXL 3.0

The Arrival of the Shared Memory Era

CXL 3.0, released in August 2022, introduced significant innovations in many respects. CXL 3.0 is built on PCIe 6.0 (versions 1.0/1.1 and 2.0 are built on PCIe 5.0), doubling the bandwidth, and it simplifies some complex parts of the standard to improve usability.

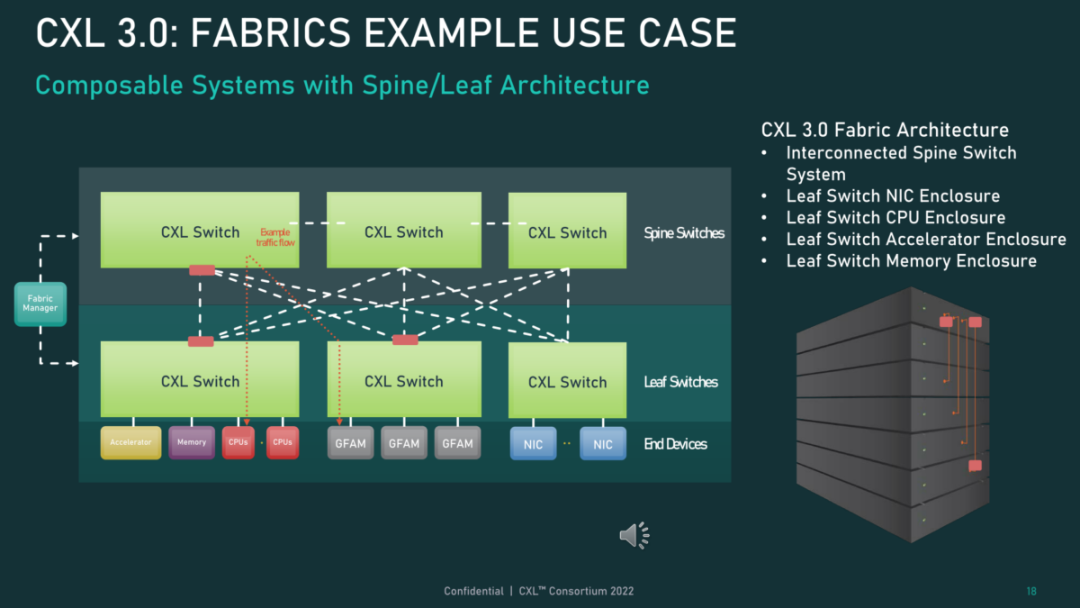

△Image source: CXL Alliance

The most critical innovations are upgrades at both the physical and logical levels. At the physical level, CXL 3.0 doubles the per-lane transfer rate to 64 GT/s. At the logical level, CXL 3.0 extends the standard to allow more complex connection topologies, so that a group of CXL devices can flexibly share and access memory. For instance, multiple switches can be interconnected, allowing hundreds of servers to interconnect and share memory.

Memory sharing is a major highlight: it removes the restriction that a given region of physical memory can belong to only one server, enabling multiple machines to access the same memory address at the hardware level. CXL’s memory coherence is thus greatly enhanced, since CXL 2.0 could achieve memory sharing only through software.

CXL is gaining momentum. In the same year that CXL was launched, the CXL Alliance (formally the CXL Consortium) was established under Intel’s leadership, in collaboration with Alibaba, Dell EMC, Facebook (Meta), Google, HPE, Huawei, and Microsoft. AMD and Arm subsequently joined.

Since then, the CXL Alliance has grown to more than 165 members, covering almost all major CPU, GPU, memory, storage, and network device manufacturers. In 2022, both the Gen-Z Alliance and the OpenCAPI Alliance confirmed that they would transfer all of their technical specifications and assets to CXL, securing CXL’s advancement as an industry standard. For the industry, this is a significant choice.

3

The Future Significance of CXL from the Perspective of Storage Revolution

The memory-centric concept was proposed long ago. While the changes that CXL will bring are still hard to imagine, a similar transformation took place in storage in the 1990s, and it may help us understand what lies ahead.

Before the 1990s, storage meant hard disks. The birth of IBM’s 350 RAMAC marked humanity’s official entry into the hard disk era. In the early 1990s, a network technology called Fibre Channel emerged, which detached storage from servers and turned it into a system that could be expanded and managed independently: the SAN.

The subsequent storage market saw the emergence of various networked shared storage solutions. This technological revolution transformed storage from a simple device industry into a software and systems industry, giving rise to the concept of “storage software.”

In 1995, SAN systems that integrated software and hardware began to appear, and around 2000, the first generation of NAS arrived. This technological revolution produced not only SAN systems but also a large number of successful, leading companies, including EMC, NetApp, Veritas, and Pure Storage. Storage software and systems rapidly grew into a massive market.

Today’s memory is remarkably similar to hard disk storage from 30 years ago.

Today’s memory is still merely a device within servers. With the advent of CXL, memory can be separated from computing, just as storage and computing were separated in the 1990s. This means that memory is also expected to independently become a brand new system, endowing it with more functionalities.

Perhaps recognizing CXL’s potential, on August 24, 2022, the JEDEC Solid State Technology Association and the Compute Express Link (CXL) Alliance announced the signing of a memorandum of cooperation to establish a joint working group aimed at providing a forum for the exchange and sharing of information, requirements, suggestions, and requests, with the goal of enhancing the standards developed by each organization.

Industry sources indicate that, compared with the 32 GB/s of bandwidth that a DDR5 interface provides over 380 pins, a CXL memory controller can provide equal or higher bandwidth: x8 and x16 CXL links provide 32 GB/s and 64 GB/s, respectively. Serially attached CXL memory can therefore alleviate the bandwidth limitations of current solutions. Overall, the collaboration between CXL and JEDEC in the DDR field will mark a new turning point for the memory industry.
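The x8 and x16 figures follow from simple arithmetic on the per-lane transfer rate. The sketch below assumes the 32 GT/s per-lane rate of PCIe 5.0, counts one bit per transfer per lane, and ignores encoding and protocol overhead, so the results are raw one-direction upper bounds; `raw_link_gbytes_per_s` is a hypothetical helper name.

```python
def raw_link_gbytes_per_s(gt_per_s: float, lanes: int) -> float:
    """Raw one-direction bandwidth: one bit per transfer per lane, 8 bits per byte."""
    return gt_per_s * lanes / 8


# PCIe 5.0 lane rate (32 GT/s), as used by CXL 1.1 and 2.0.
for lanes in (8, 16):
    print(f"x{lanes} at 32 GT/s: {raw_link_gbytes_per_s(32, lanes):.0f} GB/s")
```

The same formula applied to the 64 GT/s lane rate of CXL 3.0 gives a raw 128 GB/s for an x16 link, which is the doubling mentioned earlier in the article.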

Additionally, according to an earlier TrendForce assessment, CXL was originally intended to integrate the performance of various xPUs, thereby optimizing the hardware costs of AI and HPC and breaking through existing hardware limitations.

CXL support is still driven primarily by CPUs. However, the server CPUs that currently support CXL, such as Intel Sapphire Rapids and AMD Genoa, support only up to the CXL 1.1 specification, and the first products that can be realized under this specification are CXL memory expanders.

Therefore, TrendForce believes that among various CXL-related products, CXL memory expanders will become the pioneering products, closely related to DRAM.

The CXL era is approaching. Industry news suggests that CXL 1.1 and CXL 2.0 products may reach the market in the first half of 2024, while CXL 3.0 will take longer to materialize. Relevant partners are currently looking for manufacturers to develop engineering samples and test them in established environments.

This reproduced content only represents the author’s viewpoint.

It does not represent the position of the Institute of Semiconductors, Chinese Academy of Sciences.

Editor: Qian Niao