Author: Yeyan, Master’s in Engineering, China University of Geosciences

1. Background

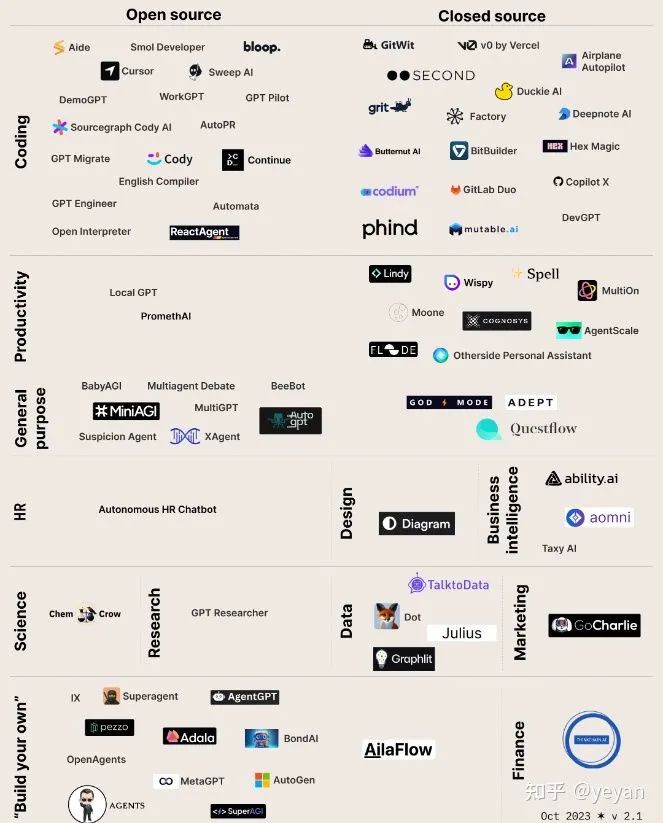

With the rise of ChatGPT, demand has grown for using it to accomplish complex, multi-step tasks, and many application frameworks for AI agents have emerged as a result. Examples, both open-source and commercial, are shown in the figure below.

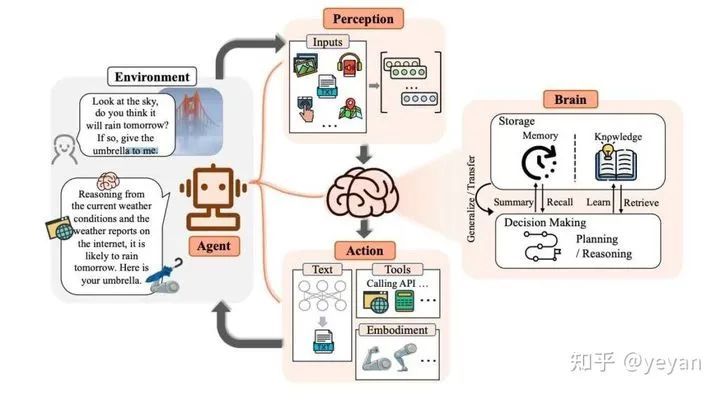

Thanks to the powerful capabilities of LLMs, an AI agent that uses an LLM as its brain can reason about a task objective, decompose it into sub-tasks, and then call the corresponding tools to complete them.

In general, an AI agent consists of three main parts:

• (1) Perception: the input of information, such as text or speech.

• (2) Brain: the core, which uses the LLM to formulate a task plan from the input information.

• (3) Action: execution, which carries out the plan, for example by calling third-party APIs or by selecting suitable tools from the toolset to perform the task.
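The three parts can be sketched as a single agent step. This is a minimal, offline illustration under stated assumptions: the "brain" is a keyword rule standing in for an LLM call, and the `weather` tool, `Plan`, and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Plan:
    """One step chosen by the brain: which tool to call and with what input."""
    tool: str
    tool_input: str


def perceive(raw_input: str) -> str:
    # Perception: normalize incoming text (a real agent might also transcribe speech).
    return raw_input.strip()


def brain(observation: str, tool_names: List[str]) -> Plan:
    # Brain: stand-in for the LLM. A real agent would prompt the model with the
    # observation and the tool descriptions; here a keyword rule picks the tool.
    if "weather" in observation.lower() and "weather" in tool_names:
        return Plan(tool="weather", tool_input=observation)
    return Plan(tool="echo", tool_input=observation)


def act(plan: Plan, tools: Dict[str, Callable[[str], str]]) -> str:
    # Action: execute the tool selected by the plan.
    return tools[plan.tool](plan.tool_input)


def run_agent(raw_input: str) -> str:
    tools: Dict[str, Callable[[str], str]] = {
        "weather": lambda q: "weather tool called with: " + q,  # hypothetical third-party API
        "echo": lambda q: q,
    }
    observation = perceive(raw_input)
    plan = brain(observation, list(tools))
    return act(plan, tools)


print(run_agent("  What is the weather in Wuhan?  "))
```

Keeping the three stages as separate functions mirrors the perception/brain/action split, so swapping the keyword rule for a real LLM call only touches `brain`.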

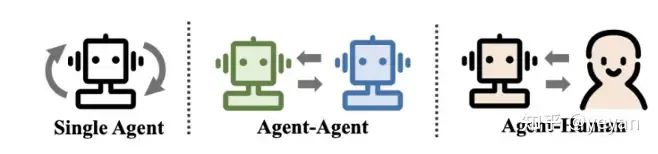

Agents support various forms, as shown in the figure below. A single agent serves as an AI assistant, similar to the current Q&A operations of GPT; agent-agent allows multiple agents to interact with each other, such as ChatDev, which defines multiple roles to create a virtual development company; human-agent incorporates human prompts or feedback, allowing the agent to adjust tasks based on human information, achieving better task completion.
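To make the agent-agent mode concrete, here is a minimal sketch of two role agents taking turns on a shared message history, loosely in the spirit of ChatDev's role-play (not its actual code). `programmer`, `reviewer`, and `converse` are hypothetical names, and the roles are rule-based stubs rather than LLM calls.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]               # (speaker, text)
Agent = Callable[[List[Message]], str]  # maps the history to the next message


def programmer(history: List[Message]) -> str:
    # Hypothetical role: responds to the latest message with "code".
    return f"code for: {history[-1][1]}"


def reviewer(history: List[Message]) -> str:
    # Hypothetical role: approves once it sees code, otherwise asks for it.
    return "APPROVED" if history[-1][1].startswith("code for:") else "please write code"


def converse(task: str, agents: List[Tuple[str, Agent]], max_turns: int = 4) -> List[Message]:
    history: List[Message] = [("user", task)]
    for turn in range(max_turns):
        name, agent = agents[turn % len(agents)]  # agents take turns
        message = agent(history)
        history.append((name, message))
        if message == "APPROVED":                 # stop when the reviewer signs off
            break
    return history


for speaker, text in converse("build a calculator", [("programmer", programmer), ("reviewer", reviewer)]):
    print(f"{speaker}: {text}")
```

A human-agent setup could reuse the same structure by appending the person's feedback to `history` as just another message before the next agent turn.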

2. Introduction to AgentGPT Technology

Currently, there are many open-source AI agents, such as AutoGPT, BabyAGI, and OpenAgents. This article uses AgentGPT as an example to introduce its general process and structure.

AgentGPT consists of three main parts, and other LLM-based agents such as AutoGPT and BabyAGI share a similar basic structure:

• Reasoning and Planning: If a goal is simply passed to the LLM as-is, the model returns only a rough answer. With prompt engineering, the goal can be decomposed into several smaller, more tractable steps, and the model can reflect on its progress through chain-of-thought prompting.

• Memory: Memory is divided into short-term and long-term memory. Short-term memory relies on in-context learning and is limited by the LLM's context length in tokens; long-term memory retains historical context while the agent executes complex tasks. When an agent runs for a long time, history that exceeds the context length is lost, so AgentGPT stores historical information as feature vectors in a vector database.

• Tools: An LLM on its own only produces text output, so it cannot complete tasks such as booking a flight. The solution is to define a toolset through prompt engineering: each tool and its function are described in the prompt, and the agent calls the appropriate tool for the task. For instance, a “search” tool can be described as using the “Google Search” API to search for content, with its input, output, and content format stated in the description.
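The tool mechanism described above can be sketched as a registry of described tools plus a dispatcher. This is an assumption-laden illustration, not AgentGPT's tool schema: the tool names, the description format, the JSON reply protocol, and the stubbed `search` result are all hypothetical, and no real search API is called.

```python
import json
from typing import Callable, Dict

# Hypothetical tool registry: each description is placed in the prompt so the
# LLM knows what the tool does and what input/output to expect.
TOOLS: Dict[str, Dict] = {
    "search": {
        "description": "Search the web (e.g. via a Google Search API). "
                       "Input: a query string. Output: result snippets as text.",
        "func": lambda query: f"[stub results for '{query}']",  # stand-in for a real API call
    },
    "word_count": {
        "description": "Count words in a text. Input: the text. Output: the count as a string.",
        "func": lambda text: str(len(text.split())),
    },
}


def tools_prompt(task: str) -> str:
    # Describe every tool in the prompt and ask for a JSON reply naming one tool.
    lines = [f"- {name}: {spec['description']}" for name, spec in TOOLS.items()]
    return (f"Task: {task}\nAvailable tools:\n" + "\n".join(lines)
            + '\nReply with JSON: {"tool": <name>, "input": <string>}')


def dispatch(llm_reply: str) -> str:
    # Parse the model's JSON reply and run the selected tool.
    call = json.loads(llm_reply)
    tool: Callable[[str], str] = TOOLS[call["tool"]]["func"]
    return tool(call["input"])


print(tools_prompt("Find flights from Wuhan to Beijing"))
# Pretend the LLM answered with this tool call:
print(dispatch('{"tool": "search", "input": "flights Wuhan to Beijing"}'))
```

Asking for a structured (JSON) reply keeps the dispatcher simple; a production agent would also validate the reply and handle unknown tool names.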

2.1 Prompt Engineering

The key to prompt engineering is designing suitable prompt templates, since different LLMs may expect prompts in different formats.

Common methods include:

• Zero-shot method (providing only prompts without examples)

Prompt: Classify the text into neutral, negative or positive.

Text: I think the vacation is okay. Sentiment:

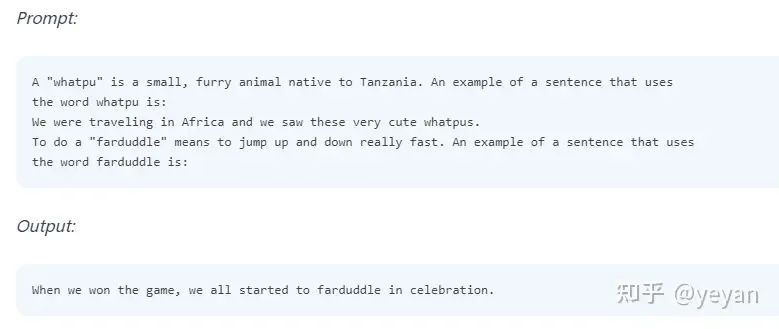

Output: Neutral

• One-shot, two-shot, and N-shot methods: providing 1, 2, or N examples in the prompt to improve model accuracy

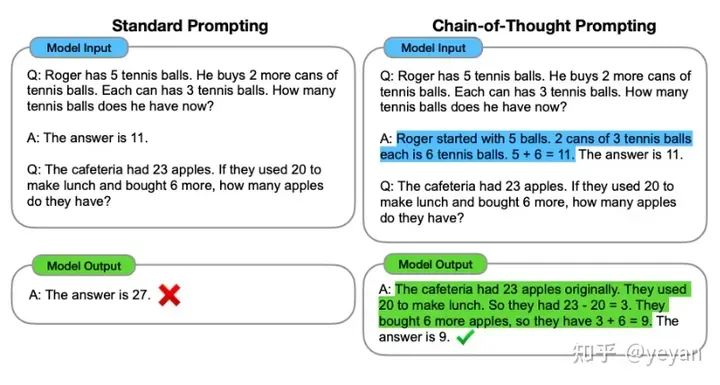

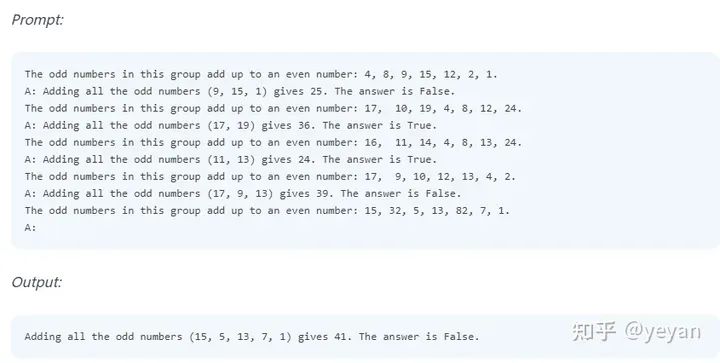

• Chain-of-Thought (CoT) Prompting: By including intermediate reasoning steps in the prompt, the model can solve more complex tasks.

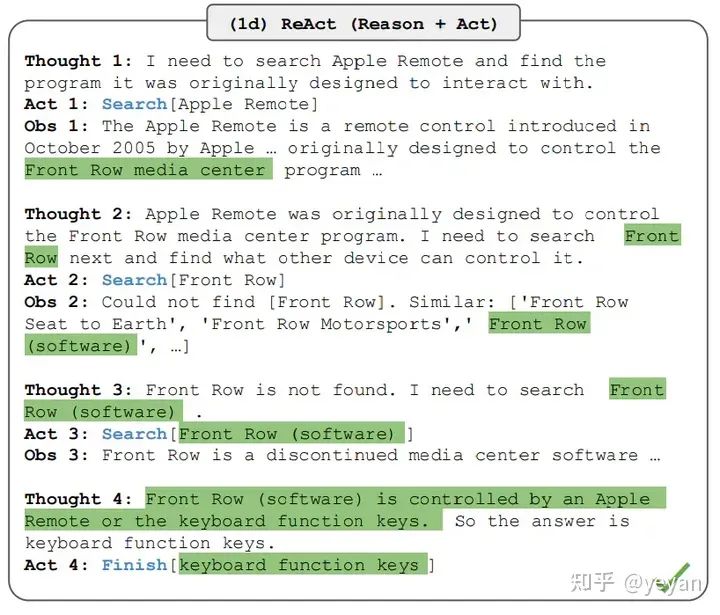

• ReAct (Reasoning + Acting): ReAct interleaves reasoning with actions. The model alternates between thinking and acting (for example, calling a tool and reading its result) until it reaches the final answer.

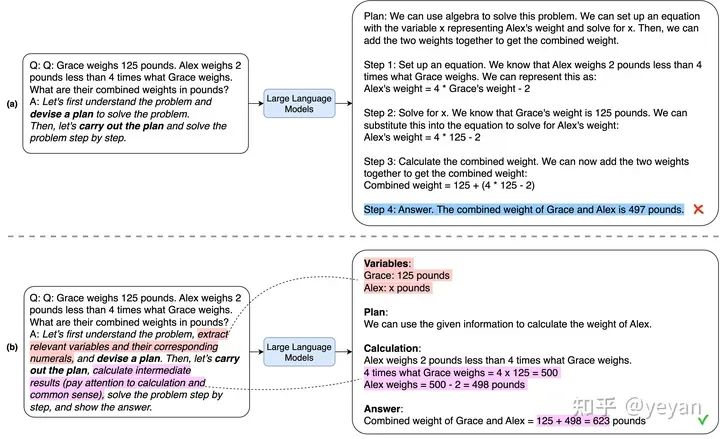

• Plan-and-Solve: another chain-of-thought method, which prompts the model to first understand the problem, extract the relevant variables and their values, devise a plan, and then carry out the plan step by step.
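The prompting methods above differ mainly in how the prompt string is assembled, except ReAct, which also needs a loop that runs tools. The sketch below is illustrative only: the Plan-and-Solve trigger is a close paraphrase of the PS+ prompt from the Plan-and-Solve paper, the `Thought`/`Action`/`Final Answer` format is an assumed convention, and `scripted_llm` is a hypothetical offline stand-in for a real model.

```python
import re
from typing import Callable, Dict, Sequence, Tuple


def few_shot_prompt(text: str, examples: Sequence[Tuple[str, str]] = ()) -> str:
    # Zero-shot when `examples` is empty; N-shot with N (text, label) pairs.
    parts = ["Classify the text into neutral, negative or positive."]
    parts += [f"Text: {t}\nSentiment: {label}" for t, label in examples]
    parts.append(f"Text: {text}\nSentiment:")
    return "\n\n".join(parts)


def plan_and_solve_prompt(question: str) -> str:
    # Close paraphrase of the PS+ trigger sentence from the Plan-and-Solve paper.
    trigger = ("Let's first understand the problem, extract relevant variables and their "
               "corresponding numerals, and devise a plan. Then, let's carry out the plan, "
               "calculate intermediate variables, solve the problem step by step, and show the answer.")
    return f"Q: {question}\nA: {trigger}"


def react(question: str, llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    # ReAct loop: the model writes a Thought and an Action; we run the tool and
    # append the Observation, until the model emits "Final Answer: ...".
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        final = re.search(r"Final Answer:\s*(.+)", step)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*)\]", step)
        if action:
            observation = tools[action.group(1)](action.group(2))
            transcript += f"Observation: {observation}\n"
    return "no answer within step limit"


def scripted_llm(transcript: str) -> str:
    # Offline stand-in for an LLM, scripted for the demo question below.
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAction: lookup[capital of France]"
    return "Thought: The observation answers the question.\nFinal Answer: Paris"


facts = {"capital of France": "Paris"}  # hypothetical knowledge base for the lookup tool
print(few_shot_prompt("I think the vacation is okay."))
print(react("What is the capital of France?", scripted_llm,
            {"lookup": lambda q: facts.get(q, "no result")}))
```

The `max_steps` cap matters in practice: a real model may never emit a final answer, and the loop must still terminate.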

For specific prompt techniques, you can refer to the following articles, which provide detailed examples.

• Prompt Engineering Guide

https://www.promptingguide.ai

• Learn Prompting: Your Guide to Communicating with AI

https://learnprompting.org/docs/intro

You can also refer to the prompts.py file in the AgentGPT codebase, which contains some prompt templates.

```python
from langchain.prompts import PromptTemplate

# Analyze task prompt
analyze_task_prompt = PromptTemplate(
    template="""
    High level objective: "{goal}"
    Current task: "{task}"

    Based on this information, use the best function to make progress or accomplish the task entirely.
    Select the correct function by being smart and efficient. Ensure "reasoning" and only "reasoning" is in the {language} language.

    Note you MUST select a function.
    """,
    input_variables=["goal", "task", "language"],
)
```

2.2 Memory

When an agent runs for many iterations, it may forget earlier operations. AgentGPT solves this with the Weaviate vector database, which stores the task execution history as memory that the agent can query during its task loop.

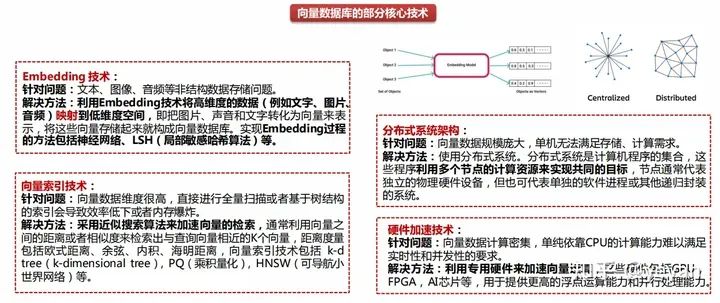

An overview of vector databases is shown in the figure below:

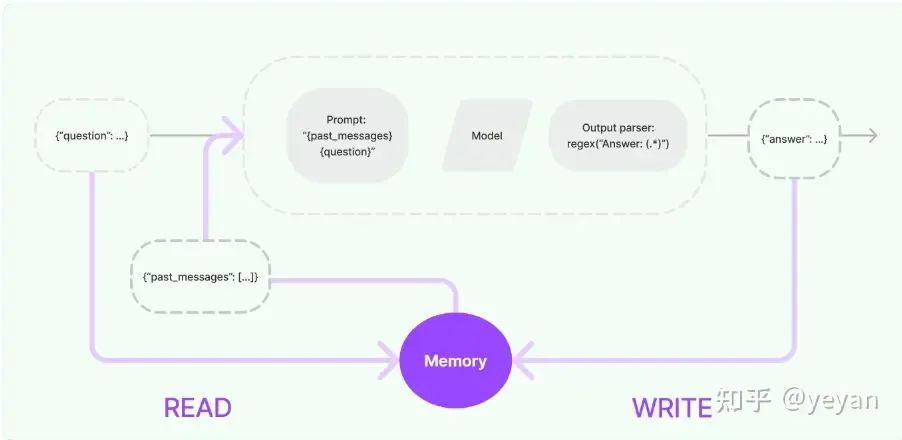
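The core idea of vector memory, storing past results as vectors and retrieving the most similar ones, can be sketched in a few lines. This is a toy, in-memory stand-in for what Weaviate provides, not Weaviate's client API: `VectorMemory` is a hypothetical class, and bag-of-words counts replace a learned embedding model.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": bag-of-words counts. A real system would use an embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Stores past task results as vectors and returns the most similar ones."""

    def __init__(self) -> None:
        self.items: List[Tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def query(self, text: str, k: int = 1) -> List[str]:
        q = embed(text)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [t for _, t in ranked[:k]]


memory = VectorMemory()
memory.add("searched papers with code for coco object detection")
memory.add("booked a meeting room for friday")
print(memory.query("coco object detection leaderboard"))
```

Because retrieval is by similarity rather than recency, the agent can recall a relevant step from long ago without keeping the whole history in the LLM's context.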

For memory, LangChain's memory mechanism is a useful reference: after the user submits input and before the core logic runs, previous information is read from memory as past_messages and combined with the user input, and the result is sent to the LLM. Once the current task output and final result are obtained, the input and output are stored in memory so that subsequent tasks can reference them.
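The read-modify-call-save cycle described above can be sketched as follows. `BufferMemory` and `run_with_memory` are hypothetical names chosen for illustration; they are not LangChain's classes or method signatures, and the LLM is passed in as a plain function.

```python
from typing import Callable, List, Tuple


class BufferMemory:
    """Hypothetical minimal buffer memory (not LangChain's API)."""

    def __init__(self) -> None:
        self.past_messages: List[Tuple[str, str]] = []

    def load(self) -> str:
        return "\n".join(f"{role}: {text}" for role, text in self.past_messages)

    def save(self, user_input: str, output: str) -> None:
        self.past_messages.append(("Human", user_input))
        self.past_messages.append(("AI", output))


def run_with_memory(user_input: str, memory: BufferMemory, llm: Callable[[str], str]) -> str:
    history = memory.load()                          # 1. read past messages
    prompt = f"Human: {user_input}\nAI:"
    if history:
        prompt = f"{history}\n{prompt}"              # 2. prepend history to the input
    output = llm(prompt)                             # 3. call the LLM
    memory.save(user_input, output)                  # 4. store input and output
    return output


# Fake LLM that reports how many human turns it can see in its prompt.
count_turns = lambda prompt: str(prompt.count("Human:"))
mem = BufferMemory()
print(run_with_memory("hello", mem, count_turns))
print(run_with_memory("and again", mem, count_turns))
```

The fake LLM sees one human turn on the first call and two on the second, showing that earlier exchanges are carried into later prompts.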

2.3 LangChain

AgentGPT implements its chain-of-thought prompting with LangChain, which is currently the most commonly used framework in this area. For more information about LangChain, see Kevin's very detailed article "LangChain: An Open-Source Framework to Enhance Your LLM", as well as the official LangChain documentation, Introduction | LangChain:

https://zhuanlan.zhihu.com/p/636043995

https://python.langchain.com/docs/get_started/introduction

3. AgentGPT Practical Test

Currently, AgentGPT only supports ChatGPT models and does not support local LLMs. However, you can modify the code around model_factory.py#L37 and agent_service_provider.py#L18 to add an interface for calling a local model.
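One common way to add a local model is a registry-based factory that maps a model name to a callable. The sketch below is hypothetical and is not AgentGPT's `model_factory.py` code: `create_model`, `MODEL_REGISTRY`, and both backends are illustrative placeholders, and neither backend makes a real network call.

```python
from typing import Callable, Dict


def openai_chat(prompt: str) -> str:
    # Placeholder for a call to OpenAI's chat API (omitted so the sketch runs offline).
    raise NotImplementedError("call the OpenAI API here")


def local_chat(prompt: str) -> str:
    # Placeholder for a local model, e.g. a request to a locally served LLM.
    return f"[local model reply to: {prompt}]"


MODEL_REGISTRY: Dict[str, Callable[[str], str]] = {
    "gpt-3.5-turbo": openai_chat,
    "local": local_chat,
}


def create_model(name: str) -> Callable[[str], str]:
    """Return a callable for the named model; unknown names raise a clear error."""
    try:
        return MODEL_REGISTRY[name]
    except KeyError:
        raise ValueError(f"unsupported model: {name}") from None


print(create_model("local")("hi"))
```

With this design, the rest of the agent only depends on the `str -> str` callable, so adding a backend means registering one more entry.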

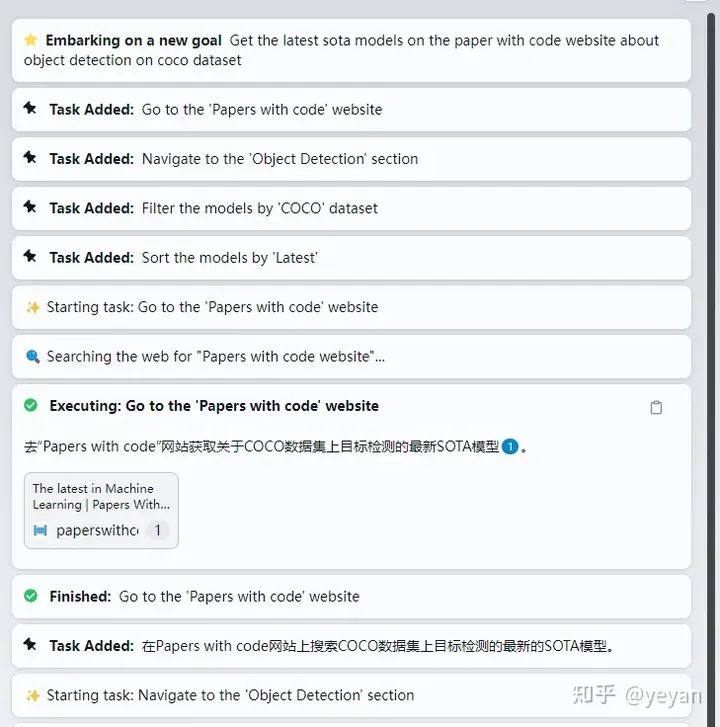

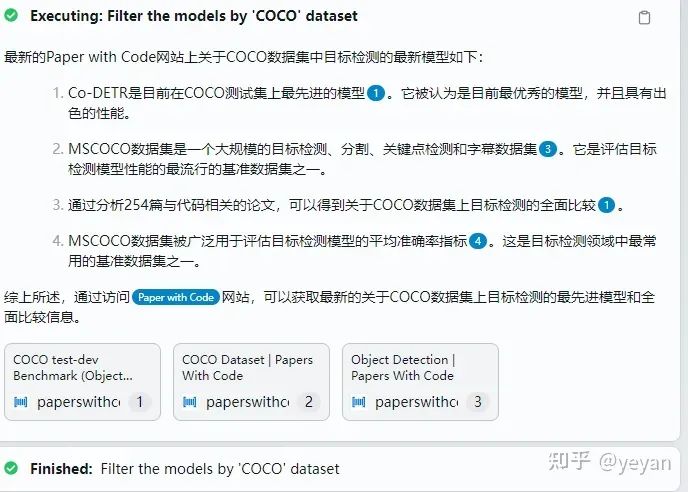

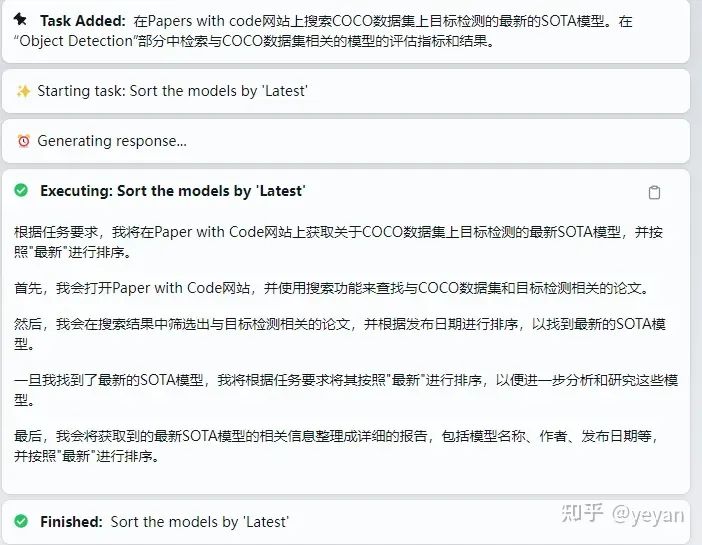

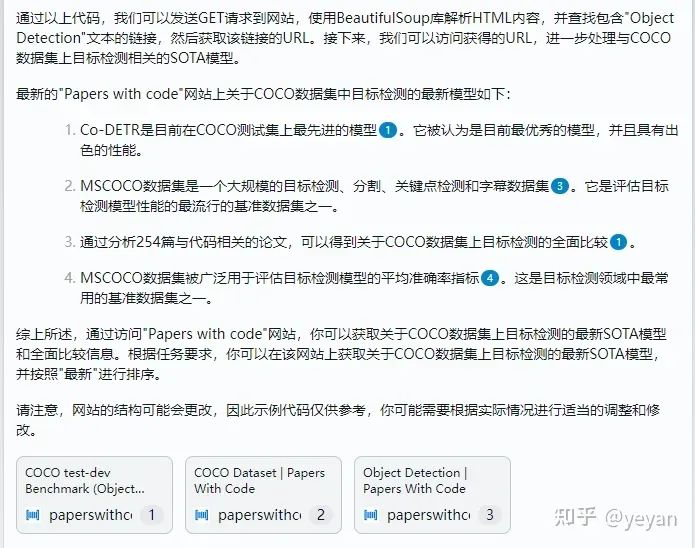

We tested AgentGPT on retrieving the latest state-of-the-art (SOTA) object detection models from the Papers with Code website.

(1) Open the AgentGPT website and enter the task

Input the task: “Get the latest SOTA models on the PaperWithCode website about object detection on the COCO dataset.”

(2) Task decomposition process

Search the website -> Enter the website -> Internal search on the website -> Filter -> Sort

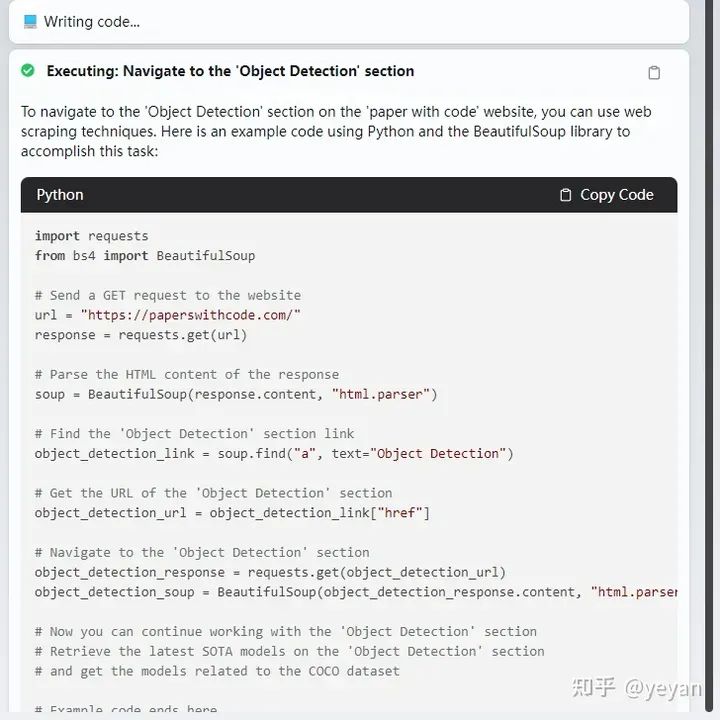

(3) Convert the task into code

(4) Analyze the code results

(5) Filter the content

(6) Sort the retrieved models

(7) Retrieve relevant content of the models

(8) Summarize to obtain the answer
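The workflow above (decompose, execute each sub-task, possibly add new ones, then summarize) can be sketched as a simple task-queue loop. This is an illustrative assumption, not AgentGPT's source: `run_task_loop` and its callback parameters are hypothetical, and the stub callbacks only echo the sub-task names from step (2).

```python
from collections import deque
from typing import Callable, List


def run_task_loop(goal: str,
                  plan: Callable[[str], List[str]],
                  execute: Callable[[str, str], str],
                  follow_up: Callable[[str, str, str], List[str]],
                  summarize: Callable[[str, List[str]], str],
                  max_tasks: int = 10) -> str:
    tasks = deque(plan(goal))                        # decompose the goal into ordered sub-tasks
    results: List[str] = []
    while tasks and len(results) < max_tasks:        # cap the loop so it always terminates
        task = tasks.popleft()
        result = execute(goal, task)                 # e.g. write and run code, call a search tool
        results.append(result)
        tasks.extend(follow_up(goal, task, result))  # optionally add new sub-tasks
    return summarize(goal, results)                  # combine intermediate results into an answer


steps = ["search for the website", "open the website", "search within the website",
         "filter the results", "sort the models"]
answer = run_task_loop(
    "Get the latest SOTA object detection models on COCO",
    plan=lambda goal: list(steps),
    execute=lambda goal, task: f"done: {task}",
    follow_up=lambda goal, task, result: [],
    summarize=lambda goal, results: f"{len(results)} steps completed for: {goal}",
)
print(answer)
```

In a real agent, `plan`, `execute`, `follow_up`, and `summarize` would each be LLM calls, and the `max_tasks` cap guards against the model generating follow-up tasks indefinitely.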

4. Reference Links

[1] Original blog on AgentGPT: Autonomous AI in Your Browser https://reworkd.ai/blog/Understanding-AgentGPT

[2] AgentGPT demo website https://agentgpt.reworkd.ai/zh

[3] Plan-and-Solve-Prompting technology https://github.com/AGI-Edgerunners/Plan-and-Solve-Prompting

[4] Prompt Engineering Guide documentation https://www.promptingguide.ai/

[5] Learn Prompting: Your Guide to Communicating with AI https://learnprompting.org/docs/intro

[6] Kevin: LangChain: An Open-Source Framework to Enhance Your LLM https://zhuanlan.zhihu.com/p/636043995

[7] Memory | LangChain https://python.langchain.com/docs/modules/memory/

[8] List of open-source AI agents: awesome-ai-agents https://github.com/e2b-dev/awesome-ai-agents

[9] Ye Saiwen: What is the role of system, user, and assistant in ChatGPT API? How to use it? https://zhuanlan.zhihu.com/p/638552350

[10] AutoGPT https://github.com/Significant-Gravitas/AutoGPT

[11] BabyAGI https://github.com/yoheinakajima/babyagi

[12] OpenAgents https://github.com/xlang-ai/openagents

[13] LangChain Chinese tutorial https://github.com/liaokongVFX/LangChain-Chinese-Getting-Started-Guide

[14] awesome-langchain https://github.com/kyrolabs/awesome-langchain