Starting from the current battleground of ToB agents, who will become the biggest beneficiary of this technological wave?

Source / Feige Says AI (ID:FeigeandAI)

Author / Gao Jia/Wang Yi

Barely a year after large-model agents emerged, they have already become a battleground for tech giants and startups alike.

However, most agents on the market do not yet meet the expectations of the business community. Even OpenAI’s GPTs are essentially chatbots built around a specific knowledge base or dataset. Agents of this kind, which rely on contextual information for tasks such as data analysis and code fixing, are little more than lightweight personal assistants.

The widely discussed safety problems include borderline pornographic content, bots impersonating official accounts, and rampant fake-order (click-farming) schemes. Beyond these, two gaps stand out: the lack of genuine user demand, and the lack of deep focus on specific scenarios. Together they explain why the ToC field has produced no disruptive killer application and why many GPTs have ended up as mere “toys” for the public. Program interaction and automated workflows also leave much room for improvement.

At this early stage of large models, what kind of agents does commercialization actually need? In which scenarios can agents deliver the most value?

When we shift our focus from ToC to ToB, a more promising answer seems to emerge.

Source / ToB Industry Headlines (ID:wwwqifu)

Author / Headlines · Editor / Headlines

ToB: The Real Battlefield for Agents

At the 2024 Sequoia Capital AI Summit, Andrew Ng gave a talk on agents in which he identified four key agent capabilities: reflection, tool use, planning, and multi-agent collaboration. He stressed the importance of AI agent workflows and predicted that they will become a major trend.

Entrepreneur and platform economy researcher Sangeet Paul Choudary also mentioned in a post in March this year that agents create a possibility for re-integrating scenarios, allowing vertical AI players to achieve horizontal development by coordinating across multiple workflows, which will reshape the B2B value chain.

Compared with scattered individual users, enterprise users usually face more complex business needs. They have clearer business scenarios and business logic, and they have accumulated far more industry data and knowledge. These traits fit closely with what agents offer: autonomy, perception and understanding of the environment, decision-making and execution, interaction, and tool use. This makes the ToB field an excellent stage for agents to show their capabilities.

In our earlier article “Who Will Become the ‘App Store’ for ToB AI Applications?”, we noted that the App Store of the mobile internet era is considered the most powerful ecosystem platform in history. We argued that the large-model era likewise needs a robust ecosystem platform to close the commercial loop and accelerate the industry. In other words, we need a “ToB Agent Store” that helps enterprises cut costs and raise efficiency.

So what kind of companies can effectively create this “Agent Store”?

Andrew Ng and Sangeet point to something close to a textbook answer. The right companies are those that can plug into enterprise clients’ “workflows” and that have accumulated “vertical industry” data. Ideally, they also have their own large models for adaptation and enablement, since the LLM is the backbone of an agent.

All of this seems to point to collaborative office platforms.

Collaborative office platforms such as DingTalk, Feishu, and WeCom (WeChat Work) are more than a combination of “PaaS+SaaS”. They also offer solid APIs and plugin systems. Through product forms such as instant messaging, video conferencing, scheduling, task management, and collaborative documents, they are firmly embedded in enterprise workflows. After years of cultivation, they have also accumulated enterprise data assets across many industries and segments. With application scenarios, industry data, and their own large models, they are natural homes for an “Agent Store”.

Before stepping into the ToB battlefield of agents, let us first take a look at how agents have evolved in the past year since their inception.

From Copilot to Agent: The Advancing AI Assistant

So far, agents have evolved along a path “from Copilot to Agent”.

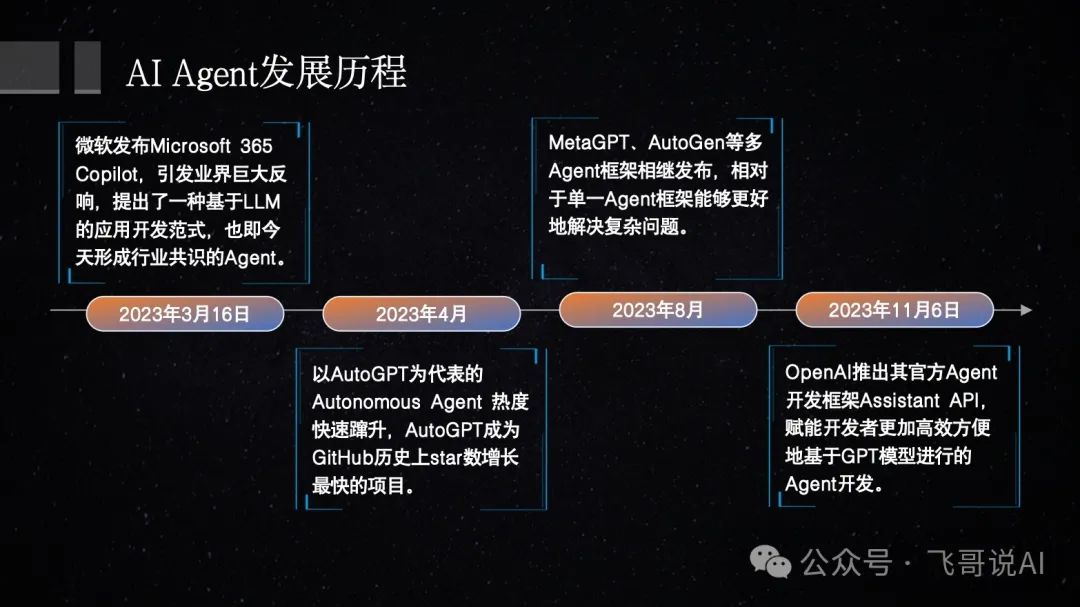

Over the past year, backed by large models, the agent field has developed rapidly. True autonomous intelligence is still a long way off, but the boom in industrial agents is already evident. Platforms dedicated to building agent ecosystems have begun to take shape and are attracting developers from many industries. We have watched agents evolve from the early Copilot model, in which AI sits in the co-pilot seat, toward more autonomous intelligent agents that take the driver’s seat.

Copilot is a low-level assistant, while the agent is a high-level proxy, and its “high-level” nature means that the agent is already an autonomous AI entity. In other words, Copilot is human-centered, AI-assisted, while the agent is AI-centered, human-supervised.

The levels of autonomous driving offer a useful analogy. L2 driver assistance corresponds to Copilot, L4 autonomous driving corresponds to the agent, and L3 is the transition from Copilot as co-pilot to agent as driver.

Several key advances in large models underpin the evolution from Copilot to agent:

1. Retrieval-Augmented Generation (RAG) lets agents draw on external knowledge and up-to-date information to fill gaps in what the model knows;

2. Long-context capabilities in large models have progressed rapidly, and agents have become far better at handling complex scenarios and multi-turn dialogues as a result. This removes the earlier bottleneck of limited agent memory. Current agents can reason over long contexts, and complex process logic and conditional branches can be described directly in the context window;

3. Agents are connecting to a growing number of external tools, such as plugins and APIs. Equipped with these tools, intelligent assistants are accelerating their evolution from co-pilots into true intelligent agents;

4. Capabilities unique to agents, such as autonomous planning, environmental interaction, and error reflection, are still exploratory. Even so, they have recently made significant progress, especially through the rise of the “agent platform”. An agent platform gives developers a development environment based on natural-language prompt engineering. In it, an agent is refined iteratively through human-computer dialogue in the context window. Developers can thus “train” agents for specific tasks and, once an agent takes shape, publish it through the platform, which helps build an agent ecosystem. The release of GPTs and the GPT Store is a typical example.

The biggest difference between Copilot and agent lies in “autonomous planning” and “environmental interaction”. Copilot relies on human prompts at each step to assist the user. An agent empowered by a large model, by contrast, handles memory, reasoning, planning, and execution for its target task on its own. It needs only the user’s initial instruction and feedback on the results, with no human intervention along the way.
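The contrast can be made concrete with a minimal Python sketch that rests on a few assumptions. The keyword-rule `plan` function is a hypothetical stand-in for a large model’s planning, and the tools return placeholder data instead of calling any real booking service. `copilot_suggest` proposes one step and then stops to wait for a human. `run_agent`, in contrast, takes only the initial goal and carries the plan through to the end.

```python
from typing import Callable

# A tool reads the agent's memory and returns new facts to store in it.
Tool = Callable[[dict], dict]


def plan(goal: str) -> list[str]:
    """Hypothetical planner: keyword rules standing in for LLM planning."""
    steps: list[str] = []
    if "flight" in goal:
        steps += ["search_flight", "book_flight"]
    if "hotel" in goal:
        steps.append("book_hotel")
    return steps


def copilot_suggest(goal: str) -> str:
    """Copilot mode: propose only the next step, then wait for the human."""
    steps = plan(goal)
    return f"Suggested next step: {steps[0]}" if steps else "No suggestion"


def run_agent(goal: str, tools: dict[str, Tool]) -> dict:
    """Agent mode: the user supplies only the goal. The agent plans, calls
    tools, and carries intermediate results in memory with no human turn
    in between; the returned memory is what the user reviews at the end."""
    memory: dict = {"goal": goal, "log": []}
    for step in plan(goal):
        memory.update(tools[step](memory))
        memory["log"].append(step)
    return memory


# Toy tools with placeholder data (hypothetical, not a real booking API).
tools: dict[str, Tool] = {
    "search_flight": lambda m: {"flight": "FLIGHT-1"},
    "book_flight": lambda m: {"flight_booking": f"booked {m['flight']}"},
    "book_hotel": lambda m: {"hotel_booking": "booked"},
}

result = run_agent("book a flight and a hotel to Hangzhou", tools)
print(result["log"])  # ['search_flight', 'book_flight', 'book_hotel']
```

In this sketch, the user touches only the goal going in and `result` coming out. Reviewing that result plays the role of the “feedback on results”.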

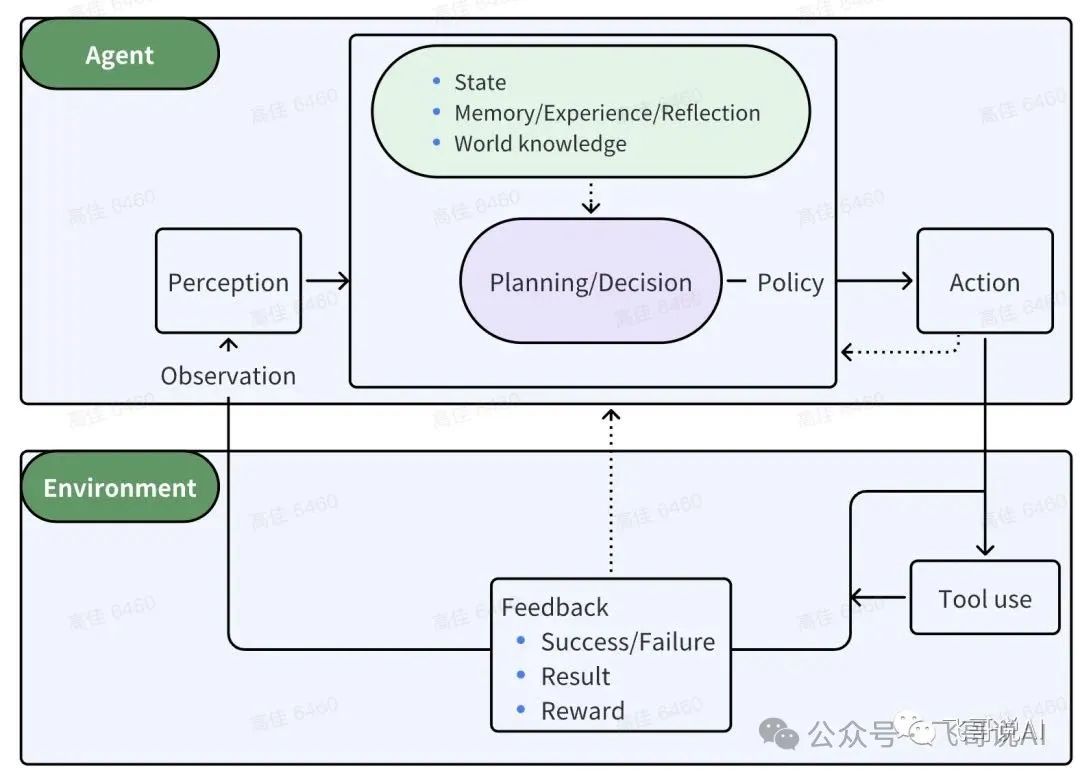

As the figure above shows, the agent acts autonomously, with no human in the loop during execution. Human input and external tools act as the environment that interacts with the agent.

In how agents are mainly built today, “autonomous planning” shows up as a difference from traditional software engineering. Traditional software engineering requires implementing specific algorithms in a machine-executable programming language. When building an agent, developers no longer have to supply specific algorithms, and they need no computer language at all, not even pseudocode. They only need to define the task, including its input and output, in natural language. The agent then plans autonomously to execute the task, which yields the agent’s initial version.

The “environmental interaction” capability, in turn, shows up as the agent is developed from its initial version into a “product” that can be listed on a platform. Driven by sample input data, the agent produces two types of output:

1. Error messages, which indicate a problem in the agent’s autonomous planning path, similar to syntax errors in traditional programming;

2. Unsatisfactory output, akin to a logic error in traditional programming. In this case, developers can add specific feedback stating the expected output for that sample input.

Both types of information can be fed straight back to the agent on the development platform. As part of its interaction with the environment, the agent “reflects” on the errors the environment reports and tries to correct them in the next iteration. Repeating this cycle yields a usable agent that can be listed as a product on the platform. This is the “internal iteration” of the interaction between the agent and the environment.
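The following sketch shows the internal-iteration loop, with a toy agent in place of a real model. `ToyAgent`, `Feedback`, and `internal_iteration` are hypothetical names. The agent’s “reflection” simply records the expected output as a lesson, whereas a real agent would revise its plan or prompt. The loop keeps the two feedback types separate: an exception (like a syntax error) and a wrong answer (like a logic error).

```python
from dataclasses import dataclass, field


@dataclass
class Feedback:
    sample_input: str
    kind: str      # "error" ~ a syntax error, "mismatch" ~ a logic error
    detail: str
    expected: str  # the expected output the developer attaches


@dataclass
class ToyAgent:
    """Hypothetical stand-in for an LLM agent built from a natural-language task.
    Its 'reflection' only records lessons; a real agent would revise its plan."""
    task: str
    lessons: dict[str, str] = field(default_factory=dict)

    def run(self, text: str) -> str:
        if text in self.lessons:  # apply a lesson learned from earlier feedback
            return self.lessons[text]
        if not text:
            raise ValueError("empty input: no valid first step in the plan")
        return text.upper()       # naive first-version behaviour

    def reflect(self, fb: Feedback) -> None:
        self.lessons[fb.sample_input] = fb.expected


def internal_iteration(agent: ToyAgent, samples: dict[str, str], max_rounds: int = 5) -> int:
    """Run the samples, collect both feedback types, let the agent reflect, and
    repeat until every sample passes. Returns the round in which that happened."""
    for round_no in range(1, max_rounds + 1):
        feedback: list[Feedback] = []
        for sample_input, expected in samples.items():
            try:
                output = agent.run(sample_input)
            except Exception as exc:  # type 1: the plan itself broke
                feedback.append(Feedback(sample_input, "error", str(exc), expected))
                continue
            if output != expected:    # type 2: it ran, but the answer is wrong
                feedback.append(Feedback(sample_input, "mismatch", f"got {output!r}", expected))
        if not feedback:
            return round_no
        for fb in feedback:
            agent.reflect(fb)
    raise RuntimeError("agent did not converge within max_rounds")


agent = ToyAgent(task="Normalize product names")
samples = {"ding": "DING", "": "<empty>", "tongyi": "Tongyi Qianwen"}
print(internal_iteration(agent, samples))  # 2
```

The agent converges in the second round. The first round surfaces one error and one mismatch, and reflecting on both fixes them.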

After the agent is released, feedback from the environment during real use constitutes the “external iteration” of the interaction between the agent and the environment. Just as with internal iteration, this feedback can be fed directly to the agent, allowing it to improve itself, align with user preferences, and ship new online versions. External iteration marks the establishment of an environmental data flywheel.

Technologically, we have seen OpenAI move from opening up Plugins to building the GPTs platform ecosystem, and Microsoft leap from GitHub Copilot to Microsoft 365 Copilot. Traditional pipeline-style application development is starting to give way to the end-to-end agent development paradigm enabled by large models.

Product forms have followed a similar path. They have moved from single-function coding assistants (such as GitHub Copilot), to agent platforms such as AutoGPT, then to multi-agent frameworks such as MetaGPT and AutoGen, and on to OpenAI’s agent development framework, the Assistants API. As agent development tools and platforms become simpler, agents’ capabilities have grown accordingly.

Among Chinese platforms, especially collaborative office platforms, DingTalk stands out. Its technology path and product forms over the past year have tracked the development of large models and their agents almost exactly. By combining agent technology with real enterprise scenarios, it has rapidly built a development platform and ecosystem for AI assistants.

Over the past year, DingTalk has led the way in rebuilding its products with large models. Twenty product lines are already AI-enabled, and intelligent Q&A and data queries are already well used in enterprises. Meanwhile, the large model DingTalk builds on, Tongyi Qianwen from its parent company Alibaba, is evolving rapidly, for example in long-context and multimodal capabilities, which lays a solid foundation for agents. DingTalk also draws on its strengths as a collaboration platform and on its workflow engineering and AI PaaS capabilities. As a result, its agents have gradually become integrated with business processes and data.

DingTalk’s exploration of agent technology has consistently centered on real enterprise needs. Its differentiating advantage comes from serving the office needs shared across thousands of industries (the “greatest common divisor” of enterprises). This has attracted a large number of ToB users, and it has let DingTalk accumulate a vast body of applications and data within a unified platform framework. A few days ago, DingTalk launched its own “Agent Store”, called the “AI Assistant Market”, which already lists more than 200 AI assistants.

This customer stickiness and the accumulated user data give DingTalk a natural advantage in deploying agents in practice.

Who Has Better Odds of Building Agents?

Why Does a Massive User Base Give Confidence in Building Agents?

One important indicator of how well an agent works is its “information retrieval” capability. This is also why RAG is so highly valued: it lets agents draw on external knowledge and up-to-date information to give users more accurate and relevant answers and services.

This requires agents to grow on a platform with massive data, ideally one with enough plugins and API tools for them to call. Such a platform maximizes their retrieval and understanding, and therefore their ability to act.
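Here is a minimal sketch of the retrieval step in RAG, assuming a tiny in-memory knowledge base. Real systems usually rank documents by embedding similarity. The word-overlap score here is a deliberately simple substitute, and `retrieve` and `build_prompt` are illustrative names, not a particular library’s API. What the sketch preserves is the shape of the technique: fetch the most relevant enterprise documents, then place them in the prompt alongside the question.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, doc: str) -> int:
    """Count shared tokens: a toy stand-in for embedding similarity."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(count, d[token]) for token, count in q.items())


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return up to k documents with the highest non-zero overlap score."""
    scored = sorted(
        ((overlap_score(query, doc), doc) for doc in docs),
        key=lambda pair: pair[0],
        reverse=True,
    )
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it goes to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


knowledge_base = [
    "Travel expenses must be submitted within 30 days of the trip.",
    "The cafeteria opens at 8 am on weekdays.",
    "Repair orders are handled by the facilities team within 48 hours.",
]
question = "How many days do I have to submit travel expenses?"
# Only the travel-expense policy shares words with the question.
print(retrieve(question, knowledge_base, k=3))
```

Documents with no overlap are dropped rather than padded into the prompt, so the model sees only context that matched the query.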

In other words, the amount of user data almost determines the “product ceiling”.

This is precisely the cornerstone of DingTalk’s advantage: a strong ecosystem and large volumes of user data leave more room to optimize the product.

In the year since DingTalk entered the AI space, 2.2 million enterprises have activated DingTalk AI. They span industries such as K12 education, manufacturing, retail, real estate, services, and the internet, and together they have accumulated rich data for DingTalk’s AI platform. The “AI Assistant Market” includes templates derived from different scenarios. Users can copy these templates as starting points for new scenarios, which improves the generality of agents built on the DingTalk platform.

The second factor in building agents is the large model. Agent products cannot do without large models, so “product-model integration” has inherent advantages.

As mentioned earlier, agents represent an end-to-end paradigm for developing large-model products. Traditional AI products generally use a procedural pipeline architecture in which modules depend on and chain into one another. This produces many intermediate results between input and output along a long modular chain. An ideal large-model product, by contrast, is end-to-end: the product improves automatically through end-to-end training on the data that flows back from use.

End-to-end development poses a huge challenge for the many companies whose products and models are separate. The few companies that integrate product and model, by contrast, make end-to-end training possible:

On one hand, with user consent, the product continuously collects user feedback through instrumentation. It feeds this feedback back into user-alignment training of the integrated large model, which improves the model’s data quality;

On the other hand, the continuously iterating model improves the product experience and brings the product closer to user expectations. This attracts more users and generates more feedback data. The resulting data barrier and user stickiness keep the product from being crushed by upgrades to other general-purpose large models.

DingTalk itself is a true “product-model integrated” company, with its own large model, making its own agent products.

“Product-model integration” is crucial for AI companies. As we mentioned in “Why ‘Product-Model Integration’ is a Better AI Company Model”, companies with both products and models find it easier to form a “data flywheel”, enhancing their core competitiveness.

Products play a key role as a “beacon” that gives the model direction. First, product demands can guide the direction of model optimization; second, products help verify how the model actually performs.

For DingTalk, the “AI Assistant Market” based on massive data serves as that guiding beacon, making its model training targets more focused.

The third factor in building agents is the platform’s engineering capability.

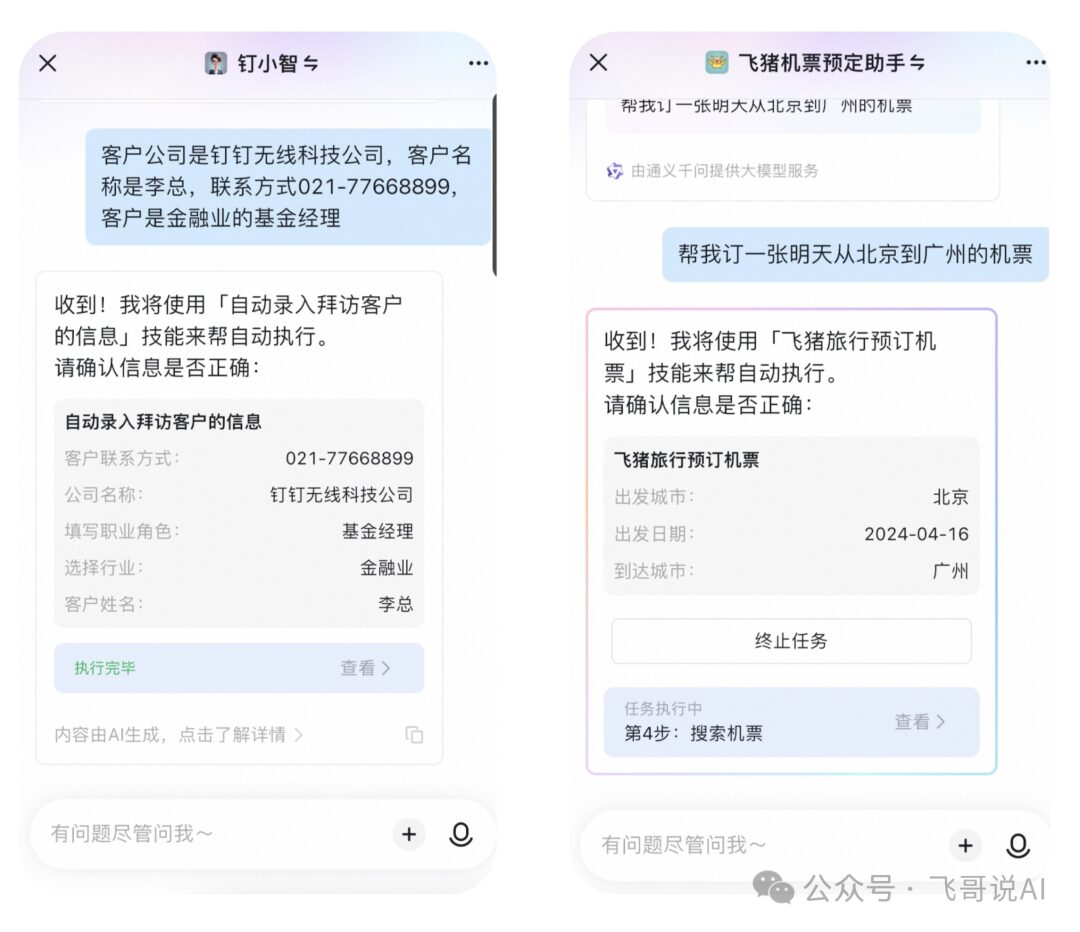

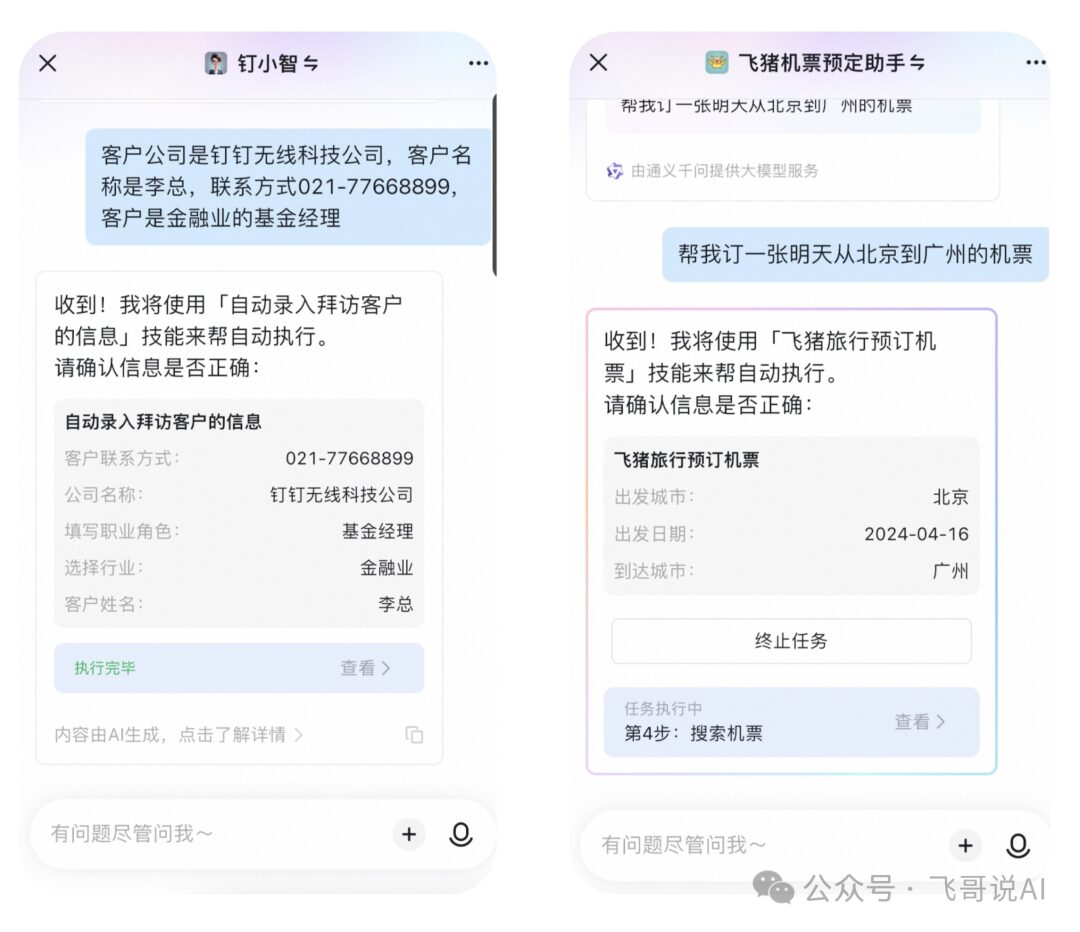

By the time DingTalk launched the “AI Assistant Market”, its agents had already been significantly upgraded. In the action system, for instance, the AI assistant’s “human-like operation” capability has been greatly strengthened. After observing a user’s operation path, it can automate page operations, which speeds up high-frequency business actions. With a single command, the DingTalk AI assistant can enter customer information and submit a repair order. It can also jump to external web applications such as Fliggy to book flights and hotels on its own.

Moreover, to let AI assistants handle more complex tasks, DingTalk has built workflows into the assistant creation process. Users can break a task down, orchestrate the execution steps, and then let the AI assistant complete them, which makes the results more accurate and controllable. Human-like operation, workflows, and connections to external APIs and systems are all advanced agent capabilities that further extend what agents can do.
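A workflow of this kind can be sketched as an ordered list of steps that the user defines in advance. The sketch assumes that each step is a plain function over a shared state. The step functions for the repair-order example (`parse_command`, `enter_customer`, `submit_repair_order`) are hypothetical stand-ins, not DingTalk APIs. Declaring each step’s required inputs is one way to obtain the controllability described above.

```python
from typing import Callable

# (step name, action, keys the step requires in the shared state)
Step = tuple[str, Callable[[dict], dict], list[str]]


def run_workflow(steps: list[Step], state: dict) -> dict:
    """Execute user-orchestrated steps in a fixed order. Each step declares the
    inputs it needs, so a gap fails loudly instead of being improvised."""
    state = dict(state)  # do not mutate the caller's dict
    trace: list[str] = []
    for name, action, required in steps:
        missing = [key for key in required if key not in state]
        if missing:
            raise KeyError(f"step {name!r} is missing inputs: {missing}")
        state.update(action(state))
        trace.append(name)
    state["trace"] = trace
    return state


# Hypothetical actions for the "submit a repair order" example.
def parse_command(state: dict) -> dict:
    _, rest = state["command"].split(" for ", 1)
    customer, issue = rest.split(", ", 1)
    return {"customer": customer, "issue": issue}


def enter_customer(state: dict) -> dict:
    return {"customer_id": f"C-{state['customer'].lower()}"}


def submit_repair_order(state: dict) -> dict:
    return {"order": f"{state['customer_id']}: {state['issue']}"}


repair_flow: list[Step] = [
    ("parse_command", parse_command, ["command"]),
    ("enter_customer", enter_customer, ["customer"]),
    ("submit_repair_order", submit_repair_order, ["customer_id", "issue"]),
]

result = run_workflow(repair_flow, {"command": "Create a repair order for Alice, broken printer"})
print(result["order"])  # C-alice: broken printer
```

Unlike free-form planning, this sketch fixes the order of steps at the user’s discretion. A missing input therefore raises a `KeyError` at the step that needs it instead of being guessed.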

The “universality” of a collaborative office platform, the strong usability of its large model, and substantial engineering “certainty” together give DingTalk a solid foundation for developing AI assistants.

Vertical Depth or Horizontal Development?

Large AI models can give rise to several product forms, such as open MaaS platforms and middle-layer products represented by AI infrastructure. This still largely blue-ocean field also has a branch that pursues vertical depth. So why has DingTalk chosen to promote an agent ecosystem and build a horizontal agent market that spans industries?

One insight may answer this question: in the long run, one of the winning methods for vertical solutions is horizontal development.

Vertical fields remain a blue-ocean market that is likely to be split between two camps. One camp enters horizontally. The other deepens vertically: it builds industry-specific large models on top of general-purpose models and then builds agents for industry scenarios.

It is hard to say that the vertical camp will necessarily be crushed by the horizontal one. Horizontal entrants, for their part, find it difficult to build an industry-specific large model for every vertical field. They can usually only strengthen their models temporarily with scenario data, through fine-tuning and in-context learning, without significantly changing the foundation model.

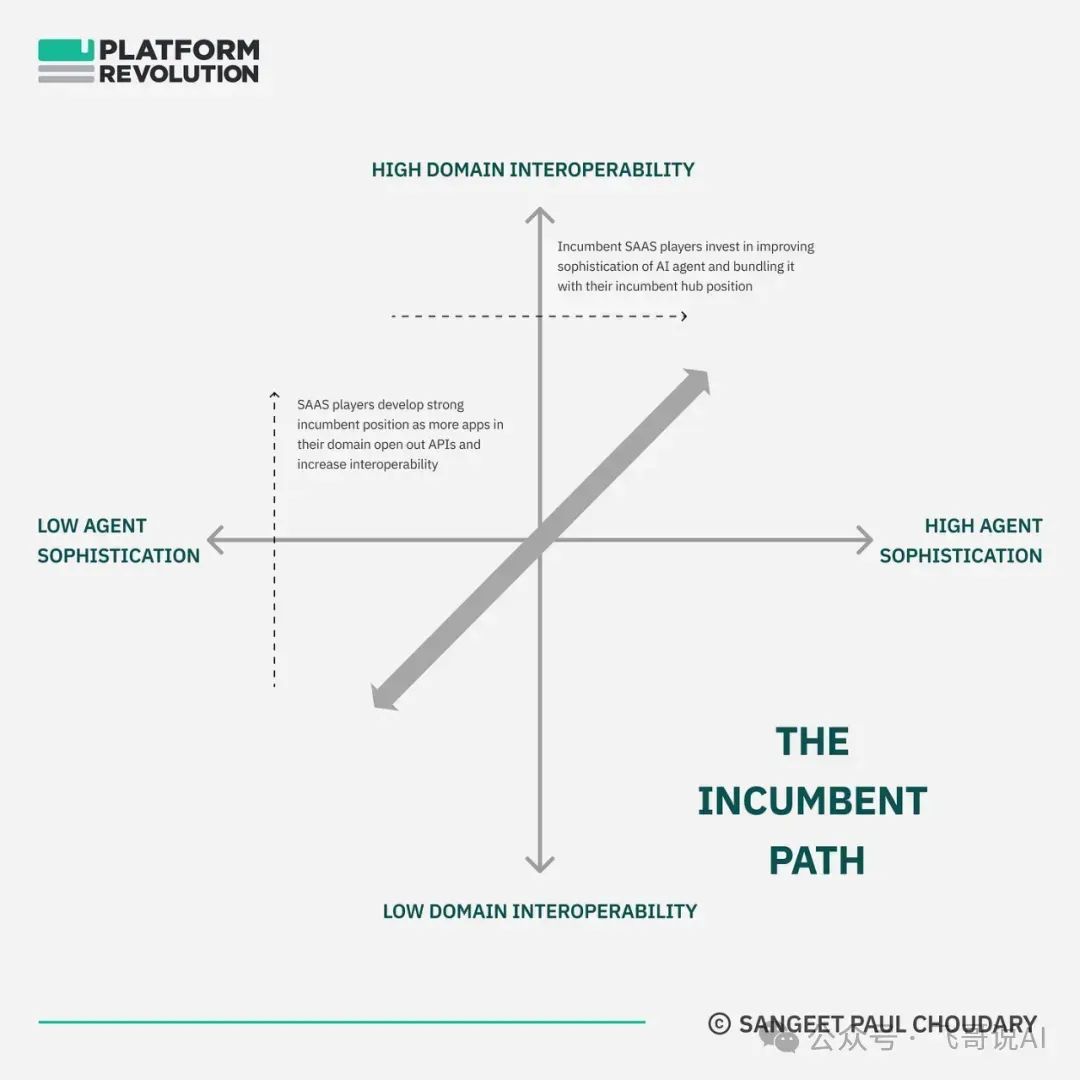

Sangeet Paul Choudary, the entrepreneur and platform economist, argued in a recent blog post that agents make it possible to re-integrate scenarios. In his view, this lets vertical AI players expand “horizontally” by coordinating across multiple workflows, which will reshape the B2B value chain.

The pattern resembles SaaS, where the earlier rise of vertical SaaS followed two logics:

The first is to seize a core scenario and grow quickly; the second is to expand into adjacent scenarios around that core.

For example, Square started with payment SaaS and gradually expanded to a dual ecosystem of ToB and ToC, developing various product lines such as developer tools, virtual terminals, sales, e-commerce, customer management, invoicing, stock investment, installment payments, and virtual currencies, covering various industries like catering, retail, finance, and e-commerce, becoming a comprehensive SaaS solution provider.

Similarly, Toast expanded from providing single-point solutions with POS machines for restaurants to a comprehensive restaurant SaaS platform that includes software (restaurant management, channels, ordering, delivery, payroll management, marketing, scan-to-order), hardware (fixed terminals, handheld terminals, contactless terminals), and supporting services (after-sales, micro-loans).

It can be seen that SaaS giants like Square and Toast follow a development strategy of expanding from vertical to horizontal.

Sangeet believes that most of the “disruption” of the status quo (which can be understood as innovation) occurs through deep exploration of niche scenarios, but most venture capital returns are achieved through “integration”.

Unbundling alone cannot capture lasting value. Many VCs may initially back innovators in niche scenarios, but in the end most of the gains go to the “integrators” who build ecosystems.

To capture value on a large scale, software companies need to continuously extend scenarios—ultimately, all vertical games seek horizontal development.

The same reasoning may apply to agents. With their strong perception, reasoning, and action capabilities, agents applied to vertical fields can solve pain points quickly and effectively, but that alone is not a moat. The real moat lies in interaction and coordination among agents once the underlying data is integrated. In practice, this means agents can re-integrate workflows across APIs and ultimately raise the quality and efficiency of the whole system.

The AI Agent Store, which DingTalk calls the AI Assistant Market, embodies exactly this “integration” and “unification”. It is also the strategy behind DingTalk’s launch of AI assistants. DingTalk aims to use the AI assistant market built on its “Hub” to transform the entire ToB ecosystem. Drawing on its existing industry and data accumulation, it seeks to deliver the highest quality and efficiency in the ToB field.

Over the past year, DingTalk has made its product lines intelligent and opened AI PaaS to ecosystem partners and customers. It has also moved from AI Copilot to AI Agent and then to an AI Agent Store. Step by step, it has paved the way for deploying AI applications at scale. At a time when industries are eager to find scenarios for putting large models to work, DingTalk offers a model for how AI applications can land.

We believe that the application of agents in the ToB field shows AI accelerating enterprises’ digital transformation. The core problem agents solve is “cutting costs and increasing efficiency”. This is also why AI assistants, as represented by DingTalk, can scale further in the ToB blue ocean.

As AI agents become more autonomous, they will evolve into more specialized proxies that take over most professional jobs and skills. Judging by the trend, it is not far-fetched to say that large-model agents will replace 90% of professional jobs. Copilots will still assist human professionals in the remaining 10%.

In the distant future, agents may evolve into “omnipotent intelligent agents”, completely replacing human jobs and integrating with more hardware products (not limited to embodied intelligence and humanoid robots). What will the relationship between human civilization and AI agents look like then?

It all begins on today’s battleground of ToB agents.

And who will be the first to become the biggest beneficiary of this technological wave?
