Accurately identifying human eyes and locating their positions is crucial for numerous applications. From identity verification in security monitoring to gaze tracking in human-computer interaction, reliable human eye recognition technology is indispensable. The following code demonstrates a process for human eye recognition and position marking using MATLAB.

1. Code

%% Human Eye Recognition, Marking Eye Positions
% Open a file selection dialog for the user to choose an image file
[file, path] = uigetfile({'*.*';'*.png';'*.jpg';'*.tif'}, 'Image');
filename = [path, file];
input_image = imread(filename);
figure(); imshow(input_image); title('Original Image');

%% Apply Gaussian filtering to the input image
gauss_image = imgaussfilt(input_image, 1); % Gaussian filter with standard deviation of 1

% Create a cascade object detector for eye detection. Keep exactly one line active:
% 'LeftEyeCART' or 'RightEyeCART' for a single eye, 'EyePairBig' for both eyes.
% detector = vision.CascadeObjectDetector('LeftEyeCART');
% detector = vision.CascadeObjectDetector('EyePairBig');
detector = vision.CascadeObjectDetector('RightEyeCART');

% Detect human eyes; each row of eyes is one box [x y width height]
eyes = step(detector, gauss_image);

%% Get the number and position information of detected eyes
size_eyes = size(eyes); % size_eyes(1) is the number of detected eyes
hold on;

% % Iterate through each detected eye and draw a rectangle to mark the eye position
% for i = 1:size_eyes(1)
%     rectangle('Position', ...
%         [eyes(i,1), eyes(i,2), eyes(i,3), eyes(i,4)], ...
%         'EdgeColor', 'r');
% end

%% Draw a cross to mark the center position of the eyes
for i = 1:size_eyes(1)
    % Get the top-left coordinates of the current eye
    eyes_final = eyes(i,1:2);
    % Crop the current eye region from the Gaussian filtered image
    image = imcrop(gauss_image, eyes(i,:));
    % Draw a blue rectangle around the eye region
    rectangle('Position', eyes(i,:), 'EdgeColor', 'b', 'LineWidth', 2);
    % Convert the cropped eye region to grayscale (skipped if it already is grayscale)
    if size(image, 3) == 3
        image = rgb2gray(image);
    end
    % Convert image data type to double precision
    image = im2double(image);
    % Calculate the gradient of the image, obtaining gradients in x and y directions
    [grad_x, grad_y] = gradient(image);
    % Calculate the gradient magnitude of the image
    g = sqrt(grad_x.*grad_x + grad_y.*grad_y);
    % Calculate the standard deviation and mean of the gradient magnitude
    std_g = std(g(:)); mean_g = mean(g(:));
    % Keep only pixels whose gradient magnitude lies at least half a
    % standard deviation above or below the mean
    g_1 = g; g_1(g_1 < mean_g + 0.5*std_g) = 0;
    g_2 = g; g_2(g_2 > mean_g - 0.5*std_g) = 0;
    g = g_1 + g_2;
    temp_g = g; temp_g(temp_g ~= 0) = 1;   % binary mask of the kept (nonzero) pixels
    w = 1 - image;                          % darker pixels get a larger weight
    grad_x = grad_x.*temp_g; grad_y = grad_y.*temp_g;
    % Get the dimensions of the cropped image
    size_img = size(image);
    % Create x coordinate matrix
    Dx = repmat(1:size_img(2), size_img(1), 1);
    % Create y coordinate matrix
    Dy = repmat(transpose(1:size_img(1)), 1, size_img(2));
    C_max = 0; X = 0; Y = 0;
    % Test every pixel as a candidate center and keep the best-scoring one
    for x = 1:size_img(2)
        for y = 1:size_img(1)
            % Unit vectors pointing from the candidate (x,y) to every pixel
            D_x = Dx - x; D_y = Dy - y;
            E = sqrt(D_x.*D_x + D_y.*D_y);
            D_x = D_x./E; D_y = D_y./E;
            % Dot product with the gradient, weighted by the candidate's darkness
            C = w(y,x)*(D_x.*grad_x + D_y.*grad_y);
            C(C < 0) = 0;
            C(isnan(C)) = 0;   % 0/0 at the candidate pixel itself
            total_C = sum(C(:));
            if total_C > C_max
                C_max = total_C; X = x; Y = y;
            end
        end
    end
    % Draw a red cross on the image to mark the center position of the eye;
    % crop pixel (1,1) is image pixel (eyes_final(1), eyes_final(2)), hence the -1
    plot(X + eyes_final(1) - 1, Y + eyes_final(2) - 1, 'r+', 'MarkerSize', 10);
end

2. Principles

- vision.CascadeObjectDetector is a System object in MATLAB's Computer Vision Toolbox that detects specific targets in images with a cascade classifier (the Viola-Jones algorithm).

(1) Because a cascade rejects most non-target image windows in its early stages, it detects targets quickly.

(2) Different pre-trained model names can be specified, such as 'EyePairBig', 'LeftEyeCART', 'RightEyeCART', to detect different types of targets, such as eyes.

(3) The pre-trained models work well on clear, upright, roughly frontal faces; accuracy drops with poor lighting, unusual poses, or low image quality.

(4) It is user-friendly: pass the image to the step function, which returns one row [x y width height] per detected target, giving its position and size.
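As a minimal sketch of point (4), the snippet below creates a detector and runs it on a sample image. It assumes the Computer Vision Toolbox is installed and uses 'visionteam.jpg', a sample image that ships with the toolbox, as a stand-in for your own photo.

```matlab
% Sketch: run a pre-trained cascade detector on a sample image.
% Assumption: 'visionteam.jpg' is the sample image shipped with the toolbox.
I = imread('visionteam.jpg');

detector = vision.CascadeObjectDetector('EyePairBig');
bboxes = step(detector, I);   % one row [x y width height] per detection

fprintf('Found %d eye pair(s)\n', size(bboxes, 1));
for k = 1:size(bboxes, 1)
    fprintf('  x=%d, y=%d, width=%d, height=%d\n', bboxes(k, :));
end
```

In releases from R2016b onward, calling the object directly, bboxes = detector(I), is equivalent to step(detector, I).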

- Differences in detectors using pre-trained models 'EyePairBig', 'LeftEyeCART', 'RightEyeCART':

detector=vision.CascadeObjectDetector('LeftEyeCART');

detector=vision.CascadeObjectDetector('EyePairBig');

detector=vision.CascadeObjectDetector('RightEyeCART');

Different detection targets:

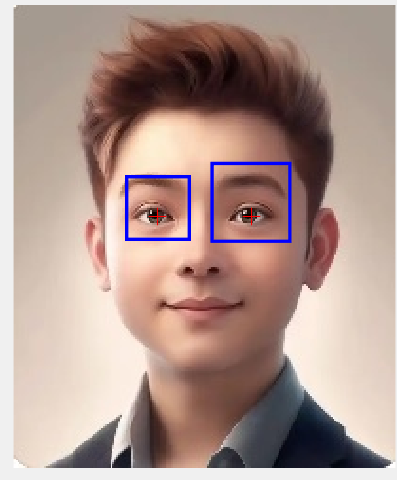

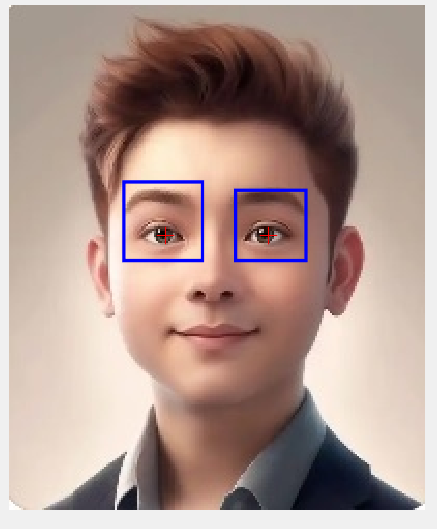

LeftEyeCART and RightEyeCART are used to detect the left and right eyes, respectively. They are trained based on the Classification and Regression Trees (CART) algorithm, providing high accuracy and robustness for single eye detection.

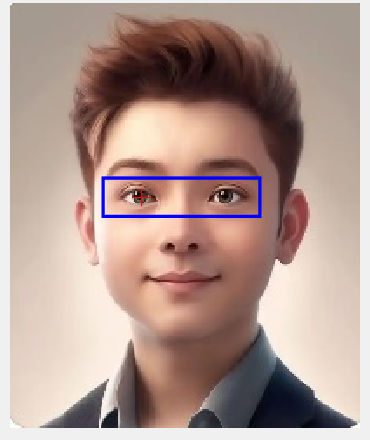

EyePairBig is used to detect a pair of eyes (usually both eyes simultaneously), which may perform better in detecting the overall position and relative relationship of both eyes.

The figures in this part show the detection results for the left eye, the right eye, and both eyes.

Different Applicable Scenarios:

If precise individual localization of the left or right eye is required, such as in eye disease diagnosis or eye behavior analysis tasks, LeftEyeCART or RightEyeCART would be more suitable.

When quickly locating the approximate position of both eyes in a face, or in tasks such as face recognition or face pose estimation, EyePairBig may be more applicable, as it can simultaneously detect a pair of eyes, aiding in quickly determining the direction and position of the face.

- In addition to the aforementioned eye detectors, vision.CascadeObjectDetector can also be used to detect other targets, commonly including:

'Nose': Used to detect the nose, which may be used in facial feature localization and emotion analysis tasks.

'Mouth': Used to detect the mouth, applicable in speech recognition (assisting through mouth movements) and emotion recognition fields.

'FrontalFaceCART' (the default model): Used to detect upright, front-facing faces, one of the most common applications, usable in facial recognition systems, security monitoring, image classification, and various other scenarios. Note that 'Face' itself is not a valid model name.
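Switching targets only requires a different model name. The sketch below loops over three pre-trained models and counts the detections each one returns. It assumes the Computer Vision Toolbox and its sample image 'visionteam.jpg'; the MergeThreshold value is an illustrative choice, not a recommended setting.

```matlab
% Sketch: compare several pre-trained models on the same image.
% Assumption: 'visionteam.jpg' is the sample image shipped with the toolbox.
I = imread('visionteam.jpg');
models = {'FrontalFaceCART', 'Nose', 'Mouth'};
counts = zeros(1, numel(models));

for k = 1:numel(models)
    detector = vision.CascadeObjectDetector(models{k});
    % Assumed value: a higher MergeThreshold demands more overlapping raw
    % detections before a box is reported, which reduces false positives.
    detector.MergeThreshold = 8;
    bboxes = step(detector, I);
    counts(k) = size(bboxes, 1);
    fprintf('%-16s %d detection(s)\n', models{k}, counts(k));
end
```

Small-feature models such as 'Nose' and 'Mouth' tend to produce more false positives than the face model, so it may help to detect the face first and search for the feature only inside that box.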

3. Code Analysis

- Image Reading and Display:

The uigetfile function lets the user select an image file. The path and filename are concatenated, the imread function reads the image, and imshow displays it.

- Human Eye Detection:

Gaussian filtering with a standard deviation of 1 is applied to the original image to smooth it. vision.CascadeObjectDetector then creates a cascade object detector for human eye detection; the listing activates the 'RightEyeCART' model, and the commented-out lines switch to 'LeftEyeCART' or 'EyePairBig'. Finally, the step function detects human eyes in the filtered image and returns their position information.
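To see what the smoothing step does, the sketch below applies imgaussfilt to a synthetic noisy image and prints the pixel standard deviation before and after. The image is random test data rather than a real photo, and the second sigma value is only there for comparison.

```matlab
% Sketch: effect of imgaussfilt on synthetic noise (Image Processing Toolbox).
rng(0);                                  % fixed seed so the run is repeatable
noisy = 0.5 + 0.1 * randn(64, 64);       % flat gray image plus Gaussian noise

smooth_1 = imgaussfilt(noisy, 1);        % sigma = 1, as in the listing
smooth_3 = imgaussfilt(noisy, 3);        % larger sigma: stronger smoothing

fprintf('std before:  %.4f\n', std(noisy(:)));
fprintf('std sigma=1: %.4f\n', std(smooth_1(:)));
fprintf('std sigma=3: %.4f\n', std(smooth_3(:)));
```

A larger sigma removes more noise but also blurs the edges of the eye, so the value is a trade-off rather than a case of "more is better".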

-

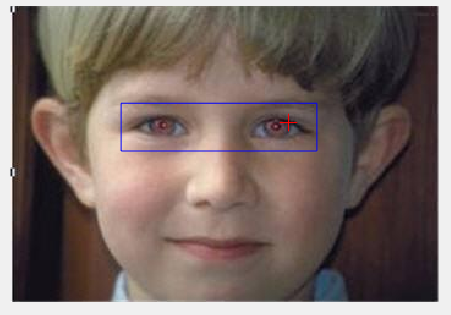

Marking Eye Positions:

Obtaining the number and position information of detected eyes, using hold on to maintain the image state,

and iterating through each detected eye to use the rectangle function to draw red rectangles marking the positions of the eyes.
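The box format can be illustrated without a detector. The sketch below uses two made-up detections in [x y width height] form, draws them with rectangle, and computes the geometric center of each box. This box center is only a rough stand-in and is not the pupil center that the gradient search looks for.

```matlab
% Sketch: mark detection boxes and their geometric centers.
% Assumption: these two rows are made-up detections in [x y width height] form.
bboxes = [40 60 30 20; 100 58 32 22];

% Geometric center of each box: top-left corner plus half the size
centers = bboxes(:, 1:2) + bboxes(:, 3:4) / 2;   % [55 70; 116 69]

figure;
axis([0 160 0 120]); axis ij; hold on;           % image-style axes: y grows downward
for k = 1:size(bboxes, 1)
    rectangle('Position', bboxes(k, :), 'EdgeColor', 'r');
    plot(centers(k, 1), centers(k, 2), 'b+', 'MarkerSize', 10);
end
```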

- Marking Eye Centers:

Each detected eye is iterated through again. The top-left coordinates of the eye are obtained, the eye region is cropped from the filtered image, and a blue rectangle is drawn around it.

The cropped eye region is converted to grayscale and double precision, and its gradient magnitude is calculated. Only pixels whose gradient magnitude lies at least half a standard deviation above or below the mean are kept.

Every pixel of the region is then tested as a candidate center: the unit vectors pointing from the candidate to all other pixels are compared with the gradients at those pixels, the score is weighted by the darkness of the candidate, and the candidate with the highest total score is taken as the eye center.

Finally, the plot function draws a red cross on the image to mark the center position of the eye.

The result is sometimes inaccurate, because it is affected by image quality, lighting, and similar factors.
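To make the center search concrete, the score that the nested loops compute for a candidate center c can be written as follows, where p runs over all other pixels, g_p is the (masked) gradient at p, and I is the grayscale eye region scaled to [0, 1]:

$$C(c) = \bigl(1 - I(c)\bigr)\sum_{p \neq c}\max\!\left(0,\ \frac{(p - c)^{\top} g_p}{\lVert p - c\rVert}\right)$$

The sketch below evaluates this score on a synthetic "eye", a dark disc on a bright background, instead of a real photo. The image size, disc position, and radius are made-up test values, and the thresholding of gradients from the listing is left out for brevity.

```matlab
% Sketch: gradient-based center search on a synthetic eye region.
% Assumption: image size, disc center (cx, cy) and radius r are made-up values.
rows = 21; cols = 31; cx = 18; cy = 9; r = 5;
[Dx, Dy] = meshgrid(1:cols, 1:rows);             % x and y coordinate matrices
img = ones(rows, cols);                          % bright background
img((Dx - cx).^2 + (Dy - cy).^2 <= r^2) = 0.1;   % dark disc ("pupil")

[grad_x, grad_y] = gradient(img);
w = 1 - img;                                     % dark candidates weigh more

C_max = 0; X = 0; Y = 0;
for x = 1:cols
    for y = 1:rows
        D_x = Dx - x; D_y = Dy - y;              % vectors from candidate to pixels
        E = sqrt(D_x.^2 + D_y.^2);
        E(E == 0) = 1;                           % avoid 0/0 at the candidate itself
        C = w(y, x) * (D_x ./ E .* grad_x + D_y ./ E .* grad_y);
        C(C < 0) = 0;                            % drop gradients pointing toward the candidate
        total_C = sum(C(:));
        if total_C > C_max
            C_max = total_C; X = x; Y = y;
        end
    end
end
fprintf('True center: (%d, %d), estimated: (%d, %d)\n', cx, cy, X, Y);
```

On this clean disc the estimate should land on or right next to the true center. In real photos, eyelids, eyebrows, glasses, and reflections add competing gradients, which is one likely reason the marked center can drift.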

4. Summary of MATLAB Functions

- imgaussfilt: Gaussian filtering for smoothing.

- vision.CascadeObjectDetector: Creates a cascade object detector.

- step: Calls the step function on the cascade object detector, passing in the image to be detected, returning the position and size information of the detected targets (human eyes).

- rectangle: Draws rectangles on the image.

- imcrop: Crops a sub-image from a specified region.

- gradient: Calculates the gradient of the image, obtaining gradient components in the x and y directions.

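This example implements human eye recognition and marks both the eye positions and their centers. It detects human eyes with one of the pre-trained eye models in vision.CascadeObjectDetector ('RightEyeCART' is active in the listing; 'EyePairBig' and 'LeftEyeCART' are the commented-out alternatives) and marks them.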

The current code primarily targets human eye detection in clear images and standard poses. In practical applications, consider extending it, for example by handling different lighting conditions or by integrating facial pose estimation to deal with diverse facial poses. If applying this technology to video stream processing, consider how to optimize the algorithm to meet real-time requirements, such as using frame differencing to skip redundant computation on frames that have barely changed.
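One way to read the frame differencing idea is as a gate in front of the detector: run detection only when the new frame differs enough from the previous one, and otherwise reuse the old boxes. The sketch below shows just that gate on synthetic frames. The variable names and the threshold are hypothetical and would need tuning on real video.

```matlab
% Sketch: skip detection on frames that barely changed (frame differencing).
% Assumptions: frames are grayscale doubles in [0, 1]; the threshold is a made-up value.
prev_frame = zeros(48, 64);                 % synthetic previous frame
same_frame = prev_frame;                    % nothing moved
moved_frame = prev_frame;
moved_frame(10:20, 10:20) = 1;              % a bright patch appears

diff_threshold = 0.01;                      % assumed mean absolute difference
frame_changed = @(a, b) mean(abs(a(:) - b(:))) > diff_threshold;

run_on_same = frame_changed(prev_frame, same_frame);     % false: reuse the old boxes
run_on_moved = frame_changed(prev_frame, moved_frame);   % true: run the detector again
fprintf('same frame -> detect: %d, moved frame -> detect: %d\n', run_on_same, run_on_moved);
```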

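Try modifying the code: (1) to detect faces, noses, or mouths with the corresponding pre-trained models (ears have no built-in model and would need a custom-trained detector, for example one created with trainCascadeObjectDetector); (2) to connect a camera for real-time video detection.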

If you have any questions, feel free to leave a message.