Introduction

I have worked in operations and maintenance for several years. When I started, I knew only a few simple commands, and I kept my scripts as basic as possible, which made them long and clunky.

Had I known some more advanced techniques, such as the xargs command, pipelines, and automated-response commands, I could have written concise and efficient scripts.

So here I explain how to use some advanced Linux commands, both for the benefit of others and so that I can refer back to them if I forget.

1. Useful xargs Command

In daily use, I find the xargs command important and convenient. It passes the output of one command to another command as arguments.

For example, suppose we want to find the files ending in .conf under some path and identify their types. The usual approach is to find the files, write the list to a file, and then read that list back and run the file command on each entry.

That approach is cumbersome, and this is where xargs comes in handy.

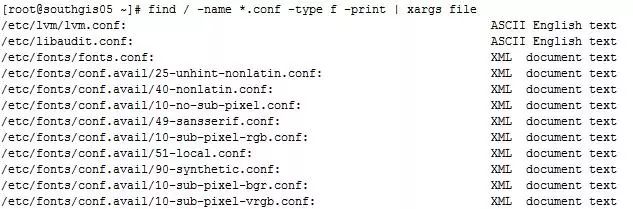

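Example 1: Find files ending with .conf under the / directory and identify their types

Command: # find / -name '*.conf' -type f -print0 | xargs -0 file

The pattern is quoted so that the shell does not expand it before find sees it, and -print0 together with xargs -0 keeps file names that contain spaces intact.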

The output is as follows:

xargs can feed many commands besides file. For instance, you can combine find with tar to locate files under a given path and archive them in one step. The z option selects gzip compression, which matches the .tar.gz name (j would produce a bzip2 archive). With a very long file list, xargs may run tar more than once, and each run would overwrite the archive, so this form suits moderate numbers of files. The command is as follows:

# find / -name '*.conf' -type f -print0 | xargs -0 tar czf test.tar.gz
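
Both pipelines can be tried safely in a scratch directory. The following is only a minimal sketch: the directory, the file names, and the variables demo_dir, conf_count, and archived are invented for illustration, and basename stands in for file so that nothing beyond standard tools is needed. It shows that NUL-delimited names (-print0 with -0) survive a space in a file name.

```shell
# Scratch directory with hypothetical files, so no real configuration is touched.
demo_dir=$(mktemp -d)
touch "$demo_dir/app.conf" "$demo_dir/my site.conf" "$demo_dir/notes.txt"

# -print0 / -0 separate names with NUL bytes, so "my site.conf" stays one argument.
# -n 1 runs basename once per file name.
conf_count=$(find "$demo_dir" -name '*.conf' -type f -print0 \
    | xargs -0 -n 1 basename | wc -l | tr -d ' ')

# Archive the same files; 2>/dev/null hides tar's note about stripping the leading "/".
find "$demo_dir" -name '*.conf' -type f -print0 \
    | xargs -0 tar czf "$demo_dir/conf.tar.gz" 2>/dev/null
archived=$(tar tzf "$demo_dir/conf.tar.gz" | wc -l | tr -d ' ')

echo "found $conf_count .conf files, archived $archived"
rm -rf "$demo_dir"
```

Without -print0 and -0, xargs would split "my site.conf" at the space and pass two names that do not exist.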

2. Running Commands or Scripts in the Background

Sometimes we do not want an operation to be terminated when the terminal session disconnects, especially a database import or export. With large amounts of data, we cannot guarantee that the network will stay up for the whole run, so running the script or command in the background is an important safeguard.

For example, if we want to run a database export operation in the background and record the command output to a file, we can do the following:

nohup mysqldump -uroot -pxxxxx --all-databases > ./alldatabases.sql & (xxxxx is the password)

If you do not want the password to appear in plain text, leave it out after -p:

nohup mysqldump -uroot -p --all-databases > ./alldatabases.sql (do not add the & symbol at the end)

After you run this command, it prompts for the password. Once you enter it, the command keeps running in the foreground, but our goal is to run it in the background. Press Ctrl+Z to suspend it, then enter bg to resume it in the background. This achieves the same effect as the first command while keeping the password hidden.

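nohup writes to a nohup.out file in the current directory only when standard output is a terminal. In the commands above, standard output is redirected to alldatabases.sql, so no nohup.out is created, and error messages follow standard output into that file unless you redirect standard error separately (for example by adding 2> dump.err). Check there to see whether there were any execution errors.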
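
To keep error messages apart from the dump itself, standard output and standard error can each go to their own file. A minimal sketch follows, under stated assumptions: the sh -c command is a harmless stand-in for mysqldump, and work_dir, dump.sql, and dump.err are hypothetical names.

```shell
work_dir=$(mktemp -d)

# Stand-in for mysqldump: one line on stdout (the "dump"), one on stderr (a warning).
nohup sh -c 'echo "CREATE TABLE demo (id INT);"; echo "warning: example message" >&2' \
    > "$work_dir/dump.sql" 2> "$work_dir/dump.err" &
job_pid=$!          # PID of the background job

wait "$job_pid"     # a real script could carry on and check back later
job_status=$?

echo "exit status: $job_status"
cat "$work_dir/dump.err"
```

With this layout, an empty dump.err and a zero exit status are a quick sign that the job finished cleanly.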

3. Find Processes with High Memory Usage in the Current System

We often find that memory consumption on a server is high. How do we list the processes sorted by memory usage?

Command: # ps aux | sort -rnk 4 | head -20

In the output, the fourth column is the percentage of memory used, and the last column is the command of the corresponding process.

4. Find Processes with High CPU Usage in the Current System

We often find that CPU consumption on a server is high. How do we list the processes sorted by CPU usage?

Command: # ps aux | sort -rnk 3 | head -20

In the output, the third column is the percentage of CPU used, and the last column is the command of the corresponding process.

As you may have noticed, the 3 and 4 after the sort command represent sorting by the third and fourth columns, respectively.
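
One side effect of sorting the whole ps output is that the header line is sorted along with the data. The sketch below is one possible way around that, not part of the original commands: top_by_column is a hypothetical helper, and the sample rows are made up so the example runs without depending on the processes of any real machine.

```shell
# top_by_column COLUMN COUNT
# Reads ps-style output on stdin, keeps the header line in place, and prints
# the COUNT rows with the largest numeric value in COLUMN.
top_by_column() {
    IFS= read -r header          # first line is the column header
    printf '%s\n' "$header"
    sort -rn -k "$1,$1" | head -n "$2"
}

# Made-up sample in the column order of `ps aux`, trimmed to five columns.
sample='USER PID %CPU %MEM COMMAND
root 1 0.1 0.5 init
mysql 220 12.0 35.2 mysqld
www 310 55.3 4.1 nginx'

top_mem=$(printf '%s\n' "$sample" | top_by_column 4 1 | tail -n 1 | awk '{ print $NF }')
top_cpu=$(printf '%s\n' "$sample" | top_by_column 3 1 | tail -n 1 | awk '{ print $NF }')
echo "most memory: $top_mem, most CPU: $top_cpu"
```

On a live system the same helper would be used as ps aux | top_by_column 4 20 for memory, or with 3 in place of 4 for CPU.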

5. View Multiple Logs or Data Files Simultaneously

In daily work, we often follow log files with the tail command in separate terminals, one terminal per log file. I have done this too, but it gets cumbersome. A tool called multitail lets you view multiple log files in the same terminal.

First, install multitail:

# wget ftp://ftp.is.co.za/mirror/ftp.rpmforge.net/redhat/el6/en/x86_64/dag/RPMS/multitail-5.2.9-1.el6.rf.x86_64.rpm

# yum -y localinstall multitail-5.2.9-1.el6.rf.x86_64.rpm

The multitail tool supports text highlighting, content filtering, and many more features you may need.

Here’s a useful example:

Suppose we want to watch the secure log filtered by a keyword while also monitoring a live ping:

The command is as follows:

# multitail -e "Accepted" /var/log/secure -l "ping baidu.com"

Convenient, isn't it? When we want to check whether two logs are correlated, we can watch whether an entry in one coincides with output in the other. With two separate terminals, switching back and forth wastes time, so viewing both in multitail is a good approach.

6. Continuous Ping and Log Results

Operations staff often hear someone ask whether a network problem is behind some strange symptom in the business, the assumption being that the server network is at fault. This is commonly called “passing the buck”: when a business issue arises and the people responsible cannot find the cause, they often blame the server network.

If you then ping a few packets and show the results, people may object that the problem was only temporary and that the network is back to normal now. This can be quite frustrating.

Monitoring data from Zabbix may not help either, since you can hardly set its collection interval to once per second. I have run into this before, and I used the following command to collect ping data.

Then, when someone tries to pass the buck to me again, I can pull the ping log for the period when the issue occurred, and we can discuss it openly. This let me turn the tables, and they no longer passed the buck so easily. That felt great.

Command:

ping api.jpush.cn | awk '{ print $0" " strftime("%Y-%m-%d %H:%M:%S",systime()) } ' >> /tmp/jiguang.log &

The output will be recorded in /tmp/jiguang.log, adding a new ping record every second.
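
The strftime() function used above is a gawk extension and may be missing from some other awk implementations. A portable alternative, sketched here as an assumption rather than the original method, is a small shell function that appends the output of date to each line. The name add_timestamp, the sample ping reply, and the date in the grep comment are invented for illustration.

```shell
# add_timestamp: append the current local time to every line read from stdin.
# Each line is written as soon as it arrives, so the log grows in real time.
add_timestamp() {
    while IFS= read -r line; do
        printf '%s %s\n' "$line" "$(date '+%Y-%m-%d %H:%M:%S')"
    done
}

# Demonstration with one made-up ping reply instead of a live ping.
log_file=$(mktemp)
printf '%s\n' '64 bytes from 192.0.2.10: icmp_seq=1 ttl=53 time=8.21 ms' \
    | add_timestamp >> "$log_file"
cat "$log_file"

# Real use would look like this (same host and log path as above):
#   ping api.jpush.cn | add_timestamp >> /tmp/jiguang.log &
# Later, pull out one minute of records by its timestamp (hypothetical date):
#   grep ' 2024-05-01 14:07:' /tmp/jiguang.log
```

Because the timestamp sits at the end of each line in a fixed format, a plain grep on the minute or hour is enough to extract the window in dispute.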

7. Check TCP Connection Status

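Counting TCP connections by state (ESTABLISHED, TIME_WAIT, and so on) helps when analyzing whether connections are being released, or when analyzing the situation during an attack. The command below counts connections on all ports; to look at port 80 only, filter on the local address first.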

Command:

netstat -nat |awk '{print $6}'|sort|uniq -c|sort -rn
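
To restrict the count to a single port and skip the two header lines that netstat prints, the filtering can be moved into awk. The following is a sketch under stated assumptions: count_states is a hypothetical helper, and the sample lines are made up (using documentation address ranges) so the example runs without netstat.

```shell
# count_states PORT
# Reads `netstat -nat` style output on stdin and prints "COUNT STATE" for
# connections whose local address ends in :PORT, most frequent state first.
count_states() {
    awk -v port="$1" '
        # Only lines starting with "tcp" are counted, which skips the headers.
        $1 ~ /^tcp/ && $4 ~ (":" port "$") { count[$6]++ }
        END { for (state in count) print count[state], state }
    ' | sort -rn
}

# Made-up sample; addresses come from documentation ranges.
sample='Active Internet connections (servers and established)
Proto Recv-Q Send-Q Local Address Foreign Address State
tcp 0 0 192.0.2.1:80 203.0.113.5:51000 ESTABLISHED
tcp 0 0 192.0.2.1:80 203.0.113.5:51001 TIME_WAIT
tcp 0 0 192.0.2.1:80 198.51.100.7:40000 ESTABLISHED
tcp 0 0 192.0.2.1:8080 198.51.100.7:40001 ESTABLISHED
tcp 0 0 192.0.2.1:22 198.51.100.9:40002 ESTABLISHED'

state_counts=$(printf '%s\n' "$sample" | count_states 80)
printf '%s\n' "$state_counts"
```

On a live server this would be run as netstat -nat | count_states 80. Anchoring the pattern at the end of the local address keeps port 8080 out of the port 80 count.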

8. Find the Top 20 IPs with the Most Requests on Port 80

Sometimes when the request volume of a business suddenly increases, we can check the source IPs of the requests. If they are concentrated on a few IPs, there may be an attack. We can use the firewall to block them.

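The command below keeps only connections whose local address ends in :80. A plain grep 80 would also match ports such as 8080 and any address that happens to contain 80. The command is as follows:

# netstat -ant | awk '$4 ~ /:80$/ {print $5}' | awk -F: '{print $1}' | sort | uniq -c | sort -nr | head -n20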
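
The same ranking can be wrapped in a reusable function. This is a minimal sketch, not the original command: top_clients is a hypothetical name, the sample connections are made up, and the port-stripping step assumes IPv4 addresses.

```shell
# top_clients PORT COUNT
# Reads `netstat -ant` style output on stdin and prints the COUNT client IPs
# with the most connections to local port PORT, as "connections ip" lines.
top_clients() {
    awk -v port="$1" '
        $1 ~ /^tcp/ && $4 ~ (":" port "$") {
            sub(/:[0-9]+$/, "", $5)    # strip the client port, keep the IP
            print $5
        }
    ' | sort | uniq -c | sort -rn | head -n "$2"
}

# Made-up sample; addresses come from documentation ranges.
sample='Proto Recv-Q Send-Q Local Address Foreign Address State
tcp 0 0 192.0.2.1:80 203.0.113.5:51000 ESTABLISHED
tcp 0 0 192.0.2.1:80 203.0.113.5:51001 ESTABLISHED
tcp 0 0 192.0.2.1:80 203.0.113.5:51002 TIME_WAIT
tcp 0 0 192.0.2.1:80 198.51.100.7:40000 ESTABLISHED
tcp 0 0 192.0.2.1:8080 198.51.100.7:40001 ESTABLISHED'

busiest=$(printf '%s\n' "$sample" | top_clients 80 1 | awk '{ print $1, $2 }')
echo "busiest client on port 80: $busiest"
```

On a live server the call would be netstat -ant | top_clients 80 20, and an IP that dominates the list is a candidate for a firewall rule.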

9. SSH Port Forwarding

Many readers know SSH as a secure protocol for remote login on Linux, commonly used to manage servers remotely. Fewer know that SSH can also forward ports. This feature is quite powerful, as I will demonstrate below.

Example Background: Our company has a bastion host, and all operations must be performed on the bastion host. Some developers need to access the ElasticSearch head panel to check the cluster status, but we do not want to expose the 9200 port of ElasticSearch and still want to access it through the bastion host.

Therefore, we forward requests sent to port 9200 on the bastion host (192.168.1.15) to port 9200 on the ElasticSearch server (192.168.1.19).

Example:

Forward access to port 9200 on the local machine (192.168.1.15) to port 9200 on 192.168.1.19

ssh -p 22 -C -f -N -g -L 9200:192.168.1.19:9200 [email protected]

Remember: as a prerequisite, copy your SSH public key to the remote host first so that login works with key authentication.

After executing the command, requests to 192.168.1.15:9200 actually reach 192.168.1.19:9200.
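
The options in the forwarding command are easier to remember once each one is spelled out. The sketch below wraps the same options in a hypothetical function, open_tunnel, with a dry-run mode that only prints the command. The login ops@bastion.example and the DRY_RUN variable are placeholders for illustration, not values from this article.

```shell
# open_tunnel LOCAL_PORT TARGET_HOST TARGET_PORT LOGIN
# Builds the forwarding command with the options used above.
# With DRY_RUN set, it prints the command instead of running it.
open_tunnel() {
    # -p 22  SSH port of the host we log in to
    # -C     compress the traffic
    # -f     go to the background after authentication
    # -N     do not run a remote command, forward only
    # -g     let other machines connect to the forwarded local port
    # -L     listen on LOCAL_PORT and forward to TARGET_HOST:TARGET_PORT
    set -- ssh -p 22 -C -f -N -g -L "$1:$2:$3" "$4"
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# ops@bastion.example is a placeholder login, not a value from the article.
tunnel_preview=$(DRY_RUN=1 open_tunnel 9200 192.168.1.19 9200 ops@bastion.example)
echo "$tunnel_preview"
```

The -g option is what allows developers on other machines to reach the forwarded port on the bastion host. Without it, ssh binds the listening port to the loopback address only.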