This article is from the Automotive Electronics Network

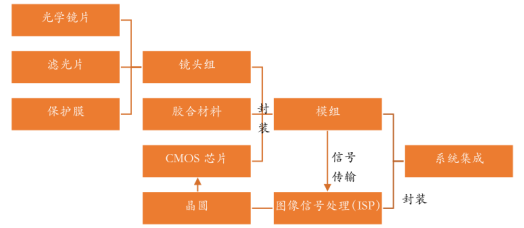

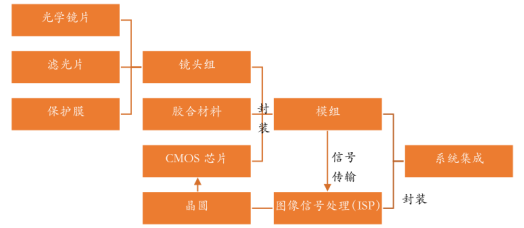

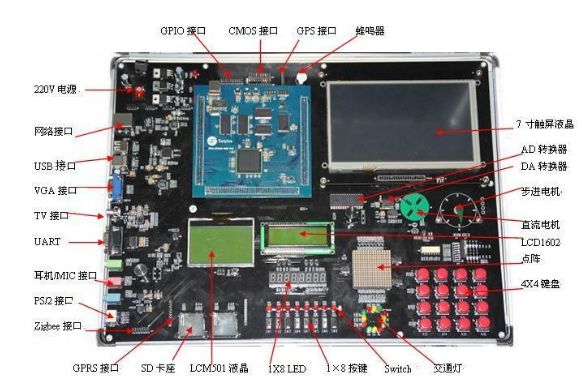

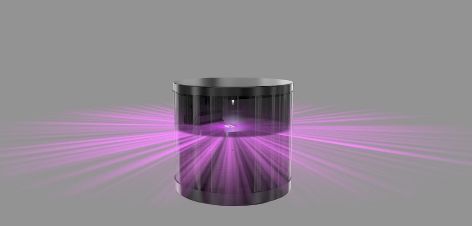

Fig. 3 Image Signal Processor (ISP)

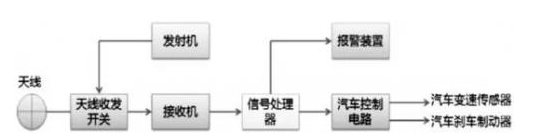

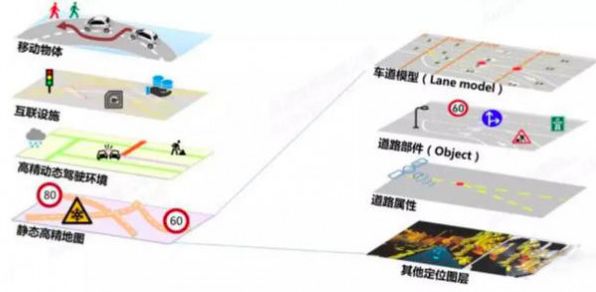

Fig. 21 V2X (Vehicle-to-Everything) Communication Modes