1: Classification of AI Chips

Many AI chips are on the market, and the variety can be bewildering.

By application scope, they generally fall into a few categories.

Terminal AI Chips: Terminal AI chips require low power consumption and have relatively low computing power demands, mainly for AI inference applications. Terminal AI chips are represented by various MCUs with AI modules, focusing on specific applications. For example, the AI chip in a smart speaker can be used for voice recognition, while the AI chip in a smart lock can be used for facial recognition, and so on.

Cloud AI Chips: Cloud AI chips are used in data centers for cloud AI acceleration and can handle both inference and training. Examples include NVIDIA’s GPGPU cards and Google’s TPU. Cloud AI chips offer high performance and have large dies; the A100, for example, reportedly has a die area of 826 mm² on a 7 nm process.

In addition, there are Edge AI chips.

So what are Edge AI chips used for?

When it comes to edge computing, there is a very famous “Octopus Theory”.

The octopus is a strange creature with eight legs, and some of its decisions are made not in its brain but in its legs.

If the octopus’s brain is the cloud, then its legs are the edge!

This metaphor is so interesting that it is often referenced in edge computing scenarios.

For example, in autonomous driving or ADAS (Advanced Driver Assistance Systems), decision-making must be completed locally.

Many scenarios involve large amounts of data computation and strict real-time requirements, so the computation should not take a detour through a cloud data center.

Examples include smart driving, smart factories, and traffic management combined with security.
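To make the real-time argument concrete, the sketch below estimates how far a vehicle travels while it waits for a decision. The speed and both latency figures are illustrative assumptions, not measurements from any particular system.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres covered at speed_kmh during latency_ms."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000.0   # ms -> s

# Hypothetical latency budgets (assumed values, for illustration only).
EDGE_LATENCY_MS = 20.0    # local inference on the edge device
CLOUD_LATENCY_MS = 150.0  # network round trip plus cloud inference

if __name__ == "__main__":
    for name, latency in (("edge", EDGE_LATENCY_MS), ("cloud", CLOUD_LATENCY_MS)):
        d = distance_travelled_m(100.0, latency)
        print(f"{name}: {d:.2f} m travelled before a decision arrives")
```

With these assumed numbers, a vehicle at 100 km/h covers roughly 0.56 m in the edge case and about 4.17 m in the cloud case.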

Compared to terminal AI chips used in many consumer-grade scenarios, edge AI chips are more often applied in industrial fields.

Edge AI basically confines applications within a certain range, which could be a car, a train, a factory, or a store.

Within this range, there are some real-time AI decision-making and processing needs that must be met.

Depending on the setting, we call the AI-enabled result autonomous driving, smart manufacturing, smart retail, and so on.

The core idea is to solve problems at the data source.

This is the need that edge AI chips exist to meet.

2: Features of Edge AI Chips

So what features do edge AI chips have?

1: Strong Computing Power: Edge AI chips have more computing power than terminal AI chips and usually solve problems independently. Their performance is typically 1-2 orders of magnitude higher than that of terminal AI chips focused on a specific application, such as facial recognition or voice recognition in a smart speaker.

2: Rich Peripherals: Edge AI depends on rich input information. For example, multiple camera inputs significantly increase the demand for interfaces like MIPI, so the chip must support several camera and audio inputs simultaneously.

3: Programmability: Edge AI chips are typically designed for industrial users, requiring AI to empower users. In other words, AI must be integrated with user application scenarios, often requiring programming based on different industrial users’ needs to adapt to different models and scenarios. They are not limited to specific applications.

A well-designed programmable architecture is key to solving problems. Edge AI chips are not directly provided to industrial customers; rather, they must be customized according to the needs of industrial customers for AI empowerment, which is a core feature of edge AI chips.

3: Architecture of Edge AI Chips

So what does the architecture of edge AI chips look like?

NVIDIA’s edge AI computing platform, JETSON, is one example.

The latest generation released is JETSON AGX Orin.

JETSON, as NVIDIA’s edge AI computing platform, is not as well-known as NVIDIA’s GPGPU.

However, JETSON AGX Orin combines the Ampere GPGPU architecture with ARM Cortex-A78 CPU cores, which allows it to perform both inference and training at the edge.

As an edge AI product, it delivers up to 200 TOPS (INT8).
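As a rough way to read a TOPS figure, the sketch below converts peak INT8 throughput into an upper bound on inferences per second. The per-frame operation count and the utilization factor are hypothetical placeholders; real throughput depends on the model, memory bandwidth, and software stack.

```python
def max_frames_per_second(peak_tops: float, gops_per_frame: float, utilization: float) -> float:
    """Upper bound on inference rate.

    peak_tops      -- peak throughput in tera-operations per second
    gops_per_frame -- giga-operations needed for one inference (assumed)
    utilization    -- fraction of peak actually achieved, in (0, 1] (assumed)
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    ops_per_second = peak_tops * 1e12 * utilization
    return ops_per_second / (gops_per_frame * 1e9)

if __name__ == "__main__":
    # 200 TOPS is the figure quoted in the text; 10 GOPs per frame and
    # 30% utilization are made-up values for illustration.
    fps = max_frames_per_second(200.0, 10.0, 0.3)
    print(f"{fps:.0f} frames/s (upper bound)")
```

The point of the utilization parameter is that peak TOPS is a ceiling: the fraction a workload actually reaches is what determines the performance a user sees.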

Using JETSON AGX Orin as an example, let’s explore its internal chip architecture.

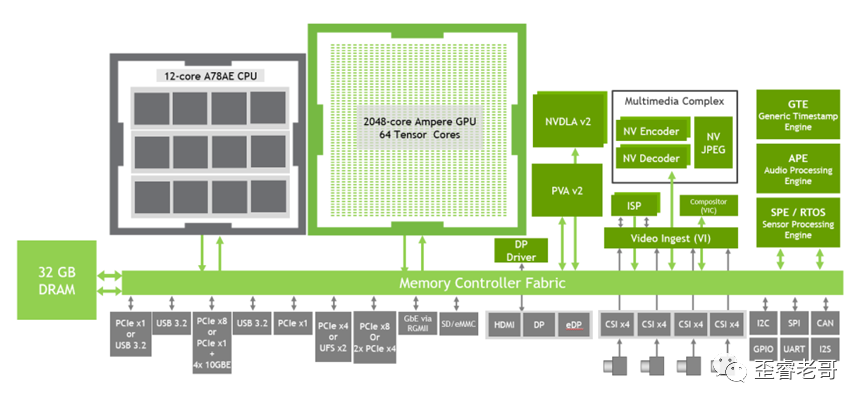

The computing components of this chip consist of three main parts: the CPU, the GPU, and the DSA (domain-specific accelerators: NVDLA + PVA).

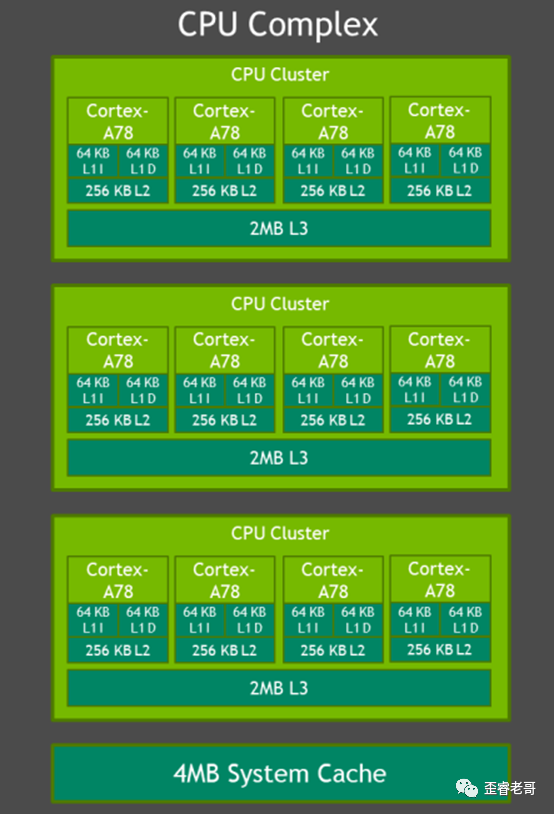

CPU:

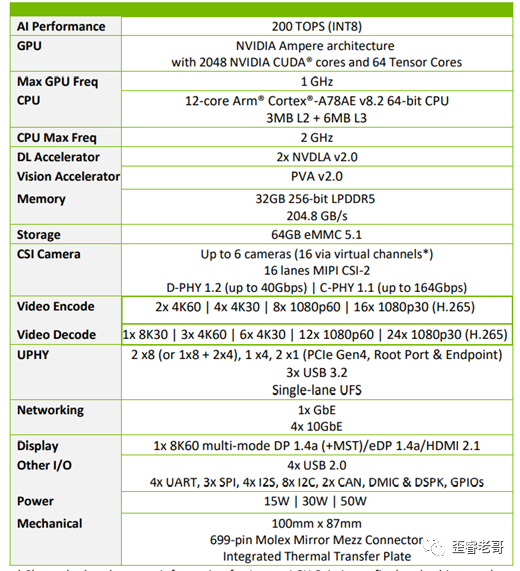

JETSON includes three clusters of quad-core A78 processors, with frequencies of up to 2 GHz, for a total of 12 A78 cores. Unlike mobile processors, the three A78 clusters are symmetrical rather than split into big and small cores. They are aimed primarily at compute throughput rather than at saving power across the varying loads of mobile applications. For scalar workloads, the computing capability of the multi-core A78 is also impressive.

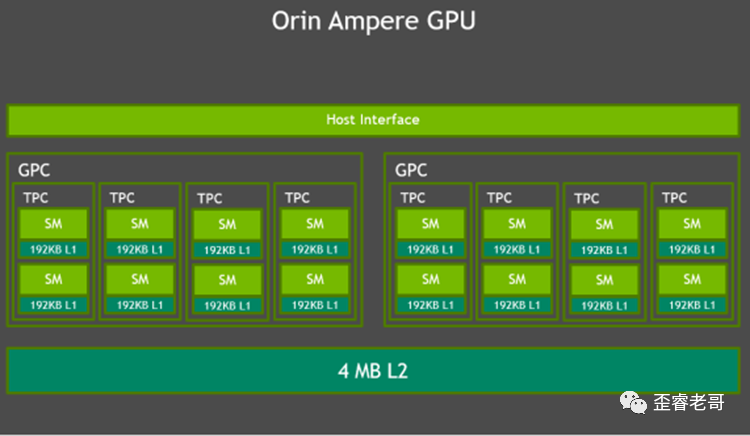

GPU:

The GPU uses NVIDIA’s latest Ampere architecture, with 2048 CUDA cores and 64 Tensor cores, all of them programmable. Ampere is the latest generation of NVIDIA’s GPGPU architecture, following Kepler, Maxwell, Pascal, Volta, and others, and it upgrades the Tensor cores. With the Ampere GPU, and unlike many other edge AI chips, JETSON can support both inference and training.

The most important aspect is that this AI chip can be programmed using CUDA, and programmability is a core requirement for edge AI chips.

DSA:

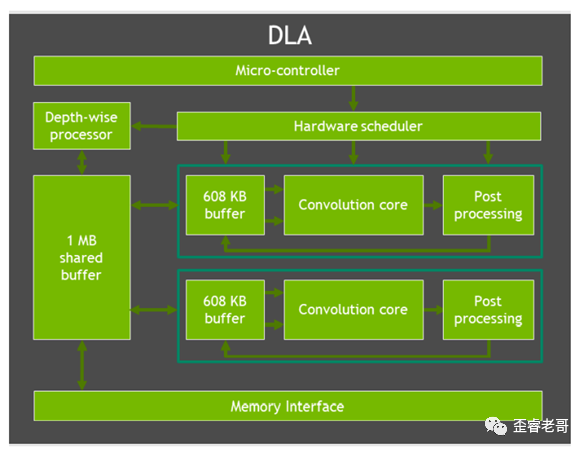

For AI acceleration, JETSON also has two NVDLA cores and a vision accelerator (PVA).

NVDLA is mainly used for inference; at its core it is still an engine for large matrix convolution operations.
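To illustrate why convolution is described as a matrix operation, here is a minimal pure-Python sketch of the common im2col technique, which unrolls image patches into rows so that a convolution becomes a series of dot products. It shows the general idea only and is not a description of how NVDLA implements convolution in hardware; the function names are made up for this example.

```python
from typing import List

Matrix = List[List[int]]

def im2col(image: Matrix, k: int) -> Matrix:
    """Unroll every k x k patch of image (stride 1, no padding) into one row."""
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            rows.append([image[i + di][j + dj] for di in range(k) for dj in range(k)])
    return rows

def conv2d_as_matmul(image: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 2D convolution (cross-correlation form, as in CNNs)
    computed as patch matrix times flattened square kernel."""
    k = len(kernel)
    flat_kernel = [v for row in kernel for v in row]
    patches = im2col(image, k)
    out_w = len(image[0]) - k + 1
    # Each output pixel is one dot product, i.e. a chain of multiply-accumulates.
    flat_out = [sum(p * q for p, q in zip(patch, flat_kernel)) for patch in patches]
    return [flat_out[r:r + out_w] for r in range(0, len(flat_out), out_w)]

if __name__ == "__main__":
    image = [[1, 2, 3],
             [4, 5, 6],
             [7, 8, 9]]
    kernel = [[1, 0],
              [0, 1]]
    print(conv2d_as_matmul(image, kernel))  # [[6, 8], [12, 14]]
```

Because every output value is an independent multiply-accumulate chain, the work maps naturally onto dedicated hardware with many parallel multiply-accumulate units.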

NVDLA is open source, and interested readers can download the source code from GitHub. For details, see: nvdla on GitHub.

It is a practical example of open source in the chip industry and has done much to move the industry forward.

IO Resources:

In addition to computing resources, IO resources are also quite rich. After all, edge AI requires rich inputs; the chip supports six cameras and 16 MIPI lanes.

If we had to pick the single most important interface for edge AI chips, it would be MIPI. After all, what edge AI chips need most, besides computing power, is MIPI connectivity.

MIPI is the “eyes” of edge AI chips (used to connect cameras). Unlike humans, it needs many “eyes”; edge AI chips need to be “aware” of their surroundings.
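A back-of-the-envelope calculation shows why camera count drives interface demand. The sketch below computes the raw pixel data rate for an assumed sensor mode (1920x1080, 30 fps, 10 bits per pixel); these values are illustrative and are not taken from the JETSON AGX Orin specification, and protocol overhead is ignored.

```python
def camera_bitrate_gbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Raw (uncompressed) pixel data rate of one camera in Gbit/s,
    ignoring blanking intervals and protocol overhead."""
    return width * height * fps * bits_per_pixel / 1e9

if __name__ == "__main__":
    # Assumed sensor mode, chosen only for illustration.
    per_camera = camera_bitrate_gbps(1920, 1080, 30, 10)
    total = 6 * per_camera  # six cameras, as mentioned in the text
    print(f"per camera: {per_camera:.3f} Gbit/s, six cameras: {total:.3f} Gbit/s")
```

Under these assumptions one camera produces about 0.62 Gbit/s and six cameras about 3.73 Gbit/s, before any overhead, which is why the number and speed of camera interfaces matter so much.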

Without a brain, ears, or eyes, edge AI chips cannot function.

There are also USB interfaces that can support some USB cameras.

PCIe is also supported, in both RC (root complex) and EP (endpoint) modes. This means the chip can act as a host with other acceleration cards plugged into it, or as an acceleration card plugged into another host.

For networking, it supports four 10G ports, enabling high-speed network transmission or interconnection between several JETSON AGX units.

The following image shows the detailed parameters of JETSON AGX Orin; feel free to take a look!

Given these parameters, the die cannot be small; my guess is that the chip is manufactured on a 7nm process to balance area and power consumption.

Its typical power consumption is roughly 15W, 30W, or 45W.

4: Functions of Edge AI Chips

What can such a powerful AI chip do?

For example, during the pandemic, many places restricted the number of people allowed in (say, a venue limited to 100 people).

From a small store to an entire city block, obtaining crowd density in a timely manner is a typical task.

By using facial recognition, we can determine the density of people in a specific area and make real-time decisions for crowd control.
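The decision step of such a system can be sketched as follows. The per-camera head counts are assumed to come from a detection model that is not shown here; the function names, the 90% warning threshold, and the assumption that cameras cover non-overlapping zones are all hypothetical choices for illustration.

```python
from typing import Dict

def total_occupancy(counts_by_camera: Dict[str, int]) -> int:
    """Sum per-camera person counts.

    Assumes the cameras cover non-overlapping zones, so nobody is counted twice.
    """
    return sum(counts_by_camera.values())

def crowd_action(counts_by_camera: Dict[str, int], limit: int = 100,
                 warn_ratio: float = 0.9) -> str:
    """Map the current occupancy to a simple crowd-control action."""
    total = total_occupancy(counts_by_camera)
    if total >= limit:
        return "stop_entry"
    if total >= warn_ratio * limit:
        return "warn"
    return "ok"

if __name__ == "__main__":
    # Hypothetical counts produced by a detector on three camera feeds.
    counts = {"entrance": 30, "hall": 50, "checkout": 12}
    print(total_occupancy(counts), crowd_action(counts))
```

The logic itself is trivial; the hard part, and the reason an edge AI chip is needed, is producing those counts from several live video streams at once.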

A terminal AI MCU would struggle to provide enough computing power while also accepting multiple video inputs.

That’s where edge AI chips come into play.

To serve as a solution, the chip must have not only a very powerful AI engine but also many video input sources.

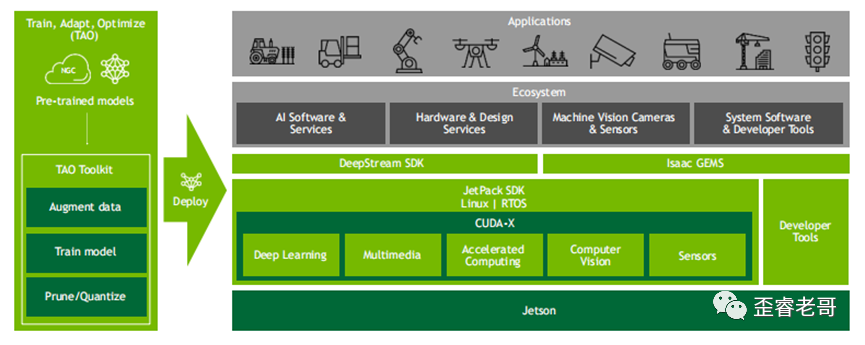

Finally, a robust AI framework (SDK) is required to run all this hardware.

In other words, edge AI requires further development tailored to the user’s AI requirements.

As mentioned earlier, one important feature of edge AI is this secondary development of “AI + scenario”, grounded in the industrial context.

Many AI chips look strong on paper, but converting that computing power into improvements users can actually perceive takes a great deal of engineering work.

Therefore, industrial users need an open AI platform rather than just a chip with computing power; it is more important to develop AI business according to user needs.

There is an old saying: “Work that does not follow the client’s wishes earns no credit, however hard you try.”

Without software (an SDK), or with software that is hard to use, a chip is like a martial artist with technique but no inner strength.

Even if AI chips have strong computing power, they cannot perform without software (SDK).

Balancing hardware and software is a perennial concern.

The question is how to convert AI computing power into user productivity.

In this regard, JETSON AGX Orin provides JetPack 5.0, supporting CUDA 11 and the latest versions of cuDNN and TensorRT.

These software tools, especially CUDA, help users develop applications that combine the JETSON platform’s computing power with its rich IO, ultimately fulfilling the “mission” assigned to AI chips in edge computing.

What the end user receives is a user-defined AI chip, or, put another way, a requirement-defined AI chip.

This is the essence of edge AI chips!

Author: Wei Rui