Source: Shenyang Institute of Automation, Chinese Academy of Sciences

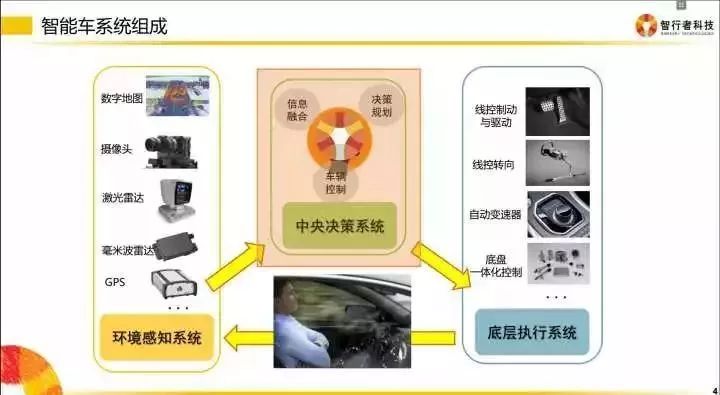

An intelligent vehicle is a comprehensive system that integrates functions such as environmental perception, planning and decision-making, and multi-level assisted driving. It draws on computer science, modern sensing, information fusion, communication, artificial intelligence, and automatic control, which makes it a typical high-tech composite.

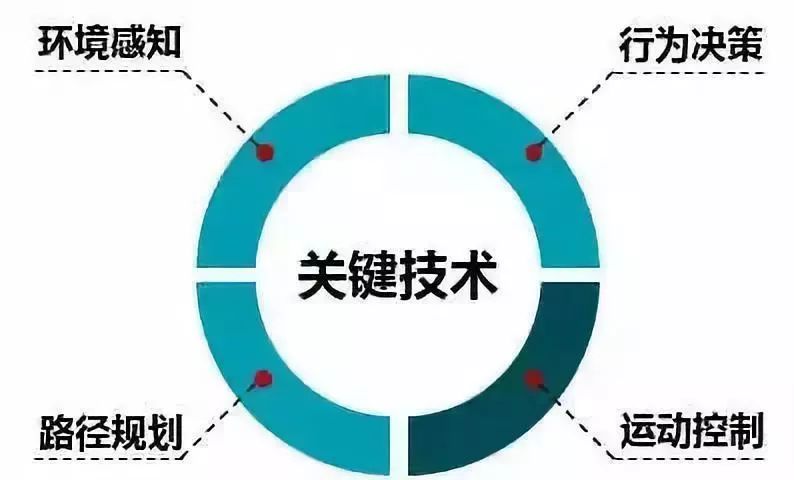

The key technologies of autonomous driving can be divided into four main parts: environmental perception, behavior decision-making, path planning, and motion control.

In theory, autonomous driving sounds simple: just four key technologies. But how exactly is it implemented? Google has been working on autonomous driving since 2009, and after eight years the technology has still not been commercialized, which shows that it is far from simple. Autonomous driving is a large, complex engineering project that involves many technologies, each with a great deal of detail. I will discuss the technologies involved in autonomous vehicles from both the hardware and the software perspective.

Hardware

Talking about autonomous driving without mentioning hardware is nonsensical. Let’s take a look at the diagram below, which basically includes all the hardware required for autonomous driving research.

However, not all of these sensors will necessarily appear on one vehicle. Which sensors are present depends on the tasks the vehicle needs to accomplish. If it only needs to handle highway driving, like Tesla’s AutoPilot function, laser sensors (lidar) are not needed; for urban driving, it is very difficult to rely on vision alone without laser sensors.

Autonomous driving system engineers need to select hardware and control costs based on the task. It’s somewhat similar to assembling a computer; give me a requirement, and I will provide you with a configuration list.

Vehicles

Since we are talking about autonomous driving, vehicles are of course indispensable. From SAIC’s experience in autonomous driving development, it is advisable not to choose pure gasoline vehicles if possible. On one hand, the power consumption of the entire autonomous driving system is enormous, and hybrid and pure electric vehicles have obvious advantages in this regard. On the other hand, the underlying control algorithms for engines are much more complex than for electric motors. Rather than spending a lot of time calibrating and debugging the underlying systems, it is better to directly choose electric vehicles to study higher-level algorithms.

Media in China have also conducted research specifically on the choice of test vehicles. “Why did Google and Apple both choose the Lexus RX450h (hybrid vehicle)?” “What considerations do technology companies have when selecting test vehicles for their autonomous driving technologies?” They concluded that “electricity” and “space” are crucial for modifying unmanned vehicles, and that another factor is the “familiarity with the vehicle” from a technical perspective, because if they do not cooperate with car manufacturers for modifications, they need to “hack” into certain control systems.

Controllers

In the early stages of algorithm research, it is recommended to use an Industrial PC (IPC) as the most direct controller solution. This is because IPCs are more stable and reliable than embedded devices, and the community support and accompanying software are also more abundant. Baidu’s open-source Apollo recommends a model of IPC that includes a GPU, specifically the Nuvo-5095GC, as shown in the image below.

When the algorithm research is mature, embedded systems can be used as controllers, such as the zFAS developed by Audi and TTTech, which is now applied in the latest Audi A8 production vehicles.

CAN Cards

The IPC and the vehicle chassis must communicate over a specific protocol: CAN. To obtain information such as the current speed and steering wheel angle, the IPC parses the data that the chassis sends onto the CAN bus. In the other direction, the IPC takes the steering wheel angle and desired speed it has calculated, encodes them through the CAN card into messages the chassis can recognize, and the chassis responds accordingly.
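To make the two directions concrete, here is a minimal sketch of decoding a chassis status frame and encoding a command frame. The message IDs, byte layout, and scale factors are all assumptions made up for illustration; on a real vehicle they come from that vehicle's CAN database, and the frames would be sent and received through the CAN card's driver rather than passed around as plain bytes.

```python
import struct

# Hypothetical message layout, for illustration only. Real IDs, byte order,
# and scale factors are defined by the vehicle's own CAN database.
CHASSIS_STATUS_ID = 0x101  # assumed ID of the chassis status frame
COMMAND_ID = 0x201         # assumed ID of the steering/speed command frame
SPEED_SCALE = 0.01         # assumed: one raw unit = 0.01 m/s
ANGLE_SCALE = 0.1          # assumed: one raw unit = 0.1 degree

# Big-endian: unsigned 16-bit speed, signed 16-bit angle, 4 padding bytes.
FRAME_FORMAT = ">Hh4x"


def decode_chassis_status(can_id, payload):
    """Return speed (m/s) and steering wheel angle (deg), or None if not ours."""
    if can_id != CHASSIS_STATUS_ID or len(payload) != 8:
        return None
    raw_speed, raw_angle = struct.unpack(FRAME_FORMAT, payload)
    return {
        "speed_mps": raw_speed * SPEED_SCALE,
        "steering_deg": raw_angle * ANGLE_SCALE,
    }


def encode_command(target_speed_mps, target_steering_deg):
    """Pack a desired speed and steering wheel angle into an 8-byte frame."""
    raw_speed = round(target_speed_mps / SPEED_SCALE)
    raw_angle = round(target_steering_deg / ANGLE_SCALE)
    return COMMAND_ID, struct.pack(FRAME_FORMAT, raw_speed, raw_angle)
```

Keeping the layout in one format string means the decoder and encoder cannot drift apart, which is the kind of mistake that is hard to spot once the frames are on the bus.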

CAN cards can be directly installed in the IPC and then connected to the CAN bus through external interfaces. The CAN card used by Apollo is the ESD CAN-PCIe/402, as shown in the image below.

Global Positioning System (GPS) + Inertial Measurement Unit (IMU)

When humans drive from point A to point B, they need to know the map from A to B and their current location to decide whether to turn right or go straight at the next intersection. The same applies to unmanned systems: by relying on GPS and an IMU, they know where they are (latitude and longitude) and which direction they are facing (heading). The IMU can also provide richer information such as yaw rate and acceleration, which helps with the positioning and decision-making control of autonomous vehicles.

The GPS model used by Apollo is NovAtel GPS-703-GGG-HV, and the IMU model is NovAtel SPAN-IGM-A1.

Perception Sensors

I’m sure everyone is familiar with onboard sensors. Perception sensors can be divided into many types, including visual sensors, laser sensors, and radar sensors. Visual sensors are cameras, which can be categorized into monocular and binocular (stereo) vision. Well-known visual sensor providers include Israel’s Mobileye, Canada’s PointGrey, and Germany’s Pike.

Laser sensors range from single-line to multi-line units, with up to 64 lines. Each additional line adds about 10,000 RMB to the cost, but detection performance improves accordingly. Notable laser sensor providers include the US’s Velodyne and Quanergy, Germany’s Ibeo, and, in China, Supertone.

Radar sensors are a strong point for Tier 1 automotive suppliers, as they are already widely used in vehicles. Well-known suppliers include Bosch, Delphi, and Denso.

Summary of the Hardware Section

Assembling a system capable of completing a specific function requires extensive experience and a thorough understanding of each sensor’s performance boundaries and the controller’s computational capabilities. Excellent system engineers can control costs to a minimum while meeting functional requirements, increasing the likelihood of mass production and implementation.

Software

Software consists of four layers: perception, fusion, decision-making, and control.

Code must be written between adjacent layers to convert information from one form to the next; a more detailed breakdown follows.

First, I would like to share a slide deck released by a startup.

To implement an intelligent driving system, there are several levels:

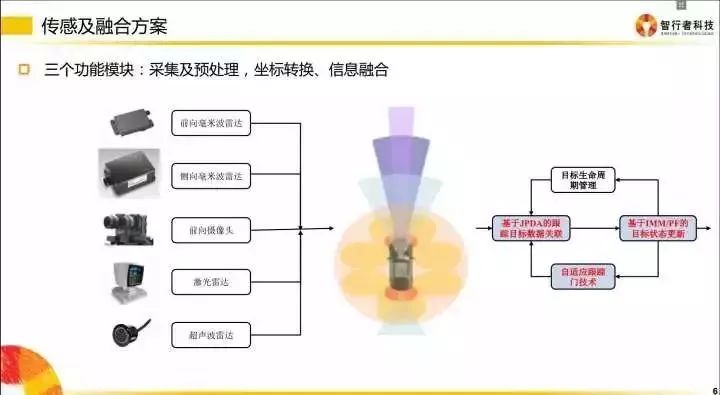

More specifically, the basic levels include: data acquisition and preprocessing, coordinate transformation, and information fusion.

Data Acquisition

When sensors communicate with our PCs or embedded modules, there are different transmission methods. For instance, when acquiring image information from cameras, some use Gigabit Ethernet for communication, while others communicate directly through video cables. Some millimeter-wave radars send information downstream via the CAN bus, so we must write code to parse the CAN information.

Different transmission media require different protocols to parse this information; this is the “driver layer” mentioned earlier. In simple terms, it gathers all the information collected by the sensors and converts it into data that the team can use.

Preprocessing

After obtaining the sensor information, it turns out that not all of it is useful.

The sensor layer sends data downstream frame by frame at a fixed frequency, but the downstream layers cannot use every single frame for decision-making or fusion. Why? Because sensor output is not 100% reliable; if you conclude that there is an obstacle ahead based on a single frame (which may be a false positive), you are being irresponsible toward downstream decision-making. Preprocessing is therefore required to confirm that an obstacle in front of the vehicle is consistently present over time, rather than just flickering in and out.

This is where Kalman filtering, a commonly used algorithm in intelligent driving, comes into play.
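As a rough illustration, the sketch below is the simplest possible Kalman filter: a single scalar state (say, the distance to an obstacle) with a "value stays the same" motion model. The class name and the noise variances are assumptions chosen for the example; a production tracker would normally track position and velocity together and tune the noise terms per sensor.

```python
class ScalarKalmanFilter:
    """One-dimensional Kalman filter with a constant-value state model."""

    def __init__(self, initial_estimate, initial_variance,
                 process_variance, measurement_variance):
        self.x = initial_estimate      # current state estimate
        self.p = initial_variance      # variance (uncertainty) of the estimate
        self.q = process_variance      # assumed: how much the true value drifts per step
        self.r = measurement_variance  # assumed: how noisy each sensor frame is

    def update(self, measurement):
        # Predict: the state is assumed unchanged, so only the uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement according to their variances.
        gain = self.p / (self.p + self.r)
        self.x += gain * (measurement - self.x)
        self.p *= 1.0 - gain
        return self.x


if __name__ == "__main__":
    kf = ScalarKalmanFilter(50.0, 1.0, 0.01, 4.0)
    # The fourth frame is a spurious reading; the estimate moves only slightly.
    for frame in [50.2, 49.8, 50.1, 20.0, 49.9]:
        print(round(kf.update(frame), 2))
```

Because the measurement variance is large relative to the estimate's variance, one outlying frame pulls the estimate only part of the way, which is the "do not trust a single frame" behavior described above.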

Coordinate Transformation

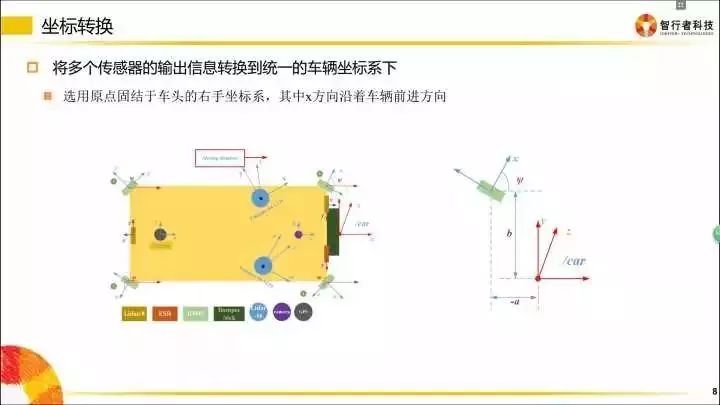

Coordinate transformation is crucial in intelligent driving. Sensors are installed in different locations; for example, the millimeter-wave radar (shown in purple in the image) is located at the front of the vehicle. When an obstacle is 50 meters away from this radar, do we consider it to be 50 meters away from the vehicle?

No! Because when the decision control layer performs vehicle motion planning, it is done in the vehicle’s coordinate system (usually with the rear axle center as point O), so the 50 meters detected by the millimeter-wave radar must be adjusted based on the distance from the sensor to the rear axle.

Ultimately, all sensor information needs to be transformed into the vehicle’s coordinate system so that all sensor data can be unified for planning and decision-making purposes.

Similarly, cameras are generally installed behind the windshield, and the data they produce is expressed in the camera’s own coordinate system, so it too must be transformed into the vehicle’s coordinate system before it is used downstream.
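The adjustment described above is a rigid-body transform. The following is a minimal 2D sketch, assuming a vehicle frame with the origin at the rear axle center, X pointing forward, and Y pointing left; the function name and the 3.6 m mounting offset are hypothetical values for illustration.

```python
import math


def sensor_to_vehicle(point_xy, mount_x, mount_y, mount_yaw_rad):
    """Transform a 2D point from a sensor frame into the vehicle frame.

    mount_x, mount_y: sensor position in the vehicle frame (meters).
    mount_yaw_rad: sensor heading relative to the vehicle X axis (radians).
    """
    px, py = point_xy
    cos_yaw = math.cos(mount_yaw_rad)
    sin_yaw = math.sin(mount_yaw_rad)
    # Rotate into the vehicle's orientation, then shift by the mounting offset.
    vx = mount_x + cos_yaw * px - sin_yaw * py
    vy = mount_y + sin_yaw * px + cos_yaw * py
    return vx, vy


if __name__ == "__main__":
    # Assumed: radar mounted 3.6 m ahead of the rear axle, facing straight ahead.
    print(sensor_to_vehicle((50.0, 0.0), 3.6, 0.0, 0.0))
```

With a forward-facing radar the 50 m reading simply becomes 53.6 m from the rear axle; the rotation term matters for sensors mounted at an angle, such as corner radars.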

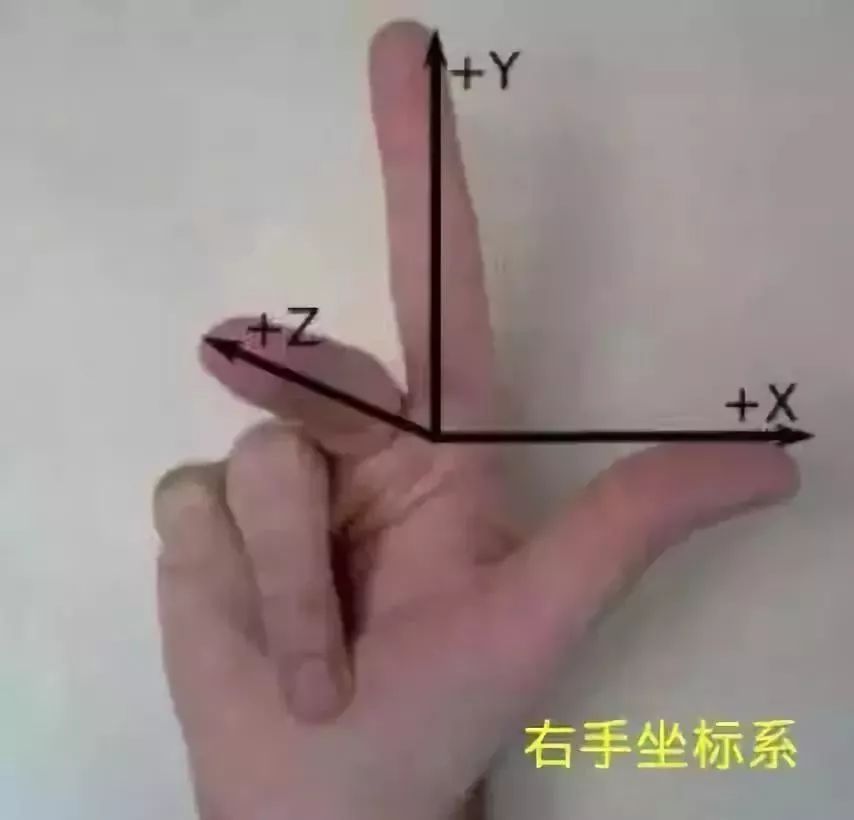

Vehicle coordinate system: Take your right hand and start naming X, Y, Z in the order of thumb → index finger → middle finger. Then shape your hand as shown below:

Place the intersection of the three axes (the base of the index finger) at the center of the vehicle’s rear axle, with the Z axis pointing to the roof and the X axis pointing in the direction of the vehicle’s movement.

The coordinate system directions defined by different teams may not be consistent; as long as there is internal agreement within the development team, that is sufficient.

Information Fusion

Information fusion is the process of combining information with the same attributes into a single output. For instance, if the camera detects an obstacle directly in front of the vehicle, and the millimeter-wave radar and the laser sensor also detect an obstacle ahead, but there is actually only one obstacle, we need to fuse the information from the three sensors and tell the downstream layers that there is one vehicle ahead, not three.
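One very simple way to express this idea is distance gating: detections that fall within a small radius of each other are treated as the same object and averaged. The sketch below assumes all detections are already in the vehicle coordinate system; the function name and the 2 m gate are illustrative assumptions, and real systems typically use more careful association and weight each sensor by its accuracy.

```python
import math


def fuse_detections(detections, gate_m=2.0):
    """Merge detections that lie within gate_m meters of each other.

    detections: list of (sensor_name, x, y) tuples in the vehicle frame.
    Returns a list of dicts with the averaged position and contributing sensors.
    """
    fused = []
    for sensor, x, y in detections:
        for obj in fused:
            if math.hypot(x - obj["x"], y - obj["y"]) <= gate_m:
                # Same object: update the running average of its position.
                n = len(obj["sources"])
                obj["x"] = (obj["x"] * n + x) / (n + 1)
                obj["y"] = (obj["y"] * n + y) / (n + 1)
                obj["sources"].append(sensor)
                break
        else:
            # No existing object is close enough, so start a new one.
            fused.append({"x": x, "y": y, "sources": [sensor]})
    return fused


if __name__ == "__main__":
    frame = [("camera", 30.2, 0.1), ("radar", 29.8, -0.1), ("lidar", 30.0, 0.0)]
    print(len(fuse_detections(frame)))
```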

Decision Planning

This level primarily involves designing how to properly plan after obtaining fused data. Planning includes longitudinal control and lateral control: longitudinal control refers to speed control, determining when to accelerate or brake; lateral control refers to behavioral control, determining when to change lanes or overtake.
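To give a feel for the two kinds of decisions, here is a deliberately naive sketch: a clamped proportional rule for the longitudinal command and a threshold rule for the lane-change decision. Every function name, gain, limit, and threshold here is an assumption invented for the example; it is not how any particular team plans, and real planners consider far more context.

```python
def longitudinal_command(current_speed, target_speed,
                         gain=0.5, max_accel=2.0, max_decel=-4.0):
    """Return a requested acceleration in m/s^2 (negative means braking)."""
    accel = gain * (target_speed - current_speed)
    # Clamp to assumed comfort and safety limits.
    return max(max_decel, min(max_accel, accel))


def should_change_lane(lead_speed, desired_speed, adjacent_lane_free,
                       min_speed_gain=2.0):
    """Change lanes only if the lead vehicle is clearly slower and the lane is free."""
    return adjacent_lane_free and (desired_speed - lead_speed) >= min_speed_gain


if __name__ == "__main__":
    print(longitudinal_command(10.0, 20.0))
    print(should_change_lane(15.0, 20.0, True))
```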

I am not very familiar with this area and do not dare to make rash comments.

What does software look like?

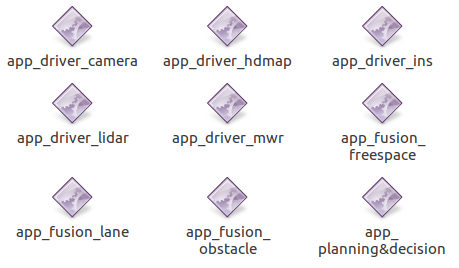

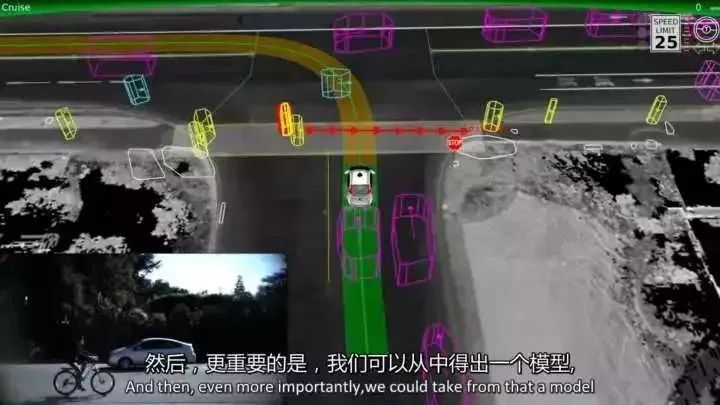

Some software in the autonomous driving system looks similar to the one below.

The software name reflects its actual function:

However, in practice, developers also write other software for debugging purposes, such as tools for recording and replaying data.
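A record-and-replay tool can be very small. The sketch below stores one JSON object per line with a timestamp and a channel name, then replays the messages with the original spacing between them. The file layout and function names are assumptions for illustration, not the format of any specific tool.

```python
import json


def record(stream, timestamp, channel, data):
    """Append one timestamped message to a text stream, one JSON object per line."""
    stream.write(json.dumps({"t": timestamp, "channel": channel, "data": data}) + "\n")


def replay(stream, speed=1.0):
    """Yield (delay_s, channel, data) for each recorded message, in order.

    delay_s is how long to wait before delivering the message so that the
    original timing is preserved (divided by the playback speed).
    """
    previous_t = None
    for line in stream:
        message = json.loads(line)
        delay = 0.0 if previous_t is None else (message["t"] - previous_t) / speed
        previous_t = message["t"]
        yield delay, message["channel"], message["data"]
```

A caller would typically `time.sleep(delay)` before handing each message to the same code that processes live sensor data, so an algorithm can be debugged at a desk against data captured on the road.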

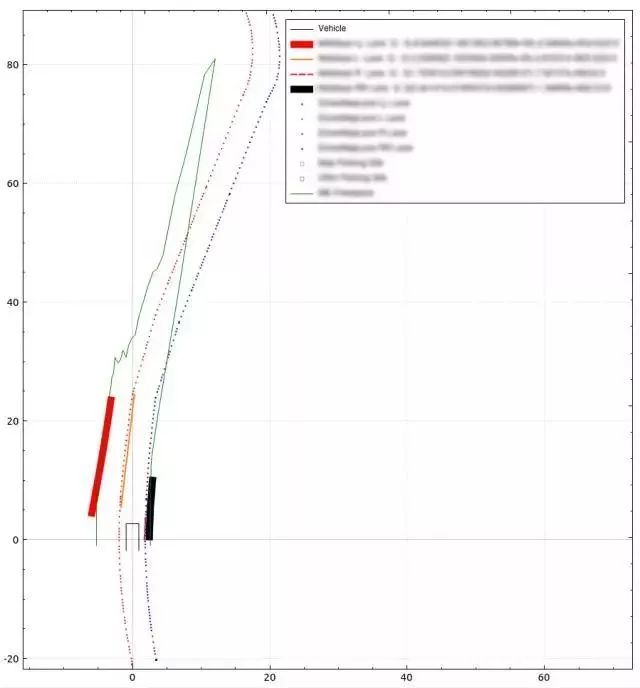

There are also visualization programs for displaying sensor information, similar to the effect shown in the image below.

Having mastered the software concepts, let’s look at what preparations you need to make.

Preparation

Operating System Installation

Since we are developing software, the first step is to have an operating system. Common operating systems include Windows/Linux/Mac… (I haven’t used some operating systems), but considering community support and development efficiency, it is recommended to use Linux as the operating system for autonomous driving research.

Most teams working on autonomous driving use Linux, so following the trend saves a lot of trouble.

Linux comes in many versions, with the Ubuntu series being the most widely used and popular. Although Ubuntu has been updated to version 17.04, for stability reasons, it is recommended to install version 14.04.

It is recommended to install Linux on a separate SSD or use a virtual machine; dual-booting is not recommended (as it is not very stable). Here is the installation package for Linux Ubuntu 14.04 + virtual machine installation method. (Link: http://pan.baidu.com/s/1jIJNIPg Password: 147y.)

Basic Linux Commands

Command-line operation is the core of Linux: it is not only very helpful for developers but also a powerful tool for installation. For example, the command “apt-get install” quickly installs many software packages without having to search online for compatible installers as on Windows. Linux has a large and varied set of commands, so learning them takes study and practice.

Development Environment Installation

The development environment will involve many libraries that are actually used. Different programmers might use different libraries to solve the same problem. Below, I will introduce the libraries I frequently use in my work and studies to give developers a starting point.

Required installation packages for setting up the environment:

Appendix: Development Environment Introduction

Integrated Development Environment (IDE)

I installed an open-source IDE called Qt Creator, which ships with the Qt framework and currently holds the same status in Linux as Visual Studio does in Windows. Unless you are an advanced user who does not use an IDE, most teams developing in Linux will choose it.

The main function of Qt is to create interactive interfaces, such as displaying various information collected by sensors on the interface. Interactive interfaces significantly speed up the process of debugging programs and calibrating parameters for developers.

Tips:

OpenCV

OpenCV is a powerful library that encapsulates a large number of functions applicable to autonomous driving research, including various filtering algorithms, feature point extraction, matrix operations, projection coordinate transformations, machine learning algorithms, etc. Its influence in the field of computer vision is particularly significant, making it very convenient to use for camera calibration, object detection, recognition, and tracking.

Using the OpenCV library, it is entirely possible to achieve the effect shown in this image.

Tips:

Here is an electronic version that explains everything in detail. Print one chapter at a time for reading, and progress gradually. (Link: http://pan.baidu.com/s/1dE5eom9 Password: n2dn)

libQGLViewer

libQGLViewer is a well-known library that adapts OpenGL to Qt. Its programming interfaces and methods are very similar to OpenGL. The environmental perception information displayed on promotional materials from various autonomous driving companies can be completely created using QGL.

Tips:

Learning libQGLViewer does not require purchasing any textbooks. The official website and the examples in the compressed package are the best teachers. Follow the tutorials on the official website and implement each example to get started.

Official website link: libQGLViewer Home Page

Boost

The Boost library is often called a “quasi-standard” C++ library. It contains a large number of ready-made “wheels” that C++ developers can call directly, avoiding the need to reinvent them.

Tips:

Boost is built on standard C++, and its construction employs sophisticated techniques. Do not rush to study it thoroughly; find a related book (electronic or paper) and read through the table of contents to understand what it provides. When you need a specific feature, that is the time to research it in depth.

QCustomPlot

In addition to the aforementioned libQGLViewer, we can also display onboard sensor information as plots. Since Qt itself only provides basic drawing tools such as lines and circles, using QCustomPlot is much more convenient. Simply call the API and pass in the data you want to display, and you can create impressive graphics like the ones below. It also supports easy dragging and zooming.

Below are some sensor information displays I built with QCustomPlot in actual development.

Tips:

The official website provides the source code for this library. You just need to import the .cpp and .h files into your project. Following the tutorials provided on the official website will help you get started quickly. By referring to the examples in the example folder, you can quickly visualize your data.

LCM (Lightweight Communications and Marshalling)

In team software development, communication issues between programs (multi-process) are inevitable. There are many ways to achieve multi-process communication, each with its own advantages and disadvantages, and the choice is subjective. In December 2014, MIT released the signal transmission mechanism LCM used in the DARPA Robotics Challenge in the US. Source: MIT releases LCM driver for MultiSense SL.

LCM supports multiple languages such as Java and C++ and is designed for real-time systems that need to send messages and marshal data with high bandwidth and low latency. It provides a publish/subscribe message model and tools that automatically generate marshalling and unmarshalling code for the various programming languages. This model is very similar to the communication method between nodes in ROS.
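The publish/subscribe model itself is easy to picture with a toy example. The sketch below is not LCM's API: it is a hypothetical in-process message bus with hand-written `struct` packing, meant only to show the pattern of channels, handlers, and encoded payloads. LCM performs the same roles across processes and generates the encode/decode code from a message type definition.

```python
import struct
from collections import defaultdict

# Hypothetical message layout: unsigned 32-bit id, then x and y as doubles.
OBSTACLE_FORMAT = ">Idd"


def encode_obstacle(obstacle_id, x, y):
    """Marshal an obstacle into bytes for transmission."""
    return struct.pack(OBSTACLE_FORMAT, obstacle_id, x, y)


def decode_obstacle(payload):
    """Unmarshal bytes back into (obstacle_id, x, y)."""
    return struct.unpack(OBSTACLE_FORMAT, payload)


class MessageBus:
    """Toy in-process stand-in for a publish/subscribe transport."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, channel, handler):
        self._handlers[channel].append(handler)

    def publish(self, channel, payload):
        # Deliver the raw bytes to every handler registered on this channel.
        for handler in self._handlers.get(channel, []):
            handler(channel, payload)


if __name__ == "__main__":
    bus = MessageBus()
    bus.subscribe("OBSTACLES", lambda ch, data: print(ch, decode_obstacle(data)))
    bus.publish("OBSTACLES", encode_obstacle(7, 30.0, -1.5))
```

The publisher never needs to know who is listening, which is what lets a perception process, a logger, and a visualization tool all consume the same channel independently.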

Tips:

The demo for communication between two processes using LCM is available on the official website, and you can quickly establish your own LCM communication mechanism by following the tutorial.

Official website: LCM Project

Git & GitHub

Git is an essential version control tool for team development. Anyone writing a paper ends up with a new version every day; without notes about what changed in each version, it is easy to forget over time. The same goes for code.

Using Git greatly improves the efficiency of collaborative development, and it standardizes version management, which makes code history easy to trace.

GitHub is well known in the software development field; you can search it directly for code.

Tips:

Currently, many books introducing Git are quite challenging to read, and they delve too deeply into trivial details, making it difficult to get started quickly.

Therefore, I highly recommend the introductory tutorial on Git: Liao Xuefeng’s Git tutorial, which is easy to understand and comes with illustrations and videos, making it a great resource.

With a solid understanding of these concepts, you will become an experienced player in the field of autonomous driving.

WeChat ID:imecas_wx