
Abstract

Embodied Intelligence is a cutting-edge field at the intersection of artificial intelligence and robotics. It holds that agents learn and evolve autonomously through dynamic interaction between their bodies and the environment, and its core focus is the deep integration of perception, action, and cognition.

Source: PaperAgent

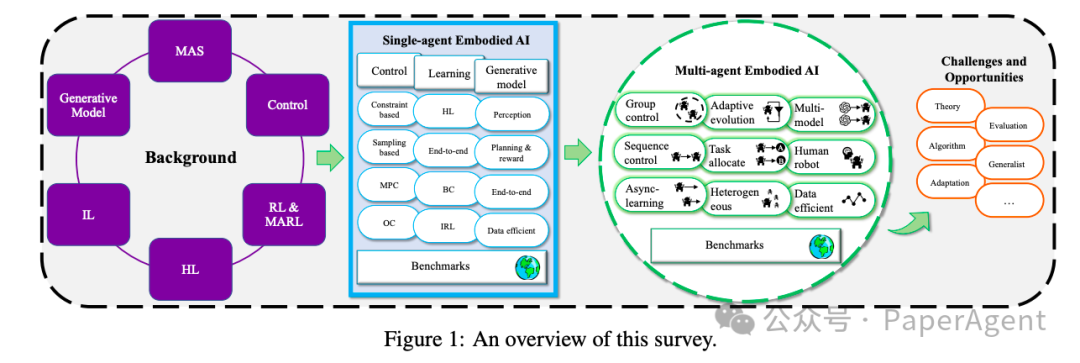

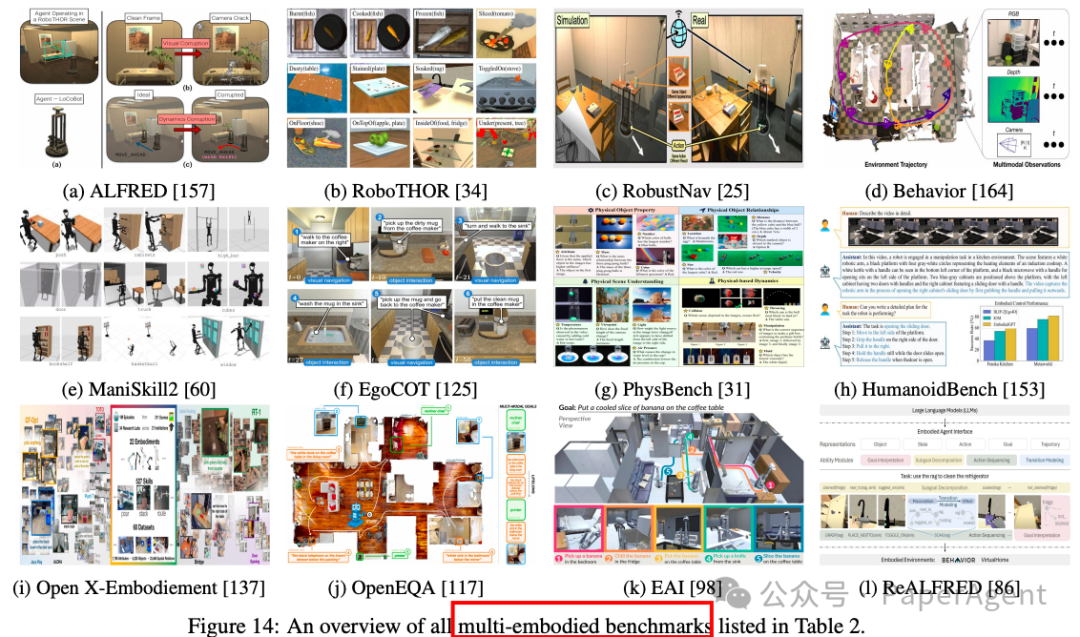

Embodied AI has become a frontier area in both academia and industry, with applications spanning robotics, healthcare, transportation, and manufacturing. However, most research has focused on single-agent systems in static, closed environments. It often relies on simplified models that fail to capture the complexity of multi-agent embodied AI in dynamic, open environments. In a survey, researchers from Beijing University of Technology, Nanjing University, and Xi’an Jiaotong University introduce the fundamental concepts of embodied AI, including Multi-Agent Systems (MAS), Reinforcement Learning (RL), and related methods. They also review research directions, including classical control and planning methods, learning-based methods, and generative models. The focus is on multi-agent embodied AI control and planning, multi-agent learning, and the application of generative models to multi-agent interaction.


1. Single-Agent Embodied AI

This section details the research status, methods, and progress of single-agent embodied AI.

1.1 Classical Control and Planning Methods

Single-agent embodied AI primarily relies on the following methods for classical control and planning:

-

Constraint-based Methods: By encoding task objectives and environmental conditions as logical constraints, planning problems are transformed into symbolic representations, and constraint-solving techniques (such as symbolic search) are used to find feasible solutions. This method emphasizes the feasibility of solutions rather than optimality and has high complexity when dealing with high-dimensional perceptual inputs.

-

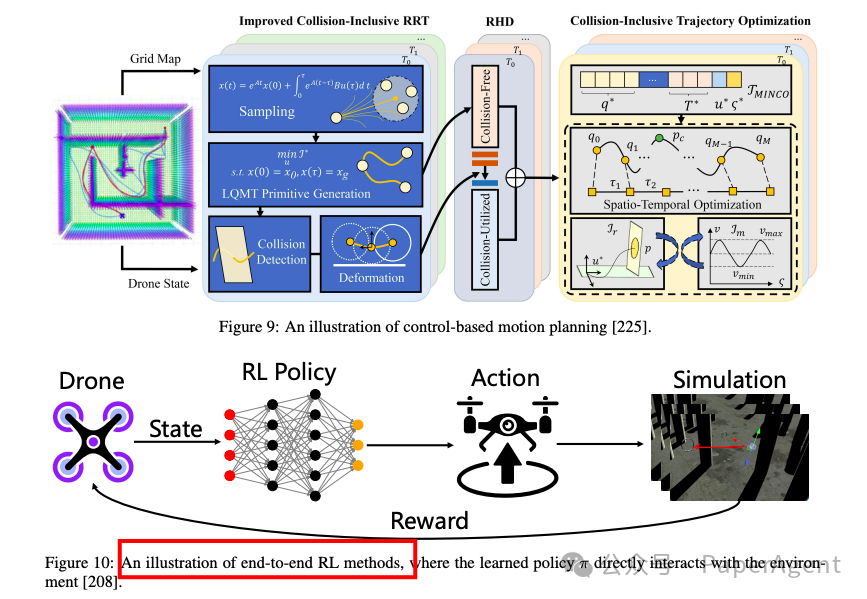

Sampling-based Methods: By using random sampling techniques (such as Rapidly-exploring Random Trees, RRT), trees or graph structures are incrementally constructed to explore feasible motion trajectories. This method is suitable for high-dimensional spaces and can effectively handle complex environments.

-

Optimization-based Methods: Task objectives and performance metrics are modeled as optimization objective functions, while feasibility conditions are represented as constraints, utilizing optimization techniques to search for optimal solutions in the constrained solution space. For example, polynomial trajectory planning, Model Predictive Control (MPC), and Optimal Control (OC) methods excel in scenarios requiring time-optimal operations.
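To make the sampling-based idea concrete, here is a minimal 2-D RRT sketch. It assumes a square workspace with circular obstacles. The function name, the 10% goal bias, the step size, and the sampled (approximate) edge collision check are illustrative choices and are not taken from the survey.

```python
import math
import random

def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5, goal_tol=0.5,
        goal_bias=0.1, max_iters=5000, seed=0):
    """Minimal 2-D RRT with circular obstacles given as (cx, cy, radius).

    Returns a list of (x, y) waypoints from `start` to a point within
    `goal_tol` of `goal`, or None if no path is found within `max_iters`.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = [None]  # parent[i] is the index of node i's parent in the tree

    def point_free(p):
        return all(math.dist(p, (cx, cy)) > r for cx, cy, r in obstacles)

    def edge_free(a, b, checks=10):
        # Approximate edge check: test evenly spaced points along the segment.
        return all(
            point_free((a[0] + k / checks * (b[0] - a[0]),
                        a[1] + k / checks * (b[1] - a[1])))
            for k in range(1, checks + 1)
        )

    for _ in range(max_iters):
        # 1. Sample a random configuration, occasionally the goal itself.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        # 2. Find the nearest node already in the tree.
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # 3. Steer: move at most `step` from the nearest node toward the sample.
        t = min(1.0, step / d)
        new = (near[0] + t * (sample[0] - near[0]),
               near[1] + t * (sample[1] - near[1]))
        if not edge_free(near, new):
            continue
        nodes.append(new)
        parent.append(i_near)
        # 4. Once close enough to the goal, walk parent links back to the start.
        if math.dist(new, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

For example, `rrt((1.0, 1.0), (9.0, 9.0), [(5.0, 5.0, 1.5)])` grows a tree around the central obstacle. The tree is built incrementally from random samples, and no global optimization is performed. This is why the basic algorithm returns a feasible path rather than the shortest one.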

1.2 Learning-based Methods

As the dynamics of environments and the complexity of tasks increase, learning-based methods have gradually become mainstream:

-

End-to-end Reinforcement Learning (RL): By directly learning policies from the environment, mapping perceptual information to action decisions. This method can directly optimize policies but faces issues of low sample efficiency and long training times.

-

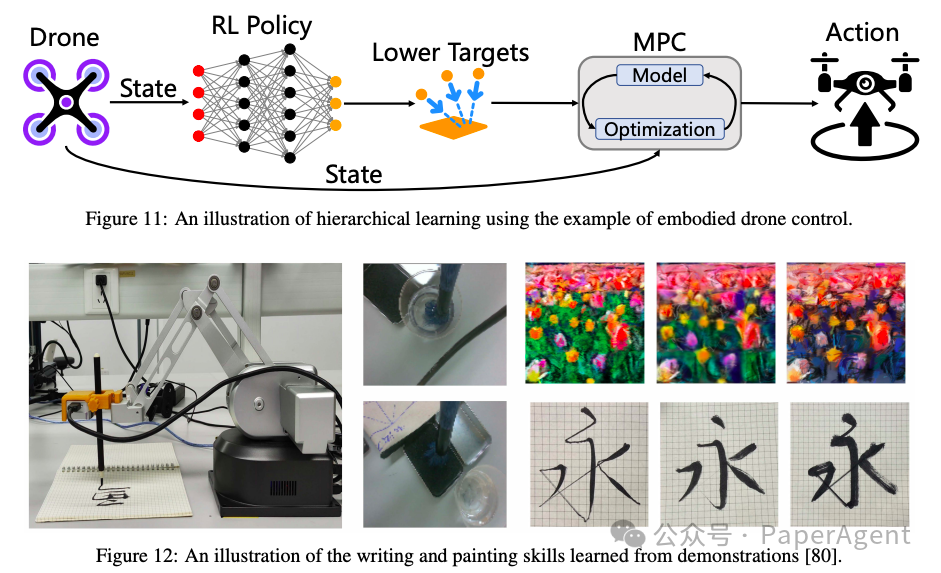

Hierarchical Learning: Decomposing complex tasks into simpler sub-tasks, improving learning efficiency and scalability through hierarchical strategies. For example, using reinforcement learning for high-level planning, combined with classical control methods like Model Predictive Control (MPC) for executing low-level actions.

-

Imitation Learning (IL): Learning task-solving capabilities by mimicking expert behavior, avoiding the complexity of manually designing reward functions. Common methods include Behavior Cloning (BC), Inverse Reinforcement Learning (IRL), and Generative Adversarial Imitation Learning (GAIL). These methods each have advantages in sample efficiency and generalization capabilities.
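As a toy illustration of learning a policy purely from interaction and reward, the sketch below runs tabular Q-learning on a hypothetical 1-D corridor. It is far simpler than end-to-end RL from raw perception: the states are integers rather than images, and the environment, hyperparameters, and function names are all assumptions made for illustration.

```python
import random

def q_learning_corridor(n_states=6, episodes=500, alpha=0.5, gamma=0.9,
                        epsilon=0.2, max_steps=100, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    States are 0..n_states-1; each episode starts at state 0 and ends with
    reward 1 when the agent reaches the last state. Actions: 0 = left, 1 = right.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _episode in range(episodes):
        s = 0
        for _step in range(max_steps):
            # Epsilon-greedy action selection with random tie-breaking.
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else min(goal, s + 1)
            reward = 1.0 if s_next == goal else 0.0
            # Terminal transitions do not bootstrap from the next state.
            target = reward if s_next == goal else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if s == goal:
                break
    return q

def greedy_policy(q):
    """Greedy action per state (the terminal state's entry is irrelevant)."""
    return [0 if left > right else 1 for left, right in q]
```

Even in this six-state world, the agent needs many episodes of trial and error before the reward propagates back to the start state. This is a small-scale view of the sample-efficiency problem mentioned above.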

1.3 Generative Model-based Methods

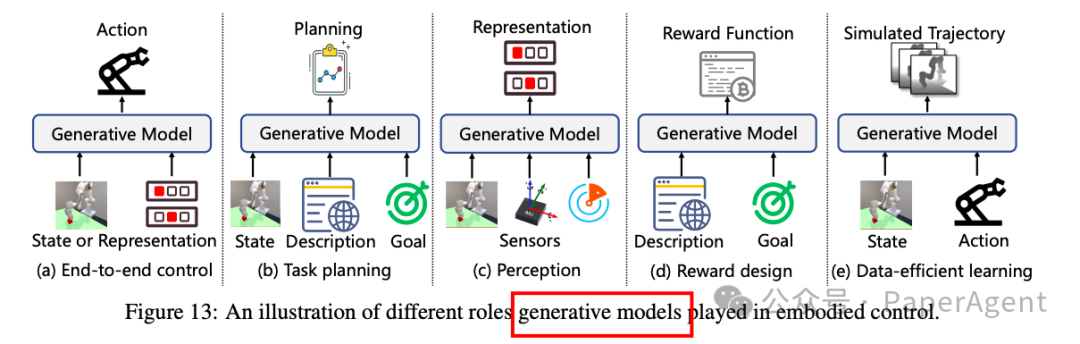

Generative models provide stronger representation capabilities and flexibility for embodied AI by capturing the underlying distribution of data:

- End-to-end Control: Generative models (such as large vision-language models, VLMs) serve directly as decision controllers, bringing prior knowledge and pre-trained capabilities into embodied systems. For example, inputs are formatted for a pre-trained model, which then infers actions directly.

- Task Planning: The reasoning capabilities of generative models are used to decompose tasks into executable sequences of steps. For example, given a high-level task such as “pouring a glass of water and placing it on the table”, the generative model can break it down into a series of specific actions.

- Perception: Generative models (such as Transformer architectures) can fuse multimodal perceptual data into more effective environmental representations.

- Reward Design: Generative models produce reward signals or reward functions, which simplifies reward design in complex environments.

- Data-efficient Learning: Generative models synthesize training data, improving sample efficiency and reducing the cost of interaction with the physical environment.
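The task-planning pattern can be sketched as follows: prompt a model with the task and the robot's available skills, then parse and validate the steps it returns. No specific model API is assumed. `generate` is any callable that maps a prompt string to text. The skill names, the prompt wording, and the `fake_model` stub are hypothetical.

```python
import re
from typing import Callable

# Hypothetical skill library: the primitive actions the robot can execute.
SKILLS = {"navigate_to", "pick", "place", "pour", "open", "close"}

# Matches lines such as "3. pour(water, glass)".
STEP_RE = re.compile(r"^\s*\d+[.)]\s*([a-z_]+)\((.*?)\)\s*$")

def plan_task(task: str, generate: Callable[[str], str]) -> list[tuple[str, list[str]]]:
    """Ask a generative model to decompose `task` into skill calls, then validate them."""
    prompt = (
        "Decompose the task into numbered steps, one skill call per line.\n"
        f"Allowed skills: {', '.join(sorted(SKILLS))}\n"
        f"Task: {task}\n"
    )
    steps = []
    for line in generate(prompt).splitlines():
        m = STEP_RE.match(line)
        if not m:
            continue  # skip any commentary the model adds around the plan
        skill = m.group(1)
        args = [a.strip() for a in m.group(2).split(",") if a.strip()]
        if skill not in SKILLS:
            raise ValueError(f"model proposed unknown skill {skill!r}")
        steps.append((skill, args))
    return steps

def fake_model(prompt: str) -> str:
    # Stand-in for a real generative model, returning a fixed plan.
    return ("Sure, here is a plan:\n"
            "1. navigate_to(counter)\n"
            "2. pick(glass)\n"
            "3. pour(water, glass)\n"
            "4. navigate_to(table)\n"
            "5. place(glass, table)\n")
```

Validating the output against a fixed skill library is one simple way to keep free-form model output executable. The model handles the decomposition, while the robot only ever receives actions it knows how to perform.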

2. Multi-Agent Embodied AI

In the real world, embodied AI must cope with complex scenarios in which agents not only interact with their surrounding environment but also collaborate with other agents. This requires sophisticated adaptation mechanisms, real-time learning, and collaborative problem-solving capabilities.


This section examines the research progress, challenges, and future directions of multi-agent embodied AI.

2.1 Multi-Agent Control and Planning

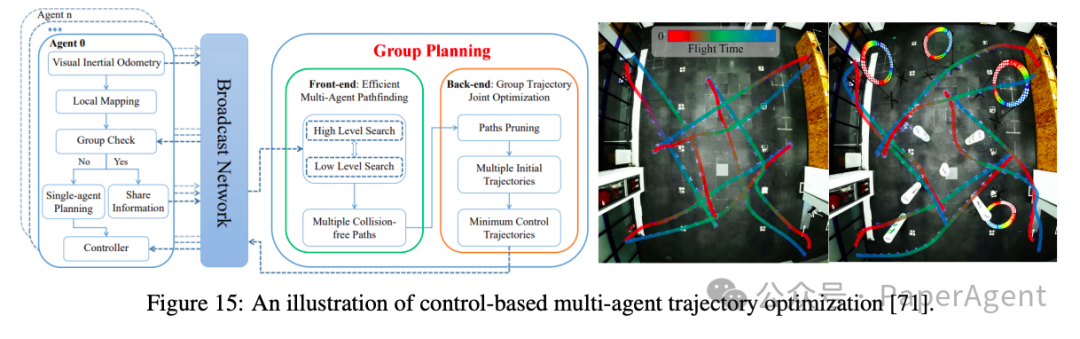

The control and planning methods in Multi-Agent Systems (MAS) are fundamental for achieving high precision and real-time decision-making:

- Centralized Control: Early methods modeled the multi-agent system as a single agent and performed centralized control and planning. However, this approach scales poorly to large systems.

- Distributed Control: To address the scalability issues of centralized control, distributed methods let each agent operate independently. However, resolving conflicts between agents then becomes difficult.

- Grouped Multi-Agent Control Framework (EMAPF): Agents are dynamically clustered into smaller teams. Control is centralized within each team and independent across teams. This approach performs well for large-scale aerial robot teams.
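The grouping idea can be sketched as two steps: cluster agents, then run one centralized planner per cluster. The survey summary does not specify EMAPF's clustering criterion, so this sketch assumes a simple proximity rule. Agents within `radius` of each other (transitively) share a group, found with union-find. `centralized_planner` is a hypothetical callback.

```python
import math

def group_agents(positions, radius):
    """Cluster agents whose pairwise distance is <= radius (transitively).

    positions: dict agent_id -> (x, y). Returns a sorted list of sorted groups.
    """
    ids = sorted(positions)
    parent = {a: a for a in ids}

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving keeps trees shallow
            a = parent[a]
        return a

    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if math.dist(positions[a], positions[b]) <= radius:
                parent[find(a)] = find(b)  # merge the two clusters

    groups = {}
    for a in ids:
        groups.setdefault(find(a), []).append(a)
    return sorted(groups.values())

def plan_in_groups(positions, radius, centralized_planner):
    """Plan each group centrally; different groups are planned independently."""
    return {
        tuple(g): centralized_planner({a: positions[a] for a in g})
        for g in group_agents(positions, radius)
    }
```

The design mirrors the trade-off described above. Inside a group, a centralized planner can resolve conflicts jointly. Across groups, planning stays independent, so the cost grows with group size rather than with the total number of agents.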

2.2 Multi-Agent Learning

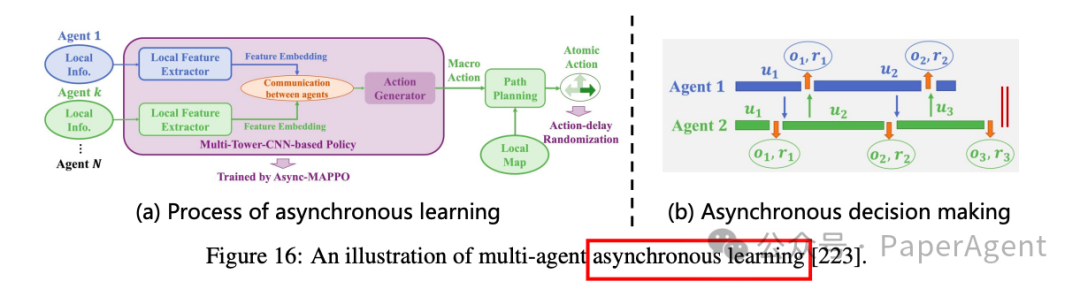

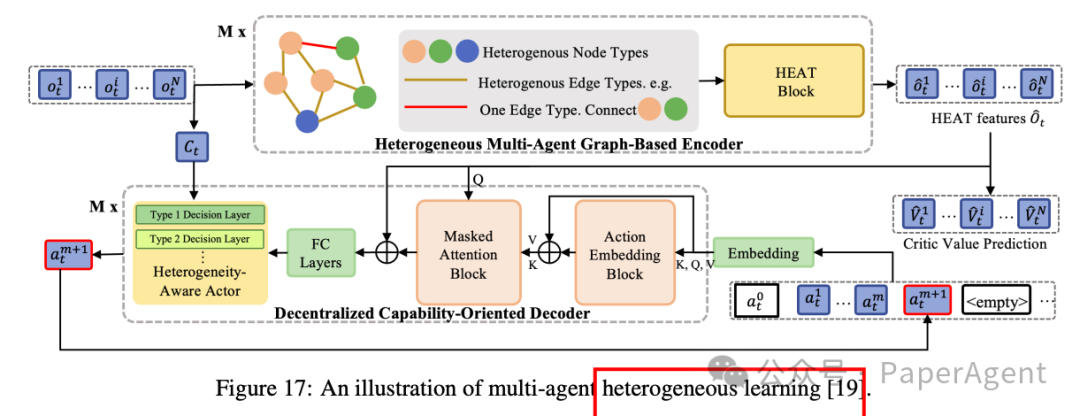

Multi-agent learning needs to address challenges in asynchronous decision-making, heterogeneous agents, and learning in open environments:

-

Asynchronous Collaboration: Due to communication delays and hardware heterogeneity, interactions and feedback between agents are often asynchronous. The article introduces the ACE algorithm, which addresses this issue by introducing macro-actions as centralized goals for the entire MAS, allowing agents to make multiple asynchronous decisions based on this goal.

-

Heterogeneous Collaboration: Agents differ in perceptual capabilities, action spaces, and task objectives. Methods like HetGPPO and COMAT effectively handle heterogeneity by designing independent observation and policy networks for different types of agents and facilitating information exchange through graph neural networks.

-

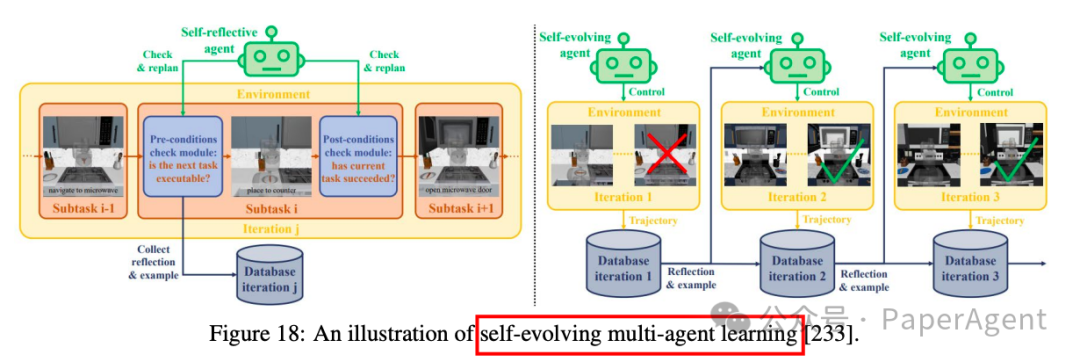

Adaptive Learning in Open Environments: Task objectives, environmental factors, and collaboration patterns in open environments are dynamically changing. Researchers have proposed methods such as robust training and continuous coordination to address these challenges.
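The sketch below shows only the decision timing that macro-actions create: each agent commits to a macro-action that lasts several time steps and chooses again only when it finishes, so agents decide at different moments. It does not reproduce ACE. The macro-action names, durations, and the cycling placeholder policy are hypothetical.

```python
import heapq

def choose_macro_action(durations, history):
    # Hypothetical placeholder policy: cycle through macro-actions alphabetically.
    # A learned policy (e.g. conditioned on a shared team goal) would go here.
    options = sorted(durations)
    return options[len(history) % len(options)]

def run_async(agents, horizon):
    """Event-driven simulation of asynchronous macro-action decisions.

    agents: dict name -> dict of macro-action -> duration in time steps.
    Returns dict name -> list of (decision_time, macro_action).
    """
    logs = {name: [] for name in agents}
    events = [(0, name) for name in sorted(agents)]  # (next decision time, agent)
    heapq.heapify(events)
    while events:
        t, name = heapq.heappop(events)
        if t >= horizon:
            continue  # the next decision falls outside the horizon; drop it
        action = choose_macro_action(agents[name], logs[name])
        logs[name].append((t, action))
        # The agent is busy until its macro-action completes.
        heapq.heappush(events, (t + agents[name][action], name))
    return logs
```

For instance, with a drone whose macro-actions take 2 or 3 steps and a ground robot whose single macro-action takes 5, the drone decides at times 0, 3, 5, and 8 within a 10-step horizon. The robot decides only at 0 and 5. A synchronous learner that assumes every agent acts at every step cannot represent this pattern directly.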

2.3 Generative Model-based Multi-Agent Interaction

Generative models play a significant role in multi-agent embodied AI, introducing prior knowledge, facilitating communication between agents, and improving data efficiency:

-

Multi-Agent Task Allocation: Utilizing pre-trained generative models (such as large language models) for task decomposition and allocation, significantly reducing the exploration space for each agent. For example, SMART-LLM achieves efficient task allocation by decomposing tasks and grouping based on agent capabilities.

-

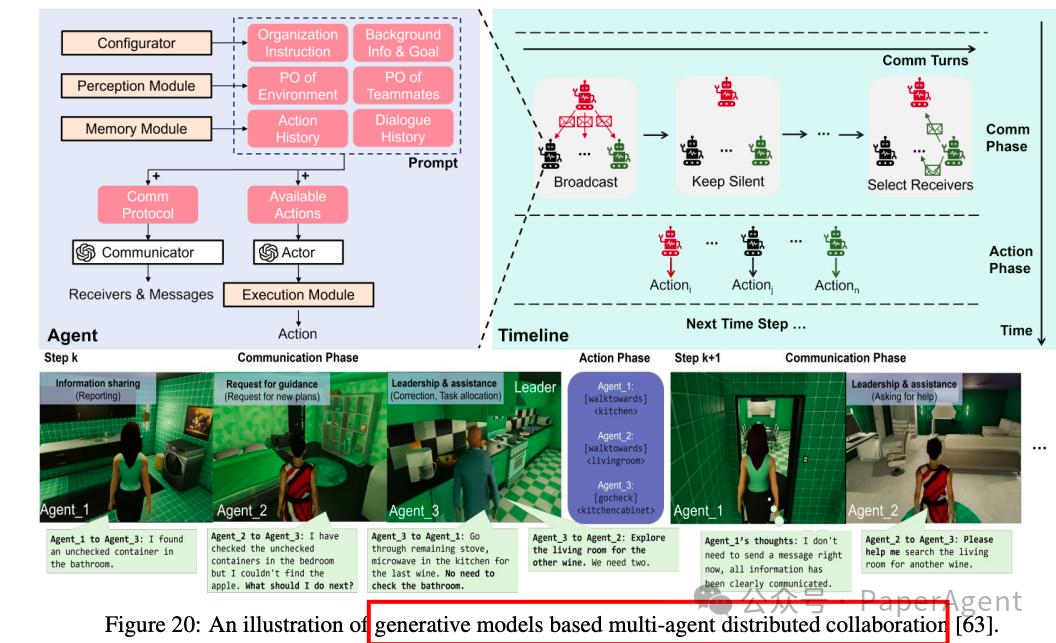

Distributed Decision-Making: Generative models can be used for distributed decision-making, where each agent independently makes decisions and evaluates strategies. By introducing a centralized generative model to assess the decisions of distributed generative models, decision-making capabilities are further enhanced.

-

Human-Machine Collaboration: Generative models can significantly improve human-machine interaction and collaboration through language understanding and generation capabilities. For example, agents can actively query humans for missing information or infer human intentions, enabling more effective collaboration with humans.

-

Data-efficient Learning: In multi-agent settings, the issue of sample efficiency is even more pronounced. By using generative models for data augmentation, data efficiency can be improved, supporting more effective learning.
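Once a task has been decomposed, the allocation step has to match subtasks to agent capabilities. In SMART-LLM this step is driven by a language model. The sketch below swaps in a deterministic greedy rule so the idea can be run without a model. Each subtask goes to the least-loaded agent whose skills cover its requirements. The skill names, subtasks, and tie-breaking rule are illustrative assumptions.

```python
def allocate(subtasks, agents):
    """Greedy capability-based allocation (a rule-based stand-in for LLM allocation).

    subtasks: list of (name, set of required skills), in execution order.
    agents: dict agent_name -> set of skills.
    Returns dict agent_name -> list of assigned subtask names.
    """
    plan = {name: [] for name in agents}
    for task, required in subtasks:
        capable = [a for a, skills in agents.items() if required <= skills]
        if not capable:
            raise ValueError(f"no agent can perform {task!r}")
        # Least-loaded capable agent; ties broken by name for determinism.
        chosen = min(capable, key=lambda a: (len(plan[a]), a))
        plan[chosen].append(task)
    return plan
```

Filtering by capability first is what shrinks each agent's search space. An agent never considers subtasks it cannot perform, and the load-balancing rule only chooses among the agents that remain.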

Multi-agent Embodied AI: Advances and Future Directions
https://arxiv.org/pdf/2505.05108
