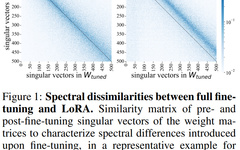

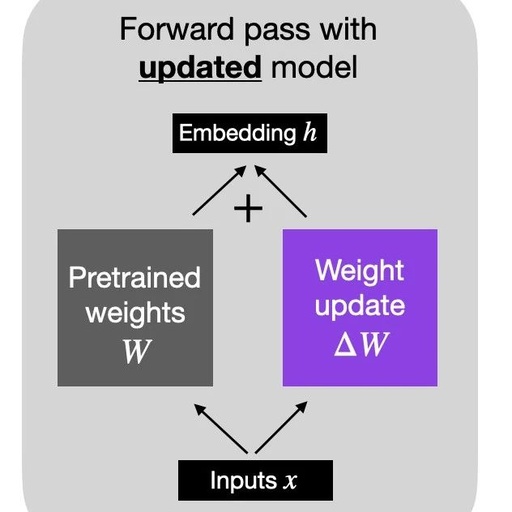

Differences Between LoRA and Full Fine-Tuning Explained in MIT Paper

The MLNLP community is a machine learning and natural language processing community well known both at home and abroad. Its members include NLP graduate students, university teachers, and corporate researchers. The community's vision is to promote communication and progress between academia and industry in natural language processing and machine learning, especially for beginners. Reprinted from | …