Paper Title: CAMEL: Communicative Agents for “Mind” Exploration of Large Scale Language Model Society

Paper Link: https://ghli.org/camel.pdf

Code Link: https://github.com/camel-ai/camel

Project Homepage: https://www.camel-ai.org/

- “CAMEL” (Camel: Large Model Mental Interaction Framework) – Released on 2023.3.21
- “AutoGPT” – Released on 2023.3.30
- “BabyGPT” – Released on 2023.4.3
- “Westworld” simulation – Released on 2023.4.7

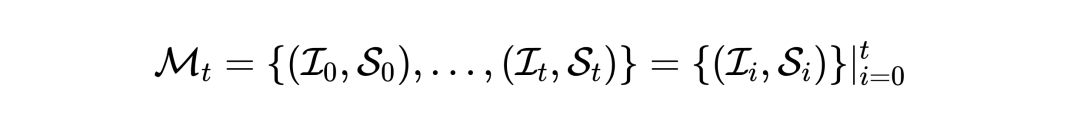

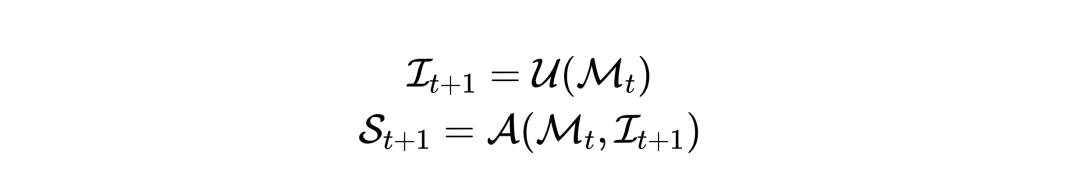

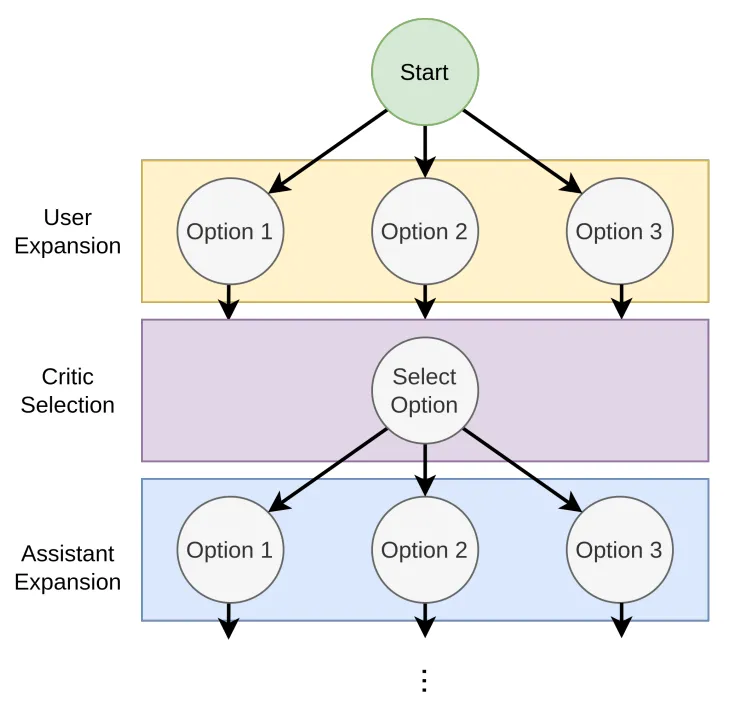

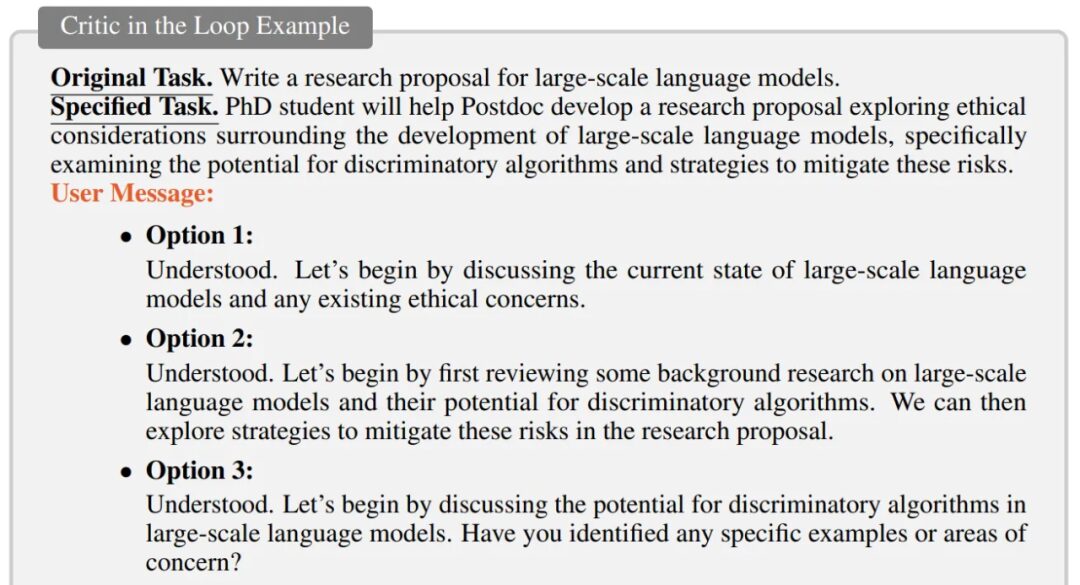

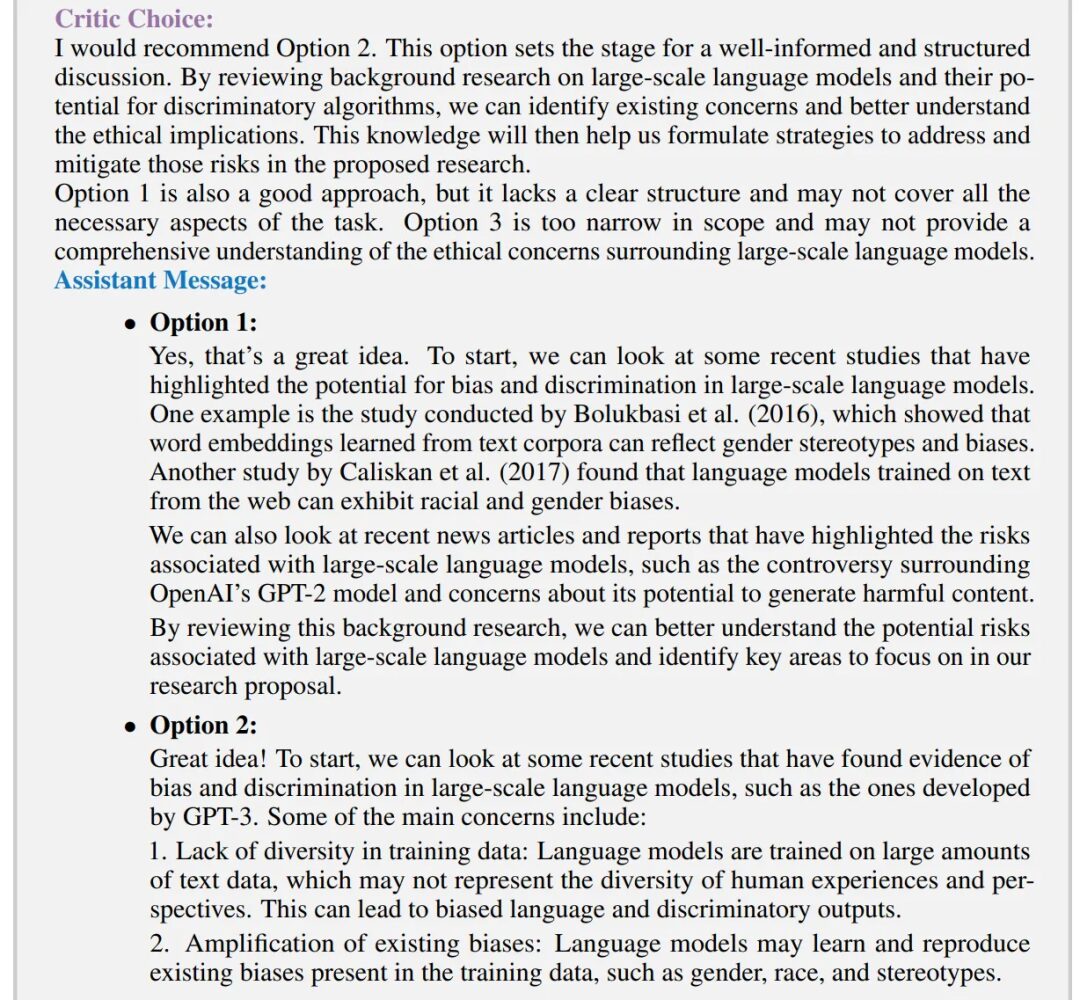

1. CAMEL Framework

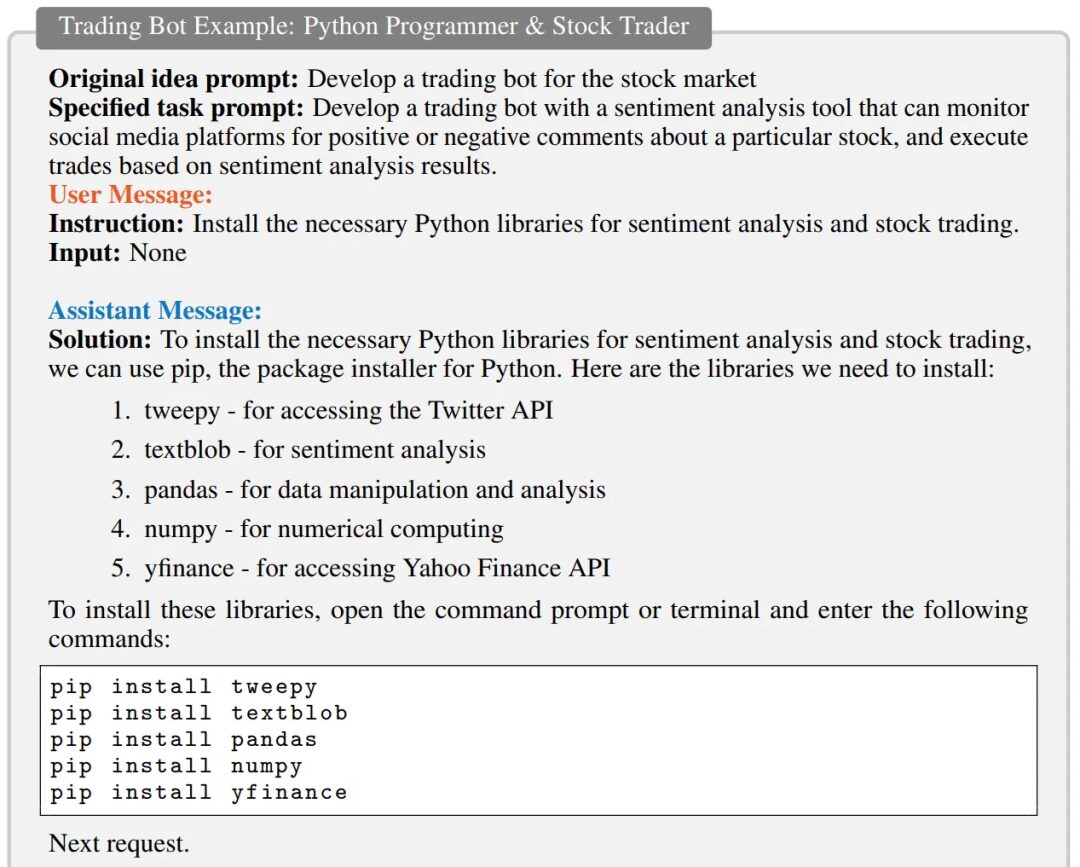

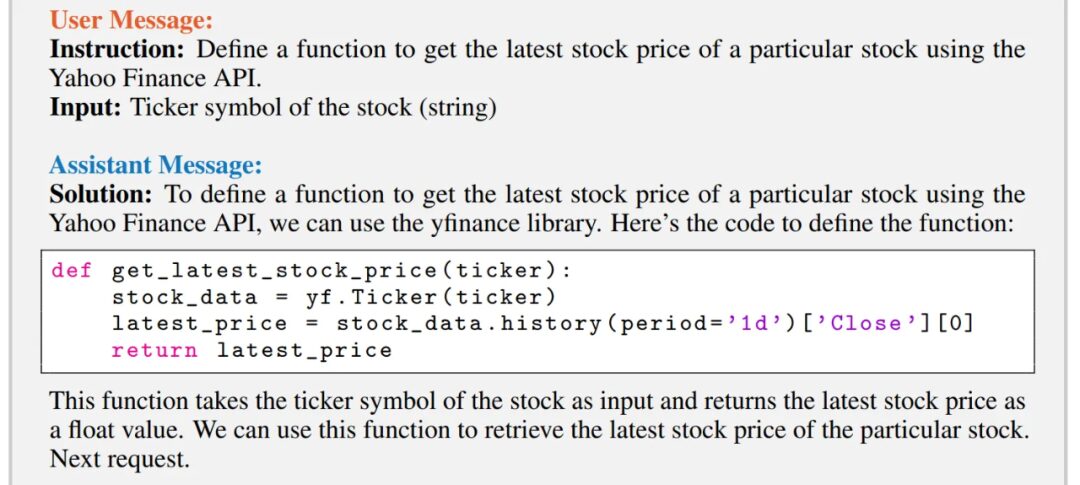

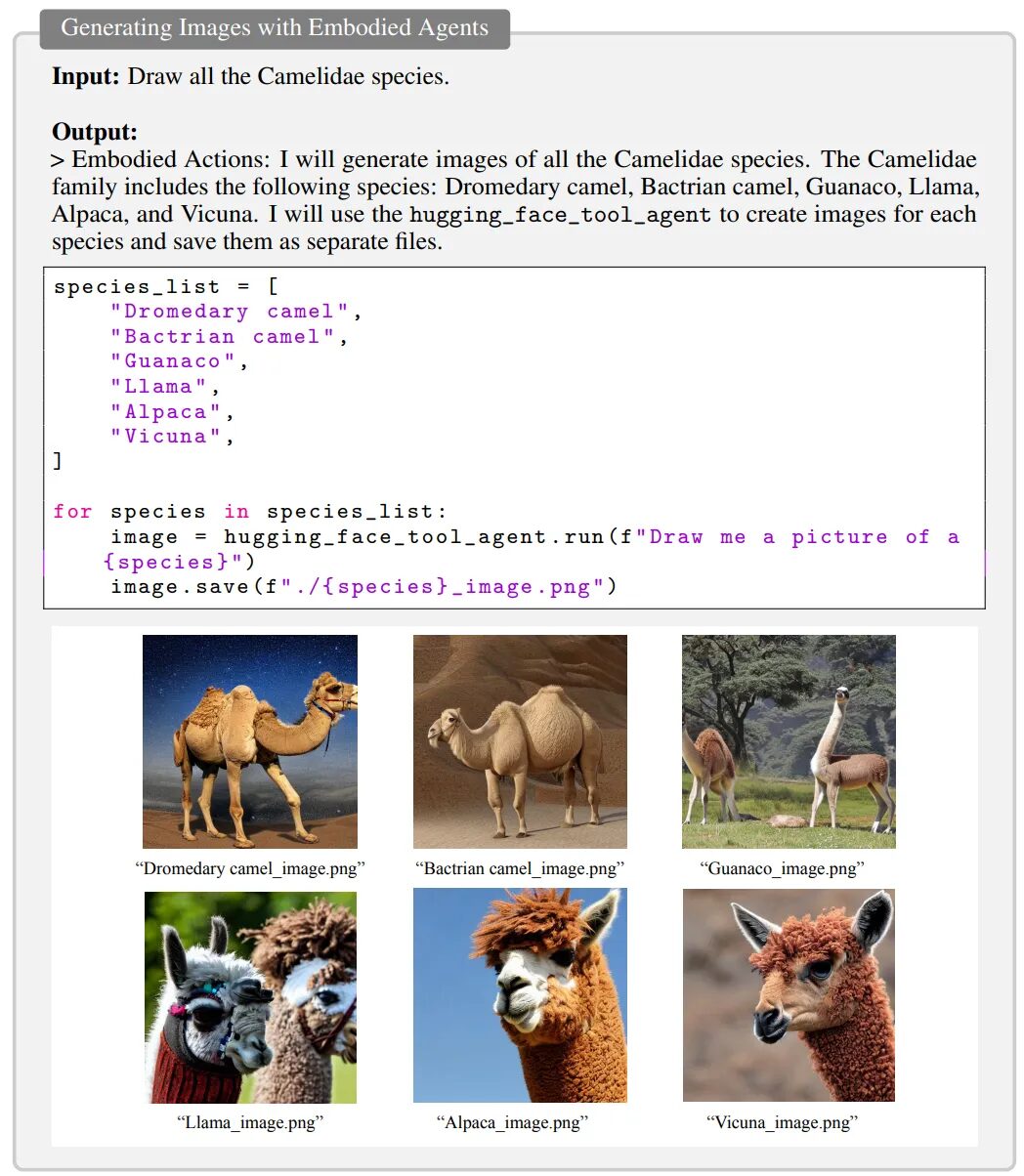

2. CAMEL Usage Examples

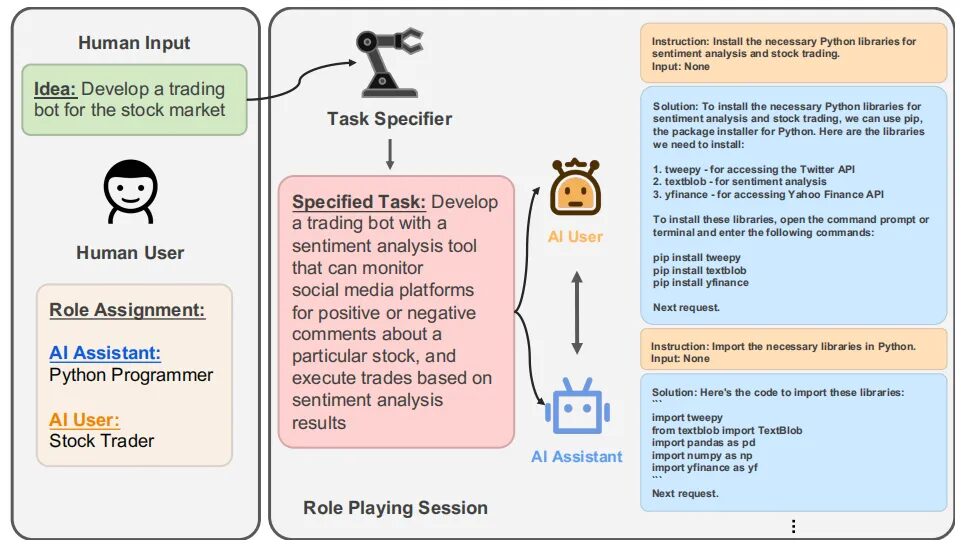

2.1 Cooperative Role-Playing

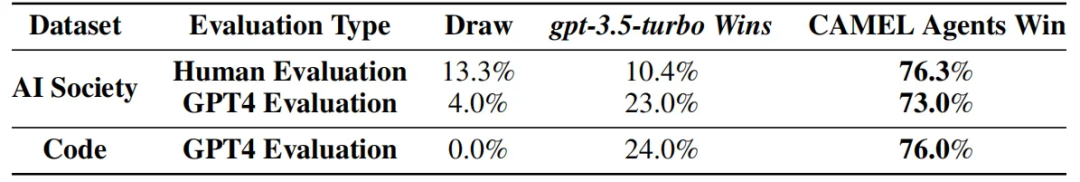

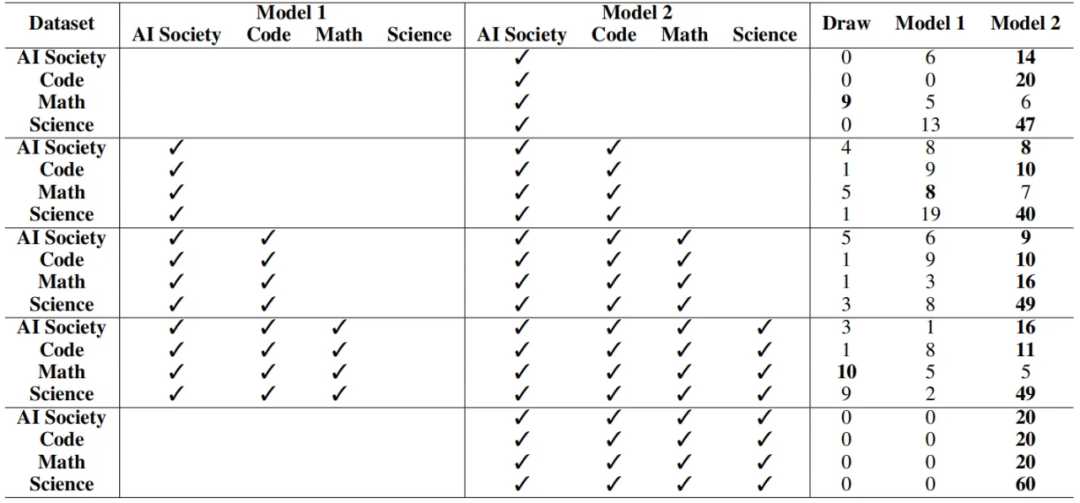

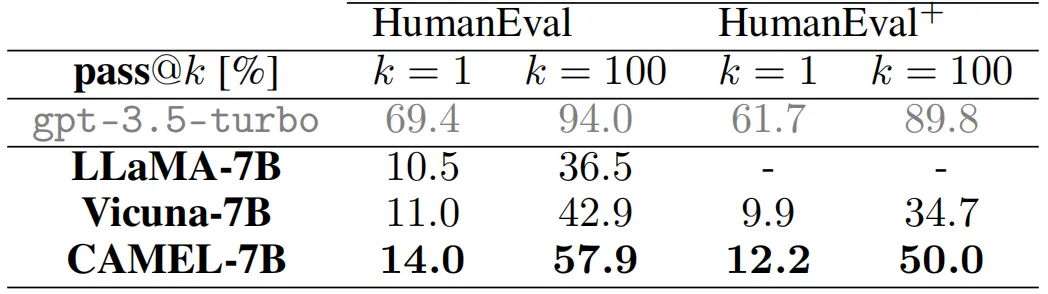

3. Experimental Results

4. CAMEL AI Open Source Community

