From Vacuum Tubes to Transistors

The Birth of Chips and Silicon Valley

Photolithography Technology

Extreme Ultraviolet Lithography Technology

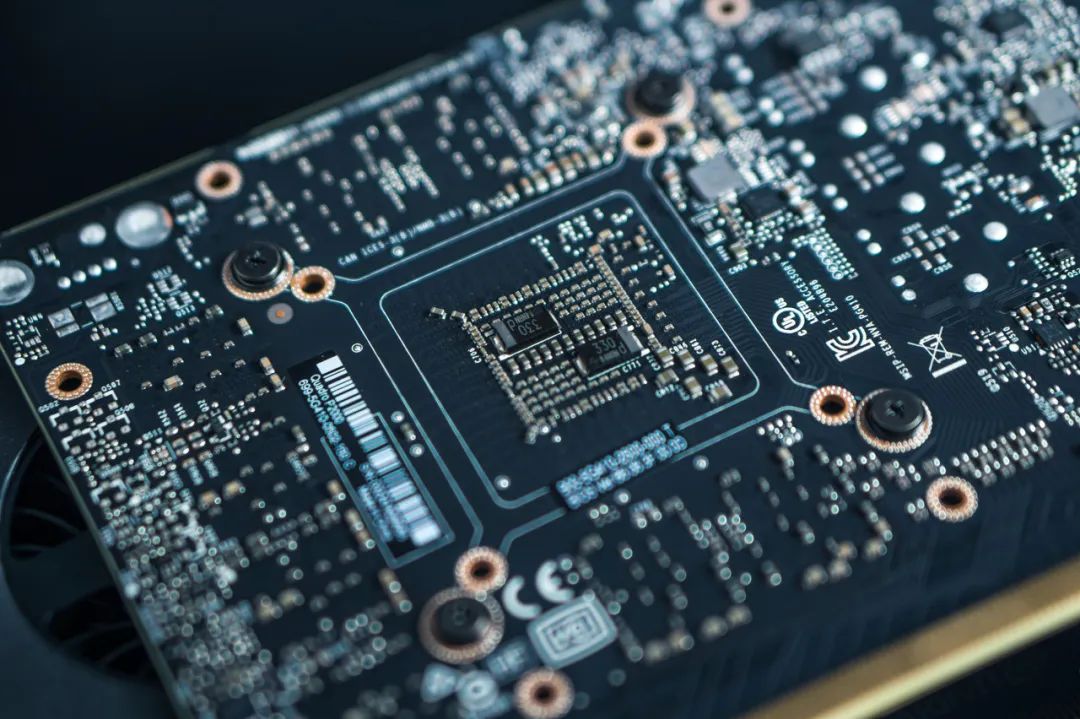

Applications of Chips

The first type is logic chips, used as processors in our computers, phones, or network servers;

The second type is memory chips. A classic example is the DRAM chip developed by Intel. Before this product was launched, computer memory relied on magnetic cores: a core magnetized in one direction represented 1, and a core magnetized in the opposite direction represented 0. Intel's approach combined transistors and capacitors instead: a charged capacitor represented 1, and an uncharged one represented 0. The principle is similar to that of magnetic cores, but everything is integrated on the chip, which makes the memory smaller and less error-prone. This category of chips provides computers with both short-term and long-term memory;

The third type is called "analog chips," which process analog signals, that is, continuously varying signals such as sound, light, or temperature.
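To make the contrast between magnetic cores and DRAM more concrete, here is a toy Python model of the two storage ideas described above. It is a deliberately simplified sketch, not how a real chip is wired: the class names, the normalized charge scale, and the 0.5 reading threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CoreCell:
    """Toy magnetic core (hypothetical): the bit is the magnetization direction."""
    polarity: int = -1  # +1 or -1; assumed to start in the "0" direction

    def write(self, bit: int) -> None:
        # Magnetize one way for 1, the opposite way for 0.
        self.polarity = 1 if bit else -1

    def read(self) -> int:
        return 1 if self.polarity > 0 else 0


@dataclass
class DramCell:
    """Toy DRAM cell (hypothetical): the bit is whether the capacitor holds charge."""
    charge: float = 0.0     # normalized: 0.0 = empty, 1.0 = fully charged
    threshold: float = 0.5  # assumed level that separates 0 from 1

    def write(self, bit: int) -> None:
        # The transistor acts as a switch: charge the capacitor for 1, drain it for 0.
        self.charge = 1.0 if bit else 0.0

    def read(self) -> int:
        # Any charge above the threshold is read back as 1.
        return 1 if self.charge > self.threshold else 0


def store_byte(cells, value: int) -> None:
    """Write the 8 bits of `value` into 8 cells, least significant bit first."""
    for i, cell in enumerate(cells):
        cell.write((value >> i) & 1)


def load_byte(cells) -> int:
    """Reassemble the stored bits into an integer."""
    return sum(cell.read() << i for i, cell in enumerate(cells))


cells = [DramCell() for _ in range(8)]
store_byte(cells, 0b10110010)
print(load_byte(cells))  # 178
```

Swapping `DramCell` for `CoreCell` gives the same result, which is the point the article makes: both store a 1 or a 0 per cell, and only the physical mechanism changes.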

Manufacturing and Supply Chain

Conclusion

Planning and Production

Author丨Ye Shi, Popular Science Creator

Reviewed by丨Huang Yongguang, Associate Researcher at the Institute of Semiconductors, Chinese Academy of Sciences

Planning丨Xu Lai

Editor丨Yi Nuo


The cover image and the images in this article come from a copyrighted stock photo library.

Unauthorized use may lead to copyright disputes.