From Vacuum Tubes to Transistors

The Birth of Chips and Silicon Valley

Photolithography Technology

Extreme Ultraviolet Lithography Technology

Applications of Chips

By function, chips fall into three main types. The first type is logic chips, which serve as the processors in our computers, phones, and network servers;

The second type is memory chips. A classic example is the DRAM chip developed by Intel. Before this product was launched, data was stored in magnetic cores: tiny rings magnetized in one direction represented 1, and rings magnetized in the opposite direction represented 0. Intel’s approach was to combine transistors with capacitors, so that a charged capacitor represented 1 and an uncharged one represented 0. The storage principle is similar to that of magnetic cores, but because everything is integrated on a chip, the new memory was smaller and less error-prone. Memory chips give computers both short-term memory, such as DRAM, which holds working data while the machine is running, and long-term memory, such as flash chips, which keep data even when the power is off;

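The third type is “analog chips,” which process analog signals: continuously varying quantities such as sound, light, temperature, and radio waves. They often convert these signals into digital data that logic chips can work with.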
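To make the three chip types a little more concrete, here is a toy Python sketch with one miniature model per type. It is a teaching aid under loose assumptions, not how real chips are designed. The logic part builds a one-bit adder entirely from NAND gates, a standard textbook construction. The memory part stores bits as capacitor "charge" that leaks over time, so cells are read against a threshold and periodically rewritten ("refreshed"). Real DRAM does need periodic refresh, which is where the "dynamic" in its name comes from, but the leak rate and threshold used here are made-up numbers. The analog part is an idealized 3-bit analog-to-digital converter. All function names and parameters are hypothetical.

```python
# Toy models of the three chip types. Illustrative only: real chips are
# transistor circuits, and every number below is a made-up assumption.

# --- 1. Logic chip: a half adder built only from NAND gates ---
def nand(a: int, b: int) -> int:
    return 0 if (a and b) else 1

def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits; return (sum_bit, carry_bit)."""
    n1 = nand(a, b)
    sum_bit = nand(nand(a, n1), nand(b, n1))  # XOR made from four NANDs
    carry = nand(n1, n1)                      # AND = NOT(NAND)
    return sum_bit, carry

# --- 2. Memory chip (DRAM-like): each bit is charge on a capacitor ---
LEAK_PER_STEP = 0.9   # hypothetical: fraction of charge kept per time step
READ_THRESHOLD = 0.5  # hypothetical: charge above this reads as 1

def write_bits(bits: list[int]) -> list[float]:
    # Charged capacitor = 1, uncharged capacitor = 0
    return [1.0 if b else 0.0 for b in bits]

def leak(cells: list[float], steps: int) -> list[float]:
    # Stored charge decays a little every time step
    return [c * LEAK_PER_STEP ** steps for c in cells]

def read_bits(cells: list[float]) -> list[int]:
    # A sense step compares each cell's charge with the threshold
    return [1 if c > READ_THRESHOLD else 0 for c in cells]

def refresh(cells: list[float]) -> list[float]:
    # Read the bits and write them back at full charge
    return write_bits(read_bits(cells))

# --- 3. Analog side: an idealized analog-to-digital converter ---
def adc(voltage: float, v_ref: float = 1.0, n_bits: int = 3) -> int:
    """Map a voltage in [0, v_ref] to one of 2**n_bits integer levels."""
    levels = 2 ** n_bits
    clipped = min(max(voltage, 0.0), v_ref)
    return min(int(clipped / v_ref * levels), levels - 1)

if __name__ == "__main__":
    print(half_adder(1, 1))                           # (0, 1): 1 + 1 = binary 10
    row = write_bits([1, 0, 1, 1])
    print(read_bits(leak(row, 5)))                    # [1, 0, 1, 1]: still readable
    print(read_bits(leak(row, 10)))                   # [0, 0, 0, 0]: charge leaked away
    print(read_bits(leak(refresh(leak(row, 5)), 5)))  # [1, 0, 1, 1]: refresh saved it
    print([adc(v) for v in (0.0, 0.3, 0.5, 1.0)])     # [0, 2, 4, 7]
```

The memory model shows the trade-off behind refreshing. Without a rewrite, the charge eventually drops below the read threshold and the stored 1s turn into 0s. With a rewrite partway through, the data survives.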

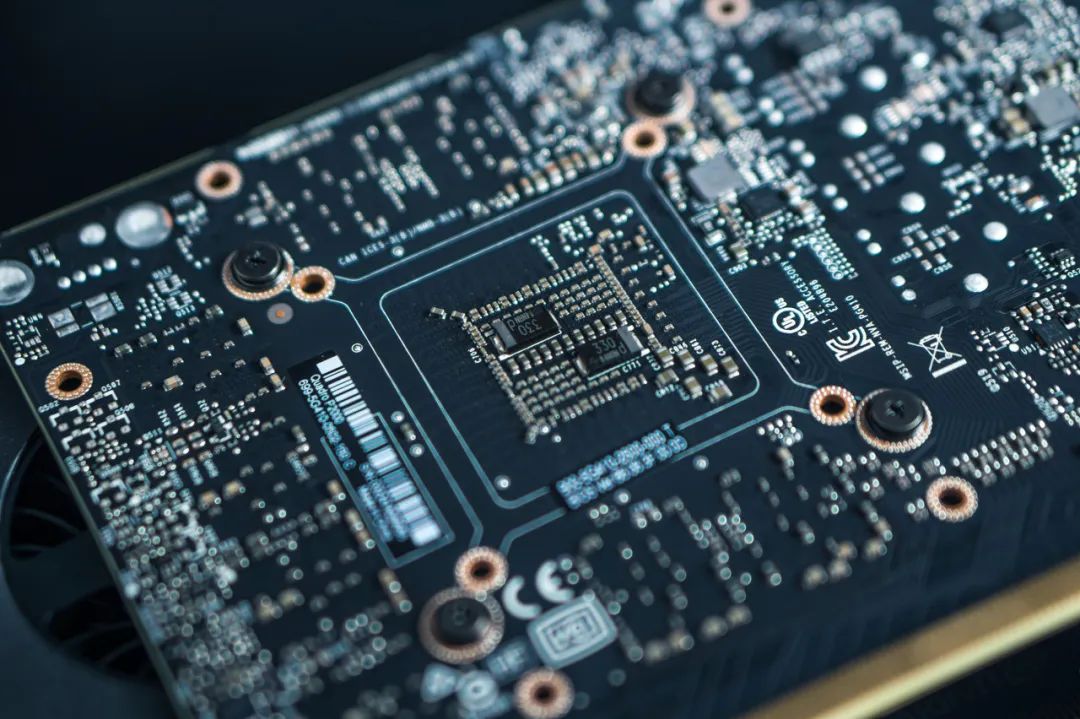

Manufacturing and Supply Chain

Conclusion

Planning and Production

Author: Ye Shi, popular science creator

Reviewed by: Huang Yongguang, associate researcher at the Institute of Semiconductors, Chinese Academy of Sciences

Planning: Xu Lai

Editor: Yi Nuo
