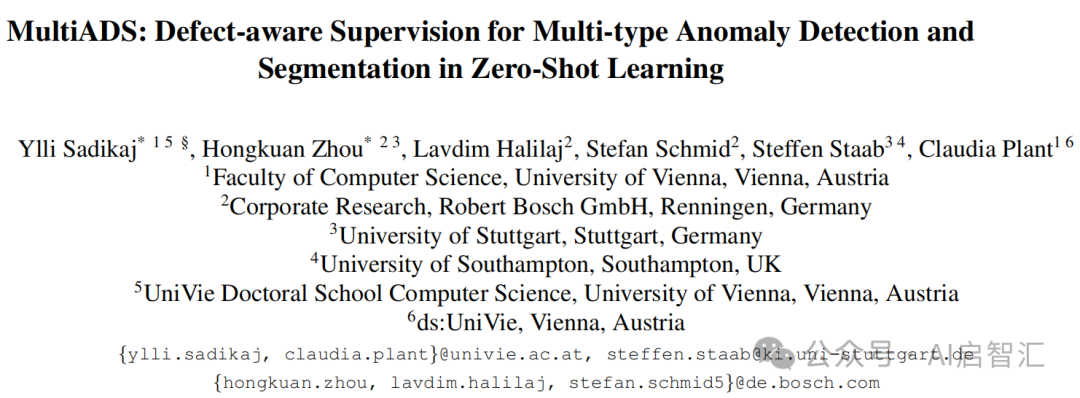

Paper Title: MultiADS: Defect-aware Supervision for Multi-type Anomaly Detection and Segmentation in Zero-Shot Learning

Paper Link: https://arxiv.org/abs/2504.06740

AI Insight Introduction

The paper addresses anomaly detection and segmentation in industrial applications, where existing methods cannot recognize multiple types of defects. It proposes MultiADS, and experiments on multiple datasets demonstrate the method's advantages in zero-shot and few-shot learning settings.

Research Motivation

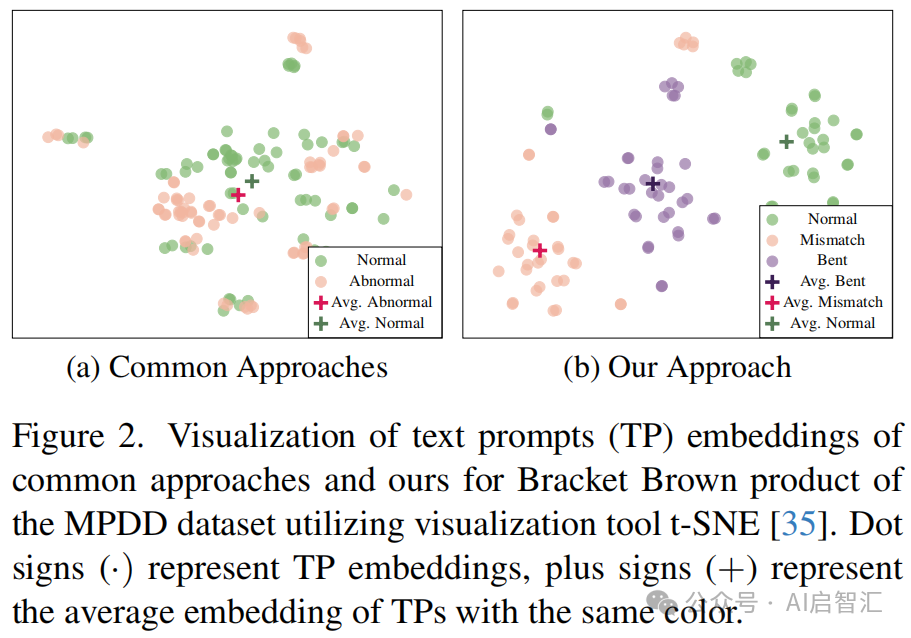

Manufacturers are pursuing greater production flexibility, which increases product variety and raises the probability of defects. In optical inspection, anomaly detection and segmentation are crucial for identifying defective products and locating abnormal regions. Existing methods built on pre-trained models such as CLIP only distinguish between normal and abnormal states, so they do not use knowledge about defect types, and fine-tuning them is prone to overfitting; neither property meets industrial needs. Figure 2a illustrates the information lost when common methods embed text prompts, which makes it difficult to separate normal from abnormal states.

Innovations

New Task Proposed: The paper proposes the task of multi-type anomaly detection and segmentation, in which the defect type is determined at the pixel level. MultiADS can detect multiple defects of the same or different types in an abnormal product and serves as a baseline method for this new task.

Utilizing Pre-trained Knowledge: Detection and segmentation performance is improved by leveraging the prior knowledge of common defect types held by pre-trained vision-language models (VLMs). For instance, constructing defect-aware text prompts helps the model capture image semantics.

Building a Knowledge Base: A Knowledge Base for Anomalies (KBA) is proposed to enrich the descriptions of defect types; it is used for constructing text prompts and fine-tuning VLMs.

Figure 1 compares common methods with the proposed MultiADS method in anomaly detection and recognition, highlighting the advantage of MultiADS in distinguishing different defect types.

Common methods can typically only distinguish between normal and abnormal product states (as shown in Figure 1a). In real industrial inspection, such methods determine whether a product is defective but cannot identify the specific defect type. For example, when inspecting electronic components, they can indicate that a component has a problem but not whether it is bent, scratched, or otherwise defective, which limits their usefulness for precise defect handling and quality improvement.

MultiADS can identify K + 1 states, including one normal state and K corresponding abnormal states of different defect types (as shown in Figure 1b). This means that the method can not only detect whether a product is abnormal but also clearly specify the type of defect. For instance, in mechanical part inspection, it can accurately determine whether the part has defects such as bending, breaking, or wear. This capability is crucial for modern automated production lines, as only by accurately identifying defect types can targeted automated processing measures be taken to improve production efficiency and product quality.

By identifying multiple abnormal states, MultiADS can better distinguish defect types. In practice, this helps operators quickly understand the specific defects present, adjust production processes promptly, reduce scrap rates, and lower production costs. Compared with common methods, MultiADS therefore offers higher application value in industrial product inspection and can meet more complex and fine-grained detection needs.

Methodology

Knowledge Preparation: Metadata from multiple industrial defect detection datasets is used to construct the KBA, which groups defect types into abstract classes and integrates defect attributes. The KBA is then used to build defect-aware text prompts: each defect type in each dataset gets its own text prompts, and the normal state gets corresponding text templates.
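As a rough illustration of how defect-aware prompt sets might be assembled from a knowledge base, the sketch below uses a hypothetical dictionary layout, hypothetical template strings, and made-up defect entries. None of these are taken from the paper's KBA; they only show the idea of producing one prompt set for the normal state plus one per defect type.

```python
# Hypothetical miniature knowledge base: defect type -> descriptive attributes.
# Both the entries and the schema are illustrative assumptions, not the paper's KBA.
KBA = {
    "bent": ["deformed", "curved"],
    "scratch": ["thin", "linear"],
    "hole": ["round", "missing material"],
}

# Hypothetical templates; the paper's actual prompt wording is not reproduced here.
NORMAL_TEMPLATES = [
    "a photo of a flawless {product}",
    "a photo of a {product} without defects",
]
DEFECT_TEMPLATES = ["a photo of a {product} with a {attrs} {defect} defect"]


def build_prompt_sets(product, kba):
    """Return K + 1 prompt sets: one for the normal state, one per defect type."""
    prompt_sets = {"normal": [t.format(product=product) for t in NORMAL_TEMPLATES]}
    for defect, attrs in kba.items():
        prompt_sets[defect] = [
            t.format(product=product, attrs=", ".join(attrs), defect=defect)
            for t in DEFECT_TEMPLATES
        ]
    return prompt_sets


prompt_sets = build_prompt_sets("metal plate", KBA)
for state, prompts in prompt_sets.items():
    print(state, "->", prompts)
```

Each prompt set would then be passed through the text encoder and averaged into a single embedding per state.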

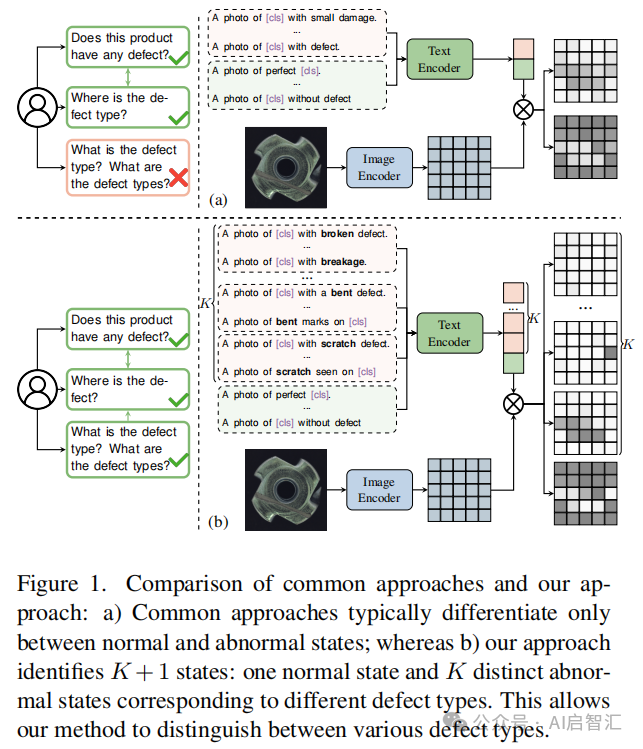

Figure 3 illustrates the training and inference processes of MultiADS for multi-type anomaly detection and segmentation. The pipeline covers text prompt encoding, image feature processing, loss calculation, anomaly score computation, and the detection and segmentation operations under the different settings.

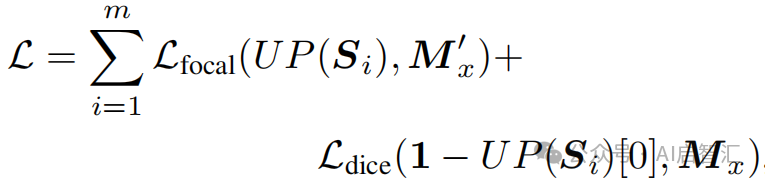

Model Training: During training, images are encoded by the CLIP image encoder to obtain image patch embeddings and global image embeddings at different stages; multiple sets of text prompts are encoded by the CLIP text encoder to obtain averaged text embeddings. A linear adapter aligns the visual and text embedding spaces, producing a similarity map. The training objective combines focal loss and dice loss. Its terms are defined over the multi-defect segmentation map and the binary anomaly map, both computed after an upsampling function is applied to the similarity map.
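To make the combination of focal loss and dice loss concrete, here is a minimal sketch in plain Python. It uses the binary form of both losses on a flattened anomaly map, an assumed focusing parameter `gamma = 2.0`, and an assumed equal weighting of the two terms; the paper's exact formulation, including how each loss is assigned to the multi-defect and binary maps, is not reproduced here.

```python
import math


def focal_loss(probs, targets, gamma=2.0, eps=1e-7):
    """Mean binary focal loss; gamma down-weights pixels that are already easy."""
    total = 0.0
    for p, t in zip(probs, targets):
        p_t = p if t == 1 else 1.0 - p  # probability assigned to the true label
        total += -((1.0 - p_t) ** gamma) * math.log(max(p_t, eps))
    return total / len(probs)


def dice_loss(probs, targets, eps=1e-7):
    """Soft dice loss: 1 - 2 * sum(p * t) / (sum(p) + sum(t))."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    return 1.0 - (2.0 * intersection + eps) / (sum(probs) + sum(targets) + eps)


def combined_loss(probs, targets):
    # Assumption: the two terms are simply added with equal weight.
    return focal_loss(probs, targets) + dice_loss(probs, targets)


# Flattened 2x2 toy anomaly map (already upsampled) and its ground-truth mask.
pred = [0.9, 0.2, 0.1, 0.8]
mask = [1, 0, 0, 1]
print(round(combined_loss(pred, mask), 4))
```

Focal loss handles the heavy imbalance between normal and defective pixels, while dice loss rewards overlap between the predicted and true regions, which is one common reason the two are paired in segmentation.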

Model Inference: During inference, a text prompt set for the target domain is constructed, and images and text prompts are encoded through the encoder and adapter to obtain a similarity map. After upsampling, multi-defect segmentation maps and anomaly maps are computed. The global anomaly score is obtained through the similarity between global image embeddings and text embeddings, used for zero-shot learning. In few-shot learning, the similarity between the query image and reference normal images is computed to obtain the reference anomaly map, which is combined with the original anomaly map for detection and segmentation. Additionally, text prompt sets for defect types unrelated to the product can be filtered out (MultiADS-F).
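The inference steps above can be sketched with toy 2-D embeddings standing in for real CLIP features. The softmax temperature, the choice of anomaly score as one minus the probability of the normal state, the nearest-reference distance for the few-shot map, and the simple averaging used for fusion are all assumptions made for illustration rather than details confirmed by the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def softmax(xs, temperature=0.07):
    # temperature is an assumed hyperparameter
    m = max(xs)
    exps = [math.exp((x - m) / temperature) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def zero_shot_maps(patches, text_embs):
    """Score each patch against K + 1 text embeddings (index 0 = normal).

    Returns a per-patch state label and a per-patch anomaly score.
    """
    labels, anomaly = [], []
    for p in patches:
        probs = softmax([cosine(p, t) for t in text_embs])
        labels.append(max(range(len(probs)), key=probs.__getitem__))
        anomaly.append(1.0 - probs[0])  # assumption: anomaly = 1 - P(normal)
    return labels, anomaly


def reference_anomaly(patches, ref_patches):
    """Few-shot map: distance from each query patch to its nearest normal reference patch."""
    return [min(1.0 - cosine(p, r) for r in ref_patches) for p in patches]


# Toy embeddings: normal state, defect type A, defect type B.
text_embs = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
patches = [[0.9, 0.1], [0.1, 0.9]]          # query image patches
ref_patches = [[1.0, 0.0], [0.95, 0.05]]    # patches from normal reference images

labels, zs_map = zero_shot_maps(patches, text_embs)
ref_map = reference_anomaly(patches, ref_patches)
fused = [0.5 * (a + b) for a, b in zip(zs_map, ref_map)]  # assumption: plain average
print(labels, [round(x, 3) for x in fused])
```

In this toy setup, an image-level score could be obtained the same way by passing the global image embedding through `zero_shot_maps` as a single "patch"; in a real pipeline the per-patch outputs would be reshaped to a grid and upsampled to the image resolution.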

Experiments

Experimental Setup: Five datasets, MVTec-AD, VisA, MPDD, MAD (simulated and real), and Real-IAD, are used, employing a transfer learning setup to evaluate the model in zero-shot and few-shot learning scenarios. The ViT-L-14-336 CLIP backbone network is used, with parameters such as learning rate, batch size, and number of stages set accordingly.

Evaluation Metrics: Anomaly detection is evaluated using AUROC, F1-score, and AP; anomaly segmentation is evaluated using AUROC, F1-score, AP, and PRO; multi-type anomaly segmentation is evaluated using AUROC, F1-score, and AP (macro average).
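For readers unfamiliar with macro averaging, the sketch below computes a macro-averaged F1-score over flattened pixel label maps in plain Python. Treating a class with no predictions and no ground truth as scoring 0, and including the normal class in the average, are assumptions of this example; the paper's exact evaluation protocol may differ.

```python
def macro_f1(pred, target, num_classes):
    """Unweighted mean of per-class F1 over flattened label maps."""
    scores = []
    for c in range(num_classes):
        tp = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        fp = sum(1 for p, t in zip(pred, target) if p == c and t != c)
        fn = sum(1 for p, t in zip(pred, target) if p != c and t == c)
        denom = 2 * tp + fp + fn
        # Assumption: a class absent from both maps contributes 0.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / num_classes


# Toy example with 3 states: 0 = normal, 1 and 2 = two defect types.
target = [0, 0, 0, 0, 1, 1, 2, 2]
pred = [0, 0, 0, 1, 1, 1, 2, 0]
print(round(macro_f1(pred, target, num_classes=3), 4))
```

Because every class counts equally, a rare defect type that is segmented poorly lowers the macro average as much as a common one would, which suits a task where distinguishing each defect type matters.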

Comparison Baselines: Comparison is made with 12 baseline methods including CLIP, CLIP-AC, CoOp, covering zero-shot and few-shot learning methods.

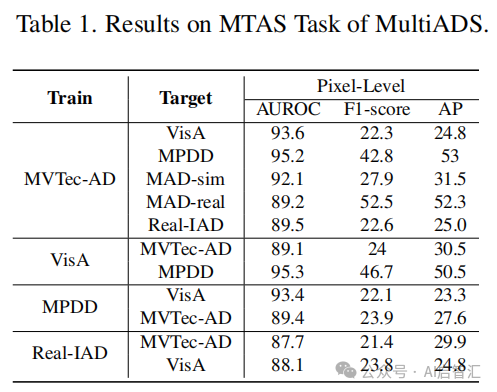

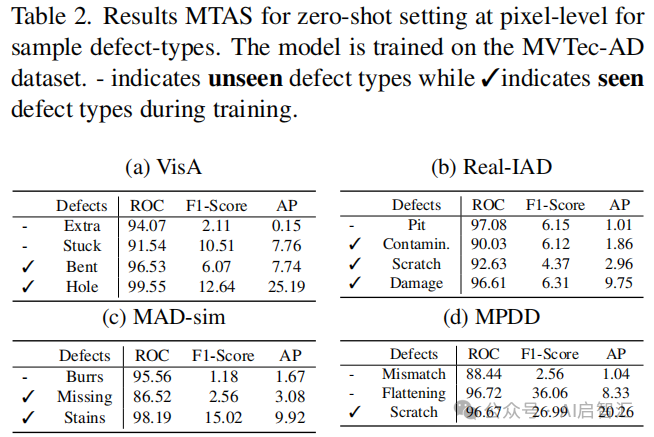

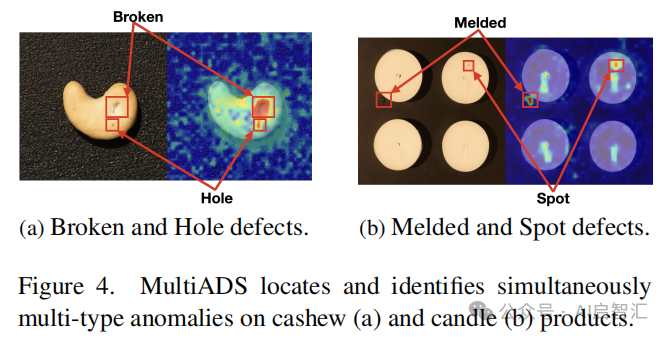

Multi-Type Anomaly Segmentation Task Results: Table 1 reports MultiADS results on the multi-type anomaly segmentation (MTAS) task in the zero-shot setting, giving pixel-level AUROC, F1-score, and AP for different combinations of training and target sets; these show how accurately the method distinguishes defect types. Table 2 lists pixel-level AUROC, F1-score, and AP in the zero-shot setting for a sample of defect types, comparing segmentation performance on defect types seen and unseen during training and thus reflecting the model's recognition ability and generalization across defects. Figure 4 shows detection results for cashew and candle products, where MultiADS locates and classifies multiple defect types, such as broken and hole defects, in a single image.

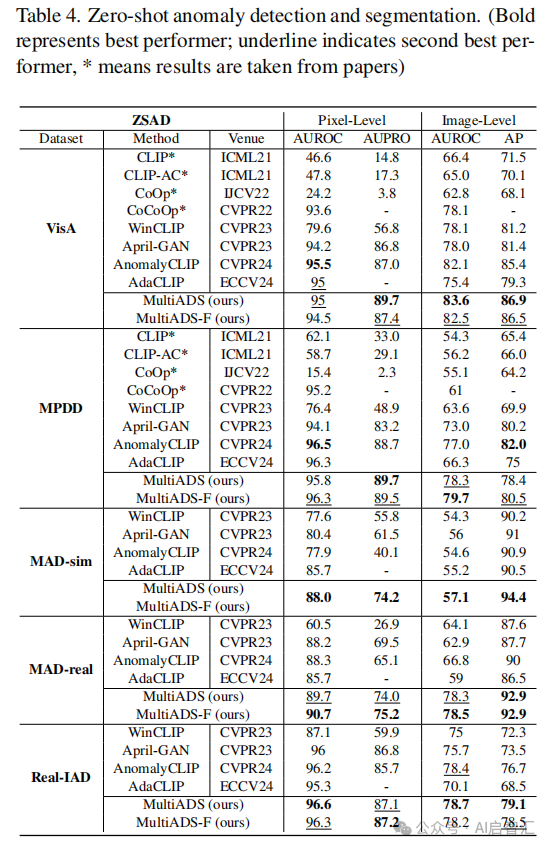

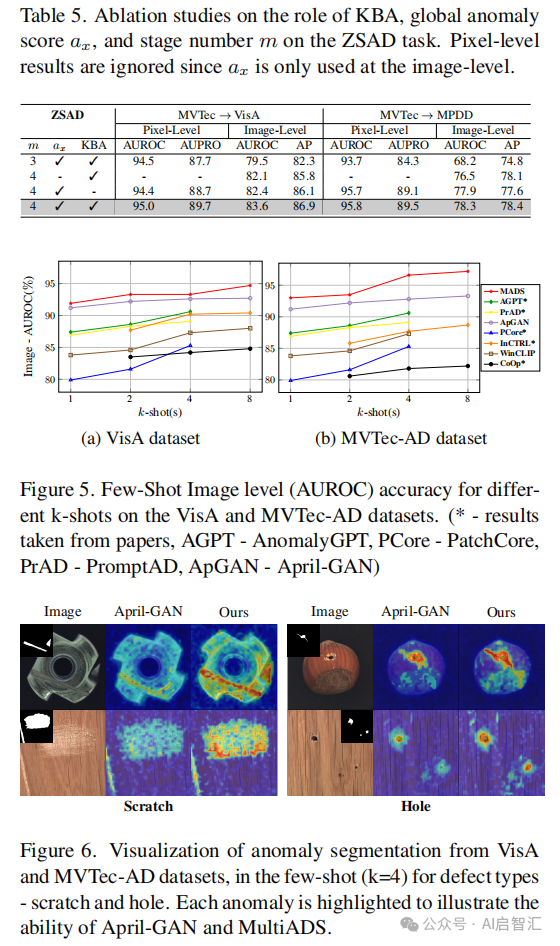

Binary Detection and Segmentation Task Results: Table 4 reports the pixel-level (AUROC, AUPRO) and image-level (AUROC, AP) performance of MultiADS and the baseline methods on zero-shot anomaly detection and segmentation (ZSAD) across the VisA, MPDD, MAD (sim and real), and Real-IAD datasets, showing the advantage of MultiADS and MultiADS-F over the baselines. Table 5 uses ablation experiments to quantify the impact of the KBA, the global anomaly score, and the number of stages on the ZSAD task. Figure 5 shows image-level AUROC for different methods on few-shot anomaly detection (FSAD) across the VisA and MVTec-AD datasets for k = 1, 2, 4, and 8 shots, where MultiADS performs best overall. Figure 6 compares the anomaly segmentation results of MultiADS and April-GAN for scratch and hole defect types in the few-shot (k = 4) setting on the VisA and MVTec-AD datasets, illustrating the advantage of MultiADS in recognizing anomalies and segmenting different defect types.

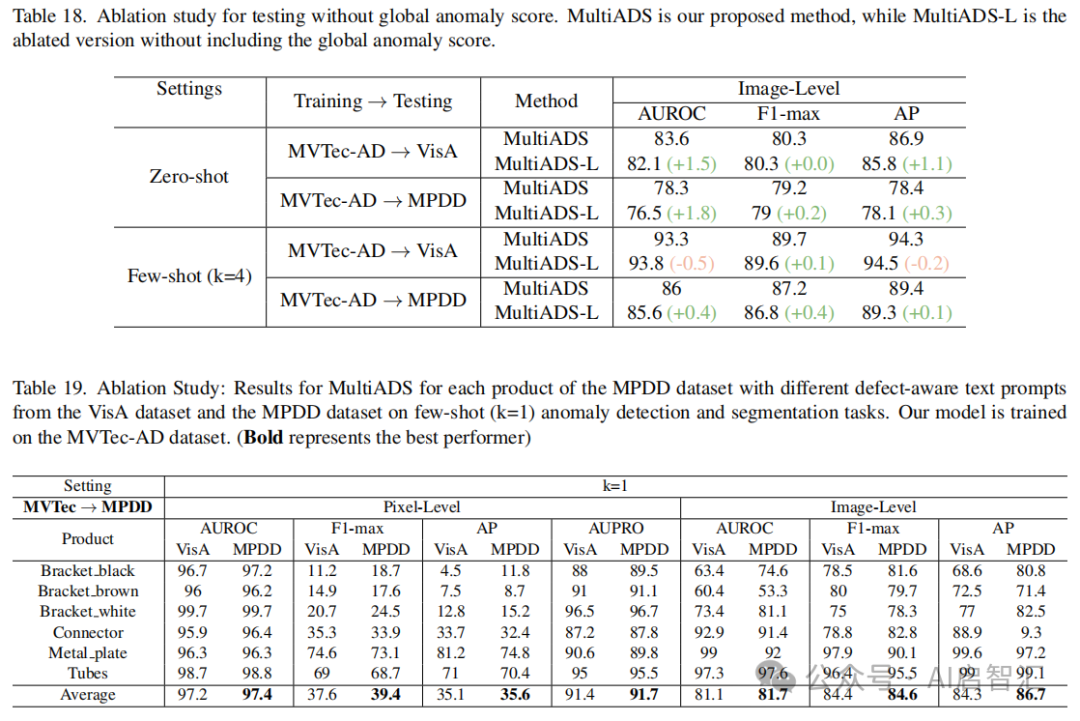

Ablation Experiments: Table 18 reports an ablation that removes the global anomaly score, comparing MultiADS and MultiADS-L in zero-shot and few-shot settings to assess the score's impact on anomaly detection. Table 19 reports an ablation on defect-aware text prompts, showing how prompts built from different datasets affect few-shot anomaly detection and segmentation on the MPDD dataset.

The paper provides a wealth of experimental data; interested readers can refer to the original text.

Summary

The paper proposes MultiADS, a zero-shot learning method for multi-type anomaly detection and segmentation in industrial scenarios. Traditional methods only distinguish between normal and abnormal states and cannot identify specific defect types (such as bending, scratches, or holes). MultiADS addresses this limitation through multi-type anomaly segmentation, a knowledge base with defect-aware text prompt design, and zero-shot and few-shot learning.

Editor | AI Insight
