Journal Information:

IEEE Transactions on Industrial Informatics (CAS Q1, JCR Q1 TOP, IF=11.7)

College of Information Science and Technology, Beijing University of Chemical Technology, Beijing 100029, China

Engineering Research Center of Intelligent PSE, Ministry of Education of China, Beijing 100029, China

Soft measurement using neural network technology is increasingly applied in industrial processes. In recent years, the safety and robustness of neural network-based soft sensors have become major concerns, because current research indicates that neural networks are vulnerable to adversarial attacks: small perturbations applied to the input can lead to large deviations in the output. If the soft sensor for a critical process variable is attacked, it may cause considerable damage to the industrial process. This paper studies attack methods for neural network-based industrial soft sensors. Considering the characteristics of industrial soft sensors, it proposes two novel adversarial attack methods. The first, called Mirror Output Attack (MOA), is a subtle attack that flips the output curve to reverse the output direction. The second, called Translation MOA (TMOA), flips the output curve while also translating it to change the apparent operating condition, which can easily lead operators to misoperate. The effectiveness of MOA and TMOA is demonstrated through an industrial case study of a sulfur recovery unit process. Simulation results show that neural network-based industrial soft sensors can be defeated by the two proposed adversarial attack methods. Research on adversarial attack methods can provide a basis for defending against attacks, thereby enhancing the security and robustness of soft sensors.

Malicious attacks, Mirror Output Attack (MOA), soft measurement, Translation Mirror Output Attack.

Monitoring critical quality variables is an effective way to ensure industrial process safety and improve product yield [1]. However, due to the limitations of sensors and the complexity of the industrial field, direct measurement of critical quality variables can be challenging. Fortunately, some auxiliary variables, such as temperature, pressure, and flow, can be measured easily. Soft sensors predict critical quality variables with mathematical models that map the relevant auxiliary variables to the critical quality variables [2]. The purpose of a soft sensor is to provide accurate measurements of critical quality variables, in other words, to make the soft sensor output as close to the actual value as possible. With the help of soft sensors, operators can understand the current state of the industrial process. Currently, there are two main types of soft sensors: knowledge-driven and data-driven [3], [4]. Knowledge-driven soft sensors use physical equations based on industrial process principles. Unfortunately, the large scale of modern industrial processes makes it difficult to fully understand their underlying principles and mechanisms, so developing and implementing knowledge-driven soft sensors can be time-consuming and costly. On the other hand, the advent of the industrial big data era provides a foundation for data-driven soft sensors, which are therefore increasingly popular in industrial processes. Among data-driven soft sensors, neural network (NN) technology has been widely applied because of its powerful nonlinear regression capability [6], [7]. Several NN models, such as Recurrent Neural Networks (RNN) [8], Long Short-Term Memory (LSTM) networks [9], Convolutional Neural Networks (CNN) [10], and Autoencoders [11], have been extensively developed as soft sensors [12].
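As a minimal sketch of the data-driven idea, the snippet below fits a model that maps auxiliary variables to a quality variable on synthetic data. It is an assumption-laden stand-in: a linear least-squares model replaces the neural networks discussed above, and the data and the helpers `fit_linear_soft_sensor` and `predict` are hypothetical, not taken from the paper.

```python
import numpy as np

def fit_linear_soft_sensor(X, y):
    """Fit y ~ X @ w + b by ordinary least squares.

    A linear model is only a stand-in for an NN-based soft sensor here.
    """
    X_aug = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    coef, *_ = np.linalg.lstsq(X_aug, y, rcond=None)
    return coef[:-1], coef[-1]                          # weights, bias

def predict(w, b, X):
    """Soft sensor output: estimated quality variable for each input row."""
    return X @ w + b

# Synthetic auxiliary variables (think temperature, pressure, flow) and a
# quality variable that depends on them; purely illustrative data.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
true_w = np.array([0.5, -1.2, 2.0])
y = X @ true_w + 0.3 + 0.01 * rng.normal(size=200)

w, b = fit_linear_soft_sensor(X, y)
y_hat = predict(w, b, X)
rmse = np.sqrt(np.mean((y_hat - y) ** 2))
```

In practice the model would be trained on historical plant data and validated on held-out samples before being deployed online.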

Although neural networks have shown good performance in industrial soft sensors, recent studies indicate that they have security vulnerabilities and are susceptible to adversarial samples. Adversarial samples are carefully designed inputs that cause significant changes in neural network outputs by adding imperceptible perturbations to the original input. This type of attack is referred to as an adversarial attack [16]. In recent years, extensive research has been conducted on adversarial attacks, including the Fast Gradient Sign Method (FGSM) proposed by Goodfellow et al. [17], Projected Gradient Descent (PGD) proposed by Madry et al. [18], the Carlini and Wagner (C&W) attack [19], and the DeepFool attack proposed by Moosavi-Dezfooli et al. [20]. Additionally, Xiao et al. [21] proposed an attack method called AdvGAN, which uses the structure of generative adversarial networks to generate adversarial samples.
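For reference, the FGSM and PGD updates cited above can be written as follows for a soft sensor f, an input x with target y, a loss L (for regression, typically the squared error), a perturbation budget ε, and a step size α. Π denotes projection onto the L∞ ball of radius ε around x. The notation is ours, and PGD is often started from a random point inside the ball rather than from x itself.

```latex
\begin{aligned}
\text{FGSM:}\quad & x^{\mathrm{adv}} = x + \epsilon\,\operatorname{sign}\!\big(\nabla_x \mathcal{L}(f(x), y)\big),\\
\text{PGD:}\quad & x^{(t+1)} = \Pi_{\mathcal{B}_\infty(x,\epsilon)}\Big(x^{(t)} + \alpha\,\operatorname{sign}\!\big(\nabla_x \mathcal{L}(f(x^{(t)}), y)\big)\Big),\qquad x^{(0)} = x,\\
\text{Regression loss:}\quad & \mathcal{L}(f(x), y) = \big(f(x) - y\big)^{2}.
\end{aligned}
```

FGSM takes one step of size ε; PGD takes many smaller steps and clips back into the ε-ball after each one, which is why it is usually the stronger of the two.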

Despite the interest in adversarial attacks, they have mainly been applied to classification tasks, and there is little research on them in the field of soft sensors. In practical industrial processes, NN-based soft sensors have been widely adopted, and the safety of production depends on their accuracy and robustness. However, because neural networks are sensitive to adversarial samples, NN-based soft sensors inherit this weakness. If an NN-based soft sensor is attacked, it may cause significant damage to the industrial process [22]. Moreover, in recent years, the intelligent upgrading of industry has broken down the “information silos” of traditional Industrial Control Systems (ICSs). Instead, ICSs now connect production equipment through the Industrial Internet, which provides convenience for manufacturers but also opens vulnerabilities for attackers. Unfortunately, the cybersecurity mechanisms and protections in ICSs are weak. As a result, many factories and devices still have significant security vulnerabilities and risks that allow attackers to launch attacks on soft sensors [23], [24], [25]. Considering the uniqueness of industrial processes, Kong and Ge [26] proposed Directly Attack Output (DAO) and Iterative DAO (IDAO) to attack NN-based soft sensors.

In this paper, we propose two attack methods based on AdvGAN: Mirror Output Attack (MOA) and Translation MOA (TMOA). The contributions of this paper are summarized as follows.

1) For the first time, adversarial attack methods based on AdvGAN are introduced into the field of industrial soft sensors. Due to its black-box attack capability, AdvGAN can be effectively used to attack industrial soft sensors.

2) This paper proposes two mirror output attack methods. The first, MOA, targets a single operating condition. MOA reverses the output direction, making the adversarial output symmetric to the actual output without changing the output interval. The second, TMOA, is designed for multiple operating conditions. Building on MOA, TMOA not only flips the output symmetrically but also shifts the output interval in a controllable manner. As a result, operators may mistakenly believe that the operating state has changed.

3) An industrial case study of a Sulfur Recovery Unit (SRU) [27] is used to validate the attack effects of the proposed MOA and TMOA. Compared to methods based on FGSM and PGD, MOA and TMOA are more aggressive in white-box attacks and more reasonable and stealthy in black-box attacks. Therefore, MOA and TMOA have the potential to cause serious harm to industrial processes.
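For orientation, the training objective of the original AdvGAN of Xiao et al. [21] is recalled below, where G is the perturbation generator, D the discriminator, f the attacked model, c a user-chosen bound on the perturbation norm, and α, β weighting factors. The generator minimizes the total loss while the discriminator maximizes the GAN term. How this paper adapts each term to soft sensors is not spelled out in this section; the regression form of the adversarial term on the last line, with a target output y*, is only an assumed example.

```latex
\begin{aligned}
\mathcal{L} &= \mathcal{L}_{\mathrm{adv}} + \alpha\,\mathcal{L}_{\mathrm{GAN}} + \beta\,\mathcal{L}_{\mathrm{hinge}},\\
\mathcal{L}_{\mathrm{GAN}} &= \mathbb{E}_{x}\big[\log D(x)\big] + \mathbb{E}_{x}\big[\log\big(1 - D(x + G(x))\big)\big],\\
\mathcal{L}_{\mathrm{hinge}} &= \mathbb{E}_{x}\big[\max\big(0,\ \lVert G(x)\rVert_2 - c\big)\big],\\
\mathcal{L}_{\mathrm{adv}} &= \mathbb{E}_{x}\big[\big(f(x + G(x)) - y^{*}\big)^{2}\big] \quad \text{(assumed regression form)}.
\end{aligned}
```

Once G is trained, a perturbation for a new sample costs a single forward pass, which is one practical advantage of a generator-based attack over iterative gradient methods.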

The main objective of adversarial attacks on soft sensors is to reduce the accuracy of the soft sensor, producing a significant error between the soft sensor output and the actual value. At the same time, industrial processes are usually in a stable state, which means that output fluctuations are minimal. Therefore, the attacked output should also remain stable so that the operator accepts it as normal. To meet these requirements, this paper proposes two adversarial attack methods targeting neural network-based soft sensors. The first method, MOA, flips the output and reverses the direction of the output curve. MOA keeps the output stable while increasing the error between the soft sensor output and the actual value. The second method, TMOA, additionally changes the output interval on top of reversing the direction of the output curve. TMOA can enlarge or shrink the output according to the attacker’s requirements while controlling the output interval. Industrial production processes have various operating conditions, and different conditions are reflected in different intervals of the process data. Field operators need to continuously adjust operations according to the operating condition. After the attack, whether the output is enlarged or shrunk, operators may be deceived into believing that the operating condition has changed, leading to misoperation.
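One simple way to make the MOA and TMOA goals concrete is to build target curves from the clean soft sensor output: reflect it about its mean for MOA, then rescale and shift it for TMOA. The sketch below assumes this construction; `moa_target`, `tmoa_target`, and the `shift`/`scale` parameters are hypothetical illustrations, and the paper's AdvGAN-based attacks are not defined this way in this section.

```python
import numpy as np

def moa_target(y):
    """Hypothetical MOA target: reflect the output curve about its mean.

    The reflected curve keeps the same mean and spread (same output
    interval) but moves in the opposite direction at every sample.
    """
    y = np.asarray(y, dtype=float)
    return 2.0 * y.mean() - y

def tmoa_target(y, shift=0.0, scale=1.0):
    """Hypothetical TMOA target: mirror, rescale, then translate.

    `scale` enlarges (>1) or shrinks (<1) the fluctuation around the mean;
    `shift` moves the whole curve to a new level, mimicking a change of
    operating condition.
    """
    y = np.asarray(y, dtype=float)
    mirrored = moa_target(y)
    return y.mean() + scale * (mirrored - y.mean()) + shift

# Example with a short, made-up soft sensor output.
y = np.array([1.0, 1.2, 0.9, 1.1, 1.3])
m = moa_target(y)
t = tmoa_target(y, shift=0.5, scale=0.8)
```

Reflecting about the mean preserves the average and spread of the curve, which matches the description of MOA leaving the output interval unchanged, while `shift` plays the role of TMOA's move to a new operating level.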

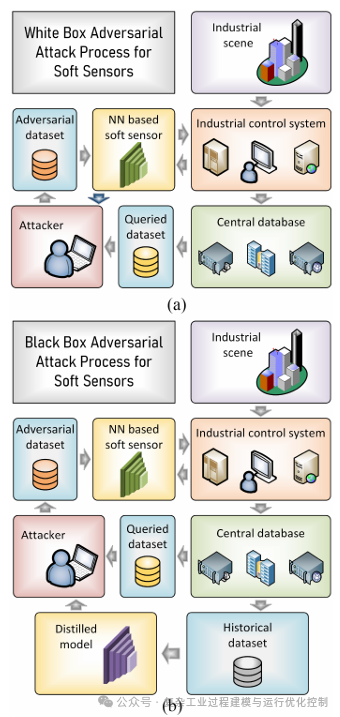

Based on the attacker's background knowledge of the attacked neural network-based soft sensor, adversarial attacks can be divided into white-box attacks and black-box attacks. In white-box attacks, the structure and parameters of the neural network-based soft sensor are known, so perturbations can be generated directly from the soft sensor. White-box attacks are therefore relatively easy to implement. In black-box attacks, by contrast, the attacker has no knowledge of the structure or parameters of the soft sensor, so a distillation model must be established to implement the attack. The distillation model is trained on input and output pairs obtained by querying the neural network-based soft sensor, and its purpose is to replicate or approximate the soft sensor. The white-box attack strategy is then applied to the trained distillation model. The deployment of black-box attacks is therefore more complex than that of white-box attacks. Furthermore, some necessary statements and assumptions for adversarial attacks on neural network-based soft sensors are given below. The attacks studied in this paper are valid only under these statements and assumptions.
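The distillation step can be sketched as follows, under stated assumptions: `black_box_sensor` is a hypothetical stand-in for the deployed soft sensor that the attacker can only query, and the distillation model is a small random-feature network (a tanh hidden layer with fixed random weights and a ridge-regression output layer), chosen only to keep the example short. The paper's own distillation model may differ.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical black-box soft sensor: the attacker can call it but cannot
# see its structure or parameters.
_hidden_w = np.array([0.8, -0.5, 1.5, 0.2])

def black_box_sensor(X):
    return np.tanh(X @ _hidden_w)

class RandomFeatureSurrogate:
    """Distillation model: fixed random tanh hidden layer plus a ridge-regression
    output layer fitted to queried input/output pairs."""

    def __init__(self, n_inputs, n_hidden=200, ridge=1e-3, seed=0):
        r = np.random.default_rng(seed)
        self.W = r.normal(size=(n_inputs, n_hidden))
        self.c = r.normal(size=n_hidden)
        self.ridge = ridge
        self.beta = None

    def _features(self, X):
        H = np.tanh(X @ self.W + self.c)
        return np.hstack([H, np.ones((X.shape[0], 1))])  # bias column

    def fit(self, X, y):
        H = self._features(X)
        A = H.T @ H + self.ridge * np.eye(H.shape[1])
        self.beta = np.linalg.solve(A, H.T @ y)
        return self

    def predict(self, X):
        return self._features(X) @ self.beta

# Query the black-box sensor to collect training pairs for the distillation model.
X_query = rng.normal(size=(2000, 4))
y_query = black_box_sensor(X_query)
surrogate = RandomFeatureSurrogate(n_inputs=4).fit(X_query, y_query)

# Check how well the distillation model replicates the sensor on new inputs.
X_new = rng.normal(size=(500, 4))
y_new = black_box_sensor(X_new)
residual = surrogate.predict(X_new) - y_new
r2 = 1.0 - np.sum(residual ** 2) / np.sum((y_new - y_new.mean()) ** 2)
```

A high held-out R² suggests the surrogate's input gradients are a reasonable proxy for the sensor's, which is what a transferred white-box attack relies on.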

Statement 1: Both MOA and TMOA target neural network-based soft sensors; like the adversarial attack methods discussed above, they are designed for neural network-based models.

Statement 2: The queried dataset refers to the auxiliary-variable data fed to the soft sensor while the industrial process is online, that is, the test samples of the online testing phase. The generated adversarial perturbations are applied to the queried dataset.

Assumption 1: The attacker has the right to access and modify the queried dataset.

Assumption 2: In black-box attacks, the attacker can query the historical inputs and outputs of the neural network-based soft sensor to establish a distillation model.

Based on the above statements and assumptions, the specific steps for deploying MOA and TMOA in white-box attacks are as follows.

Step 1: Obtain the queried dataset and knowledge of the NN-based soft sensor from the ICS.

Step 2: Generate adversarial perturbations based on the queried dataset and the neural network-based soft sensor. Apply adversarial perturbations to the queried dataset to obtain the adversarial dataset.

Step 3: Input the adversarial dataset into the neural network-based soft sensor to obtain adversarial outputs.

The specific steps for deploying MOA and TMOA in black-box attacks are as follows.

Step 1: Obtain the queried dataset and historical inputs and outputs of the NN-based soft sensor from the ICS.

Step 2: Train the distillation model on the historical inputs and outputs of the neural network-based soft sensor.

Step 3: Generate adversarial perturbations based on the queried dataset and the distillation model. Apply the adversarial perturbations to the queried dataset to obtain the adversarial dataset.

Step 4: Input the adversarial dataset into the neural network-based soft sensor to obtain adversarial outputs.

Based on the discussion of white-box and black-box attacks, Figure 2 shows the deployment diagram for both types of attack.

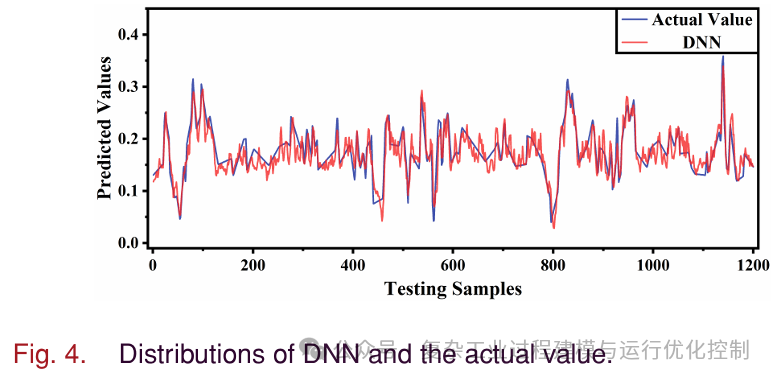
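To illustrate Step 2 of the white-box procedure, the sketch below generates perturbations that push a soft sensor's output toward a mirrored target under an L∞ budget. It is a PGD-style gradient attack, closer in spirit to the PGD-based comparison methods than to the AdvGAN-based MOA proposed in the paper. The model `sensor` (a single tanh unit with known weights), the mirror target, and the parameters `eps`, `alpha`, and `steps` are all assumptions for illustration. In a black-box attack, the same routine would be run against the distillation model instead.

```python
import numpy as np

def sensor(X, w, b):
    """Hypothetical white-box soft sensor: f(x) = tanh(x . w + b)."""
    return np.tanh(X @ w + b)

def sensor_input_grad(X, w, b):
    """Gradient of f with respect to each input row: (1 - f^2) * w."""
    f = sensor(X, w, b)
    return (1.0 - f ** 2)[:, None] * w[None, :]

def pgd_toward_target(X, y_target, w, b, eps=0.1, alpha=0.01, steps=50):
    """Signed-gradient descent on (f(x + delta) - y_target)^2 with |delta| <= eps."""
    X_adv = X.copy()
    for _ in range(steps):
        err = sensor(X_adv, w, b) - y_target                        # residual per sample
        grad = 2.0 * err[:, None] * sensor_input_grad(X_adv, w, b)  # d loss / d x
        X_adv = X_adv - alpha * np.sign(grad)                       # step toward the target
        X_adv = X + np.clip(X_adv - X, -eps, eps)                   # project onto the eps-ball
    return X_adv

# Step 1 (simulated): a queried dataset and a known sensor.
rng = np.random.default_rng(2)
w = np.array([0.8, -0.5, 1.5, 0.2])
b = 0.1
X = 0.5 * rng.normal(size=(200, 4))
y = sensor(X, w, b)
y_target = 2.0 * y.mean() - y                   # assumed mirror target about the mean

X_adv = pgd_toward_target(X, y_target, w, b)    # Step 2: adversarial dataset
y_adv = sensor(X_adv, w, b)                     # Step 3: adversarial outputs

loss_before = np.mean((y - y_target) ** 2)
loss_after = np.mean((y_adv - y_target) ** 2)
max_perturbation = np.max(np.abs(X_adv - X))
```

The budget `eps` caps how far each input may move, so the target is only partly reached when the mirror lies far from the clean output; this trade-off between attack strength and imperceptibility is the one discussed for the gradient-based baselines.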

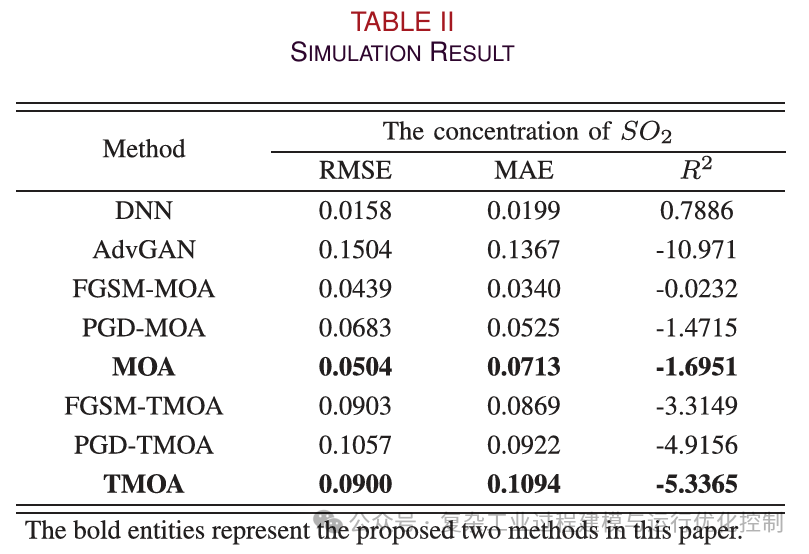

Figure 4 compares the DNN outputs with the actual outputs. The DNN tracks the actual values well; that is, the DNN serves as a neural network-based soft sensor with acceptable accuracy. The simulation results are shown in Table II. Although Table II shows that AdvGAN has the largest attack error, its raw output is not suitable as an adversarial attack.

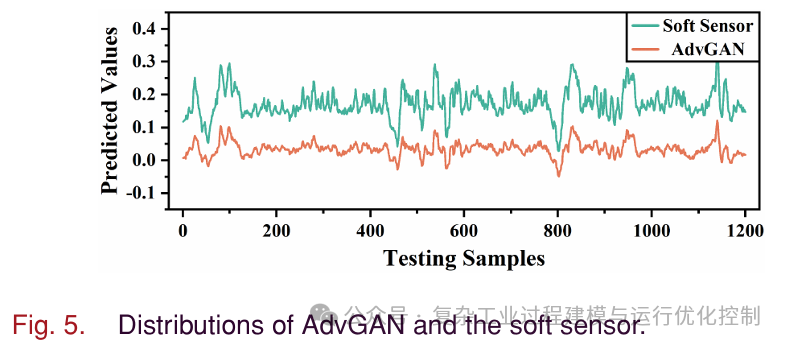

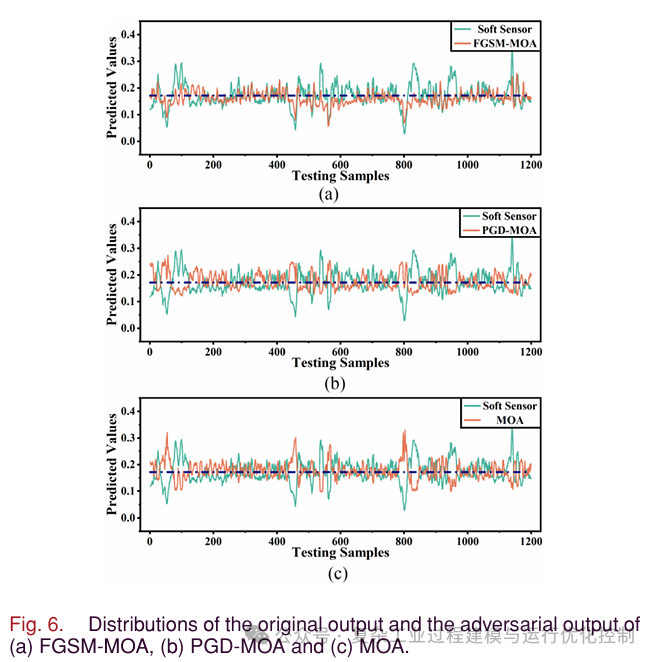

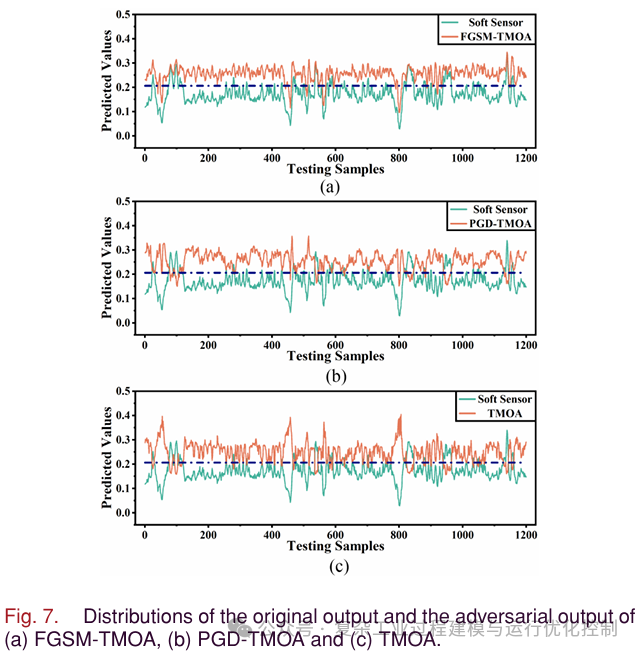

The output curves of AdvGAN and the soft sensor are shown in Figure 5. The AdvGAN output is negative at some sampling points, which is physically unreasonable. Operators can easily recognize such outputs as a soft sensor fault, so the attack fails. Therefore, simply increasing the gap between the adversarial output and the soft sensor output is not enough; the adversarial output must also be constrained to a reasonable range.

As shown in Table II, MOA and TMOA produce larger output errors than the FGSM- and PGD-based methods. To visualize the dynamic behavior of MOA and TMOA, Figures 6 and 7 are plotted. In Figure 6, the black line is the average of the soft sensor output. In Figure 7, the black lines are the averages of the TMOA output and of the soft sensor output. As shown in Figure 6(a), FGSM-MOA achieves mirror attacks at only a few sampling points. In Figure 6(b), PGD-MOA achieves mirror attacks at most sampling points, unlike FGSM-MOA, but its output curve is relatively smooth. In Figure 6(c), MOA achieves mirror outputs at all sampling points. The fluctuations of the MOA output resemble those of the soft sensor output, which may confuse operators. As shown in Figure 7, TMOA achieves the goal of translating and mirroring the output, and its fluctuations also resemble those of the soft sensor output. These results indicate that the single-step FGSM-based methods are not strong enough, and that the iterative PGD-based methods are stronger but still leave room for improvement. In the next section on black-box attacks, these two gradient-based attack methods will show even greater shortcomings.
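The observation about AdvGAN suggests checking two things at once: how far the attacked output moves from the clean soft sensor output, and whether it stays physically plausible. The sketch below assumes RMSE as the error measure and a lower bound of zero for a quantity that cannot be negative; neither is necessarily the metric behind Table II, and the function names are hypothetical.

```python
import numpy as np

def attack_error(y_clean, y_adv):
    """RMSE between the clean soft sensor output and the attacked output (assumed metric)."""
    y_clean = np.asarray(y_clean, dtype=float)
    y_adv = np.asarray(y_adv, dtype=float)
    return float(np.sqrt(np.mean((y_adv - y_clean) ** 2)))

def implausible_fraction(y_adv, low=0.0, high=np.inf):
    """Fraction of attacked outputs outside the plausible range [low, high]."""
    y_adv = np.asarray(y_adv, dtype=float)
    return float(np.mean((y_adv < low) | (y_adv > high)))

def is_plausible(y_adv, max_implausible=0.0, low=0.0, high=np.inf):
    """Hypothetical acceptance rule: a large error is useless if the output leaves the range."""
    return implausible_fraction(y_adv, low, high) <= max_implausible

# Made-up example: a mirrored curve versus an unconstrained one that goes negative.
y_clean = np.array([0.30, 0.32, 0.31, 0.29])
y_mirror = 2.0 * y_clean.mean() - y_clean
y_unbounded = np.array([0.90, -0.20, 0.70, -0.10])
```

The unconstrained curve wins on raw error but fails the plausibility check, which mirrors why the largest attack error alone does not make AdvGAN a usable attack here.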

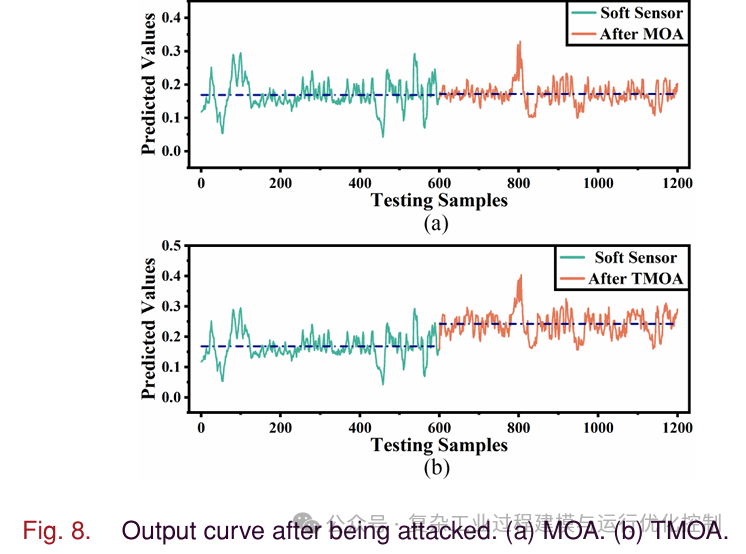

Figure 8 shows the output of the neural network-based soft sensor before and after the attack. The green line in the upper part is the DNN output before the attack, and the orange line in the lower part is the output after the attack. The black dotted line is the average of each output curve. As shown in Figure 8(a), the average of the MOA output is almost the same as the original average, and the soft sensor output remains stable even after the attack. This means that the soft sensor can be attacked without the operator noticing. As shown in Figure 8(b), the average output after TMOA has changed, indicating that the output has switched from the original steady state to a new one. TMOA thus simulates a switch of operating conditions, which can easily mislead operators and cause problems in the industrial process. An important prerequisite for a successful adversarial attack is that the applied perturbations are small and difficult to detect.

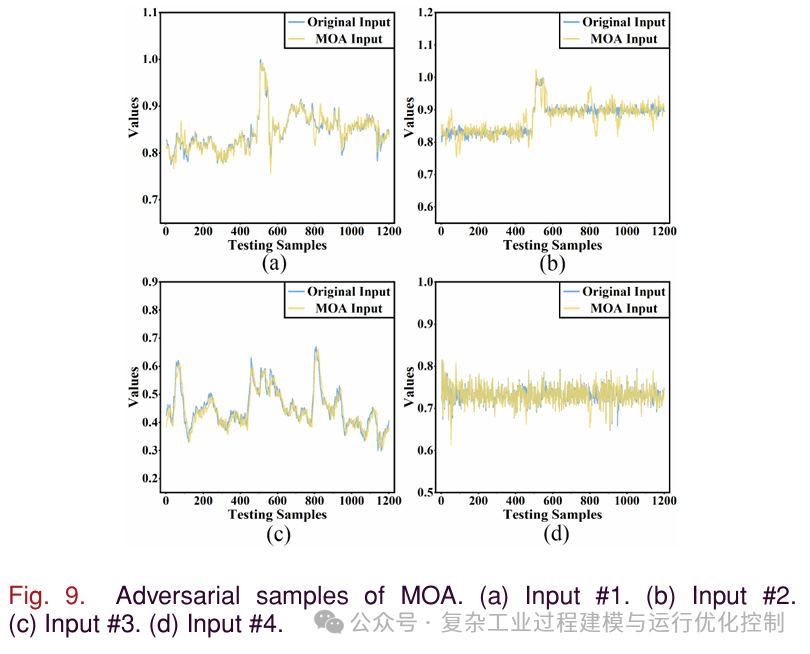

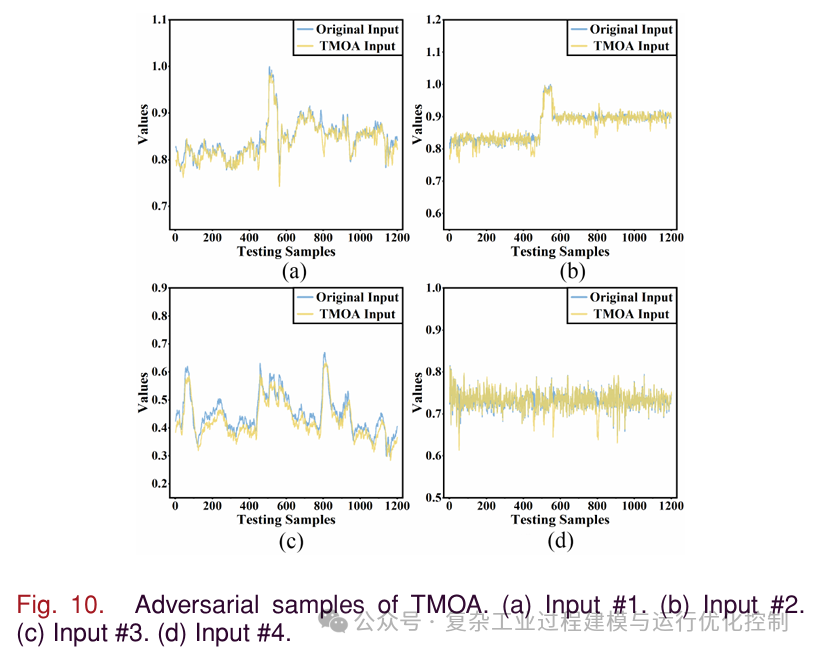

Figures 9 and 10 show the distributions of the original samples and of the adversarial samples generated by MOA and TMOA. The adversarial samples are almost identical to the original samples, which indicates that they are imperceptible. Due to space limitations, only the first four input variables are displayed. The remaining input variables behave in the same way: their adversarial samples are also almost identical to the original samples.
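A simple numerical companion to the visual comparison in Figures 9 and 10 is to measure, per input variable, how large the perturbation is relative to that variable's natural spread. The helper `perturbation_stats` below is a hypothetical sketch; the paper does not report these statistics.

```python
import numpy as np

def perturbation_stats(X, X_adv):
    """Per-variable perturbation size.

    Returns the largest absolute change in each column and the ratio of the
    perturbation norm to the norm of that column's deviations from its mean.
    Small ratios mean the adversarial samples stay close to the originals.
    Assumes no column is constant (otherwise the ratio divides by zero).
    """
    X = np.asarray(X, dtype=float)
    delta = np.asarray(X_adv, dtype=float) - X
    max_abs = np.max(np.abs(delta), axis=0)
    spread = np.linalg.norm(X - X.mean(axis=0), axis=0)
    rel = np.linalg.norm(delta, axis=0) / spread
    return max_abs, rel

# Made-up example: every entry is shifted by 0.01.
X = np.array([[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]])
X_adv = X + 0.01
max_abs, rel = perturbation_stats(X, X_adv)
```

Reporting such ratios for every input variable, not only the four plotted ones, would give a compact check of the imperceptibility claim.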

This paper studied adversarial attacks on neural network-based soft sensors. Based on the practical characteristics of industrial processes, two attack methods, MOA and TMOA, were proposed. MOA flips the soft sensor output curve while keeping the output ordered and stable. Building on the mirror output, TMOA also translates the output curve to change the apparent operating state, making operators more likely to misoperate. An industrial case study was used to validate the proposed methods. Simulation results show that MOA and TMOA produce reasonable attack outputs. In white-box attacks, the proposed methods produce larger output errors than the FGSM- and PGD-based methods. In black-box attacks, only MOA and TMOA achieve the set attack objectives, whereas the comparison methods produce only disordered and unreasonable outputs.

https://ieeexplore.ieee.org/document/10172037