Chip design and verification face mounting challenges. How to address them, especially by incorporating machine learning, is a major concern for the EDA industry, and it was a common theme among the four keynote speakers at this month’s Design Automation Conference (DAC).

This year, DAC returned as an in-person event, featuring keynote speeches from a leader of a systems company, an EDA vendor, a startup, and a university professor.

AMD CTO Mark Papermaster opened his talk with an observation: “There has never been a more exciting time in technology and computing. We are at a massive inflection point. The explosion of data, combined with more effective analytical techniques in new AI algorithms, means that putting all that data to use will create an insatiable demand for computing. In my 40 years in the industry, we relied on Moore’s Law for 30 of them. I could count on significant improvements every 18 months, with device costs falling while density and performance rose at every process node. But as the industry moves to smaller lithography nodes, manufacturing complexity has increased dramatically. Moore’s Law has clearly slowed. The cost of each node is rising. The number of masks is rising, and while density is still increasing, we are not seeing the scaling factors or performance gains we used to achieve. The way we approach next-generation devices will have to change.”

Papermaster pointed out that embedded devices are becoming ubiquitous and increasingly intelligent. AI-driven compute demand is rising everywhere, which calls for new approaches to accelerate improvements. “Experts predict that by 2025, the amount of machine-generated data will exceed the amount generated by humans. This is forcing us to rethink computing and to consider new ways of incorporating accelerators, as chips or chiplets, into devices. As an industry, we must face these challenges together. That is what excites me about this transformation: it is how we will overcome them.”

One major issue is the reticle limit, which caps how much can be packed onto a single die. Papermaster said this will lead to more designs and more design automation, which can only be achieved through collaboration and partnerships. Solutions will depend on heterogeneity and on managing complexity, and software needs to be developed alongside the hardware in a “shift left” manner. “In the past decade, transistor counts have increased 225 times, which means we are now looking at designs with 146 billion transistors, and we must deploy chiplets.”
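The growth figures in Papermaster’s remarks can be related with a little arithmetic. This sketch assumes the 225x figure covers exactly ten years of growth in the transistor count of leading designs; the helper names are illustrative only.

```python
import math

def implied_doubling_months(growth_factor: float, years: float) -> float:
    """Months per doubling implied by a total growth factor over a span of years."""
    doublings = math.log2(growth_factor)  # 225x is about 7.8 doublings
    return years * 12 / doublings

def growth_from_doubling(months_per_doubling: float, years: float) -> float:
    """Total growth factor if the count doubles every `months_per_doubling` months."""
    return 2 ** (years * 12 / months_per_doubling)

# 225x over the past decade, as quoted
print(round(implied_doubling_months(225, 10), 1))  # 15.4
# The classic 18-month cadence over the same decade
print(round(growth_from_doubling(18, 10)))         # 102
```

On those assumptions, 225x in ten years works out to a doubling about every 15 months, whereas the 18-month cadence Papermaster recalled would give roughly 100x over the same decade.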

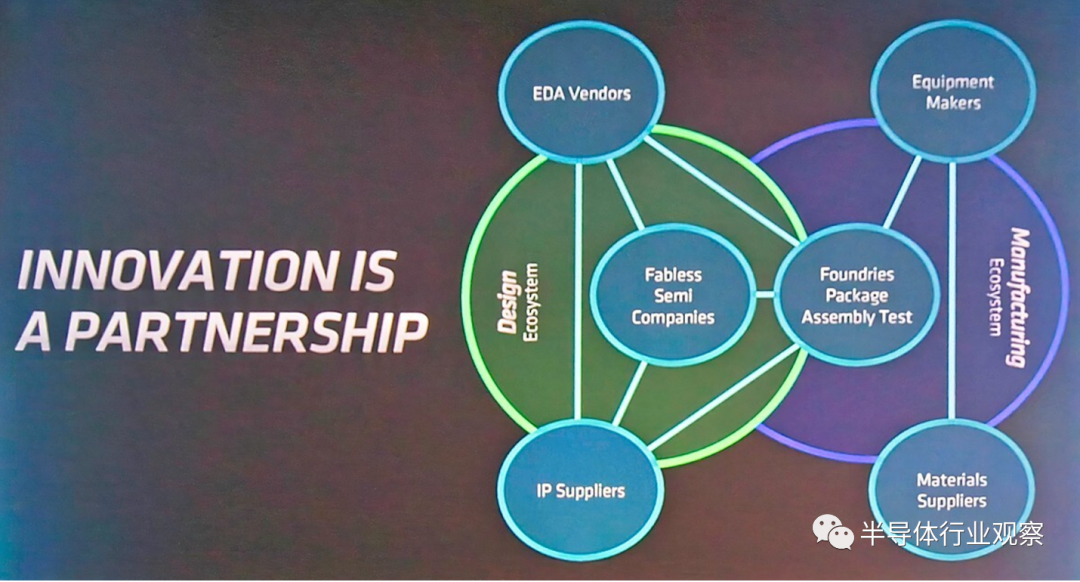

Figure 1: Ecosystem created through partnerships.

Source: AMD (based on Needham & Co. data)

This is not a new idea, however. “If we look back at the first DAC in 1964, it was organized by SHARE, the Society to Help Avoid Redundant Effort. That acronym says a lot about what we need now: a common vision for the problems we are solving,” he said.

In short, no single company can solve the problems the industry faces today on its own, and much of the innovation happens where partners overlap.

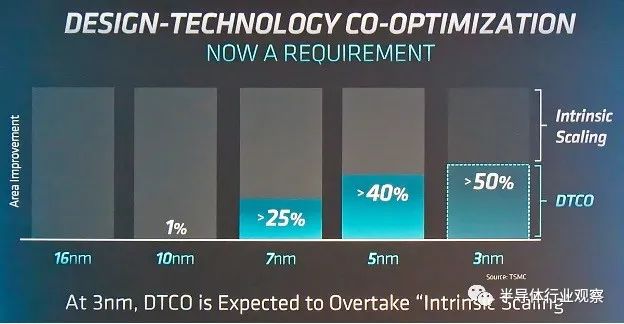

Figure 2: Percentage of scaling benefits. Source: AMD

At 3nm, design technology co-optimization (DTCO) is expected to contribute a larger share of the scaling benefit than intrinsic scaling. These trends pose challenges for EDA vendors, application developers, and the design community. Addressing them requires rebuilding solution platforms, particularly for AI, by combining engines and interconnects with chiplets and tying them together through a software stack. Engines are becoming increasingly specialized, and more tasks call for domain-specific accelerators.

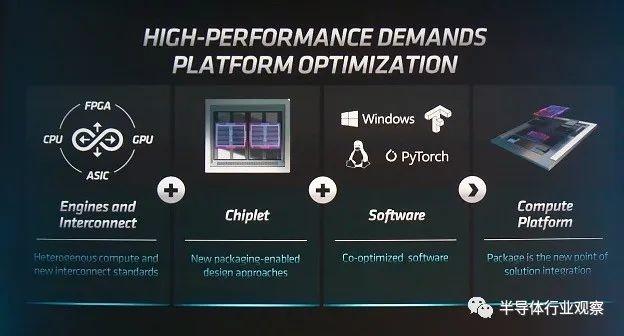

Figure 3: Platform approach to problem-solving. Source: AMD

“In the next chiplet era, we will see a combination of 2D and 3D approaches, and partitioning for performance and power will open up new design possibilities. This will create incredible opportunities for EDA, and you will have to rethink many things from the past. We must also do this sustainably and give power more weight. IT computing is on a trajectory to consume all available energy, and we must now rein it in.”

Papermaster also invited Aart de Geus, Chairman and CEO of Synopsys, to discuss the sustainability of computing.

De Geus focused on the exponential nature of Moore’s Law and how closely it tracks the exponential growth of CO2 emissions. “The fact that these two curves almost perfectly align should be very alarming for all of us,” he said. “Our goal is clear. In this decade, we must increase performance per watt by a factor of 100. We need breakthroughs in energy production, distribution, storage, utilization, and optimization. So we call on those with the brains to have the heart to help and the courage to act.”

Papermaster followed up by noting that AMD has set a goal of a 30-fold improvement in energy efficiency by 2025, 2.5 times the industry trend. He said AMD is on track, having already achieved a 7-fold improvement. “If the entire industry achieved this, it would save 51 billion kilowatt-hours of energy over 10 years, cut energy costs by $6.2 billion, and reduce CO2 emissions by the equivalent of 600 million tree seedlings.”
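The efficiency targets quoted by de Geus and Papermaster can be turned into per-year rates, and the savings figures imply an electricity price. This is a back-of-the-envelope sketch: it assumes constant compounding and, for AMD’s goal, a five-year window ending in 2025, which the article does not state.

```python
def annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly improvement that compounds to `total_factor` over `years`."""
    return total_factor ** (1 / years)

def implied_price_per_kwh(savings_usd: float, energy_kwh: float) -> float:
    """Average price per kWh implied by a dollar saving on a given amount of energy."""
    return savings_usd / energy_kwh

# De Geus: 100x performance per watt within this decade (10 years)
print(round(annual_factor(100, 10), 3))              # 1.585
# AMD: 30x by 2025; the 5-year window is an ASSUMPTION, not stated in the article
print(round(annual_factor(30, 5), 3))                # 1.974
# AMD: remaining factor from the 7x already achieved to the 30x goal
print(round(30 / 7, 2))                              # 4.29
# AMD: $6.2 billion saved on 51 billion kWh
print(round(implied_price_per_kwh(6.2e9, 51e9), 3))  # 0.122
```

The implied price of roughly $0.12 per kWh is simply $6.2 billion divided by 51 billion kWh; it is a consistency check on the quoted numbers, not a figure from the talk.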

Papermaster added that AI has reached a transformative point in the design automation industry. “It touches almost every aspect of what we do today,” he said, citing technologies such as simulation, digital twins, generative design, and design optimization that are driving EDA use cases. “We are using AI to help improve quality of results, explore the design space, and enhance productivity.”

He also provided an example of how packaging can help. By stacking cache on top of logic, AMD can improve RTL simulation speed by 66%.

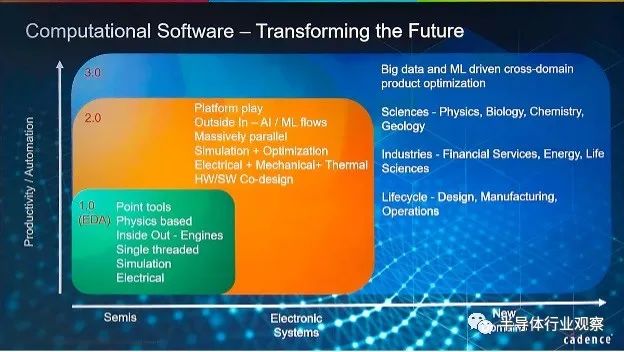

Cadence CEO Anirudh Devgan titled his keynote “Computational Software and the Future of Intelligent Electronic Systems.” He defined computational software as the combination of computer science and mathematics, and noted that it is the foundation of EDA.

“EDA has been doing this for a long time, since the late 1960s and early 1970s,” Devgan said. “Computational software has been applied to semiconductors and remains strong there, but I believe it can be applied to many other fields, including electronic systems. Over the past decade software has shone, especially in social media, but in the next 10 to 20 years, even software will become more ‘computational.’”

Semiconductor growth now has many generational drivers. In the past, the industry depended on a single product category and went through booms and busts. “The question has always been, ‘Will it continue to be cyclical or will it become more generationally driven?’” Devgan said. “I believe that given the number of applications, semiconductors will become less cyclical.”

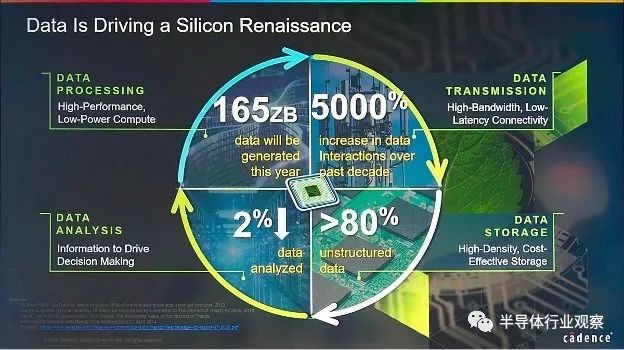

He said that while design costs are rising, people often overlook volume. “The number of semiconductors is growing exponentially, so if we normalize design costs, are costs really rising? Semiconductors must deliver better value, which is happening, and this is reflected in revenues over the past few years. The amount of data that needs to be analyzed is also increasing, which is changing compute, storage, and networking patterns. Domain-specific computing was discussed in the 1990s, but it has become very important in recent years. This brings us closer to consumers and leads systems companies to make more chips. The interaction among hardware, software, and mechanics is driving the resurgence of data-driven systems companies.”

“45% of our customers are what we consider systems companies,” Anirudh Devgan emphasized.

Figure 4: The impact of data is increasing.

Source: Cadence

Devgan pointed to three trends: systems companies are building their own chips, 3D-IC or chiplet-based designs are emerging, and EDA can leverage AI to deliver more automation. He offered supporting data for each, then explored various application domains and how models apply to them. Echoing Papermaster, he said gains no longer come just from scaling, and integration is becoming a bigger deal. He also outlined how computational software has emerged across successive generations of EDA.

Figure 5: The era of EDA software.

Source: Cadence

Perhaps the most important takeaway from this discussion is that EDA must begin to address the entire stack, not just the chip. It must include systems and packaging. “The fusion of mechanical and electrical requires different approaches, and traditional algorithms must be rewritten,” Devgan said. “Thermal considerations are different. Geometries are different. Classical EDA has always been about improving productivity. A combination of physics-based methods and data-driven methods works well, but EDA has traditionally focused only on single runs. There is no knowledge transfer from one run to the next. We need a framework and mathematical methods to optimize multiple runs, which is where data-driven methods are useful.”

Optimization was one area where he gave examples, showing how numerical methods can perform intelligent searches of the design space. He said this approach can produce better results in less time than a person can.
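Devgan’s point about carrying knowledge from one run to the next can be illustrated with a toy optimizer. The sketch below is hypothetical: `qor_cost` stands in for a real quality-of-results metric, and a plain greedy random search stands in for the numerical methods he described. All it shows is that a second run seeded with the first run’s best point starts from what the first run learned and can only improve on it.

```python
import random

def local_search(cost, start, steps, step_size, rng):
    """Greedy random-perturbation search; returns (best_point, best_cost)."""
    best = list(start)
    best_cost = cost(best)
    for _ in range(steps):
        # Perturb every coordinate of the current best point
        candidate = [x + rng.uniform(-step_size, step_size) for x in best]
        c = cost(candidate)
        if c < best_cost:  # accept only improvements
            best, best_cost = candidate, c
    return best, best_cost

def qor_cost(point):
    """Hypothetical stand-in for a QoR metric over two tool settings (lower is better)."""
    x, y = point
    return (x - 3.0) ** 2 + (y + 1.0) ** 2

rng = random.Random(42)

# Run 1: cold start, with no knowledge from earlier runs
run1_point, run1_cost = local_search(qor_cost, [0.0, 0.0], 200, 0.5, rng)

# Run 2: warm start from run 1's best point, i.e., knowledge carried across runs
run2_point, run2_cost = local_search(qor_cost, run1_point, 200, 0.5, rng)

print(f"run 1 cost: {run1_cost:.4f}, run 2 cost: {run2_cost:.4f}")
```

A production flow would more likely keep a history of many past runs and learn a model of the search space from it; this sketch only captures the simplest form of transfer.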

Devgan also discussed sustainability. “This is a big deal for our employees, investors, and customers,” he said. “Semiconductors are essential, but they also consume a lot of power. We have an opportunity to reduce power consumption, and power will be a driving factor for PPA—not just at the chip level but at the data center and system level. We are several orders of magnitude behind biological systems.”

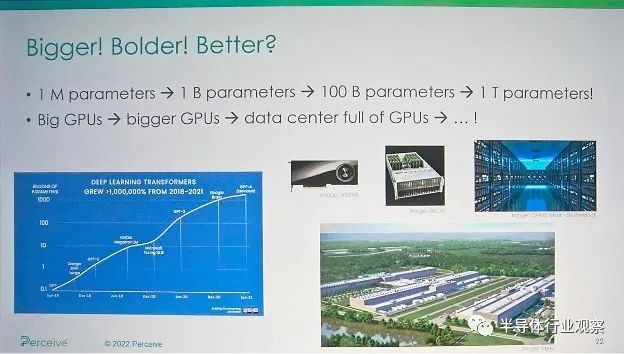

Steve Teig, CEO of Perceive, has spent more than thirty years working on machine learning applications, and he firmly believes AI can do more. “First, if we reduce our reliance on folklore and anecdotes and spend a little more time on mathematics and principles, deep learning will be stronger than it is now,” he said. “Second, I think efficiency is important. Simply creating models that look effective is not enough; we should be concerned about throughput per dollar, per watt, or other metrics.”

Teig acknowledged that deep learning is impressive, noting that the technology would have been considered witchcraft 15 years ago. “But we need to see past the magic,” he said. “We have been building bigger and worse models. We have forgotten that the driving force behind innovation for the past 100 years has been efficiency. It drove Moore’s Law and the moves from CISC to RISC and from CPU to GPU. In software, we have seen advances in computer science and better algorithms. But when it comes to deep learning, we are now in an anti-efficiency era. We spend about $8 million to train a large language model, whose carbon footprint is more than five times that of a car over its lifetime. This planet cannot afford to go down this path.”

Figure 6: Growing scale of AI/ML models.

Source: Perceive

He also stated that from a technical perspective, these huge models are unreliable because they capture noise in the training data, which is particularly problematic in medical applications. “Why are they so inefficient and unreliable? The most important reason is that we rely on folklore.”

He organized the rest of his talk around “a myth, a misunderstanding, and a mistake.” The myth is that average accuracy is the right thing to optimize. “Some errors do not matter much, while others are severe. The neural networks we have do not distinguish between serious and non-serious errors; they score the same. Average accuracy is almost never what people want. We need to penalize errors based on severity rather than frequency, because not all data points are equally important. So how do you correct this? The loss function must be based on severity, and the training data should be weighted by importance.”
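One common way to act on Teig’s “myth” is a weighted loss, in which each error is scaled by how costly that kind of mistake is and each sample by how much it matters. The sketch below is a minimal weighted negative log-likelihood in plain Python, not Perceive’s method; the two-class setup, the 10x `severity` value, and the model outputs are all hypothetical.

```python
import math

def weighted_nll(probs, labels, severity, importance):
    """Weighted mean negative log-likelihood.

    probs[i][k]   -- model probability that sample i belongs to class k
    labels[i]     -- true class of sample i
    severity[k]   -- hypothetical cost of getting a class-k sample wrong
    importance[i] -- hypothetical weight of sample i in the training set
    """
    total, norm = 0.0, 0.0
    for p, y, w in zip(probs, labels, importance):
        weight = severity[y] * w
        total += -weight * math.log(p[y])
        norm += weight
    return total / norm

labels = [0, 1]                     # class 0: benign, class 1: serious
model_x = [[0.9, 0.1], [0.4, 0.6]]  # confident on the benign case, shaky on the serious one
model_y = [[0.6, 0.4], [0.1, 0.9]]  # the reverse
importance = [1.0, 1.0]

for name, model in [("X", model_x), ("Y", model_y)]:
    plain = weighted_nll(model, labels, [1.0, 1.0], importance)
    severe = weighted_nll(model, labels, [1.0, 10.0], importance)
    print(name, round(plain, 4), round(severe, 4))
```

Both models get the same unweighted loss, but the severity-weighted loss penalizes model X, which is less sure about the serious case.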

The misunderstanding concerns the expressive power of neural networks as computational devices. Many of the relevant assumptions and theorems are very specific, and real-life neural networks do not satisfy them. People believe a feedforward neural network can approximate any continuous function as closely as desired. That result depends on having a non-polynomial activation function, and even then, arbitrary precision requires an arbitrary number of bits. More worryingly, the only functions such a network can build are combinational functions, meaning it cannot represent anything that requires state. Some theorems suggest that neural networks are Turing complete, but how can that be true when there is no memory? RNNs are essentially finite state machines, and their memory is very limited.
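The difference between a stateless (combinational) function and one with state can be seen with parity, the XOR of a sequence of bits. The sketch below is a plain-Python illustration of that distinction, not a neural network: a fixed-width function covers only its fixed number of inputs, while a two-state machine with a single bit of memory handles inputs of any length.

```python
from itertools import product

def parity3(a: int, b: int, c: int) -> int:
    """Combinational parity: a fixed function of exactly three inputs, no memory."""
    return a ^ b ^ c

def parity_fsm(bits) -> int:
    """Two-state finite state machine: one bit of state handles any input length."""
    state = 0
    for bit in bits:
        state ^= bit  # transition: flip the state on every 1
    return state

# On 3-bit inputs the two agree...
assert all(parity3(*bits) == parity_fsm(bits) for bits in product((0, 1), repeat=3))
# ...but only the stateful version extends to longer streams
print(parity_fsm([1] * 1001))  # 1
```

A combinational circuit would need to grow with every increase in input length to do the same job; the state machine’s limit is instead its finite state.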

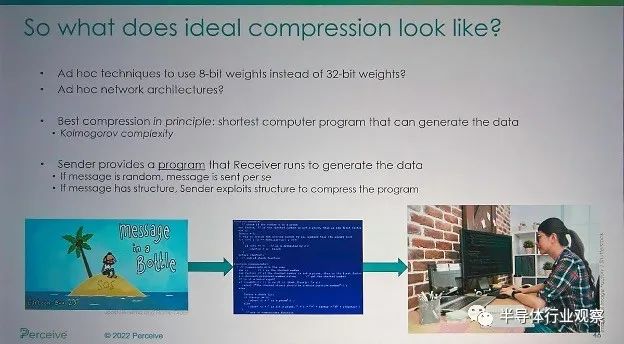

“The mistake is thinking that compression will harm accuracy, so we should not compress our models. You want to find structure in the data and distinguish structure from noise, and you want to do this with the fewest resources possible. Random data cannot be compressed because it has no structure. The more structure there is, the more compression you can achieve. Anything learnable is, in principle, compressible, because it has structure. Information theory can help us build better networks. Occam’s razor says the simplest model is best, but what does that mean? Any regularity or structure in the data can be used to compress that data. Better compression reduces the arbitrariness of the network’s choices. If the model is too complex, you are fitting noise.”
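Teig’s point that structure is what makes data compressible can be seen with an ordinary general-purpose compressor. The sketch below uses Python’s standard `zlib` module as a rough stand-in; it measures nothing about neural networks, and the sample data are arbitrary choices.

```python
import random
import zlib

rng = random.Random(0)                                    # seeded so runs are repeatable
noise = bytes(rng.getrandbits(8) for _ in range(10_000))  # 10,000 bytes, no structure
pattern = b"ACGT" * 2_500                                 # 10,000 bytes, highly regular

for name, data in [("noise", noise), ("pattern", pattern)]:
    packed = zlib.compress(data, 9)  # level 9: maximum compression
    print(f"{name}: {len(data)} bytes -> {len(packed)} bytes")
```

The random bytes do not shrink (format overhead makes them slightly larger), while the repeated pattern collapses to a few dozen bytes.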

Figure 7: Types of compression.

Source: Perceive

What would perfect compression look like? Teig offered an interesting example. “The best compression has already been described mathematically: it is the shortest computer program that can generate the data. This is known as Kolmogorov complexity. Consider pi. I can send you the digits 3.14159..., but a program that computes pi can generate the trillionth digit without anyone sending a trillion digits. We need to move beyond trivial forms of compression, such as reducing the number of bits per weight. Is 100X compression possible? Yes.”
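Teig’s pi example can be made concrete. Kolmogorov complexity itself cannot be computed in general, but any program that prints the data gives an upper bound on it. The generator below is Gibbons’ unbounded spigot algorithm, a well-known short program that streams the decimal digits of pi for as long as you keep asking; it is offered as an illustration, not as anything from the talk.

```python
from itertools import islice

def pi_digits():
    """Yield decimal digits of pi indefinitely (Gibbons' unbounded spigot algorithm)."""
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit n is now certain: emit it and rescale the state
            yield n
            q, r, t, k, n, l = (10 * q, 10 * (r - n * t), t, k,
                                (10 * (3 * q + r)) // t - 10 * n, l)
        else:
            # Not yet certain: consume another term of the series
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)

print("".join(str(d) for d in islice(pi_digits(), 15)))  # 314159265358979
```

A dozen lines stand in for any number of digits, which is the sense in which pi’s digits are highly compressible. This simple spigot slows down as its integers grow, so it is not how one would actually compute a trillionth digit.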

After reviewing how cycles have appeared historically in music, art, and mathematics, Giovanni De Micheli, Professor of Electrical Engineering and Computer Science at EPFL, examined the interactions among the participants: industry, academia, finance, startups, and conferences such as DAC that facilitate the exchange of information.

He used all of this to pose three questions. Will silicon and CMOS forever be our workhorse? Will classical computing be replaced by new paradigms? Will living matter and computers merge?

First, silicon and CMOS.

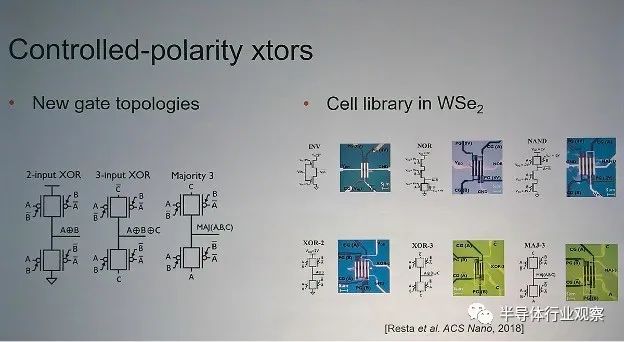

De Micheli examined several emerging technologies, from carbon nanotubes and superconducting electronics to logic-in-memory and optical computing for machine learning. “Many of these are paradigm shifts, but you have to consider the effort required to turn these technologies into products. You need new models. You need to adapt or create EDA tools and flows. In doing so, you may find ways to improve existing technologies.”

Research on nanowires led to electrostatic doping, which created new gate topologies. He also studied tungsten diselenide (WSe2) and demonstrated a possible cell library in which gates such as XOR and majority can be implemented very efficiently. “Let’s revisit logic abstraction,” he said. “For decades, we have designed digital circuits using NAND and NOR. Why? Because we were brainwashed at the beginning: in NMOS and CMOS, those are the most convenient gates to implement. However, if you look at the majority operator, you will find it is a key operator for addition and multiplication, and everything we do today requires these operations. You can build EDA tools around it that perform better in synthesis.”
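Why the majority operator matters for arithmetic is easy to check: in a full adder, the sum bit is the XOR of the three inputs and the carry-out is exactly their majority. The sketch below is a plain-Python model of that textbook fact, plus the standard identities that majority with one input tied to 0 or 1 gives AND or OR; it is not De Micheli’s synthesis method.

```python
def maj(a: int, b: int, c: int) -> int:
    """Majority of three bits: 1 if at least two inputs are 1."""
    return (a & b) | (a & c) | (b & c)

def full_adder(a: int, b: int, cin: int):
    """Sum is a 3-input XOR; carry-out is exactly the majority function."""
    return a ^ b ^ cin, maj(a, b, cin)

def ripple_add(x: int, y: int, width: int = 8) -> int:
    """Add two unsigned integers bit by bit using majority-based carries."""
    carry, result = 0, 0
    for i in range(width):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result | (carry << width)  # keep the final carry-out

# Majority with a constant input reduces to AND (tie to 0) or OR (tie to 1)
assert all(maj(a, b, 0) == (a & b) and maj(a, b, 1) == (a | b)
           for a in (0, 1) for b in (0, 1))
print(ripple_add(200, 100))  # 300
```

Multiplication is built from repeated additions, so the same majority-based carry logic appears there too.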

Figure 8: Gate topologies and libraries for different manufacturing technologies.

Source: EPFL

Having laid out the background on majority-based logic, De Micheli claimed that it could reduce delay by 15% to 20% compared with previous methods. This is an example of his cycle: an alternative technology taught us something about existing technology and helped improve it, and the result also applies to new technologies such as superconducting electronics.

“EDA is the driver of technology. It provides a way to evaluate emerging technologies in virtual labs and identify useful methods. It creates new abstractions, new methods, and new algorithms that benefit not only these emerging technologies but also existing ones. And we do know that current EDA tools cannot take us to optimal circuits, so it is always worth looking for new ways to optimize circuits.”

Second, computational paradigms.

De Micheli then turned to quantum computing and some of the applications it can help solve. Here the cycle must incorporate the concepts of superposition and entanglement. “This is a paradigm shift that changes how we conceive algorithms and how we create languages, debuggers, and so on,” he said. “We must rethink many concepts of synthesis. As in classical EDA, there is a technology-independent optimization step, but here it leads to reversible logic. The logic is reversible because the underlying physical processes are inherently reversible. Then you map to libraries, and you must be able to embed constraints. Quantum EDA will enable us to design better quantum computers, and quantum computing is advancing the theory of computation, including which problems can be solved in polynomial time.”
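Reversibility here means no information is lost: every output pattern maps back to exactly one input pattern. The Toffoli (controlled-controlled-NOT) gate is a standard reversible gate, and with its target bit initialized to 0 it computes AND. The sketch below checks both properties exhaustively in plain Python; it illustrates reversible logic in general, not De Micheli’s quantum EDA flow.

```python
from itertools import product

def toffoli(a: int, b: int, c: int) -> tuple:
    """Controlled-controlled-NOT: flips target c only when controls a and b are both 1."""
    return a, b, c ^ (a & b)

states = list(product((0, 1), repeat=3))
outputs = [toffoli(*s) for s in states]

# Reversible: the 8 input states map one-to-one onto the 8 output states
print(sorted(outputs) == states)  # True
# Self-inverse: applying the gate twice restores the input
print(all(toffoli(*toffoli(*s)) == s for s in states))  # True
# With an ancilla target set to 0, the third output is a AND b
print([toffoli(a, b, 0)[2] for a, b in product((0, 1), repeat=2)])  # [0, 0, 0, 1]
```

An ordinary AND gate, by contrast, maps three different inputs to 0, so its input cannot be recovered from its output; the extra ancilla bit is the price of reversibility.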

Third, living matter and computers.

“An important factor in this cycle is correction and augmentation. For over a thousand years, we have used glasses for correction, and the progress since then has been enormous.” De Micheli discussed many of the technologies available today and how they are changing our lives.

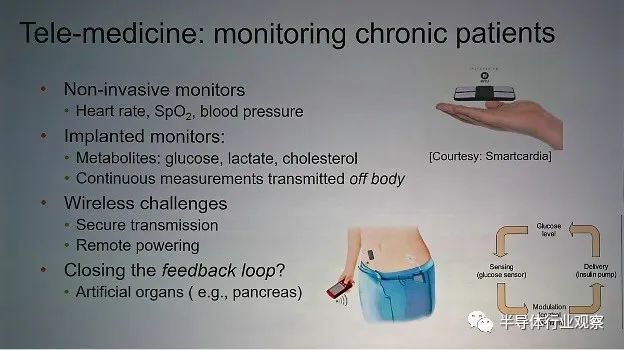

Figure 9: Creating feedback loops in medical applications.

Source: EPFL

“This leads to new EDA requirements, allowing us to co-design sensors and electronics,” he said. “But the ultimate challenge is to understand and mimic the brain. That requires decoding and interpreting brain signals, and replicating neuromorphic systems and learning models. It presents interface challenges for controllability, observability, and connectivity.”

That is only the first step. “The next level, the future, is brain-to-brain: essentially, being able to connect artificial intelligence and natural intelligence. Advances in biology and medicine, combined with new electronic and interface technologies, will enable us to design better biomedical systems,” he said.

Performing tasks in the most efficient way involves many people. It requires careful design of the algorithms, the platforms those algorithms run on, the tools used to implement software and hardware, and the mapping from hardware to silicon or to alternative manufacturing technologies. Each of these, done in isolation, can yield small improvements. But when all participants work together as partners, significant improvements become possible. This may be the only way to stop the ongoing destruction of our planet.

Source: Compiled by Semiconductor Industry Observer (ID: icbank) from Semiconductor Engineering (SE).
