RKNN is the model format used by the Rockchip NPU platform; model files end in .rknn. Rockchip provides a complete Python-based model conversion tool that lets users convert their own models into RKNN models, and it also offers C/C++ and Python APIs for deployment.

Model conversion is done on a PC with RKNN-Toolkit2; the converted model is then deployed to a Rockchip development board via RKNPU2.

Introduction to Rockchip NPU SDK

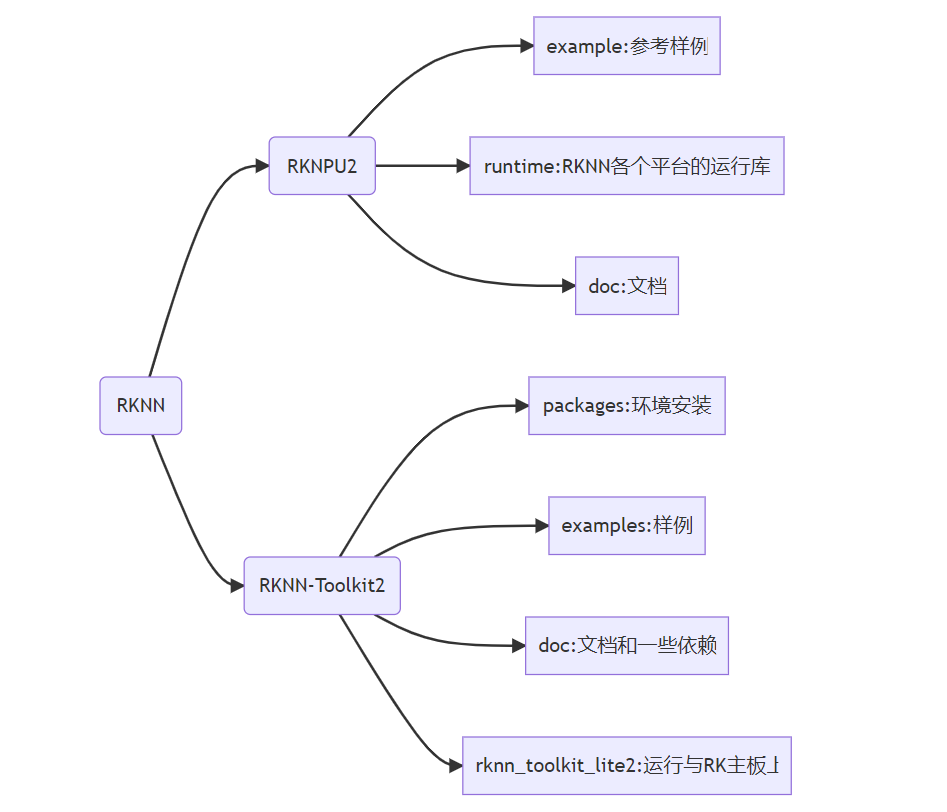

The Rockchip NPU SDK is divided into two parts. On the PC side, RKNN-Toolkit2 handles model conversion, inference, and performance evaluation: it converts models from mainstream frameworks such as Caffe, TensorFlow, TensorFlow Lite, ONNX, DarkNet, and PyTorch into RKNN models, which can then be run in a PC-side simulation to estimate time and memory overhead. The board side provides the RKNN runtime environment, which contains a set of C API libraries, the driver modules that communicate with the NPU, and executable programs.

The RKNN software stack helps users quickly deploy AI models onto Rockchip chips. The overall framework is as follows:

- RKNN-Toolkit2 is a software development kit for users to perform model conversion, inference, and performance evaluation on PC and Rockchip NPU platforms.
- RKNN-Toolkit-Lite2 provides a Python programming interface for the Rockchip NPU platform, helping users deploy RKNN models and accelerate AI application implementation.
- RKNN Runtime provides a C/C++ programming interface for the Rockchip NPU platform, helping users deploy RKNN models and accelerate AI application implementation.
- The RKNPU kernel driver is responsible for interacting with the NPU hardware. It is open-source and can be found in the Rockchip kernel code.

Using RKNN

RKNN-Toolkit2 is a development kit that provides users with model conversion, inference, and performance evaluation on the PC platform. Users can conveniently perform the following functions using the Python interface provided by this tool:

- Model conversion: Converts models from Caffe, TensorFlow, TensorFlow Lite, ONNX, DarkNet, and PyTorch into RKNN models, and supports importing and exporting RKNN models that can be loaded and used on the Rockchip NPU platform.
- Quantization: Quantizes floating-point models into fixed-point models. The currently supported method is asymmetric quantization (asymmetric_quantized-8); mixed quantization is also supported.
- Model inference: Simulates the NPU on a PC to run an RKNN model and obtain inference results, or distributes the RKNN model to a specified NPU device for inference and obtains the results from it.
- Performance and memory evaluation: Distributes the RKNN model to a specified NPU device to evaluate its performance and memory usage on actual hardware.
- Quantization accuracy analysis: Reports, for each layer, the cosine distance between the quantized model's inference results and the floating-point model's inference results. This helps locate where quantization error arises and suggests ways to improve the accuracy of the quantized model.
- Model encryption: Encrypts the entire RKNN model at a specified encryption level. Because decryption is performed in the NPU driver, an encrypted model is loaded just like a normal RKNN model and the NPU driver decrypts it automatically.
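To make the quantization and accuracy-analysis items above more concrete, here is a minimal pure-Python sketch of asymmetric 8-bit quantization followed by a cosine comparison against the original floating-point values. It is a generic textbook scheme and not RKNN-Toolkit2's internal implementation; the function names `quantize_asymmetric_u8`, `dequantize`, and `cosine_similarity` are hypothetical and chosen only for illustration.

```python
import math


def quantize_asymmetric_u8(values):
    """Map floats to integers in [0, 255] using a scale and a zero point.

    Generic asymmetric scheme (an assumption, not RKNN's exact algorithm):
    the representable range is stretched to include 0.0 so that zero maps
    exactly onto the zero point.
    """
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    span = hi - lo
    scale = span / 255.0 if span > 0 else 1.0
    zero_point = round(-lo / scale)
    quantized = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the 8-bit representation."""
    return [(q - zero_point) * scale for q in quantized]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    layer_output = [-1.0, -0.5, 0.0, 0.25, 2.0]  # made-up example data
    q, scale, zero_point = quantize_asymmetric_u8(layer_output)
    restored = dequantize(q, scale, zero_point)
    similarity = cosine_similarity(layer_output, restored)
    print("quantized:", q)
    print("scale:", scale, "zero point:", zero_point)
    print("cosine distance:", 1.0 - similarity)
```

A cosine distance close to 0 for a layer suggests that quantization changed its output very little; a layer with a noticeably larger distance is a candidate for mixed quantization.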

rknpu2

The RKNN SDK provides programming interfaces for chip platforms with RKNPU, helping users deploy RKNN models exported by RKNN-Toolkit2 and accelerate AI application implementation.
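As a rough illustration of board-side deployment, the sketch below uses the Python interface of RKNN-Toolkit-Lite2 described earlier, rather than the C/C++ interface of RKNN Runtime. It assumes RKNN-Toolkit-Lite2 is installed on the board and that `input_tensor` is a NumPy array already preprocessed into the layout the model expects; the function name `run_on_board` and the model path are hypothetical.

```python
from pathlib import Path


def run_on_board(rknn_path, input_tensor):
    """Load an exported .rknn model on the board and run one inference."""
    if not Path(rknn_path).is_file():
        raise FileNotFoundError(rknn_path)

    # Imported lazily: rknnlite is only available on the Rockchip board.
    from rknnlite.api import RKNNLite

    lite = RKNNLite()
    try:
        # Load the model produced by RKNN-Toolkit2 on the PC.
        if lite.load_rknn(str(rknn_path)) != 0:
            raise RuntimeError("load_rknn failed")
        # Initialise the NPU runtime on the local device.
        if lite.init_runtime() != 0:
            raise RuntimeError("init_runtime failed")
        # Returns a list with one array per model output.
        return lite.inference(inputs=[input_tensor])
    finally:
        lite.release()
```

A call would look like `outputs = run_on_board("model.rknn", image)`, where `model.rknn` stands in for whatever file was exported on the PC.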

Environment Installation

System: Ubuntu 20.04 LTS x64

Memory: 16 GB

Python: 3.8.10

Target Platform: RK356X/RK3568

Collision of YOLOv8 and RKNN: How the New SOTA Vision Model Shines at the Edge

YOLOv8: A Precise & Flexible Visual AI Solution

In January 2023, Ultralytics released YOLOv8, the latest model in the YOLO series, describing it as “a cutting-edge, state-of-the-art vision model”. Beyond performance improvements, YOLOv8 covers a full range of vision AI tasks: classification, detection, segmentation, pose estimation, and tracking.

Precise & Flexible

Ultralytics open-sourced five pre-trained YOLOv8 models of different parameter sizes: YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x. Compared with YOLOv5 models of similar parameter count, YOLOv8 achieves significantly higher accuracy on the COCO dataset. The accuracy of YOLOv8n and YOLOv8s improved by over 5%, thanks to changes in network structure, training methods, the anchor-free design, and loss functions, while the cost in parameter count and inference time is negligible.

YOLOv8’s flexibility is reflected in the following three aspects:

1. Because of its accuracy improvements, a lighter YOLOv8 model can reach the same precision as YOLOv5.
2. The YOLOv8 project supports both Python and the command line, making model training, inference, and export more convenient.
3. YOLOv8 supports tracking algorithms, and its use in FastSAM offers further options in engineering practice.

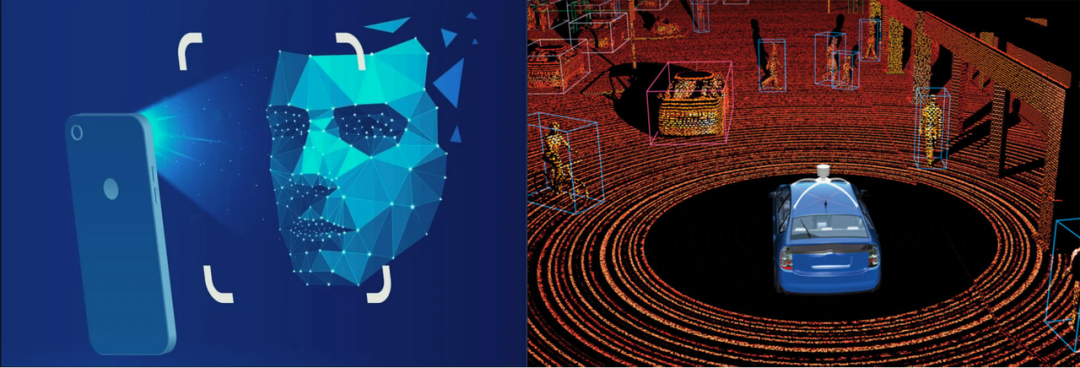

Potential Choices for Edge Visual Solutions

The integration of object detection and tracking algorithms is an indispensable visual capability at the edge, with extensive applications in robotic visual navigation, autonomous driving, and military guidance. Furthermore, the complex environments at the edge impose high demands on visual generalization capabilities. YOLOv8 supports both aspects:

1. The YOLOv8 project has implemented the integration of BoT-SORT and ByteTrack tracking algorithms.
2. FastSAM uses the backbone of the YOLOv8 segmentation network for feature extraction and mask generation, providing a solution for achieving visual generalization capabilities using YOLOv8.

Keeping the Model at the Edge

To run the YOLOv8 model on edge products such as robots, we first need to understand the basics of model deployment.

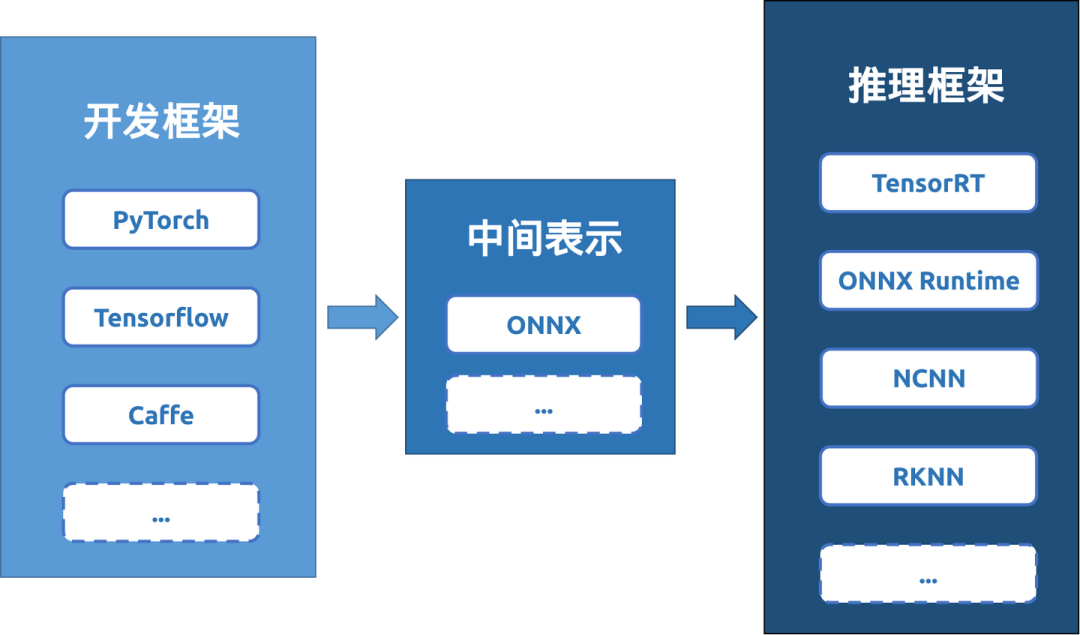

a. Model Deployment

Model deployment is the process of placing a machine-learning model into the environment where it can do the job it was created to do. Besides embedding the model’s capabilities “where they are needed,” deployment often involves optimizing the model, for example through operator fusion and rearrangement, weight quantization, and knowledge distillation. Designing and training a model is complex and relies on flexible development frameworks such as PyTorch and TensorFlow. Using a model, by contrast, calls for “slimming” and accelerated inference in a specific setting, which requires specialized inference frameworks such as TensorRT and ONNX Runtime. From this perspective, model deployment can also be seen as migrating the model from a development framework to a specific inference framework.

b. From Development Framework to Inference Framework

The diversity of model development frameworks and inference frameworks makes models easier to design and use, but it complicates model conversion. To simplify deployment, Facebook and Microsoft jointly released ONNX, an intermediate representation for deep learning models, in 2017. Many training frameworks and inference frameworks can convert to and from ONNX, so it serves as a common bridge between them. ONNX has gradually established the deployment paradigm of “model development framework -> model intermediate representation -> model inference framework”.

So how do we migrate a model from a development framework to an inference framework? One straightforward idea is to parse the model layer by layer and re-implement its inference process with the tools the inference framework supports. In practice, for the sake of generality, mainstream model conversion relies on the “tracing method”: given a set of inputs, run the model, record the computation that the data goes through inside the model, and save that recorded computation as the converted model [1]. The input data is used not only to trace the model’s computation but sometimes also to tune quantization parameters during conversion. When converting from ONNX to RKNN, suitable quantization parameters are chosen based on the distribution of the input data.
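The tracing idea can be illustrated with a toy, pure-Python sketch: a wrapper value records every arithmetic operation applied to it while the model function runs on an example input. This is only an illustration of the principle and not how any real exporter is implemented; the names `Traced`, `trace`, and `model` are hypothetical, and real tools record tensor operators rather than scalar arithmetic.

```python
import operator

# Operations this toy tracer knows how to record.
OPS = {"add": operator.add, "mul": operator.mul}


class Traced:
    """A value that logs each operation applied to it into a shared graph."""

    def __init__(self, value, name, graph):
        self.value = value
        self.name = name
        self.graph = graph

    def _apply(self, op, other):
        rhs_value = other.value if isinstance(other, Traced) else other
        rhs_name = other.name if isinstance(other, Traced) else repr(other)
        out_name = f"t{len(self.graph)}"
        # Record (output, operation, left input, right input).
        self.graph.append((out_name, op, self.name, rhs_name))
        return Traced(OPS[op](self.value, rhs_value), out_name, self.graph)

    def __add__(self, other):
        return self._apply("add", other)

    def __mul__(self, other):
        return self._apply("mul", other)


def trace(fn, example_input):
    """Run fn once on an example input and return the recorded graph and result."""
    graph = []
    result = fn(Traced(example_input, "x", graph))
    return graph, result.value


def model(x):
    # Stand-in for a real network: one multiply followed by one add.
    return x * 3 + 1


if __name__ == "__main__":
    graph, output = trace(model, 2.0)
    for node in graph:
        print(node)
    print("output:", output)
```

Because only the operations actually executed for the example input are recorded, data-dependent branches in the original model are frozen into whichever path that input took, which is why the choice of example input matters.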

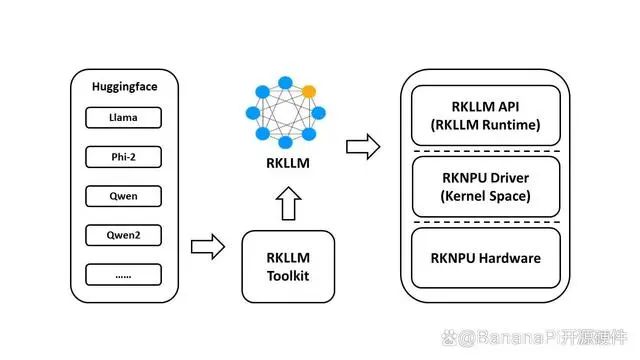

The RKNN framework supports inference of models on NPU

RKNN is the inference framework used on the Rockchip NPU platform; it makes it convenient to run deep learning model inference on the NPU. So that models trained in different frameworks can run under RKNN, Rockchip has released the rknn-toolkit development suite, which provides a series of Python APIs for model conversion, model quantization, model inference, model status detection, and other functions. For installation and usage of rknn-toolkit, see the official documentation [2].

A typical RKNN model deployment process generally requires the following four steps: RKNN model configuration -> model loading -> RKNN model construction -> RKNN model export

- RKNN model configuration sets the model conversion parameters, including input data mean, quantization type, quantization algorithm, and model deployment platform.
- Model loading loads the pre-conversion model into the program. Currently, RKNN supports loading and converting models from ONNX, PyTorch, TensorFlow, Caffe, etc. Model loading is a key step in the conversion process: it lets engineers specify the output layers and output layer names, which determines which parts of the original model take part in the conversion.
- RKNN model construction specifies whether the model will be quantized and which dataset is used for quantization calibration.
- RKNN model export saves the converted model.
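The four steps above can be sketched with the RKNN-Toolkit2 Python API roughly as follows. This is a minimal sketch, not a definitive recipe: the mean and standard deviation values, the default `rk3568` target, and the helper names `write_calibration_list` and `convert_onnx_to_rknn` are assumptions made for illustration, and the right preprocessing values depend on how the model was trained. The quantization type is left at the toolkit default.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_calibration_list(image_dir, list_path):
    """Write a calibration list: a text file with one image path per line."""
    images = sorted(
        p for p in Path(image_dir).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
    )
    Path(list_path).write_text("".join(f"{p}\n" for p in images))
    return len(images)


def convert_onnx_to_rknn(onnx_path, dataset_txt, rknn_path, target_platform="rk3568"):
    """Configure -> load -> build -> export, mirroring the four steps above."""
    if not Path(onnx_path).is_file():
        raise FileNotFoundError(onnx_path)

    # Imported lazily so the helper above works without RKNN-Toolkit2 installed.
    from rknn.api import RKNN

    rknn = RKNN(verbose=False)
    try:
        # 1. Configuration: input normalisation and deployment platform.
        #    The values below are illustrative assumptions.
        rknn.config(
            mean_values=[[0, 0, 0]],
            std_values=[[255, 255, 255]],
            target_platform=target_platform,
        )
        # 2. Loading: read the pre-conversion ONNX model.
        if rknn.load_onnx(model=str(onnx_path)) != 0:
            raise RuntimeError("load_onnx failed")
        # 3. Construction: quantize using the calibration dataset list.
        if rknn.build(do_quantization=True, dataset=str(dataset_txt)) != 0:
            raise RuntimeError("build failed")
        # 4. Export: save the converted model.
        if rknn.export_rknn(str(rknn_path)) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        rknn.release()
```

A typical call sequence would first run `write_calibration_list("calib_images", "dataset.txt")` and then `convert_onnx_to_rknn("yolov8n.onnx", "dataset.txt", "yolov8n.rknn")`, where all three file names are placeholders.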

RK3576/RK3588 NPU

The RK3576/RK3588 is Rockchip’s 8nm high-performance AIoT platform. Its NPU is designed for 6 TOPS and performs neural network inference efficiently, which makes the RK3576/RK3588 well suited to artificial intelligence fields such as image recognition, speech recognition, and natural language processing.

The RK3576/RK3588 NPU also supports models from popular deep learning frameworks, including TensorFlow, PyTorch, Caffe, and MXNet, giving developers a rich set of tools and libraries for model training and inference across a variety of big data computing scenarios.

Typical Applications of RK3576/RK3588 NPU

Computer Vision: The NPU can be used for tasks such as image recognition, object detection, and facial recognition. It has extensive applications in security monitoring, autonomous driving, and medical image analysis.