Author: lulugl, Source: MBP Community Review of “Milray MYC-LR3576 Core Board and Development Board” Event.

Facial fatigue detection is a technology that determines whether a person is fatigued by analyzing facial features. It is based mainly on computer vision and machine learning methods. When a person is fatigued, facial features change in characteristic ways: the eyes stay closed longer, blink frequency drops, yawning increases, and head posture shifts. Detecting the state of the eyes is therefore a key part of assessing fatigue. A person’s blink frequency is normally fairly stable, but under fatigue it decreases and each blink may last longer. At the same time, the head may droop or shake unconsciously. These features can serve as the basis for fatigue detection.

The Milray MYC-LR3576 combines an 8-core CPU with a 6 TOPS NPU accelerator and a 3D GPU, which makes this function easy to implement. Here is how to achieve it.

[Hardware]

1. Milray MYC-LR3576 development board
2. USB camera

[Software]

1. v4l2
2. OpenCV
3. dlib library: dlib is a modern C++ toolkit that includes many algorithms and tools for tasks such as machine learning, image processing, and numerical computation. Its design goal is to provide a high-performance, easy-to-use library, and it is widely used in the open-source community.

[Implementation Steps]

1. Install python-opencv
2. Install dlib library
3. Install v4l2 library

[Code Implementation]

1. Import cv2, dlib, and threading:

import cv2
import dlib
import numpy as np
import time
from concurrent.futures import ThreadPoolExecutor
import threading

2. Initialize dlib’s face detector and landmark predictor

detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor('shape_predictor_68_face_landmarks.dat')

3. Define a function to calculate the eye aspect ratio
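The eye aspect ratio (EAR) computed in this step divides the sum of the two vertical eyelid distances by twice the horizontal eye width, so it is large for an open eye and falls toward zero as the eye closes. As a minimal illustration in pure Python, with made-up coordinates rather than real landmarks (ear_from_points, open_eye and closed_eye are names invented for this sketch and are not part of the article's code):

```python
import math

def ear_from_points(eye):
    """Eye aspect ratio from six (x, y) points ordered like dlib's eye landmarks."""
    a = math.dist(eye[1], eye[5])  # first vertical eyelid distance
    b = math.dist(eye[2], eye[4])  # second vertical eyelid distance
    c = math.dist(eye[0], eye[3])  # horizontal eye width
    return (a + b) / (2.0 * c)

# Hypothetical coordinates: corners at index 0 and 3,
# upper lid at index 1 and 2, lower lid at index 4 and 5
open_eye = [(0, 0), (2, -2), (4, -2), (6, 0), (4, 2), (2, 2)]
closed_eye = [(0, 0), (2, -0.5), (4, -0.5), (6, 0), (4, 0.5), (2, 0.5)]

print(f"open:   {ear_from_points(open_eye):.3f}")    # open:   0.667
print(f"closed: {ear_from_points(closed_eye):.3f}")  # closed: 0.167
```

With a threshold of 0.3, the open eye in this toy example stays above the threshold and the nearly closed eye falls below it. The article's own NumPy version of the function follows.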

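def eye_aspect_ratio(eye):
    # eye holds the six (x, y) landmarks of one eye, in dlib's 68-point order
    A = np.linalg.norm(np.array(eye[1]) - np.array(eye[5]))  # First vertical distance
    B = np.linalg.norm(np.array(eye[2]) - np.array(eye[4]))  # Second vertical distance
    C = np.linalg.norm(np.array(eye[0]) - np.array(eye[3]))  # Horizontal distance
    ear = (A + B) / (2.0 * C)
    return ear

4. Define a function to calculate head pose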
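The head pose function in this step has no calibrated camera to work with, so it approximates the camera intrinsics: the focal length is taken to be the image width in pixels and the principal point is taken to be the image center. cv2.solvePnP then looks for the rotation and translation under which the 3D model points project onto the detected 2D landmarks. The projection itself can be sketched in pure Python as follows; approx_camera_params and project_point are hypothetical helper names, and the 3D points are arbitrary values chosen for illustration:

```python
def approx_camera_params(width, height):
    """Uncalibrated guess: focal length ~ image width, principal point ~ image center."""
    focal_length = float(width)
    center = (width / 2.0, height / 2.0)
    return focal_length, center

def project_point(point_3d, focal_length, center):
    """Project a 3D point given in camera coordinates with the pinhole model."""
    x, y, z = point_3d
    u = focal_length * x / z + center[0]
    v = focal_length * y / z + center[1]
    return u, v

# 480x320 is the resolution requested from the camera in the article
focal_length, center = approx_camera_params(480, 320)

# A point on the optical axis lands on the image center
print(project_point((0.0, 0.0, 1000.0), focal_length, center))  # (240.0, 160.0)

# A point off the axis is shifted in proportion to x/z and y/z
print(project_point((225.0, 170.0, 1000.0), focal_length, center))
```

Because these intrinsics are only a guess, the pitch, yaw and roll values that come out of the pose estimate are best read as rough indicators rather than precise measurements.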

def get_head_pose(shape):
    # Define the 3D coordinates of facial landmarks
    object_points = np.array([
        (0.0, 0.0, 0.0),           # Nose tip
        (0.0, -330.0, -65.0),      # Chin
        (-225.0, 170.0, -135.0),   # Left eye left corner
        (225.0, 170.0, -135.0),    # Right eye right corner
        (-150.0, -150.0, -125.0),  # Left mouth corner
        (150.0, -150.0, -125.0)    # Right mouth corner
    ], dtype=np.float32)
    # Matching 2D landmarks from the 68-point model
    image_pts = np.float32([shape[i] for i in [30, 8, 36, 45, 48, 54]])
    # Note: frame is not a parameter; it is read from the global scope (main loop)
    size = frame.shape
    focal_length = size[1]  # Approximate the focal length by the image width
    center = (size[1] // 2, size[0] // 2)
    camera_matrix = np.array([
        [focal_length, 0, center[0]],
        [0, focal_length, center[1]],
        [0, 0, 1]], dtype="double"
    )
    dist_coeffs = np.zeros((4, 1))  # Assume no lens distortion
    (success, rotation_vector, translation_vector) = cv2.solvePnP(
        object_points, image_pts, camera_matrix, dist_coeffs, flags=cv2.SOLVEPNP_ITERATIVE
    )
    rmat, _ = cv2.Rodrigues(rotation_vector)
    angles, _, _, _, _, _ = cv2.RQDecomp3x3(rmat)  # Euler angles in degrees
    return angles

5. Define thresholds for eye aspect ratio and consecutive frames
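The two thresholds in this step work together: a frame counts as "eyes closed" when the EAR is below the first threshold, and a closure is treated as significant only once it has lasted for the second threshold's number of consecutive frames. How long that is in seconds depends on the frame rate actually achieved. The sketch below illustrates both points in pure Python; the EAR trace and the 30 fps figure are hypothetical, and longest_closed_run and closure_seconds are names invented here:

```python
EAR_THRESH = 0.3    # same value as EYE_AR_THRESH in the article
CONSEC_FRAMES = 48  # same value as EYE_AR_CONSEC_FRAMES in the article

def longest_closed_run(ear_values, thresh=EAR_THRESH):
    """Length of the longest run of consecutive frames with EAR below thresh."""
    longest = run = 0
    for ear in ear_values:
        run = run + 1 if ear < thresh else 0
        longest = max(longest, run)
    return longest

def closure_seconds(frames, fps):
    """Convert a frame count into seconds at a given frame rate."""
    return frames / fps

# Hypothetical EAR trace: a short blink (3 frames), then a long closure (50 frames)
trace = [0.35] * 10 + [0.2] * 3 + [0.35] * 10 + [0.2] * 50 + [0.35] * 5

print(longest_closed_run(trace) >= CONSEC_FRAMES)  # True
print(closure_seconds(CONSEC_FRAMES, 30))          # 1.6
```

At a lower frame rate the same 48 frames cover a longer time, so the frame threshold may need adjusting to the frame rate measured on the board.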

EYE_AR_THRESH = 0.3
EYE_AR_CONSEC_FRAMES = 48

6. Open the camera

We first use v4l2-ctl --list-devices to list the devices connected to the development board:

USB Camera: USB Camera (usb-xhci-hcd.0.auto-1.2):
    /dev/video60
    /dev/video61
    /dev/media7

Fill in 60 as the camera number in the code:
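The number comes from the /dev/videoN node in the listing. As an optional aside, the same lookup can be done in code instead of by eye. The sketch below parses a saved copy of the listing; it assumes that device nodes appear on indented lines under an unindented header line, and video_indices is a hypothetical helper, not part of the article's code:

```python
import re

def video_indices(listing, name_hint):
    """Return the /dev/videoN numbers listed under the first header containing name_hint."""
    indices = []
    in_device = False
    for line in listing.splitlines():
        if line and not line[0].isspace():
            # Header lines start in column 0; device nodes are indented below them
            in_device = name_hint in line
            continue
        match = re.fullmatch(r"\s*/dev/video(\d+)\s*", line)
        if in_device and match:
            indices.append(int(match.group(1)))
    return indices

# Sample text modeled on the listing shown in the article
sample = (
    "USB Camera: USB Camera (usb-xhci-hcd.0.auto-1.2):\n"
    "\t/dev/video60\n"
    "\t/dev/video61\n"
    "\t/dev/media7\n"
)

print(video_indices(sample, "USB Camera"))  # [60, 61]
```

The article uses the first of these numbers, 60, which is hard-coded in the call that follows.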

cap = cv2.VideoCapture(60)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 480)  # Reduce resolution
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 320)

7. Create a multithreading processing function to separate acquisition and analysis:

# Multithreading processing function
def process_frame(frame):
    # COUNTER, TOTAL, lock and font_scale are module-level names (see the overall code)
    global COUNTER, TOTAL
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    faces = detector(gray, 0)  # The second parameter is 0, indicating no upsampling
    for face in faces:
        landmarks = predictor(gray, face)
        shape = [(landmarks.part(i).x, landmarks.part(i).y) for i in range(68)]
        left_eye = shape[36:42]
        right_eye = shape[42:48]
        left_ear = eye_aspect_ratio(left_eye)
        right_ear = eye_aspect_ratio(right_eye)
        ear = (left_ear + right_ear) / 2.0
        if ear < EYE_AR_THRESH:
            with lock:
                COUNTER += 1
        else:
            with lock:
                if COUNTER >= EYE_AR_CONSEC_FRAMES:
                    TOTAL += 1
                COUNTER = 0
        # Draw 68 landmarks
        for n in range(0, 68):
            x, y = shape[n]
            cv2.circle(frame, (x, y), 2, (0, 255, 0), -1)
        cv2.putText(frame, f"Eye AR: {ear:.2f}", (10, 30), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        cv2.putText(frame, f"Blink Count: {TOTAL}", (10, 60), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        # Calculate head pose
        angles = get_head_pose(shape)
        pitch, yaw, roll = angles
        cv2.putText(frame, f"Pitch: {pitch:.2f}", (10, 120), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        cv2.putText(frame, f"Yaw: {yaw:.2f}", (10, 150), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        cv2.putText(frame, f"Roll: {roll:.2f}", (10, 180), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        # Determine fatigue state
        if COUNTER >= EYE_AR_CONSEC_FRAMES or abs(pitch) > 30 or abs(yaw) > 30 or abs(roll) > 30:
            cv2.putText(frame, "Fatigue Detected!", (10, 210), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
    return frame

8. Create an image display thread:
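The idea in this step is that the main thread keeps capturing while worker threads analyze earlier frames, and finished results are picked up and displayed as they become available. The submit-then-poll pattern can be tried without a camera. In the sketch below, fake_process stands in for process_frame and a list stands in for the display window; both are assumptions made for illustration. Unlike the article's loop, this variant only takes results from the front of the queue, so they come out in capture order:

```python
from collections import deque
from concurrent.futures import ThreadPoolExecutor

def fake_process(frame_id):
    """Stand-in for process_frame: returns a label instead of an annotated image."""
    return f"processed-{frame_id}"

def run_pipeline(frame_ids, max_workers=2):
    shown = []         # what would be passed to cv2.imshow, in order
    pending = deque()  # futures in submission (capture) order
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        for frame_id in frame_ids:
            pending.append(executor.submit(fake_process, frame_id))
            # Only pop from the front, so results are shown in capture order
            while pending and pending[0].done():
                shown.append(pending.popleft().result())
        # Capture has ended: wait for the remaining work
        while pending:
            shown.append(pending.popleft().result())
    return shown

print(run_pipeline(range(5)))
# ['processed-0', 'processed-1', 'processed-2', 'processed-3', 'processed-4']
```

Keeping the futures in a queue also makes it easy to bound the backlog, for example by skipping a capture when too many frames are still pending.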

with ThreadPoolExecutor(max_workers=2) as executor:

future_to_frame = {}

while True:

ret, frame = cap.read()

if not ret:

break

# Submit the current frame to the thread pool

future = executor.submit(process_frame, frame.copy())

future_to_frame[future] = frame

# Get the completed task results

for future in list(future_to_frame.keys()):

if future.done():

processed_frame = future.result()

cv2.imshow("Frame", processed_frame)

del future_to_frame[future]

break

# Calculate frame rate

fps_counter += 1

elapsed_time = time.time() - start_time

if elapsed_time > 1.0:

fps = fps_counter / elapsed_time

fps_counter = 0

start_time = time.time()

cv2.putText(processed_frame, f"FPS: {fps:.2f}", (10, 90), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

if cv2.waitKey(1) & 0xFF == ord('q'):

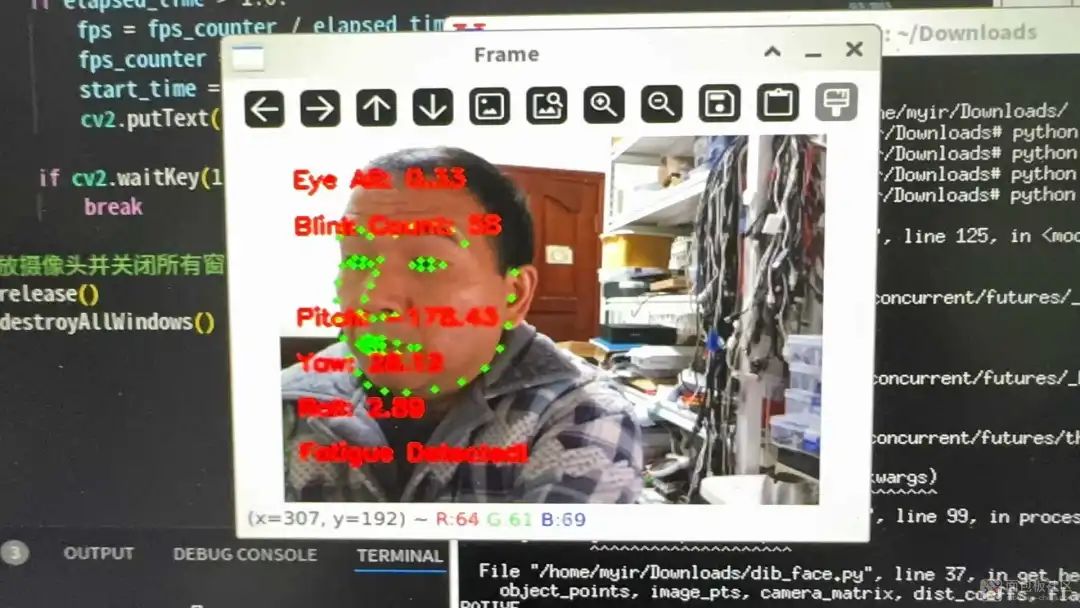

breakImplementation Effect:
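The "Fatigue Detected!" label is drawn when the closed-eye counter has reached the consecutive-frame threshold or when any of the three head angles exceeds 30 degrees in magnitude. Written as a standalone function, that rule is easy to test and to tune separately from the drawing code. The following is a sketch of the same condition; is_fatigued and its parameter names are hypothetical:

```python
def is_fatigued(closed_frames, pitch, yaw, roll, consec_frames=48, angle_limit=30.0):
    """Same rule as in process_frame: long eye closure, or any head angle beyond the limit."""
    eyes_closed_too_long = closed_frames >= consec_frames
    head_off_axis = any(abs(angle) > angle_limit for angle in (pitch, yaw, roll))
    return eyes_closed_too_long or head_off_axis

print(is_fatigued(48, 0.0, 0.0, 0.0))   # True: eyes closed for 48 frames
print(is_fatigued(10, 5.0, -5.0, 0.0))  # False: short closure, head roughly upright
print(is_fatigued(0, -35.0, 0.0, 0.0))  # True: pitch magnitude above 30 degrees
```

Note that the head pose part of the rule fires on a single frame, so a brief glance to the side is enough to trigger it; requiring the angle to persist for several frames would be one possible refinement.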

Based on the detection results, we can implement fatigue reminders and other functions. The overall code is as follows:

import cv2
import dlib
import numpy as np
import time
from concurrent.futures import ThreadPoolExecutor
import threading

# Initialize dlib's face detector and landmark predictor
detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor('shape_predictor_68_face_landmarks.dat')

# Modify font size
font_scale = 0.5  # Original font size is 0.7, now changed to 0.5

# Define a function to calculate the eye aspect ratio
def eye_aspect_ratio(eye):
    A = np.linalg.norm(np.array(eye[1]) - np.array(eye[5]))
    B = np.linalg.norm(np.array(eye[2]) - np.array(eye[4]))
    C = np.linalg.norm(np.array(eye[0]) - np.array(eye[3]))
    ear = (A + B) / (2.0 * C)
    return ear

# Define a function to calculate head pose
def get_head_pose(shape):
    # Define the 3D coordinates of facial landmarks
    object_points = np.array([
        (0.0, 0.0, 0.0),           # Nose tip
        (0.0, -330.0, -65.0),      # Chin
        (-225.0, 170.0, -135.0),   # Left eye left corner
        (225.0, 170.0, -135.0),    # Right eye right corner
        (-150.0, -150.0, -125.0),  # Left mouth corner
        (150.0, -150.0, -125.0)    # Right mouth corner
    ], dtype=np.float32)
    image_pts = np.float32([shape[i] for i in [30, 8, 36, 45, 48, 54]])
    # Note: frame is not a parameter; it is read from the global scope (main loop)
    size = frame.shape
    focal_length = size[1]
    center = (size[1] // 2, size[0] // 2)
    camera_matrix = np.array([
        [focal_length, 0, center[0]],
        [0, focal_length, center[1]],
        [0, 0, 1]], dtype="double"
    )
    dist_coeffs = np.zeros((4, 1))
    (success, rotation_vector, translation_vector) = cv2.solvePnP(
        object_points, image_pts, camera_matrix, dist_coeffs, flags=cv2.SOLVEPNP_ITERATIVE
    )
    rmat, _ = cv2.Rodrigues(rotation_vector)
    angles, _, _, _, _, _ = cv2.RQDecomp3x3(rmat)
    return angles

# Define thresholds for eye aspect ratio and consecutive frames
EYE_AR_THRESH = 0.3
EYE_AR_CONSEC_FRAMES = 48

# Initialize counters
COUNTER = 0
TOTAL = 0

# Create a lock object
lock = threading.Lock()

# Open the camera
cap = cv2.VideoCapture(60)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 480)  # Reduce resolution
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 320)

# Initialize frame counter and timestamp
fps_counter = 0
start_time = time.time()

# Multithreading processing function
def process_frame(frame):
    global COUNTER, TOTAL
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    faces = detector(gray, 0)  # The second parameter is 0, indicating no upsampling
    for face in faces:
        landmarks = predictor(gray, face)
        shape = [(landmarks.part(i).x, landmarks.part(i).y) for i in range(68)]
        left_eye = shape[36:42]
        right_eye = shape[42:48]
        left_ear = eye_aspect_ratio(left_eye)
        right_ear = eye_aspect_ratio(right_eye)
        ear = (left_ear + right_ear) / 2.0
        if ear < EYE_AR_THRESH:
            with lock:
                COUNTER += 1
        else:
            with lock:
                if COUNTER >= EYE_AR_CONSEC_FRAMES:
                    TOTAL += 1
                COUNTER = 0
        # Draw 68 landmarks
        for n in range(0, 68):
            x, y = shape[n]
            cv2.circle(frame, (x, y), 2, (0, 255, 0), -1)
        cv2.putText(frame, f"Eye AR: {ear:.2f}", (10, 30), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        cv2.putText(frame, f"Blink Count: {TOTAL}", (10, 60), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        # Calculate head pose
        angles = get_head_pose(shape)
        pitch, yaw, roll = angles
        cv2.putText(frame, f"Pitch: {pitch:.2f}", (10, 120), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        cv2.putText(frame, f"Yaw: {yaw:.2f}", (10, 150), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        cv2.putText(frame, f"Roll: {roll:.2f}", (10, 180), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
        # Determine fatigue state
        if COUNTER >= EYE_AR_CONSEC_FRAMES or abs(pitch) > 30 or abs(yaw) > 30 or abs(roll) > 30:
            cv2.putText(frame, "Fatigue Detected!", (10, 210), cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 255), 2)
    return frame

with ThreadPoolExecutor(max_workers=2) as executor:
    future_to_frame = {}
    fps = 0.0  # Last measured frame rate
    while True:
        ret, frame = cap.read()
        if not ret:
            break
        # Submit the current frame to the thread pool
        future = executor.submit(process_frame, frame.copy())
        future_to_frame[future] = frame
        # Calculate frame rate
        fps_counter += 1
        elapsed_time = time.time() - start_time
        if elapsed_time > 1.0:
            fps = fps_counter / elapsed_time
            fps_counter = 0
            start_time = time.time()
        # Get the completed task results
        for future in list(future_to_frame.keys()):
            if future.done():
                processed_frame = future.result()
                # Draw the frame rate before the frame is displayed
                cv2.putText(processed_frame, f"FPS: {fps:.2f}", (10, 90), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
                cv2.imshow("Frame", processed_frame)
                del future_to_frame[future]
                break
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

# Release the camera and close all windows
cap.release()
cv2.destroyAllWindows()

[Summary]

The Milray MYC-LR3576 core board and development board is powerful enough to implement facial fatigue detection easily, which opens the way to many practical applications in industry and artificial intelligence.

If you have a development board, you can also use it to participate in our DIY event, designing an interesting smart device!

MBP Community DIY event is ongoing!👇

https://mbb.eet-china.com/forum/topic/147007_1_1.html

Share your DIY electronic designs in the MBP community blog/forum, and add the [Electronic DIY] tag when publishing articles. There is no word limit, and articles will be reviewed by the community for rich rewards!


[Article Requirements]

1. Content must be original, related to the electronics industry, and published on the internet for the first time.

2. Content should include design ideas and a finished product display (such as circuit principles, function display, cost control plans or lists, code, etc.). Content involving core intellectual property can be omitted.

3. During the event, the same ID can participate multiple times, and scores for multiple works accumulate, provided the works meet the quality requirements.

Event Duration: 2024.11.13 – 2025.02.13
