Linux is widely used in consumer electronics, where power saving is a major concern for every product.

Power management in Linux is complex. It covers system-level standby, dynamic voltage and frequency scaling, the handling of system idle time, and each device driver's support for system standby and for per-device runtime power management. As a result, it touches virtually every device driver in the system.

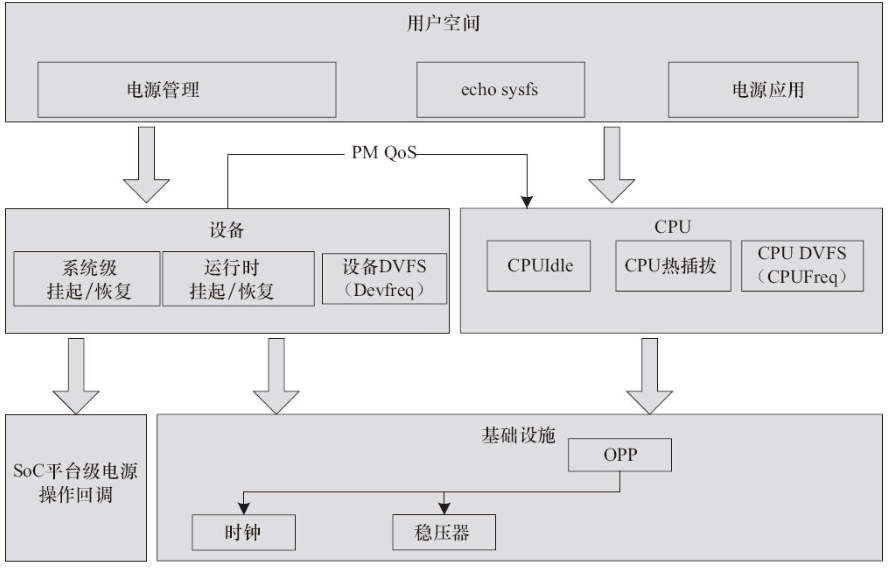

For consumer electronic products, power management is extremely important, so this work often takes up a considerable share of the development cycle. The overall architecture of Linux kernel power management, shown in the figure, can be summarized into the following categories:

1) CPUFreq, which dynamically adjusts CPU voltage and frequency based on system load during runtime.

2) CPUIdle, which puts the CPU into low power modes based on idle conditions during system idle.

3) Support for hot-plugging CPUs in multi-core systems.

4) PM QoS, through which the system and devices state their specific latency requirements; these requests influence the policy that CPUIdle follows.

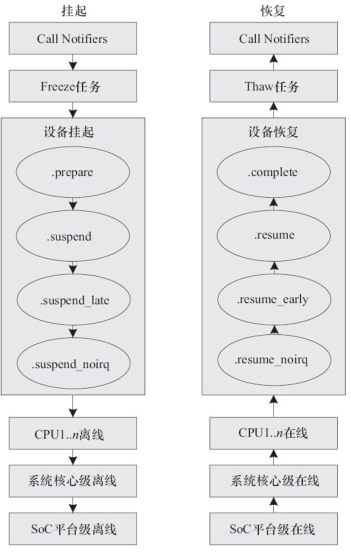

5) The callbacks that device drivers provide for when the system suspends to RAM or to disk.

6) The entry path through which the SoC enters the suspend state and puts SDRAM into self-refresh.

7) Runtime power management for devices, which turns devices on and off dynamically according to whether they are in use.

8) The underlying clocks, regulators, and frequency/voltage tables (provided by the OPP module) that each driver subsystem may use.

1. CPUFreq Driver

The CPUFreq subsystem, located in the drivers/cpufreq directory, dynamically adjusts the CPU frequency and voltage at runtime, a technique known as DVFS (Dynamic Voltage and Frequency Scaling). The reason for doing this is that the dynamic power consumption of a CMOS circuit is proportional to the square of the supply voltage and to the clock frequency (P ≈ C·V²·f, where C is the switched capacitance). Lowering the voltage and frequency therefore reduces power consumption.
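To make the V²·f relationship concrete, here is a small C helper that compares the dynamic power of two operating points. It assumes P = C·V²·f with the same switched capacitance C at both points and ignores leakage power; the function name and the numbers in the usage note are illustrative, not taken from any real SoC.

```c
/* Relative dynamic power of operating point 2 versus operating point 1,
 * assuming P_dyn = C * V^2 * f with an unchanged switched capacitance C.
 * Static (leakage) power is deliberately ignored in this sketch. */
double dvfs_power_ratio(double v1_volts, double f1_hz,
                        double v2_volts, double f2_hz)
{
    double p1 = v1_volts * v1_volts * f1_hz; /* C cancels out of the ratio */
    double p2 = v2_volts * v2_volts * f2_hz;
    return p2 / p1;
}
```

For example, dvfs_power_ratio(1.2, 1.0e9, 1.0, 700e6) is about 0.486: cutting the frequency to 70% while also dropping the voltage from 1.2 V to 1.0 V roughly halves dynamic power, whereas lowering the frequency alone would only bring it down to 0.7.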

The CPUFreq core layer, in drivers/cpufreq/cpufreq.c, provides a common interface on which the CPUFreq drivers of the various SoCs are implemented. It also implements a notifier mechanism that informs other modules when the CPUFreq policy or the frequency changes.
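The notifier idea can be sketched in user-space C: listeners register a callback, and the core calls every listener before and after a frequency transition. Everything here (freq_notifier_chain, freq_change, the FREQ_PRECHANGE/FREQ_POSTCHANGE codes, the fixed-size array, the example listener) is a hypothetical illustration of the pattern, not the kernel's API; in the kernel, modules register a struct notifier_block with cpufreq_register_notifier().

```c
#include <stddef.h>

/* Hypothetical event codes: "about to change" and "has changed". */
enum freq_event { FREQ_PRECHANGE, FREQ_POSTCHANGE };

struct freq_transition {
    unsigned int old_khz;
    unsigned int new_khz;
};

typedef void (*freq_notifier_fn)(enum freq_event ev,
                                 const struct freq_transition *t, void *ctx);

#define MAX_NOTIFIERS 8

/* Fixed-size list of listeners (a simplification for this sketch). */
struct freq_notifier_chain {
    freq_notifier_fn fn[MAX_NOTIFIERS];
    void *ctx[MAX_NOTIFIERS];
    size_t count;
};

/* Register a listener. Returns 0 on success, -1 if the chain is full. */
int freq_notifier_register(struct freq_notifier_chain *chain,
                           freq_notifier_fn fn, void *ctx)
{
    if (chain->count >= MAX_NOTIFIERS)
        return -1;
    chain->fn[chain->count] = fn;
    chain->ctx[chain->count] = ctx;
    chain->count++;
    return 0;
}

/* Call every listener in registration order. */
static void freq_notifier_call(const struct freq_notifier_chain *chain,
                               enum freq_event ev,
                               const struct freq_transition *t)
{
    for (size_t i = 0; i < chain->count; i++)
        chain->fn[i](ev, t, chain->ctx[i]);
}

/* Core-side transition: notify, let set_hw (standing in for the SoC
 * driver) program the hardware, then notify again. On failure the
 * post-change event reports the old frequency as the new one. */
int freq_change(const struct freq_notifier_chain *chain,
                unsigned int old_khz, unsigned int new_khz,
                int (*set_hw)(unsigned int khz))
{
    struct freq_transition t = { old_khz, new_khz };
    int ret;

    freq_notifier_call(chain, FREQ_PRECHANGE, &t);
    ret = set_hw(new_khz);
    if (ret != 0)
        t.new_khz = old_khz;
    freq_notifier_call(chain, FREQ_POSTCHANGE, &t);
    return ret;
}

/* Example listener: counts events and remembers the last new frequency. */
struct freq_stats {
    unsigned int events;
    unsigned int last_khz;
};

void stats_listener(enum freq_event ev, const struct freq_transition *t,
                    void *ctx)
{
    struct freq_stats *s = ctx;
    (void)ev;
    s->events++;
    s->last_khz = t->new_khz;
}

/* Example hardware hook that always succeeds. */
int fake_set_hw(unsigned int khz)
{
    (void)khz;
    return 0;
}
```

Sending the post-change event even when the hardware change fails lets listeners that prepared for the transition on the pre-change event see that the frequency stayed where it was.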

2. CPUFreq Policies

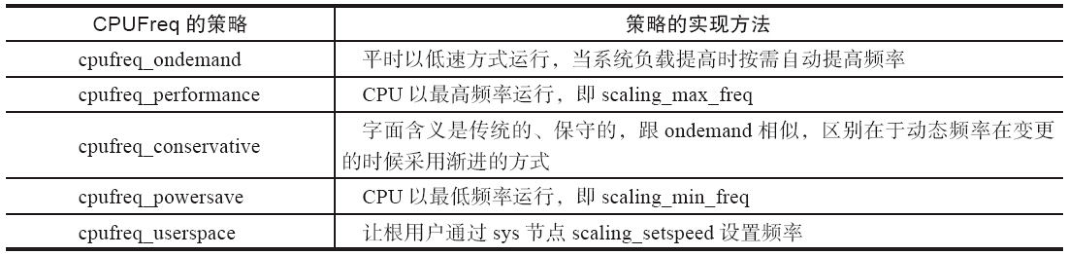

The SoC CPUFreq driver only describes the available CPU frequencies and provides the means to change the frequency; it does not decide which frequency the CPU should actually run at. That decision belongs to the CPUFreq policy (governor), which determines when and how the frequency changes. The available policies are listed in the table.
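As an illustration of how a policy turns load into a target frequency, the sketch below is loosely modeled on the ondemand idea: above an up-threshold it jumps straight to the maximum frequency, and otherwise it picks a frequency proportional to the load. The struct, the function name, the 80% threshold used in the usage note, and the linear scaling are assumptions made for this example; real governors have more tunables and details.

```c
/* Tunables for this simplified, ondemand-like decision (assumed). */
struct gov_tunables {
    unsigned int up_threshold;  /* percent load that triggers max frequency */
    unsigned int min_khz;
    unsigned int max_khz;
};

/* Map a CPU load (0..100 percent) to a target frequency in kHz. */
unsigned int gov_target_khz(const struct gov_tunables *t, unsigned int load)
{
    if (load > 100)
        load = 100;                       /* clamp bad input */
    if (load > t->up_threshold)
        return t->max_khz;                /* busy: go straight to the top */
    /* otherwise scale linearly between min and max */
    return t->min_khz + (unsigned int)(
        (unsigned long long)(t->max_khz - t->min_khz) * load / 100);
}
```

With up_threshold = 80 and a 200 MHz to 1 GHz range, a 50% load maps to 600 MHz and anything above 80% jumps to 1 GHz. The result is only a target: the CPUFreq core still has to match it to a frequency the SoC driver actually supports.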

Android adds an interactive policy, suited to latency-sensitive UI interaction. When UI interaction tasks are running, this policy raises the CPU frequency more aggressively and more promptly.

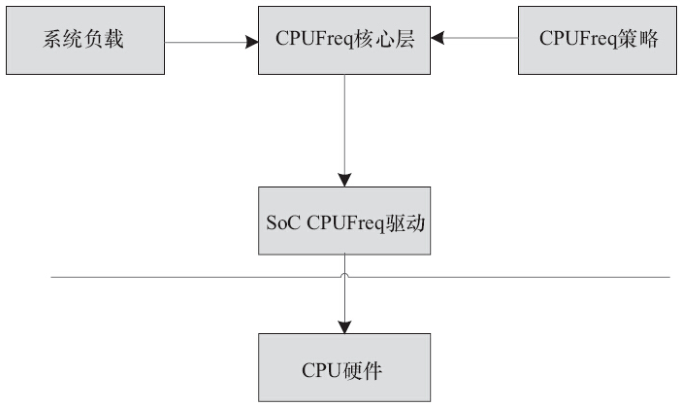

In summary, the system's state and the CPUFreq policy together determine the target frequency. The CPUFreq core then passes this target to the SoC-specific CPUFreq driver, which programs the hardware to complete the frequency transition, as shown in the figure.

User space can generally configure CPUFreq through the nodes under /sys/devices/system/cpu/cpux/cpufreq. For example, to set CPU0 to 700 MHz with the userspace policy (scaling_setspeed takes a value in kHz), run:

```shell
# echo userspace > /sys/devices/system/cpu/cpu0/cpufreq/scaling_governor
# echo 700000 > /sys/devices/system/cpu/cpu0/cpufreq/scaling_setspeed
```
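The same two steps can be done from a C program such as a power management daemon. The sketch below writes the attributes with plain stdio; cpufreq_set_userspace and write_attr are hypothetical helper names, the caller needs root privileges on a real system, and the cpufreq directory is passed in so the code can also be exercised against an ordinary directory.

```c
#include <stdio.h>

/* Write a string to a sysfs-style attribute file. Returns 0 on success. */
int write_attr(const char *dir, const char *name, const char *value)
{
    char path[512];
    int n = snprintf(path, sizeof(path), "%s/%s", dir, name);
    if (n < 0 || (size_t)n >= sizeof(path))
        return -1;                         /* path too long */
    FILE *f = fopen(path, "w");
    if (!f)
        return -1;
    int ok = fputs(value, f) >= 0;
    /* stdio buffers the data, so an error reported by sysfs (for
     * example an unknown governor name) can surface at fclose() */
    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}

/* Select the userspace governor, then request a fixed speed in kHz.
 * cpufreq_dir is e.g. "/sys/devices/system/cpu/cpu0/cpufreq". */
int cpufreq_set_userspace(const char *cpufreq_dir, unsigned long khz)
{
    char buf[32];
    if (write_attr(cpufreq_dir, "scaling_governor", "userspace") != 0)
        return -1;
    snprintf(buf, sizeof(buf), "%lu", khz);
    return write_attr(cpufreq_dir, "scaling_setspeed", buf);
}
```

Calling cpufreq_set_userspace("/sys/devices/system/cpu/cpu0/cpufreq", 700000) is equivalent to the two echo commands above.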

3. CPUFreq Performance Testing and Tuning

Since Linux 3.1, the cpupower-utils toolkit has been included in the kernel's tools/power/cpupower directory. Its cpufreq-bench tool helps engineers analyze how CPUFreq affects system performance.

The cpufreq-bench tool simulates the “idle → busy → idle → busy” pattern of a running system to trigger dynamic frequency changes. It then compares the time needed to complete the same workload under a dynamic policy such as ondemand, conservative, or interactive with the time needed under the performance policy, which keeps the CPU at its highest frequency, and reports the ratio.

After cross-compiling the tool, place it in the target board's file system, for example under /usr/sbin/, and run:

```shell
# cpufreq-bench -l 50000 -s 100000 -x 50000 -y 100000 -g ondemand -r 5 -n 5 -v
```

The tool prints a series of results. The "Round n" lines give the performance of the policy selected with -g (here, ondemand) relative to the performance policy. Suppose the values are:

```text
Round 1 - 39.74%
Round 2 - 36.35%
Round 3 - 47.91%
Round 4 - 54.22%
Round 5 - 58.64%
```

These figures are clearly far from ideal. Running the same test with Android's interactive policy on the same platform gives the following results:

```text
Round 1 - 72.95%
Round 2 - 87.20%
Round 3 - 91.21%
Round 4 - 94.10%
Round 5 - 94.93%
```

As a general goal, when CPUFreq adjusts frequency and voltage dynamically, reaching around 90% of the performance obtained under the performance policy is considered ideal.
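When comparing several policies it helps to reduce the Round lines to one number. The helpers below (hypothetical names) assume each line has exactly the "Round n - xx.xx%" form shown in the sample output above and average the percentages; any other output lines are skipped.

```c
#include <stdio.h>

/* Parse one line of the form "Round 3 - 47.91%".
 * Returns 1 and fills *round and *pct on success, 0 otherwise. */
int parse_round_line(const char *line, int *round, double *pct)
{
    return sscanf(line, "Round %d - %lf%%", round, pct) == 2;
}

/* Average the percentages of all parsable lines; returns -1.0 if none. */
double average_rounds(const char *const *lines, int n)
{
    double sum = 0.0;
    int count = 0;
    for (int i = 0; i < n; i++) {
        int round;
        double pct;
        if (parse_round_line(lines[i], &round, &pct)) {
            sum += pct;
            count++;
        }
    }
    return count ? sum / count : -1.0;
}
```

Applied to the two runs above, this gives about 47.4% for ondemand and about 88.1% for interactive, with the last three interactive rounds above 90%.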

4. CPUIdle Driver

Most current ARM SoCs support several idle levels. The CPUIdle driver subsystem manages these idle states and chooses which level to enter based on how the system is running. Each SoC's CPUIdle driver provides a table of idle levels, analogous to the CPUFreq driver's frequency table, and implements the entry and exit procedures for each idle state.
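The core decision a CPUIdle governor makes can be sketched as: choose the deepest state whose target residency fits the expected idle period and whose exit latency is acceptable. The struct below only borrows the idea of the exit_latency and target_residency fields of the kernel's struct cpuidle_state; the selection loop is a simplified assumption, not the code of a real governor such as menu, which also has to predict the idle duration.

```c
/* Simplified idle-state descriptor (illustrative, not the kernel struct). */
struct idle_state {
    const char *name;
    unsigned int exit_latency_us;     /* time needed to wake up */
    unsigned int target_residency_us; /* minimum stay that pays off */
};

/* states[] is ordered shallow to deep, with latency and residency both
 * increasing. Index 0 is assumed to be a shallow state that is always
 * allowed. latency_limit_us would come from PM QoS. */
int select_idle_state(const struct idle_state *states, int n,
                      unsigned int predicted_idle_us,
                      unsigned int latency_limit_us)
{
    int best = 0;
    for (int i = 1; i < n; i++) {
        if (states[i].target_residency_us > predicted_idle_us)
            break;               /* too short an idle period to pay off */
        if (states[i].exit_latency_us > latency_limit_us)
            break;               /* waking up would take too long */
        best = i;
    }
    return best;
}
```

With illustrative states {WFI: 1/1 µs, retention: 50/200 µs, power-down: 500/3000 µs}, an expected idle time of 10 ms and a 100 µs latency limit select retention rather than power-down: the latency limit overrides the potential saving.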

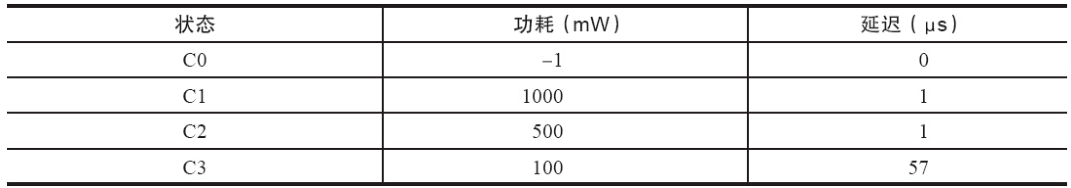

Intel-based laptops support ACPI (Advanced Configuration and Power Interface), which typically defines four C states: C0 (operating), C1 (Halt), C2 (Stop-Clock), and C3 (Sleep), as shown in the table.

5. PowerTop

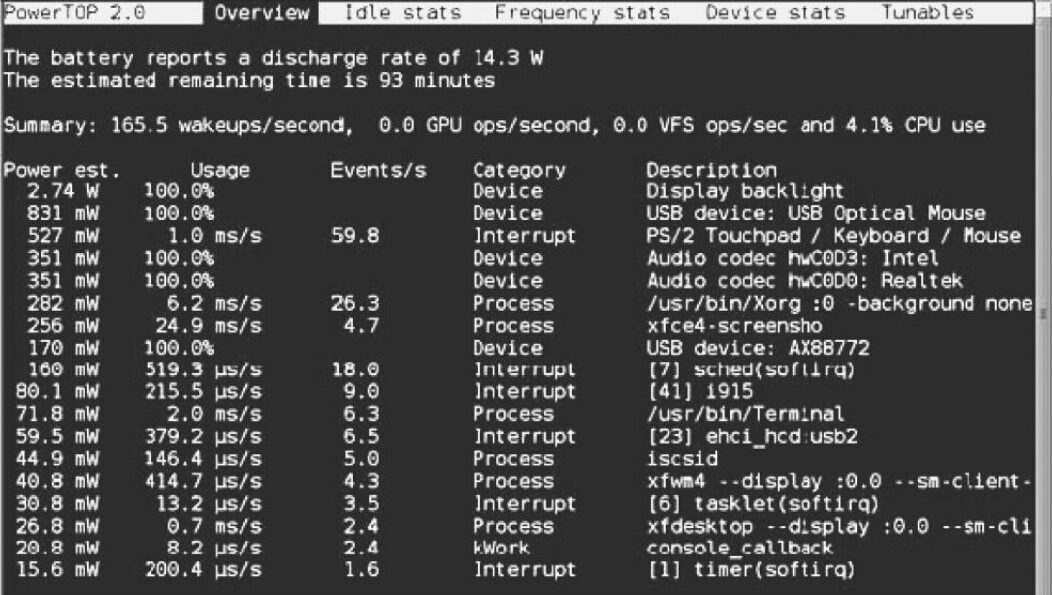

PowerTop is an open-source tool for power consumption analysis and power management diagnostics; its homepage is hosted by the Intel Open Source Technology Center at https://01.org/powertop/. The maintainers are Arjan van de Ven and Kristen Accardi. PowerTop analyzes the power consumption of the software in the system to identify the biggest power consumers, and it displays the share of time spent in each C state (corresponding to the CPUIdle driver) and each P state (corresponding to the CPUFreq driver) in a tab-based interface, as shown in the figure.
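On Linux, the cpuidle framework exposes the cumulative time spent in each idle state under /sys/devices/system/cpu/cpuN/cpuidle/stateM/time, in microseconds. A residency breakdown similar to the one PowerTop displays can be derived from two snapshots of these counters. The function below is a hypothetical sketch of that arithmetic, not PowerTop's code, and it treats all time not spent in an idle state as busy (C0).

```c
/* Share of an interval (percent) spent in each idle state, from two
 * snapshots of cumulative per-state residency in microseconds.
 * Time not spent in any idle state is reported as busy (C0).
 * Returns 0, or -1 on bad input. */
int idle_residency_percent(const unsigned long long *before,
                           const unsigned long long *after,
                           int nstates, unsigned long long interval_us,
                           double *pct_out, double *busy_pct_out)
{
    unsigned long long idle_total = 0;
    if (interval_us == 0)
        return -1;
    for (int i = 0; i < nstates; i++) {
        if (after[i] < before[i])
            return -1;                 /* counter went backwards */
        unsigned long long d = after[i] - before[i];
        idle_total += d;
        pct_out[i] = 100.0 * (double)d / (double)interval_us;
    }
    if (idle_total > interval_us)
        idle_total = interval_us;      /* tolerate small sampling skew */
    *busy_pct_out = 100.0 * (double)(interval_us - idle_total)
                    / (double)interval_us;
    return 0;
}
```

For a 1 s interval in which the counters of two states advance by 200 ms and 600 ms, it reports 20% and 60% idle residency and 20% busy time.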

6. Regulator Driver

The regulator framework is part of the Linux power management infrastructure. It manages voltage regulators and is the standard interface through which driver subsystems set voltages; the CPUFreq drivers mentioned earlier often use it to set the CPU voltage.

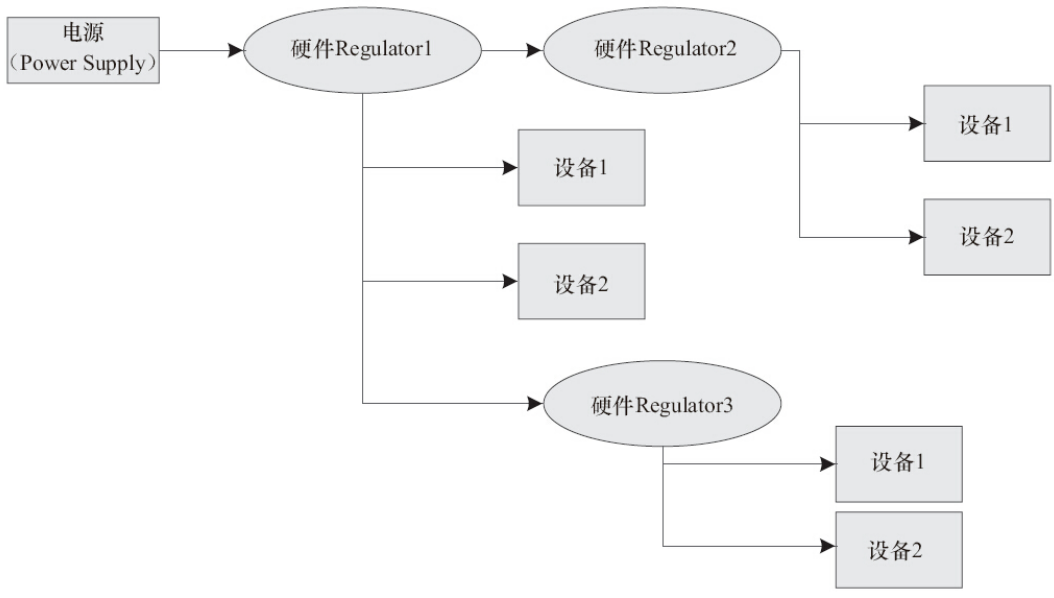

The regulator framework manages the system's power supply units, i.e., regulators such as LDOs (Low Dropout Regulators), and provides interfaces to get and set their output voltages. On typical ARM boards, the regulators and the devices they supply form a tree, as shown in the figure.
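The key property of the tree is that a regulator can only be on if its supply is on, and that a shared supply must stay on until its last consumer lets go. The toy model below shows this with simple use counts; struct reg_node and its functions are hypothetical and much simpler than the kernel's regulator core, which also handles voltage constraints and locking.

```c
#include <stddef.h>

/* Toy regulator-tree node (illustrative names only). */
struct reg_node {
    const char *name;
    struct reg_node *supply;   /* upstream regulator, NULL at the root */
    int use_count;             /* active consumers/children */
    int on;                    /* current output state */
};

/* Enable a regulator; its supply is enabled first. */
void reg_enable(struct reg_node *r)
{
    if (r->use_count++ == 0) {
        if (r->supply)
            reg_enable(r->supply);
        r->on = 1;
    }
}

/* Disable: switch off only when the last user goes away, then release
 * the reference held on the upstream supply. */
void reg_disable(struct reg_node *r)
{
    if (r->use_count == 0)
        return;                /* unbalanced disable: ignored here */
    if (--r->use_count == 0) {
        r->on = 0;
        if (r->supply)
            reg_disable(r->supply);
    }
}
```

Enabling two LDOs fed by the same buck converter turns the buck on once; it switches off only after both LDOs have been disabled.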

The Linux Regulator subsystem provides the following APIs for registering/unregistering a regulator:

```c
struct regulator_dev *regulator_register(const struct regulator_desc *regulator_desc,
                                         const struct regulator_config *config);

void regulator_unregister(struct regulator_dev *rdev);
```

7. OPP

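Modern SoCs integrate many components, and not all of them need to run at maximum frequency and performance all the time. Some domains within the SoC can run at a lower frequency and voltage while others run at a higher frequency and voltage. Each (frequency, voltage) pair at which a domain can operate is called an Operating Performance Point (OPP). A driver can register an OPP for a device with the following API (renamed dev_pm_opp_add() in later kernels):

```c
int opp_add(struct device *dev, unsigned long freq, unsigned long u_volt);
```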

Currently, the TI OMAP CPUFreq driver uses this OPP mechanism to obtain the list of frequencies and voltages the CPU supports. During boot, the OMAP4 platform code registers an OPP table for the CPU device (the code is located in arch/arm/mach-omap2/).
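An OPP table can be pictured as a frequency-sorted array of (frequency, voltage) pairs plus a "ceiling" lookup that returns the lowest OPP at or above a requested frequency, similar in spirit to the OPP library's dev_pm_opp_find_freq_ceil(). The struct, the function name, and the example values in the test are hypothetical and not taken from the OMAP4 tables.

```c
#include <stddef.h>

/* One operating performance point (illustrative struct). */
struct opp {
    unsigned long freq_hz;
    unsigned long u_volt;      /* microvolts, as in the opp_add() prototype */
};

/* Return the lowest OPP whose frequency is >= freq_hz, or NULL if the
 * request exceeds the highest OPP. table[] must be sorted by frequency. */
const struct opp *opp_find_ceil(const struct opp *table, size_t n,
                                unsigned long freq_hz)
{
    for (size_t i = 0; i < n; i++)
        if (table[i].freq_hz >= freq_hz)
            return &table[i];
    return NULL;
}
```

When raising the CPU clock, a CPUFreq driver would typically program the regulator to the OPP's voltage first and then increase the frequency; when lowering it, the frequency goes down first and the voltage second, so the CPU never runs faster than its current voltage allows.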

8. PM QoS

The PM QoS subsystem in the Linux kernel provides a set of interfaces through which the kernel and applications state their performance expectations. Requests come in two kinds: system-wide requirements, expressed through parameters such as cpu_dma_latency, network_latency, and network_throughput; and per-device PM QoS requests, made according to the performance needs of an individual device.
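From user space, a system-wide cpu_dma_latency request is commonly made by opening /dev/cpu_dma_latency and writing a 32-bit latency bound in microseconds; the request stays in effect only while the file descriptor remains open. The helper below is a hypothetical sketch of that pattern; the path is a parameter so the code can also be exercised against an ordinary file.

```c
#include <fcntl.h>
#include <stdint.h>
#include <unistd.h>

/* Open the PM QoS node and write a 32-bit latency bound in microseconds.
 * The returned fd must stay open for as long as the request should stay
 * active; closing it drops the request. path is normally
 * "/dev/cpu_dma_latency". Returns the fd, or -1 on error. */
int pm_qos_request_latency(const char *path, int32_t max_latency_us)
{
    int fd = open(path, O_RDWR);
    if (fd < 0)
        return -1;
    if (write(fd, &max_latency_us, sizeof(max_latency_us)) !=
        (ssize_t)sizeof(max_latency_us)) {
        close(fd);
        return -1;
    }
    return fd;
}
```

For example, an audio player might call pm_qos_request_latency("/dev/cpu_dma_latency", 20) when playback starts and close the descriptor when it stops; while the descriptor is open, CPUIdle avoids idle states whose exit latency exceeds 20 µs.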

9. CPU Hot Plugging

CPU hot-plugging has existed in Linux for a long time; a small improvement in kernels after Linux 3.8 is that CPU0 can also be hot-plugged.

In general, user space controls whether a CPU is online or offline through the /sys/devices/system/cpu/cpun/online node:

```shell
# echo 0 > /sys/devices/system/cpu/cpu3/online
CPU 3 is now offline
# echo 1 > /sys/devices/system/cpu/cpu3/online
```

When CPU3 is taken offline with echo 0 > /sys/devices/system/cpu/cpu3/online, the processes running on it are migrated to other CPUs so that the system keeps running normally while CPU3 is removed. Once CPU3 is brought back online with echo 1 > /sys/devices/system/cpu/cpu3/online, it takes part in load balancing again and shares the system's workload.

In embedded systems, CPU hot-plugging can serve as a power-saving method: CPUs are shut down dynamically when the system load is low and brought back online when the load increases. At present, chip vendors may modify the kernel according to their SoC's characteristics to implement this kind of runtime “hot-plugging”.
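A load-driven hotplug policy of this kind can be sketched as a pure function that decides how many CPUs should be online. The thresholds, the one-CPU-at-a-time step, and all names are assumptions for illustration; vendor implementations differ.

```c
/* Thresholds for a simple hotplug decision (assumed values). A "down"
 * threshold below the "up" one adds hysteresis so CPUs do not flap. */
struct hotplug_cfg {
    unsigned int up_pct;     /* average load above which a CPU is added */
    unsigned int down_pct;   /* average load below which a CPU is removed */
    unsigned int min_cpus;
    unsigned int max_cpus;
};

/* avg_load_pct: mean load across the currently online CPUs (0..100). */
unsigned int hotplug_next_online(const struct hotplug_cfg *c,
                                 unsigned int online,
                                 unsigned int avg_load_pct)
{
    if (avg_load_pct > c->up_pct && online < c->max_cpus)
        return online + 1;          /* bring one more CPU online */
    if (avg_load_pct < c->down_pct && online > c->min_cpus)
        return online - 1;          /* take one CPU offline */
    return online;                  /* inside the hysteresis band */
}
```

The caller would then apply the result by writing 1 or 0 to the corresponding /sys/devices/system/cpu/cpuN/online file, as in the commands above.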

10. Suspend to RAM

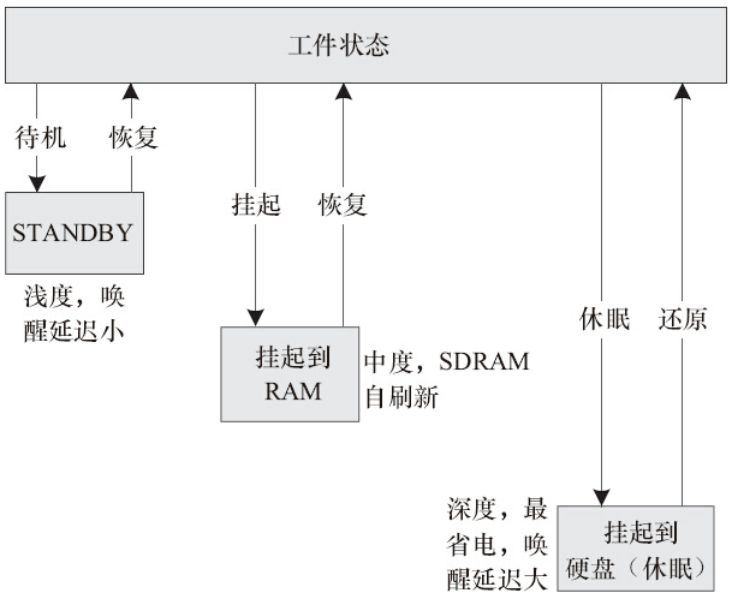

Linux supports various forms of standby, such as STANDBY, suspend to RAM, and suspend to disk, as shown in the figure.

Embedded products generally implement only suspend to RAM (also called s2ram, commonly abbreviated STR), which keeps the system state in memory, puts SDRAM into self-refresh mode, and waits for a key press or another wake-up event to resume the system. A few embedded Linux systems also implement suspend to disk (STD). The difference is that s2ram keeps memory powered, while STD saves the system state to disk and then powers off the entire system.

In Linux, user space usually starts suspend to RAM by writing to /sys/power/state (for example, echo mem > /sys/power/state). Many Linux products also have a button that puts the system into suspend to RAM when pressed.

This usually works as follows: the input driver for the button reports a power-related input_event, and a power management daemon in user space receives the event and triggers s2ram. The kernel also provides the INPUT_APMPOWER driver, located in drivers/input/apm-power.c, which listens for EV_PWR events inside the kernel and triggers s2ram automatically via apm_queue_event(APM_USER_SUSPEND).
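A user-space power management daemon of the kind described can be sketched in a few functions. The sketch assumes the button driver reports KEY_POWER as an EV_KEY event through a standard evdev node; the helper names, and the choice to suspend on every key press, are assumptions for illustration.

```c
#include <linux/input.h>
#include <stdio.h>

/* True for a press (value 1) of the power key; releases (0) and
 * auto-repeats (2) are ignored. */
int is_power_key_press(const struct input_event *ev)
{
    return ev->type == EV_KEY && ev->code == KEY_POWER && ev->value == 1;
}

/* Ask the kernel to suspend to RAM; state_path is normally
 * "/sys/power/state". On a real system the call returns only after
 * the system has resumed. */
int request_suspend_to_ram(const char *state_path)
{
    FILE *f = fopen(state_path, "w");
    if (!f)
        return -1;
    int ok = fputs("mem", f) >= 0;
    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}

/* Minimal daemon loop: read events from an already opened input device
 * and suspend on each power-key press. */
int power_key_loop(FILE *input, const char *state_path)
{
    struct input_event ev;
    while (fread(&ev, sizeof(ev), 1, input) == 1) {
        if (is_power_key_press(&ev) &&
            request_suspend_to_ram(state_path) != 0)
            return -1;
    }
    return 0;
}
```

A daemon would open the button's event device (a /dev/input/eventN node) with fopen(path, "rb") and pass it to power_key_loop() together with "/sys/power/state".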

11. Runtime PM

The dev_pm_ops structure has three member functions whose names start with runtime: runtime_suspend(), runtime_resume(), and runtime_idle(). They let a device take part in runtime power management.

Runtime PM differs from the system-level suspend to RAM described earlier in that it is per-device: a specific device can be runtime-suspended when it is idle and resumed when it is needed again, so it saves power while the rest of the system keeps running. Linux runtime PM was first merged in the Linux 2.6.32 kernel.
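The reference-counting idea behind runtime PM can be modeled in a few lines: each user takes a reference before touching the device and drops it afterwards; the device is resumed on the first reference and suspended when the last one goes away. In the kernel, drivers do this with pm_runtime_get_sync() and pm_runtime_put(), which ultimately invoke the runtime_resume() and runtime_suspend() callbacks. The struct, functions, and demo callbacks below are a hypothetical, synchronous simplification.

```c
/* Toy model of runtime PM reference counting (illustrative names). */
struct rpm_dev {
    int usage;                                /* active users */
    int suspended;                            /* 1 while runtime-suspended */
    int (*runtime_suspend)(struct rpm_dev *);
    int (*runtime_resume)(struct rpm_dev *);
};

/* Take a reference; resume the device if it was suspended. */
int rpm_get(struct rpm_dev *d)
{
    if (d->usage++ == 0 && d->suspended) {
        int ret = d->runtime_resume(d);
        if (ret) {
            d->usage--;                       /* undo on failure */
            return ret;
        }
        d->suspended = 0;
    }
    return 0;
}

/* Drop a reference; suspend the device once nobody is using it. */
int rpm_put(struct rpm_dev *d)
{
    if (d->usage == 0)
        return -1;                            /* unbalanced put */
    if (--d->usage == 0 && !d->suspended) {
        int ret = d->runtime_suspend(d);
        if (ret)
            return ret;                       /* device stays active */
        d->suspended = 1;
    }
    return 0;
}

/* Stand-in driver callbacks that only count invocations. */
static int n_suspend, n_resume;
static int demo_suspend(struct rpm_dev *d) { (void)d; n_suspend++; return 0; }
static int demo_resume(struct rpm_dev *d)  { (void)d; n_resume++;  return 0; }
```

Unlike this sketch, the real pm_runtime_put() normally goes through runtime_idle() and may suspend the device asynchronously, and drivers can add an autosuspend delay.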

Conclusion

The PM framework in the Linux kernel involves numerous components. Understanding the dependencies between them, optimizing from the right angle, and using the correct methods for PM programming greatly benefit code quality and help with power consumption and performance testing.

Moreover, in real engineering work, especially in consumer electronics, more than half of all bugs are estimated to be related to power management. Much of power management work therefore comes down to robustness and resilience, which requires considerable patience from engineers.