Kubernetes has become the de facto standard for cloud-native distributed systems, and one of its greatest advantages is extensibility: whether for compute, storage, or networking, it can be extended flexibly to meet user needs.

I once gave a talk on Kubernetes storage within my team. The content was fairly basic and meant to spark ideas. Today I am writing it up; rereading it, I found I still learned a lot, and I hope it helps others too.

Because the topic is large, this article starts with the basic concepts and terminology of Kubernetes storage.

Why Is Kubernetes Storage Important?

For developers, containers are nothing new.

Containers are essentially stateless and short-lived. When a container is recreated, it starts again from the initial state of its image. This means that any data the application wrote inside the container while it was running is lost.

Storage is a cornerstone of applications, and many cannot function properly without their data. This behavior is therefore clearly unacceptable for them.

As applications gradually transition to cloud-native, let’s look at what storage issues applications encounter on the Kubernetes platform.

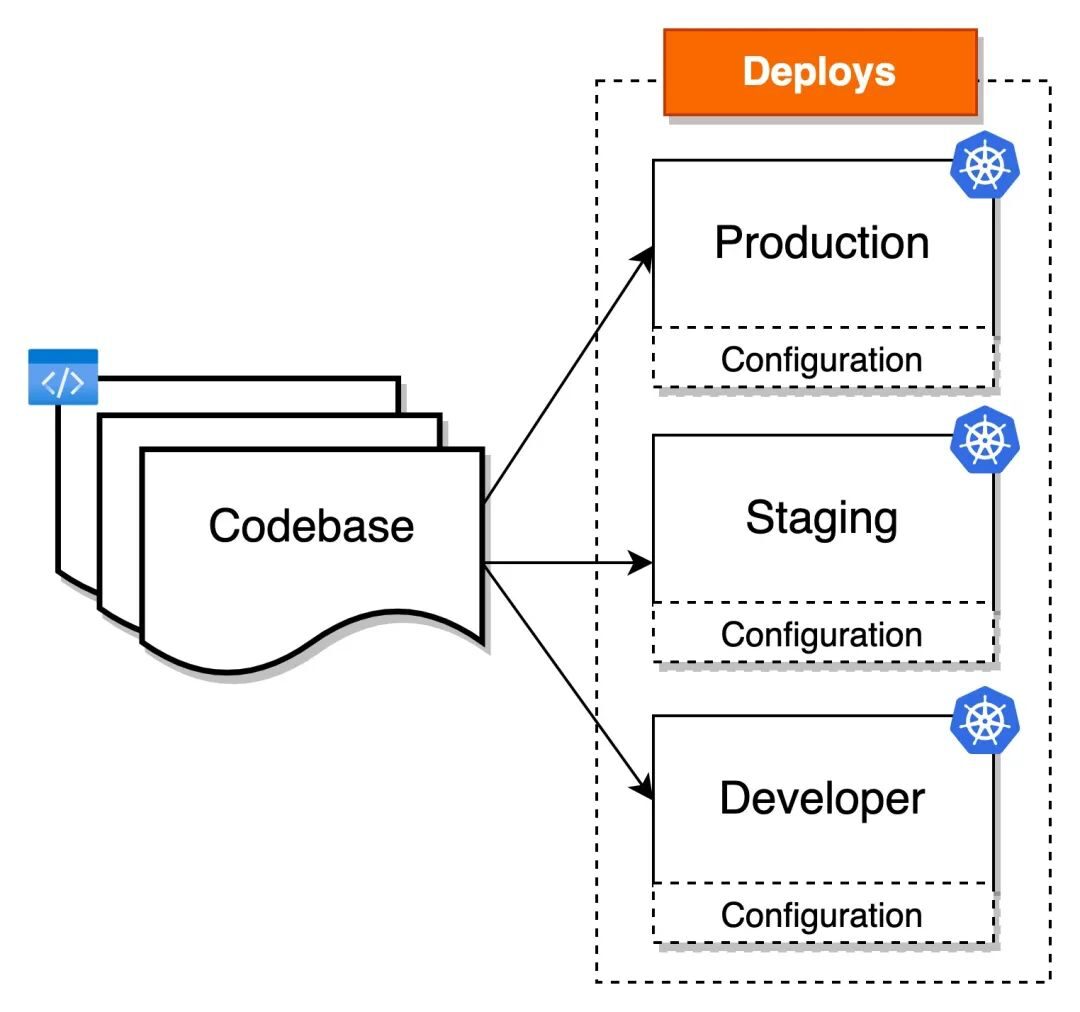

#1. Application Configuration

Every application has its own configuration, and the usual practice is to decouple configuration from code to keep things flexible. If the application code is shipped as an image, how should configuration be handled in Kubernetes?

#2. Data Communication Between Containers

The Pod is the smallest deployable unit in Kubernetes, so how do multiple containers within one Pod share data? And in a microservices architecture, how do Pods on different nodes share data?

#3. Data Persistence Within Containers

Pods are very fragile; if a Pod fails and is rescheduled, can the data written in the old Pod still be retrieved?

#4. Integrating Third-Party Storage Services

For example, if we have our own storage service, or if a client requires a specific domestic storage system, is there a way to integrate them into Kubernetes’ storage system?

Solutions

To address the above issues, Kubernetes introduces the abstract concept of Volume. Users only need to describe their intentions through resources and declarative APIs, and Kubernetes will complete the specific operations based on user needs.

The term Volume originally comes from operating system terminology and has a similar concept in Docker. In the Kubernetes world, a Volume is a pluggable abstraction layer used to share and persist data between Pods and containers.

In this article, we will explore the concept and application of Volumes in Kubernetes.

Kubernetes Storage System

There are many kinds of storage technology. To support as many of them as possible, Kubernetes ships with many built-in volume plugins, so users can choose based on their business needs.

```bash
kubectl explain pod.spec.volumes
```

This command returns a detailed description of the Volume definitions in a Kubernetes Pod, including their fields and usage. It helps users understand how to define a Volume in a Pod.

Using the above command, we find that Kubernetes supports at least 20 different types of storage volumes by default, which can meet various storage needs. This feature makes Kubernetes very flexible in storage and can adapt to the storage requirements of various applications.

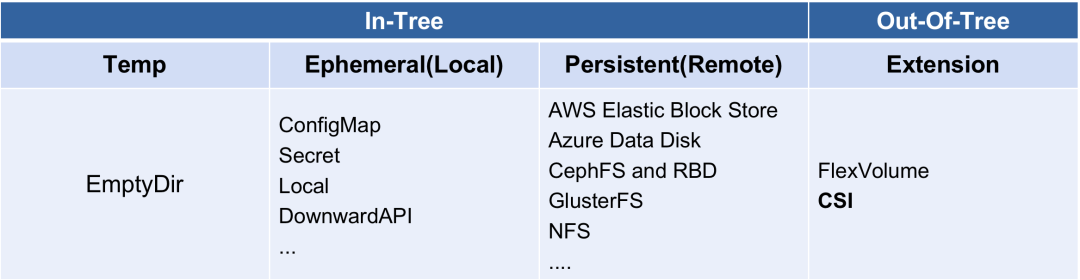

The table below groups storage plugins into two main categories and lists only the more commonly used volume types. Other types are listed in the official documentation, Types of Persistent Volumes[1]. If you are interested in how In-Tree Volumes[2] are implemented, see the source code.

So what is the difference between In-Tree and Out-Of-Tree? Their names already give a rough idea, and I will cover the details later.

In-Tree means the plugin's implementation lives in the Kubernetes code repository. Out-Of-Tree means the implementation is decoupled from Kubernetes and maintained outside its repository.

Summary

At first glance, the number of storage volume types supported by Kubernetes may seem overwhelming for those unfamiliar with it. However, it can be summarized into two main types:

- Non-Persistent Volume (also called ephemeral storage)
- Persistent Volume

Non-Persistent Volume vs Persistent Volume

Before introducing the main differences between these two types of Volumes, let's look at what they have in common:

1. Usage Method

- Both are declared by specifying which Volume type to use in `.spec.volumes[]`.
- The Volume is then mounted at the specified location in the container via `.spec.containers[].volumeMounts[]`.
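The following sketch shows how the two fields pair up. The Pod declares one Volume in `.spec.volumes[]` and mounts it by name through `.spec.containers[].volumeMounts[]`. The Pod name, the `busybox:1.36` image, and the `/data` path are illustrative assumptions. `emptyDir` is used only because it needs no backing storage.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-demo              # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36          # assumed image; any image with a shell works
    command: ["sh", "-c", "echo hello > /data/hello.txt && sleep 3600"]
    volumeMounts:
    - name: data                 # must match a name under .spec.volumes[]
      mountPath: /data           # where the Volume appears inside the container
  volumes:
  - name: data                   # the Volume declaration; its type is chosen here
    emptyDir: {}
```

Replacing `emptyDir: {}` with another type (for example `configMap` or `persistentVolumeClaim`) leaves the mounting side unchanged. That separation is why both volume categories share the same usage method.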

2. Host Directory

Regardless of the Volume type, the kubelet creates a Volume directory for each Pod scheduled on the current host, in the following format:

```
/var/lib/kubelet/pods/<Pod-UID>/volumes/kubernetes.io~<Volume-Type>/<Volume-Name>
```

#1. Non-Persistent Volume

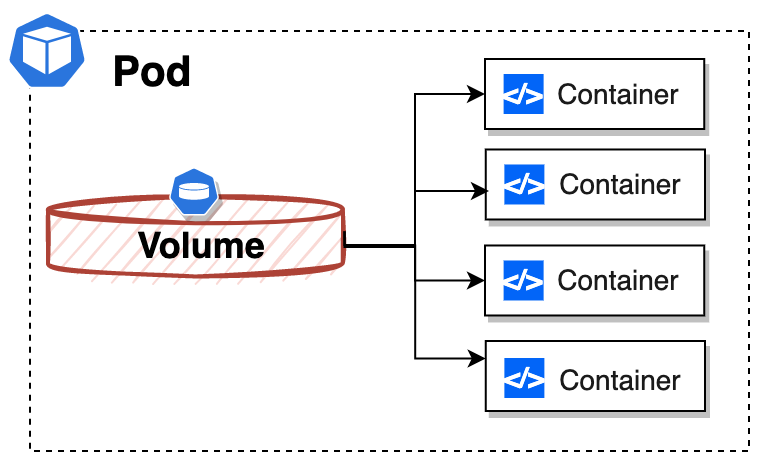

The purpose of designing Non-Persistent Volume is not to persistently save data; it is to provide shared storage resources among multiple Containers within the same Pod.

As the architecture diagram above shows, the Volume is encapsulated within the Pod, so its lifecycle matches that of the Pod it is mounted to. When the Pod is deleted for any reason, the Volume is deleted as well. However, the Volume outlives every individual container running in the Pod.

Thoughts

Below is the official description of Pod lifecycle from Kubernetes documentation:

Pods are only scheduled once in their lifetime. Once a Pod is scheduled (assigned) to a Node, the Pod runs on that Node until it stops or is terminated.

For Pods whose `restartPolicy` is not `Never`, a failed container is restarted in place and the Pod itself is not rescheduled. Because the Pod has not been deleted and its UID has not changed, the data in these Volumes is still present.

⚠️ Note also that although the restarted container is brand new, the old container still exists on the host in the Exited state. As long as it exists, data the user wrote to ordinary directories inside it can still be retrieved from its read-write layer. This no longer holds once the kubelet's garbage collection has removed the old container.

#2. Persistent Volume

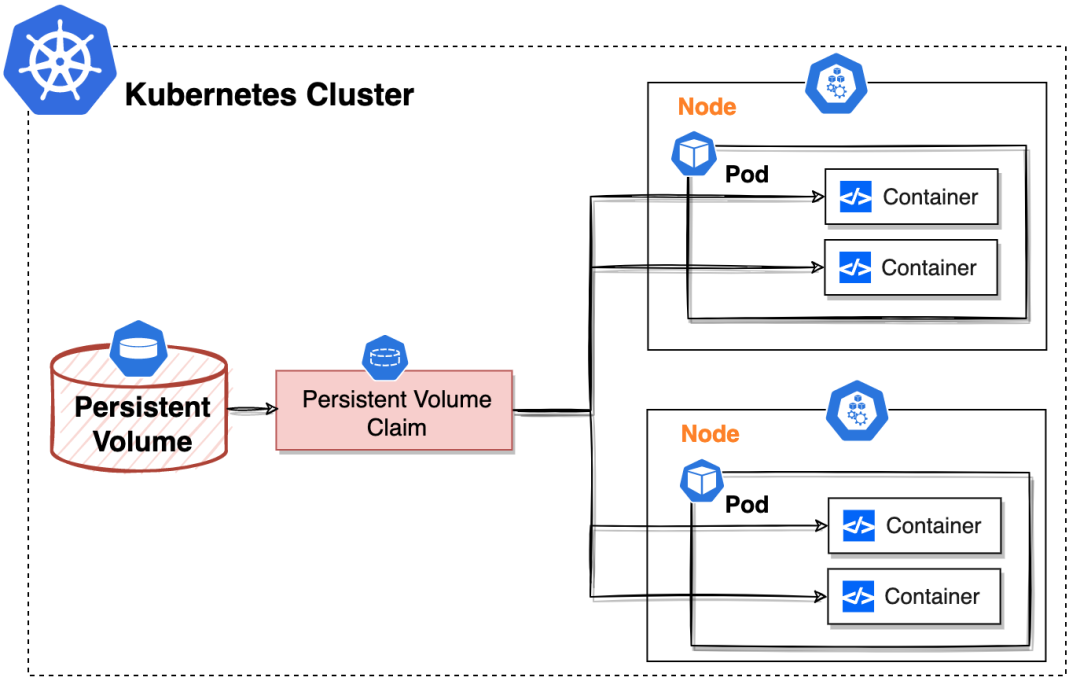

The purpose of a Persistent Volume is to persist data. Depending on the access modes the underlying storage supports, it can also share storage among containers in Pods on different nodes.

As the architecture above shows, a Persistent Volume exists independently of any Pod, so its lifecycle is independent of the Pod's.

ConfigMap/Secret & EmptyDir & HostPath

Next, let’s briefly go over the storage volumes commonly used on the Kubernetes platform. We will first look at how these storage volumes are used from the user’s perspective.

I have shared these three types in previous articles, but I didn’t specifically emphasize the storage concept. Now I will put them together, which may help everyone understand their applicable scenarios more deeply.

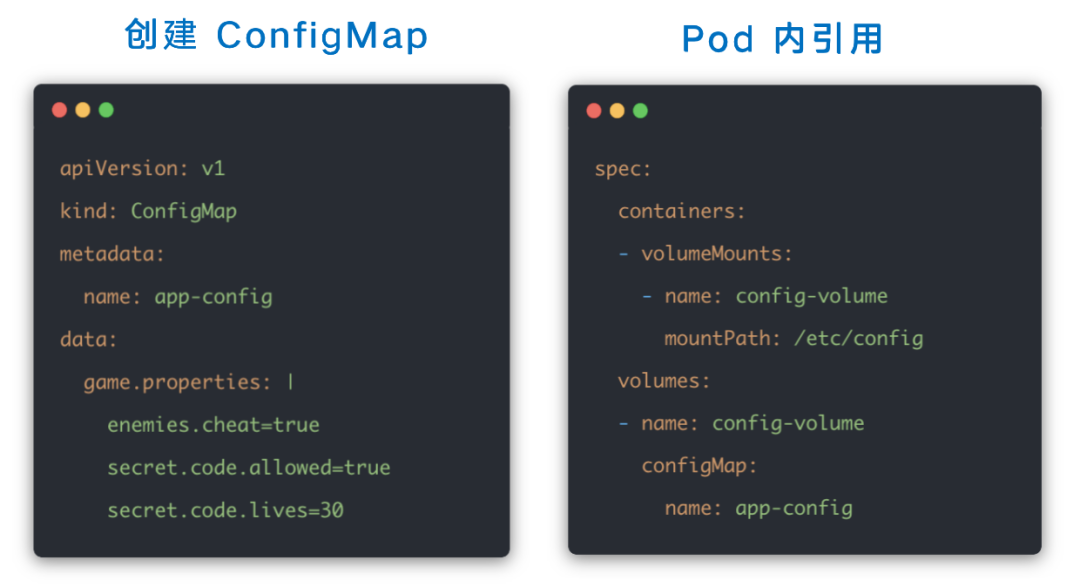

ConfigMap/Secret Applicable Scenarios

ConfigMap and Secret are typically used to store application configurations and are a common means of decoupling configuration from code on the Kubernetes platform.

Best Practices

We can mount them as storage volumes. Below is a common usage example.

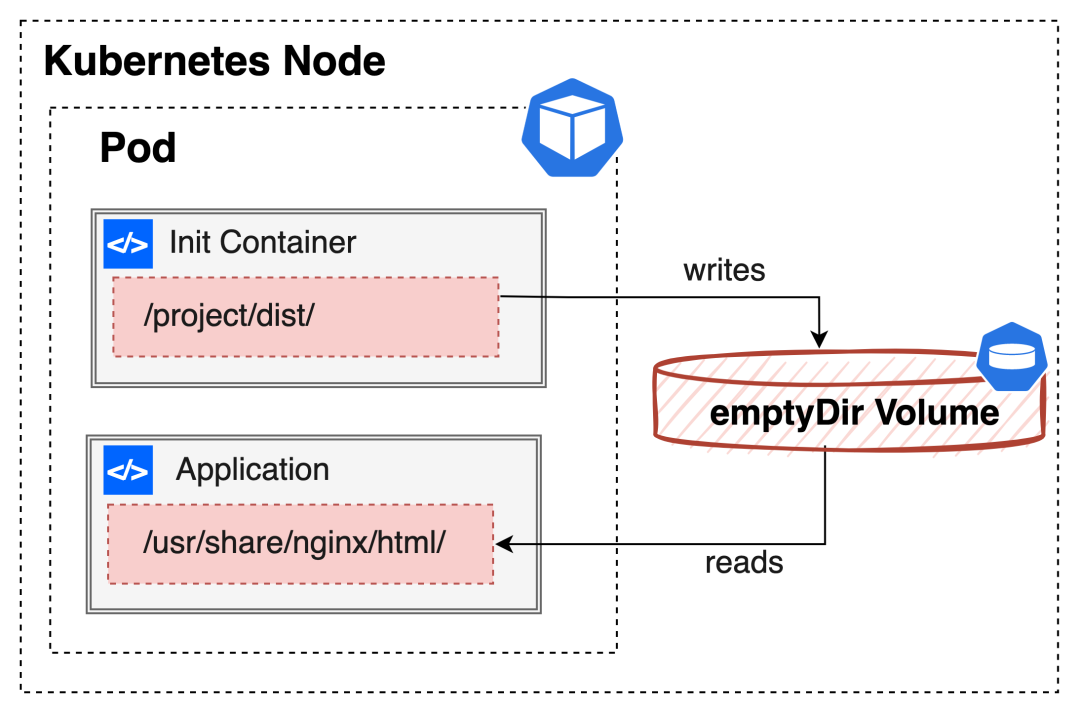
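Here is a minimal sketch of a ConfigMap mounted as a volume. The names `app-config` and `config-demo`, the property keys, and the mount path are assumptions for illustration. Each key in the ConfigMap becomes a file under the mount path.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config               # hypothetical name
data:
  app.properties: |              # becomes the file /etc/app/app.properties
    log.level=info
    feature.x.enabled=true
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo              # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36          # assumed image
    command: ["sh", "-c", "cat /etc/app/app.properties && sleep 3600"]
    volumeMounts:
    - name: config
      mountPath: /etc/app        # one file per ConfigMap key appears here
      readOnly: true
  volumes:
  - name: config
    configMap:
      name: app-config           # a Secret would use `secret: {secretName: ...}` instead
```

When the ConfigMap is updated, the kubelet eventually refreshes the mounted files. Mounts that use `subPath` do not receive these updates.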

EmptyDir Applicable Scenarios

EmptyDir is one of the most commonly used Volumes. As the name suggests, it is an empty directory when created, and it is usually used to share data between multiple containers in a Pod. It is a Non-Persistent Volume: when the Pod is deleted, the data in the EmptyDir is deleted as well.

Best Practices

Consider an architecture in which the Init Container prepares data by writing content, and the Application Container, as the main application container, reads that data.

This pattern not only shows the strength of Kubernetes container orchestration but also serves as a rich-container solution. See Scenario 03 in my earlier article for details.
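The init-container pattern can be sketched as follows, assuming a `busybox:1.36` image and hypothetical names and paths. The init container runs to completion first and writes a file into the EmptyDir. The main container then starts and reads that file through the same Volume.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo                # hypothetical name
spec:
  initContainers:
  - name: prepare-data           # must finish successfully before "app" starts
    image: busybox:1.36          # assumed image
    command: ["sh", "-c", "echo 'prepared by init container' > /work/data.txt"]
    volumeMounts:
    - name: workdir
      mountPath: /work
  containers:
  - name: app                    # reads what the init container wrote
    image: busybox:1.36
    command: ["sh", "-c", "cat /shared/data.txt && sleep 3600"]
    volumeMounts:
    - name: workdir
      mountPath: /shared         # same Volume; the mount path may differ per container
  volumes:
  - name: workdir
    emptyDir: {}
```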

Next, let’s look at another common example where a sidecar container reads the log file from another container using EmptyDir.

```bash
# Quoting 'EOF' stops the local shell from expanding $i, $(date) and $((i+1))
# before kubectl receives the manifest.
cat <<'EOF' | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  containers:
  - name: count
    image: lqshow/busybox-curl:1.28
    args:
    - /bin/sh
    - -c
    - >
      i=0;
      while true;
      do
        echo "$i: $(date)" >> /var/log/1.log;
        i=$((i+1));
        sleep 1;
      done
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  - name: count-log-sidecar
    image: lqshow/busybox-curl:1.28
    args: [/bin/sh, -c, 'tail -n+1 -f /var/log/1.log']
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  volumes:
  - name: varlog
    emptyDir: {}
EOF
```

As you can see, the `count` container in the Pod writes its logs to a file instead of stdout, so we cannot see them with the following command:

```bash
kubectl logs -f counter -c count
```

However, a sidecar container can read the log file and write it to stdout again.

Of course, this way of handling logs is not optimal. The same log lines end up in two files on the host, which is unnecessary. Still, the example shows clearly where EmptyDir is useful.

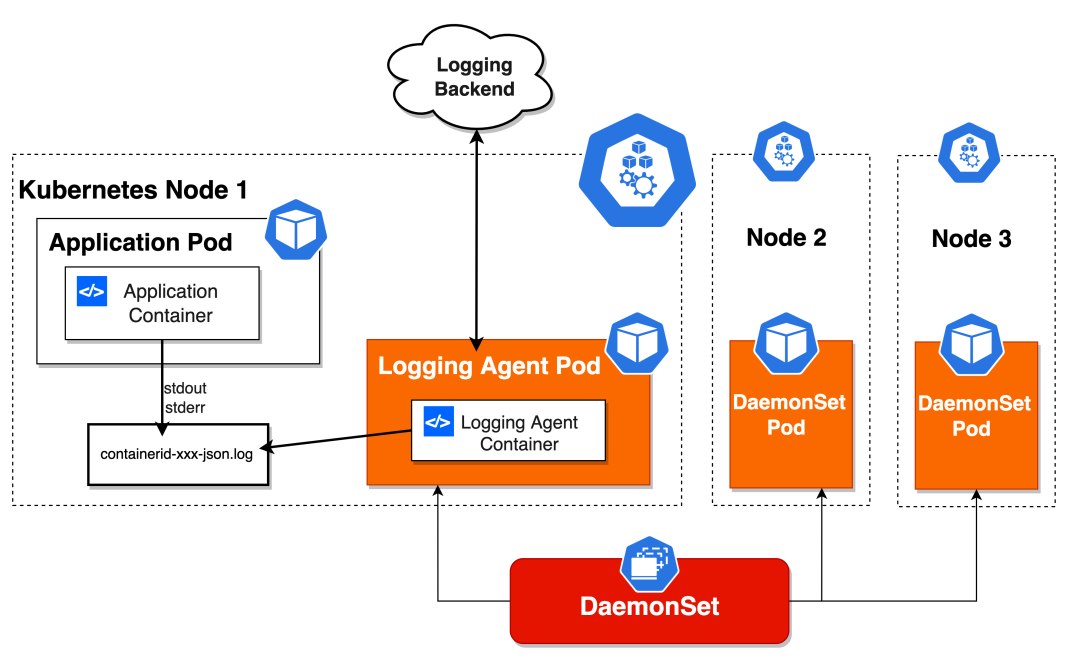

```bash
kubectl logs -f counter -c count-log-sidecar
```

HostPath Applicable Scenarios

HostPath mounts files or directories from the host node’s filesystem directly into the Pod. Additionally, it should be noted that the volume data is persisted within the host node’s filesystem. This type of Volume is generally not suitable for most applications because the content in the Volume only remains on a specific node; once the Pod is rescheduled to another node, the original data will not be carried over.

Therefore, avoid HostPath unless you have a very specific requirement and understand exactly what you are doing.

Best Practices

HostPath is usually used in conjunction with DaemonSet. Continuing with the log collection example mentioned earlier, a Logging agent (Fluentd) runs on each node, mounting the container log directory on the host to collect the current host logs.
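Here is a sketch of the DaemonSet plus HostPath pattern for a node-level log agent. The name `log-agent`, the `kube-system` namespace, and the `fluent/fluentd:v1.16-1` image tag are assumptions. A real agent also needs its own collection and output configuration, which is omitted here.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-agent                   # hypothetical name
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: log-agent
  template:
    metadata:
      labels:
        app: log-agent
    spec:
      containers:
      - name: fluentd
        image: fluent/fluentd:v1.16-1   # assumed tag; pin whatever your agent uses
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          readOnly: true                # the agent only needs to read logs
      volumes:
      - name: varlog
        hostPath:
          path: /var/log                # directory on the node, not in the image
          type: Directory               # fail to start if the path does not exist
```

Because a DaemonSet places exactly one Pod on each eligible node, every node's `/var/log` is covered. No data needs to move between nodes, which is exactly where HostPath's node-local nature is acceptable.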

Similarly, the node agent components of storage plugins (such as CSI node plugins), one of the topics of this talk, must run on every node. There they mount remote storage on the node and operate on the Pods' Volume directories.

Of course, there are many other uses for it, and you can discover them yourself; I won’t elaborate further.

Thoughts

Although HostPath + NodeAffinity can achieve pseudo-persistence, seemingly acquiring PV capabilities, we do not recommend using it because this approach can easily fill up the host’s disk, ultimately leading to the current node becoming NotReady.

Moreover, due to cluster security considerations, we usually restrict HostPath mounting. After all, HostPath Volumes pose many security risks, and if not limited, users could potentially do anything to the Node.
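One way to enforce such a restriction, assuming Kubernetes 1.25 or later where Pod Security Admission is stable, is to label tenant namespaces with the `baseline` Pod Security Standard. That standard rejects Pods that declare `hostPath` volumes. The namespace name is hypothetical, and policy engines such as OPA Gatekeeper or Kyverno are alternative approaches.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-apps                                   # hypothetical tenant namespace
  labels:
    # Pod Security Admission: the "baseline" standard forbids hostPath
    # volumes for Pods created in this namespace.
    pod-security.kubernetes.io/enforce: baseline
    pod-security.kubernetes.io/enforce-version: latest
```

System namespaces such as `kube-system`, where node agents like the log collector above run, are left unlabeled so they can keep using HostPath.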

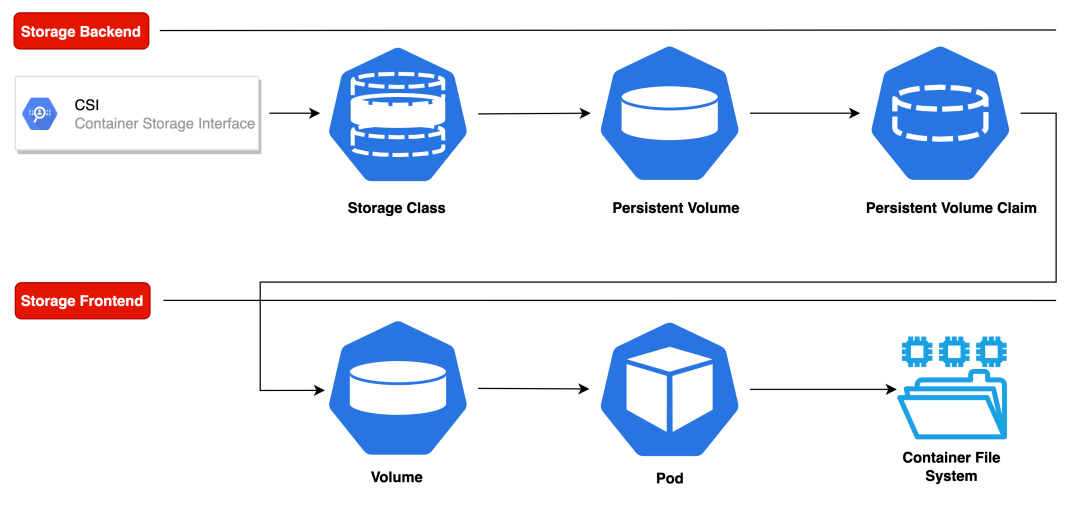

PV, PVC & Storage Class

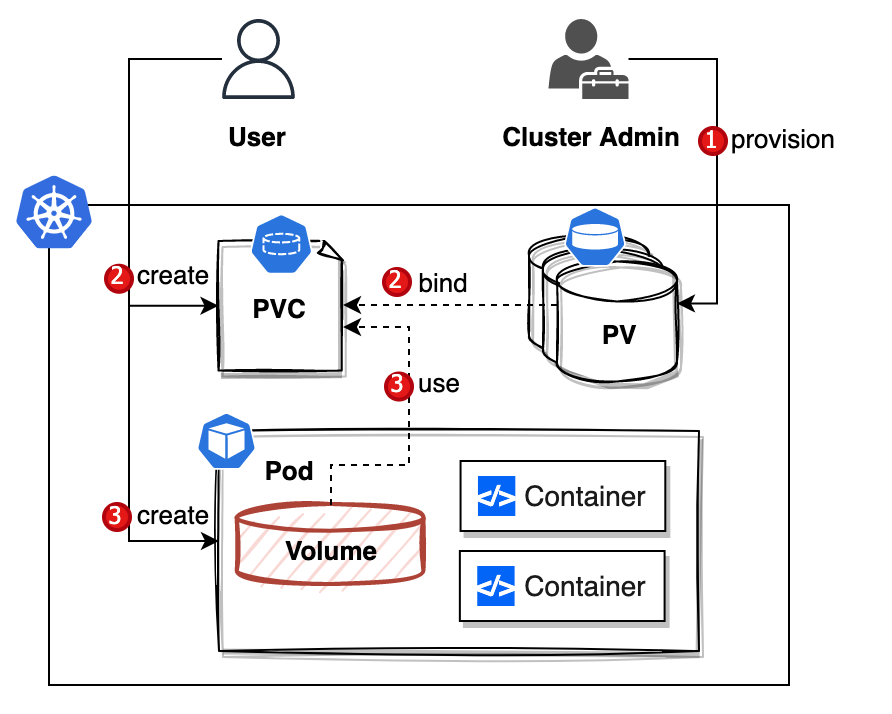

PV and PVC are two very important resources in the Kubernetes storage system, introduced mainly to achieve separation of responsibilities and decoupling.

Put simply, application developers do not need to care about the details of the storage infrastructure. They only need to focus on the storage their application requires.

There is another reason: as developers, we do not know which volume types are available in the cluster, and storage configuration is genuinely complex. Storage is a specialized field, and as the saying goes, leaving specialist work to specialists improves efficiency.

Let’s first look at the official interpretations of these two resources.

Terminology Explanation

#1. Persistent Volume (PV)

A Cluster-level resource created by cluster administrators or the External Provisioner component.

It describes a persistent storage data volume.

#2. Persistent Volume Claim (PVC)

A namespace-level resource created by developers or by the StatefulSet controller (from `volumeClaimTemplates`). Generic ephemeral volumes also create PVCs, whose lifecycle is tied to the Pod that owns them.

It describes the attribute requirements for Pods regarding persistent storage, such as volume capacity and access modes, providing an abstract representation of the underlying storage resources.

By using PVCs, Pods can be made portable across clusters.

#3. Storage Class

A Cluster-level resource created by cluster administrators, defining the capabilities for dynamic provisioning and configuring PVs. Storage Class provides a mechanism for dynamically allocating PVs, automatically creating PVs based on PVC requests, and achieving dynamic provisioning and management of storage.

It is worth mentioning the parameters field in StorageClass, which, although optional, is very useful for specifying parameters related to the storage class when developing a CSI Driver. For details, see: Secrets and Credentials[3]. The specific meanings of these parameters depend on the storage plugin and storage vendor used.
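Here is a sketch of a StorageClass that uses `parameters` for a CSI driver. The driver name `example.csi.vendor.com`, the `type` parameter, and the Secret name are hypothetical. The `csi.storage.k8s.io/provisioner-secret-*` keys are reserved keys read by the CSI external-provisioner, as described in the Secrets and Credentials page[3]. They tell the provisioner which Secret to pass to the driver when creating and deleting volumes.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-ssd                          # hypothetical class name
provisioner: example.csi.vendor.com       # hypothetical CSI driver name
parameters:
  # Driver-specific key: its meaning is defined entirely by the driver.
  type: ssd
  # Reserved keys understood by the CSI external-provisioner.
  csi.storage.k8s.io/provisioner-secret-name: storage-credentials
  csi.storage.k8s.io/provisioner-secret-namespace: kube-system
reclaimPolicy: Delete                     # delete the backing volume when the bound PVC is deleted
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer   # provision only once a Pod using the PVC is scheduled
```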

How to Understand?

For those who have just started with Kubernetes, it might not be easy to understand just from the terminology. I was confused at first, not knowing what these resources were, how to use them, and what scenarios they were suitable for.

No worries, next we will interpret these resources from different perspectives, hoping to help everyone establish the concepts. Finally, I will introduce their specific usages.

#1. From the Resource Dimension

The following analogy, whose source I do not know, fits well, so I quote it directly.

The `.spec` of a PV describes the storage device in detail. Think of a PV as a Node and a PVC as a Pod:

- A Pod consumes Node resources, while a PVC consumes PV resources.
- A Pod can request specific amounts of resources (such as CPU and memory), while a PVC can request a specific volume size and access modes.
- A PVC decouples the application from the specific storage behind it.

#2. From the Separation of Concerns Dimension

Application Developers

First, it should be clear that application developers interact only with PVC resources. Only we developers know roughly how much storage space our applications need.

In Kubernetes, PVC can be seen as an abstract interface for persistent storage. It describes the requirements and attributes for specific persistent storage, but does not handle the actual storage implementation. PV is responsible for implementing the specific storage and binding with PVC.

We only need to clarify our needs, such as the required storage size and access modes, without worrying about whether the storage is implemented via NFS or Ceph, etc. This is not something we need to care about.

This separation design allows us to focus on application development without worrying about the underlying storage technology choices and implementations, thereby improving our efficiency and flexibility.

Operations Personnel

PV resources are usually created by operations personnel, as only they know what storage is available within the cluster, which should be easy to understand.

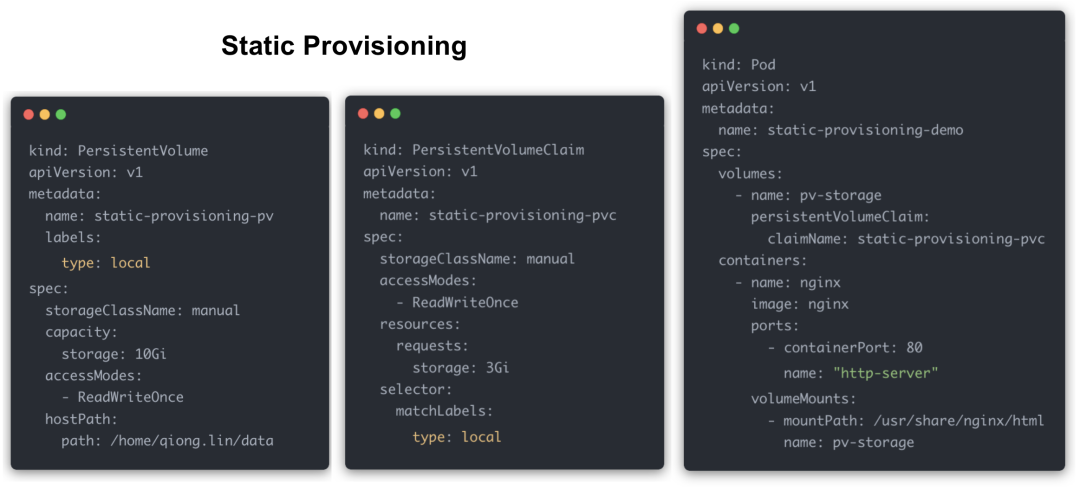

Provisioning

There are generally two ways to provide PVs within a cluster; let’s look at the pros and cons of these two methods.

#1. Static Provisioning

Usage Process

- First, a cluster administrator creates a static persistent volume backed by some underlying network storage.
- Then, the user creates a PVC to claim the persistent volume from the first step.
- Finally, the user creates a Pod that uses this PVC.
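The three steps can be sketched as three manifests. This assumes an existing NFS export; the server address, export path, and all resource names are hypothetical. Setting `storageClassName: ""` on both the PV and the PVC keeps the claim out of dynamic provisioning, so it can bind only to a pre-created PV with no class.

```yaml
# Step 1 (administrator): a PV backed by an existing NFS export.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-01                  # hypothetical name
spec:
  capacity:
    storage: 10Gi
  accessModes: ["ReadWriteMany"]
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is deleted
  storageClassName: ""             # no class: only claims that also request "" match
  nfs:
    server: 10.0.0.10              # hypothetical NFS server
    path: /exports/data            # hypothetical export path
---
# Step 2 (user): a claim that the control plane matches against existing PVs.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim
spec:
  storageClassName: ""             # opt out of the default StorageClass
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 10Gi
---
# Step 3 (user): a Pod that mounts the claim by name.
apiVersion: v1
kind: Pod
metadata:
  name: static-demo
spec:
  containers:
  - name: app
    image: busybox:1.36            # assumed image
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: data-claim
```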

Thoughts

The advantages of this method are very obvious; it can be said that all storage resources within the cluster are under the control of operations.

However, the process of providing static PVs, where PV resources must be created with the participation of operations personnel, also brings some issues:

- It's fine for small clusters. In large production environments, however, the number of application instances is unpredictable and may require thousands of PVs. This manual, repetitive work is inefficient and painful for administrators.
- This method also easily leads to inefficient storage usage:
  - either the provisioned capacity is insufficient, limiting the application;
  - or it is over-provisioned, wasting storage.

So how to achieve automation? This relies on the concept of Storage Class, which introduces dynamic storage configuration processes.

#2. Dynamic Provisioning

Usage Process

In this process, no administrator needs to take part in creating individual volumes:

- When a user creates a PVC, the corresponding Provisioner automatically creates a PV and binds it to the PVC. How is the Provisioner found? Through the PVC's `storageClassName`, which names a StorageClass whose `provisioner` field identifies the Provisioner.
- The user then creates a Pod that uses this PVC. This step is the same as in static provisioning.
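From the user's side, dynamic provisioning needs only a PVC that names a StorageClass. This sketch assumes a class called `fast-ssd` already exists in the cluster; the class and claim names are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data                   # hypothetical name
spec:
  storageClassName: fast-ssd       # assumed existing class; its provisioner creates the PV
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 5Gi
```

After `kubectl apply`, `kubectl get pvc app-data` should eventually report `Bound` together with a PV the provisioner created on the fly. If the class uses `volumeBindingMode: WaitForFirstConsumer`, the claim stays `Pending` until a Pod that uses it is scheduled.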

Thoughts

The automated creation of PVs can be said to completely change the way deployments work.

It not only frees operations personnel but also significantly aids the advancement of DevOps.

PV and PVC Binding Conditions

- ✔️ StorageClass: the PV and PVC must have the same `storageClassName`.
- ✔️ Access Modes: the PV must support the access modes requested by the PVC. Access modes define how Pods can access the volume.
- ✔️ Capacity: the PVC's requested capacity cannot exceed the PV's capacity. The PV must be large enough to satisfy the PVC's request.
- ✔️ Selector: a PV carries labels, and a PVC can specify a label selector. If it does, the PVC binds only to PVs whose labels match that selector.

When these binding conditions are met, Kubernetes will automatically bind the PVC to the corresponding PV, thus achieving the allocation and use of persistent storage.
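The four conditions can be seen side by side in a pre-created PV and a claim that selects it. All names and labels here are hypothetical. `manual` is just a class name that both sides agree on; no StorageClass object with that name needs to exist. The `hostPath` backing is for a single-node demo only, given the caveats discussed earlier.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-ssd-20g                 # hypothetical name
  labels:
    tier: ssd                      # labels the claim's selector will match
spec:
  storageClassName: manual         # condition 1: same class name on both sides
  accessModes: ["ReadWriteOnce"]   # condition 2: includes the mode the claim requests
  capacity:
    storage: 20Gi                  # condition 3: at least the requested size
  hostPath:                        # demo-only backing store
    path: /mnt/pv-ssd-20g
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: claim-ssd
spec:
  storageClassName: manual
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 10Gi                # smaller than 20Gi, so the PV qualifies
  selector:
    matchLabels:
      tier: ssd                    # condition 4: must match the PV's labels
```

The claim receives the whole 20Gi volume even though it asked for 10Gi, which is the over-provisioning risk of static provisioning mentioned earlier. A PVC with a non-empty selector also cannot have a PV dynamically provisioned for it.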

Thoughts

In Kubernetes, PVs and PVCs have a one-to-one relationship: a PV can be bound to only one PVC. However, multiple Pods can share the same PVC and thus the persistent storage behind it. In production, though, we rarely run bare Pods. A Pod can stop serving for many reasons (for example, eviction from its node), and nothing recreates it automatically.

Generally, we use a Deployment, which manages multiple Pod replicas. Suppose a Deployment runs 3 Pod replicas that all mount the same PVC. If the volume is mounted read-only, there is no problem.
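Here is a sketch of that read-only sharing scenario. It assumes a PVC named `site-content` already exists and is bound; that name, the Deployment name, and the `nginx:1.25` image are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: static-site                # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: static-site
  template:
    metadata:
      labels:
        app: static-site
    spec:
      containers:
      - name: web
        image: nginx:1.25          # assumed image
        volumeMounts:
        - name: content
          mountPath: /usr/share/nginx/html
          readOnly: true           # each replica only reads the shared files
      volumes:
      - name: content
        persistentVolumeClaim:
          claimName: site-content  # hypothetical PVC shared by all 3 replicas
          readOnly: true
```

If the replicas can land on different nodes, the underlying PV must support `ReadOnlyMany` or `ReadWriteMany`. A `ReadWriteOnce` volume can be mounted by only one node at a time.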

However, if that PVC has read-write permissions and is shared by 3 Pod replicas, it may lead to data inconsistency issues. For example, you may encounter a Race Condition: if two or more Pods write to the same file simultaneously, data overwriting or inconsistency may occur.

How can this be solved? One answer is the StatefulSet workload, which also runs multiple replicas.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  ...
  replicas: 3
  template:
    ...
  volumeClaimTemplates: # Define templates for creating PVCs associated with this StatefulSet
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "my-storage-class"
      resources:
        requests:
          storage: 1Gi
```

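The difference is that `volumeClaimTemplates` makes the StatefulSet controller create a separate PVC for each replica. Each PVC is named `<template-name>-<statefulset-name>-<ordinal>`, so the example above yields `www-web-0`, `www-web-1`, and `www-web-2`. Each Pod keeps its own PVC even when it is rescheduled.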

Of course, actual scenarios are often more complex, especially for distributed applications, where master-slave relationships need to consider the characteristics of Pod identification and ordering provided by StatefulSet.

Next, let’s look at the last way to create a PVC: the generic ephemeral volume.

It is important to note that a Generic Ephemeral Volume lives only as long as its Pod. The PVC is created together with the Pod and named `<pod-name>-<volume-name>`, so the example below produces `my-app-scratch-volume`. The PVC is owned by the Pod and is deleted when the Pod is deleted; container restarts within the Pod do not affect it. Generic Ephemeral Volumes therefore suit temporary data that must outlive individual containers but not the Pod, such as cache files or temporary configuration files.

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: my-app
spec:
  containers:
  - name: my-frontend
    volumeMounts:
    - mountPath: "/scratch"
      name: scratch-volume
    ...
  volumes:
  - name: scratch-volume
    ephemeral:
      volumeClaimTemplate:
        metadata:
          labels:
            type: my-frontend-volume
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: "scratch-storage-class"
          resources:
            requests:
              storage: 1Gi
```

Default Storage Class

Administrators can mark one StorageClass in the cluster as the Default Storage Class. It is used for any PVC that does not explicitly specify a `storageClassName`.
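The default is just an annotation on the StorageClass object. A class can carry it from the start, as in this sketch; the class name and CSI driver name are hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard                       # hypothetical class name
  annotations:
    # Marks this class as the cluster default for PVCs that omit storageClassName.
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: example.csi.vendor.com    # hypothetical CSI driver name
```

A PVC that sets `storageClassName: ""` explicitly still opts out of the default. For an existing class, the same annotation can be applied with `kubectl patch`: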

```bash
kubectl patch storageclass <STORAGE-CLASS-NAME> -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```

Container Storage Interface

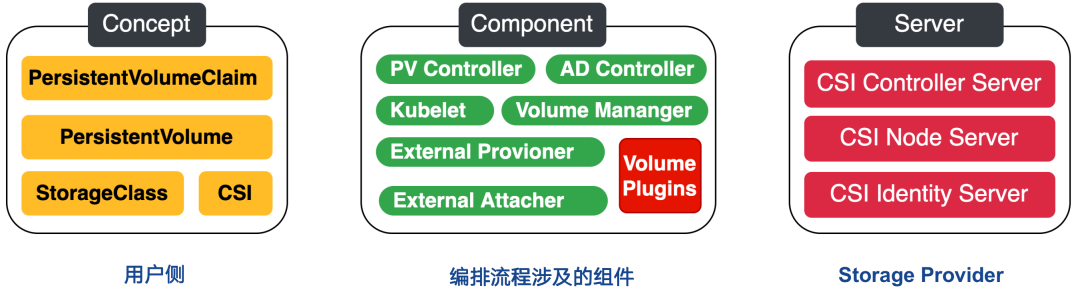

Although the Kubernetes platform itself supports various storage plugins, these plugins often cannot meet all requirements due to growing user needs. For example, when clients require our PaaS platform to integrate with a specific domestic storage, how should we respond?

Moreover, most of these plugins are In-Tree: their code is part of Kubernetes itself. Patching a plugin therefore means going through the Kubernetes release process, which complicates testing and maintenance.

This brings us to the Container Storage Interface (CSI), which essentially defines a set of protocol standards. Third-party storage plugins can connect to Kubernetes as long as they implement these unified interfaces, and users do not need to touch the core Kubernetes code.

The integration is carried out jointly by the container orchestration system (CO), such as Kubernetes, and the storage provider (SP). The CO communicates with CSI plugins over gRPC.
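From the user's side, a CSI-backed volume looks like any other PV. The only storage-specific part is the `csi` block naming the driver. Here is a sketch of a statically provisioned PV for a hypothetical driver `example.csi.vendor.com`. The volume handle and attributes are placeholders whose meaning the driver itself defines.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: csi-pv-demo                   # hypothetical name
spec:
  capacity:
    storage: 50Gi
  accessModes: ["ReadWriteOnce"]
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  csi:
    driver: example.csi.vendor.com    # must match the name the CSI driver registers
    volumeHandle: vol-0123456789      # hypothetical ID of the volume in the backend
    fsType: ext4
    volumeAttributes:                 # opaque key/value pairs passed to the driver
      tier: gold                      # hypothetical attribute
```

When a Pod uses this volume, Kubernetes components call the driver's gRPC services (for example, the kubelet calls `NodePublishVolume` on the node plugin). Kubernetes itself needs no vendor-specific code.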

CSI is quite complex, involving many components. I will write a separate article later to introduce the working principles of CSI and the challenges encountered.

In Conclusion

By understanding these basic concepts, we can correctly configure and manage storage resources in Kubernetes to meet applications’ persistent storage requirements.

In the next issue, I will continue to share with you the implementation methods of Kubernetes persistent storage processes.

References

[1] Types of Persistent Volumes: https://kubernetes.io/docs/concepts/storage/persistent-volumes/#types-of-persistent-volumes

[2] In-Tree Volume: https://github.com/kubernetes/kubernetes/tree/master/pkg/volume

[3] Secrets and Credentials: https://kubernetes-csi.github.io/docs/secrets-and-credentials.html

END