
Artificial intelligence (AI) is not a new concept; its history goes back to the 1950s. Since the start of the 21st century, driven by rapid growth in computing power and the swift development of big data, cloud computing, and deep learning, AI has made breakthrough progress in image recognition, speech recognition, natural language processing, robotics, and autonomous driving. In recent years, terms such as “Edge AI,” “Embedded AI,” and “On-device AI” have become hot topics in the industry.

Although the terminology differs, these terms essentially refer to the same thing: “embedding AI algorithms on the device side to enable smart, automated, and efficient capabilities.” The trend is most evident in the AI solutions of the world’s leading microcontroller (MCU) manufacturers.

Embedding AI Deeply into the Edge

2023 marks a significant turning point in the development of artificial intelligence. On the technical side, large models and generative AI have triggered further changes in computing paradigms, industry momentum, and the way computing services are delivered. On the application side, enterprises are accelerating their shift from business digitization to business intelligence. On the chip side, AI computing is spreading from server GPUs to edge MPUs and lightweight end-side MCUs. In other words, as demand for AI grows rapidly, a complete “cloud-edge-end” AI architecture has already taken shape.

Developers of end-side products and applications are increasingly adopting AI and machine learning (AI/ML) algorithms to recognize complex patterns, analyze data, and make decisions based on the results. As the devices that directly control physical actions, MCUs should not be left out of this shift. Adoption of AI/ML is in fact growing quickly: whereas AI servers number in the millions to tens of millions, shipments of end-side embedded AI devices are expected to reach 2.5 billion units by 2030, indicating huge market potential.

By adding intelligence and placing it as close as possible to critical operations, edge AI can reduce latency and improve the accuracy of real-time operations, making human-machine interaction, status monitoring, and predictive maintenance in smart factories smarter and more energy-efficient. In new energy vehicles, it can optimize battery management systems and adapt vehicle settings to each driver’s habits for energy-efficient driving. In smart cities, it can draw on networks of millions of smart sensors and IoT nodes to improve monitoring and resource management, serve citizens, and enhance logistics with autonomous drones and vehicles.

Cross-industry Integration is Challenging

Many network-edge applications that could benefit from AI/ML must operate under extremely tight power budgets, and many devices must run for months or even years on a single charge or solely on harvested and stored energy. At the same time, AI models have evolved rapidly over the past decade, with a steady stream of innovations in classification models, and each new implementation requires optimization of both hardware and algorithms.

Meanwhile, the limited performance of MCU hardware, the high complexity of AI software, stringent real-time requirements in industrial applications, strict energy limits, and high data-security requirements mean that implementing embedded AI on MCUs requires teams with deep AI expertise as well as strong embedded software and hardware skills.

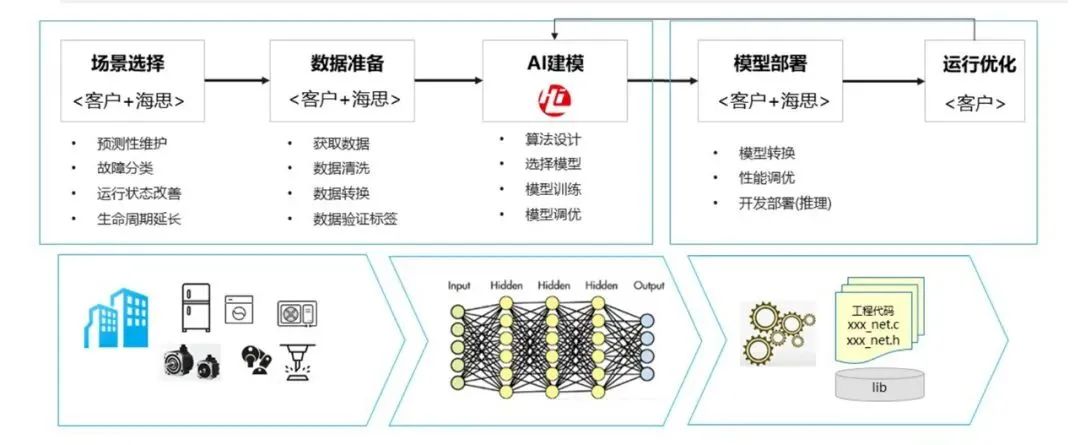

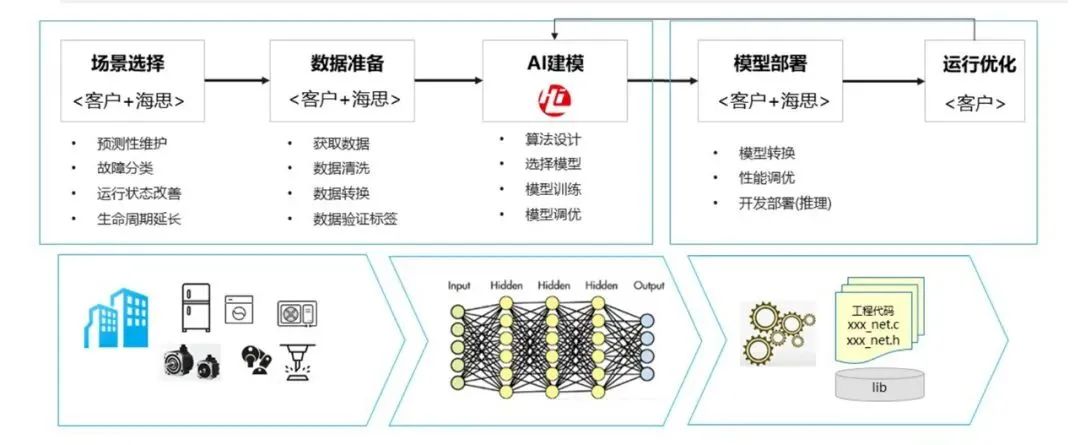

On the one hand, development teams must work closely with the application scenarios. Only by combining ground-truth data from real scenarios with specialized, customized solutions from embedded AI teams can real problems be solved and value created.

On the other hand, AI models must be deployable to MCUs quickly. General-purpose AI models are often not designed for embedded use, and there is a vast array of AI development tools. Because MCUs typically have very limited RAM, Flash, and CPU power, choosing suitable models and tools for a specific problem and deploying those models onto resource-constrained MCUs is a complex engineering task.
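
As a rough illustration of the resource question, the following C sketch estimates whether a small model fits a given MCU. It assumes weights are stored in Flash, that only the current layer’s input and output activations must be in RAM at the same time, and it ignores code size, stack, and runtime overhead; `LayerInfo`, `flash_bytes`, `peak_ram_bytes`, and `model_fits` are hypothetical names used only for this example.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical per-layer summary: parameter count and the number of
 * input/output activation elements. */
typedef struct {
    size_t params;
    size_t in_elems;
    size_t out_elems;
} LayerInfo;

/* Flash needed for weights, assuming all parameters are stored in Flash. */
size_t flash_bytes(const LayerInfo *layers, size_t n, size_t bytes_per_weight) {
    size_t total = 0;
    for (size_t i = 0; i < n; ++i) {
        total += layers[i].params * bytes_per_weight;
    }
    return total;
}

/* Peak activation RAM, assuming only one layer's input and output
 * buffers are alive at any moment. */
size_t peak_ram_bytes(const LayerInfo *layers, size_t n, size_t bytes_per_act) {
    size_t peak = 0;
    for (size_t i = 0; i < n; ++i) {
        size_t live = (layers[i].in_elems + layers[i].out_elems) * bytes_per_act;
        if (live > peak) {
            peak = live;
        }
    }
    return peak;
}

/* True if both estimates fit within the given budgets. */
bool model_fits(const LayerInfo *layers, size_t n,
                size_t bytes_per_weight, size_t bytes_per_act,
                size_t flash_budget, size_t ram_budget) {
    return flash_bytes(layers, n, bytes_per_weight) <= flash_budget &&
           peak_ram_bytes(layers, n, bytes_per_act) <= ram_budget;
}
```

For example, a 64-32-8 fully connected network has 2,344 parameters: 9,376 bytes of Flash in float32 but 2,344 bytes in int8, which is one reason model compression matters so much on small parts.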

A²MCU: Empowering Industries with Embedded AI

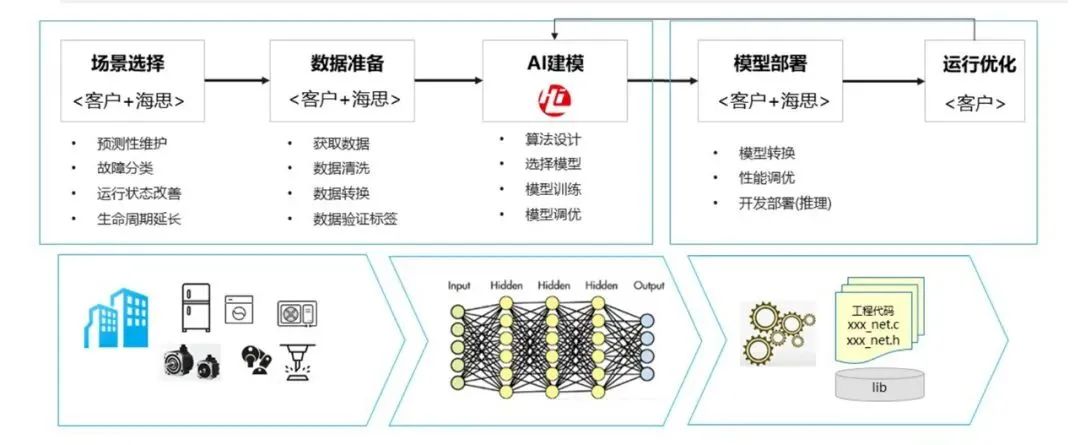

As a chip company committed to “enabling intelligent terminals for all things connected, serving as the foundation for digitalization, networking, intelligence, and low carbon industries,” HiSilicon has built its A²MCU solution around an ultra-lightweight AI framework, highly optimized inference performance, and fast, convenient deployment, giving MCU customers new options for exploring intelligent applications.

The name A² symbolizes “two As multiplied together, creating an exponential accumulation effect.” One A stands for “Application Specific,” reflecting HiSilicon’s customer-centric approach of closely matching chip design to customer application scenarios; the other A stands for applying “lightweight embedded AI technology” in MCUs and embedded systems. Notably, unlike MCU solutions with a built-in NPU, HiSilicon’s solution focuses on running both AI training and inference on the MCU’s own RISC-V CPU, within its limited computing power.

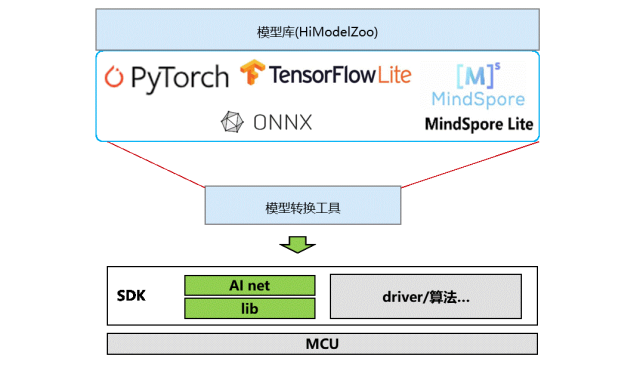

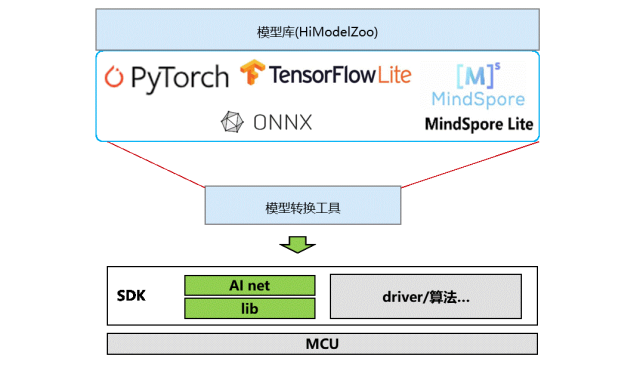

HiSilicon’s full range of MCUs provides an ultra-lightweight AI framework that meets AI training and inference needs across multiple scenarios. It can quickly convert a variety of models into code and import them into projects, so developers can deploy products quickly and conveniently.

1) Simplified Framework: Once converted into network-layer runtime code, AI models deployed on MCUs call the RISC-V core’s optimized operator library directly, eliminating the need for heavyweight framework components such as model parsers. Because the open RISC-V architecture allows custom instruction-set extensions, operator libraries can be optimized more effectively, which is a key advantage of RISC-V over other cores.
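
To make the “no model parser” point concrete, here is a minimal C sketch of what converter-generated network code might look like: weights are compiled in as constant arrays and the topology is a fixed sequence of operator calls. The operator names (`op_dense_f32`, `op_relu_f32`), the tiny 2-3-1 network, and its weights are hypothetical; the portable loops stand in for the RISC-V-optimized kernels the article describes.

```c
#include <stddef.h>

/* Hypothetical operator-library entry points. On a real target these would
 * be hand-optimized kernels; portable C loops are used here. */
static void op_dense_f32(const float *in, size_t n_in,
                         const float *w, const float *b,
                         float *out, size_t n_out) {
    for (size_t o = 0; o < n_out; ++o) {
        float acc = b[o];
        for (size_t i = 0; i < n_in; ++i) {
            acc += w[o * n_in + i] * in[i];   /* w is n_out x n_in, row-major */
        }
        out[o] = acc;
    }
}

static void op_relu_f32(float *x, size_t n) {
    for (size_t i = 0; i < n; ++i) {
        if (x[i] < 0.0f) x[i] = 0.0f;
    }
}

/* What a converter might emit: weights as const arrays (placed in Flash)
 * and the network as a fixed call sequence. Values are illustrative. */
static const float kW1[3 * 2] = { 1.0f, -1.0f,  0.5f, 0.5f,  -1.0f, 2.0f };
static const float kB1[3]     = { 0.0f, 0.0f, -1.0f };
static const float kW2[1 * 3] = { 1.0f, 1.0f, 1.0f };
static const float kB2[1]     = { 0.0f };

void model_run(const float in[2], float out[1]) {
    float hidden[3];
    op_dense_f32(in, 2, kW1, kB1, hidden, 3);
    op_relu_f32(hidden, 3);
    op_dense_f32(hidden, 3, kW2, kB2, out, 1);
}
```

Because the call sequence is fixed at build time, nothing has to be parsed or allocated at runtime; switching to a faster kernel only means changing the operator implementation.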

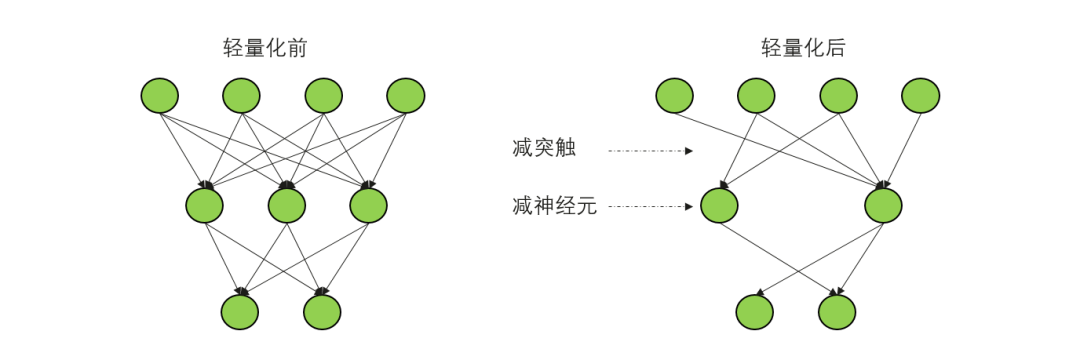

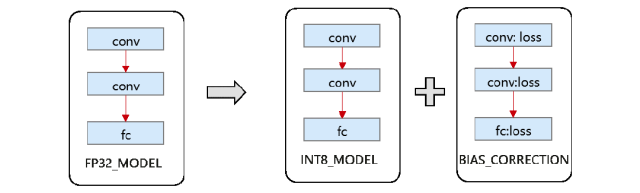

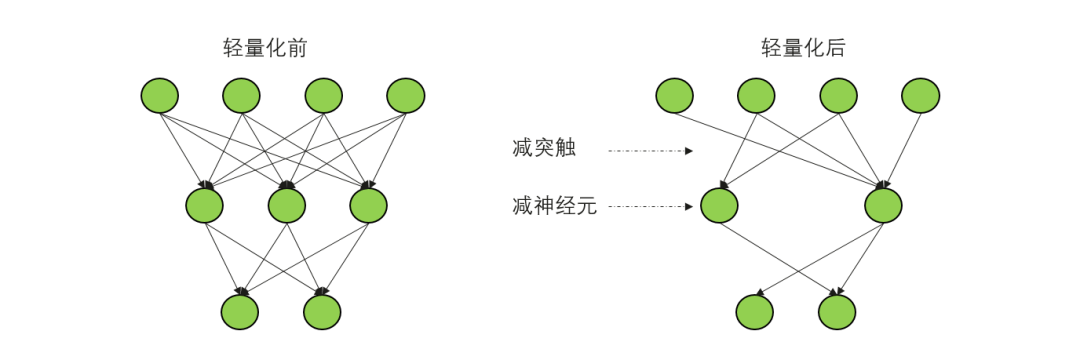

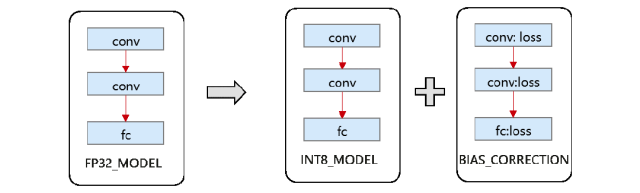

2) Extreme Performance: The goal is a highly streamlined training and inference process that still delivers the intended benefits for the scenario. Techniques include, but are not limited to: optimizing the training model to reduce memory reads/writes and computational load; post-training quantization to make models smaller and inference faster; and lightweight operators, memory optimization, and in-depth performance tuning, such as optimizing operator libraries, pre-rearranging operator data, reusing memory, using algorithms that reduce multiplications and memory-access overhead, and balancing computation against memory access.
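
Memory reuse, one of the techniques listed above, can be illustrated with a common “ping-pong” buffer scheme. The C sketch below is a generic illustration rather than HiSilicon’s implementation: the `Dense` layer type, `run_chain`, and the `MAX_WIDTH` limit of 16 are hypothetical, and only fully connected layers without activations are handled.

```c
#include <stddef.h>
#include <string.h>

#define MAX_WIDTH 16  /* assumed widest layer in this sketch */

typedef struct {
    size_t n_in, n_out;
    const float *w;   /* n_out x n_in, row-major */
    const float *b;   /* n_out */
} Dense;

/* Two scratch buffers shared by all layers: layer k reads one and writes
 * the other, so activation RAM is 2 * MAX_WIDTH floats regardless of depth. */
static float g_buf[2][MAX_WIDTH];

/* Returns 0 on success, -1 if the chain is empty or a layer is too wide. */
int run_chain(const Dense *layers, size_t n_layers,
              const float *in, float *out) {
    if (n_layers == 0 || layers[0].n_in > MAX_WIDTH) return -1;
    memcpy(g_buf[0], in, layers[0].n_in * sizeof(float));
    int cur = 0;
    for (size_t k = 0; k < n_layers; ++k) {
        const Dense *L = &layers[k];
        if (L->n_in > MAX_WIDTH || L->n_out > MAX_WIDTH) return -1;
        const float *src = g_buf[cur];
        float *dst = g_buf[cur ^ 1];      /* write into the other buffer */
        for (size_t o = 0; o < L->n_out; ++o) {
            float acc = L->b[o];
            for (size_t i = 0; i < L->n_in; ++i) {
                acc += L->w[o * L->n_in + i] * src[i];
            }
            dst[o] = acc;
        }
        cur ^= 1;                         /* output becomes next input */
    }
    memcpy(out, g_buf[cur], layers[n_layers - 1].n_out * sizeof(float));
    return 0;
}
```

With this scheme, peak activation memory depends on the widest layer rather than on the number of layers.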

Lightweight model pruning and compression

Post-training quantization, making models smaller and inference faster
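
Post-training quantization can take many forms; one of the simplest is symmetric per-tensor int8 quantization, sketched below in C. This is a generic technique, not necessarily the scheme used in A²MCU, and `quantize_int8` and `dot_int8` are hypothetical names. Each float weight is mapped to an integer in [-127, 127] with a single scale factor, and dot products are accumulated in 32-bit integers and rescaled once at the end.

```c
#include <stddef.h>
#include <stdint.h>

/* Symmetric per-tensor quantization: w[i] ~= scale * q[i], q in [-127, 127].
 * Returns the scale; a scale of 0 means the tensor was all zeros. */
float quantize_int8(const float *w, size_t n, int8_t *q) {
    float max_abs = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        float a = w[i] < 0.0f ? -w[i] : w[i];
        if (a > max_abs) max_abs = a;
    }
    float scale = max_abs / 127.0f;
    for (size_t i = 0; i < n; ++i) {
        long v = 0;
        if (scale > 0.0f) {
            float r = w[i] / scale;
            v = (long)(r >= 0.0f ? r + 0.5f : r - 0.5f);  /* round half away from zero */
        }
        if (v > 127) v = 127;     /* clamp against rounding overshoot */
        if (v < -127) v = -127;
        q[i] = (int8_t)v;
    }
    return scale;
}

/* Integer dot product accumulated in int32, rescaled once at the end. */
float dot_int8(const int8_t *a, float sa, const int8_t *b, float sb, size_t n) {
    int32_t acc = 0;
    for (size_t i = 0; i < n; ++i) {
        acc += (int32_t)a[i] * (int32_t)b[i];
    }
    return (float)acc * sa * sb;
}
```

Storing weights as int8 cuts their size to a quarter of float32, and the inner loop uses only integer multiply-accumulate operations, which are cheap on MCU cores.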

3) Easy Development and Deployment: HiSilicon’s A²MCU embedded AI solution can convert models developed with TensorFlow Lite, PyTorch, MindSpore, and other frameworks into code that can be quickly and conveniently imported into a project.
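
The last step of a “model to code” flow is typically emitting weights as C source that can be compiled into the project. The C sketch below shows only that step for one already-quantized tensor; `emit_c_array` is a hypothetical helper, and real converters for TensorFlow Lite, PyTorch, or MindSpore models also emit layer code, tensor shapes, and quantization parameters.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* Writes "static const int8_t <name>[<n>] = {...};\n" into buf.
 * Returns the number of characters written (excluding the terminating NUL),
 * or -1 if buf is too small. */
int emit_c_array(const char *name, const int8_t *q, size_t n,
                 char *buf, size_t cap) {
    size_t used = 0;
    int k = snprintf(buf, cap, "static const int8_t %s[%zu] = {", name, n);
    if (k < 0 || (size_t)k >= cap) return -1;
    used = (size_t)k;
    for (size_t i = 0; i < n; ++i) {
        /* Separator before every element except the first. */
        k = snprintf(buf + used, cap - used, "%s%d", i ? ", " : "", q[i]);
        if (k < 0 || (size_t)k >= cap - used) return -1;
        used += (size_t)k;
    }
    k = snprintf(buf + used, cap - used, "};\n");
    if (k < 0 || (size_t)k >= cap - used) return -1;
    used += (size_t)k;
    return (int)used;
}
```

For example, the tensor {127, -50, 0} named `kW1` becomes `static const int8_t kW1[3] = {127, -50, 0};`, ready to be placed in a generated source file.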

Additionally, HiSilicon’s A² solution has achieved innovations in OS and algorithm integration:

4) Deep OS Integration: Recognizing the value of tightly integrating chips with operating systems, HiSilicon’s A² solution co-optimizes MCU chips and the OS for high-performance real-time computing. To guarantee real-time performance, most existing MCU solutions run without an operating system. Without basic scheduling, however, code complexity and maintenance effort rise sharply once MCU code grows beyond tens of thousands of lines. Working with openEuler and deeply optimizing A²MCU together with UniProton, HiSilicon has developed a hybrid deployment solution that fits within limited MCU resources, requiring only 4KB of RAM and 4KB of Flash. With this hybrid deployment, existing high-priority real-time tasks keep their priority and real-time behavior, while tasks without strict real-time requirements are managed by a multi-threaded scheduler. This simplifies development, eases subsequent maintenance and modification, and makes it easier to port applications across chips.
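
The hybrid idea described above, hard real-time code left untouched while everything else gets a scheduler, can be sketched in C as follows. This is a simplified, hypothetical illustration, not the UniProton API: `timer_isr`, `sched_add`, `sched_poll`, and `telemetry_task` are invented names, and the scheduler here is cooperative and periodic, whereas a real RTOS adds preemptive threads, priorities, and synchronization primitives.

```c
#include <stddef.h>
#include <stdint.h>

typedef void (*TaskFn)(void);

typedef struct {
    TaskFn fn;
    uint32_t period_ticks;
    uint32_t next_due;
} Task;

#define MAX_TASKS 4
static Task g_tasks[MAX_TASKS];
static size_t g_num_tasks;
static volatile uint32_t g_ticks;   /* advanced only by the timer ISR */

/* Highest-priority timer interrupt: the existing hard real-time control
 * code would run here first, unchanged, and is never delayed by tasks. */
void timer_isr(void) {
    /* run_control_loop();  <- hypothetical existing real-time code */
    g_ticks++;
}

/* Registers a periodic background task; returns 0 on success. */
int sched_add(TaskFn fn, uint32_t period_ticks) {
    if (g_num_tasks >= MAX_TASKS || period_ticks == 0) return -1;
    g_tasks[g_num_tasks++] = (Task){ fn, period_ticks, g_ticks + period_ticks };
    return 0;
}

/* One pass of the background loop: run every task whose due time has come. */
void sched_poll(void) {
    uint32_t now = g_ticks;
    for (size_t i = 0; i < g_num_tasks; ++i) {
        Task *t = &g_tasks[i];
        if ((int32_t)(now - t->next_due) >= 0) {   /* tolerant of tick wrap-around */
            t->next_due += t->period_ticks;
            t->fn();
        }
    }
}

/* Example non-critical task (hypothetical). */
uint32_t g_telemetry_runs;
void telemetry_task(void) { g_telemetry_runs++; }
```

On real hardware, `timer_isr` would be installed as the highest-priority interrupt and the main loop would call `sched_poll()` repeatedly, so background tasks only use CPU time the control loop leaves free.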

5) Industry Customization: As industry applications become increasingly segmented, the advantages of industry-specific MCUs are clear. In motor control, power control, and automotive domain control, specialized MCUs dominate because they meet the higher energy-efficiency and power-density requirements of energy products. In addition, the rapid growth of China’s strong industries, such as home appliances, industrial equipment, energy, and new energy vehicles, has driven rapid growth among local chip companies. According to market statistics, China’s MCU market was worth approximately 40 billion yuan in 2022, with domestic vendors accounting for about one quarter of sales, which points to large growth potential in consumer electronics, home appliances, industry, and energy. HiSilicon’s A² solution emphasizes optimizing and customizing algorithms for specific scenarios, and the “A²MCU, making air conditioning more energy-efficient with use” solution is only the first example. It uses embedded AI algorithms to learn complex operating conditions from the air conditioner’s environmental, operational, and target parameters, improving overall energy efficiency across the operating cycle. By tightly combining the business scenario with reinforcement learning models, it gives air conditioning products a differentiated energy-saving advantage, reducing energy consumption by 16% during the temperature adjustment phase.
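
The article does not describe the reinforcement learning model used in the air conditioning solution. As a generic illustration of the idea only, the C sketch below shows a tabular Q-learning update, in which a controller learns the long-term value of each action in each operating state from observed rewards. The state and action discretization (`N_STATES`, `N_ACTIONS`), the `QTable` type, and the function names are hypothetical; in an energy-saving setting, the reward might, for example, penalize both energy use and deviation from the target temperature.

```c
#define N_STATES  5   /* hypothetical: binned (room - target) temperature error */
#define N_ACTIONS 3   /* hypothetical: compressor level low / mid / high */

typedef struct {
    float q[N_STATES][N_ACTIONS];
    float alpha;   /* learning rate */
    float gamma;   /* discount factor */
} QTable;

static float max_q(const QTable *t, int s) {
    float m = t->q[s][0];
    for (int a = 1; a < N_ACTIONS; ++a) {
        if (t->q[s][a] > m) m = t->q[s][a];
    }
    return m;
}

/* Tabular Q-learning update:
 * Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)). */
void q_update(QTable *t, int s, int a, float r, int s_next) {
    float target = r + t->gamma * max_q(t, s_next);
    t->q[s][a] += t->alpha * (target - t->q[s][a]);
}

/* Greedy action for deployment (exploration omitted in this sketch). */
int q_best_action(const QTable *t, int s) {
    int best = 0;
    for (int a = 1; a < N_ACTIONS; ++a) {
        if (t->q[s][a] > t->q[s][best]) best = a;
    }
    return best;
}
```

A table of this size is tiny: the 5×3 array of floats occupies 60 bytes, so learning can plausibly run on the MCU itself.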

In summary, moving AI data processing from the cloud to the edge requires a range of innovative semiconductor technologies, including ultra-low-power technologies and system-level methods. Together, these technologies reduce system power consumption and bandwidth requirements while further improving the computational efficiency of a new generation of microcontrollers designed for edge devices. For developers, however, this means striking a “critical balance” among performance, efficiency, safety, and total system cost from the earliest stage of an MCU-based design. Without solid technical foundations and rich application experience, innovating and creating incremental value in low-power AI scenarios is no easy task.
