· Abstract · As digital transformation accelerates and cyber threats grow sharply more complex, security operations are shifting from single-product, rule-driven defense to data-driven, AI-empowered intelligent defense. Major cybersecurity vendors have launched their own large models for the security domain, such as Qi An Xin’s QAX-GPT security robot, Sangfor’s Security GPT, 360’s Security Agent, Tianrongxin’s (Topsec) TopASK model, and Anheng’s Hengnao, all of which are shaping a new paradigm of intelligent security operations characterized by “model-driven” and “AI against AI”.

I have recently been asked about AI agents in many discussions. This article explains how to define and understand AI agents in the cybersecurity industry, and covers multi-agent systems and their specific applications in AI+SOC.

▌1. What is an AI Agent?

In today’s rapidly developing AI landscape, the term “Agent” appears frequently in technology forums, industry reports, and startup projects. Yet when we try to pin down what an “Agent” is, the concept turns out to be less clear than we might imagine. Many people would say: “Isn’t it just calling the API of a large model?” But that is only a partial understanding.

Before looking at how to define an “Agent” in the cybersecurity industry, let’s first clarify what an Agent actually is.

1. Agent ≠ API Call of a Large Model

Many people mistakenly believe that as long as a large language model (LLM) calls external tools or interfaces (such as weather queries or database access), it qualifies as an Agent. However, this is not the case.

While such operations are part of an Agent’s functionality, they do not encompass the full meaning of an Agent. If we only focus on the level of “API calls”, it would be like defining a car as “something that can move when the accelerator is pressed”, ignoring the existence of core components such as the steering wheel, engine, and navigation system.

2. Etymology: Agent Means “Proxy”

From the original English term, Agent primarily means “representative” or “proxy”. This emphasizes the ability of an entity to act on behalf of someone else.

In the context of artificial intelligence, we can understand it as follows: an Agent is a system that can simulate human behavior and accomplish tasks by using tools or performing actions.

In other words, the essence of an Agent is to enable machines to think, decide, and act like humans. It does not merely passively answer questions but actively plans, remembers, reasons, and interacts with the environment.

3. Classic Definition Interpretation: Perspectives from OpenAI and Fudan University

Currently, there are differences in the definitions of Agents in academia and industry, but they all revolve around the core concept of “simulating human capabilities”.

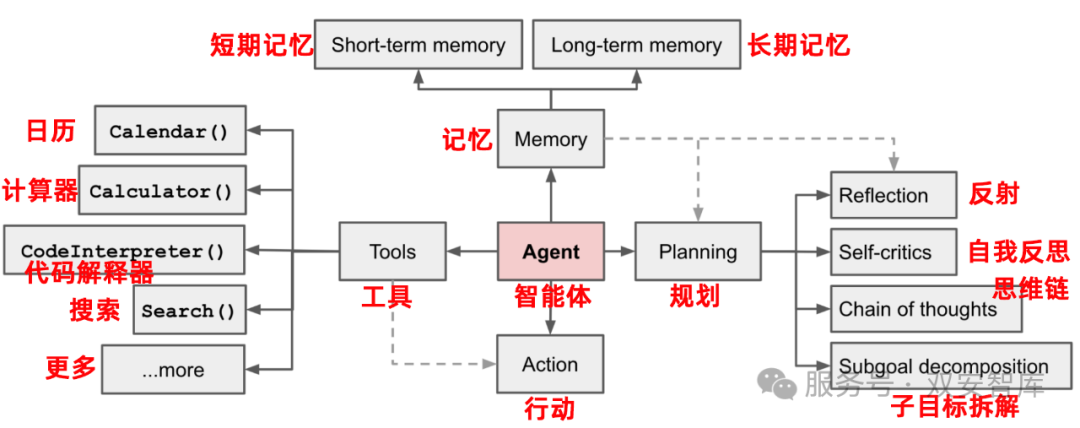

✅ OpenAI’s Definition: Agent = LLM + Planning + Memory + Tool Use

Lilian Weng, then at OpenAI, proposed a now-classic structured definition in her blog:

Agent = Large Model (LLM) + Planning + Memory + Tool Use

Figure: Autonomous Agent System Driven by LLM (OpenAI)

(1) LLM (Large Model): As the brain of the agent, responsible for understanding questions and generating responses;

(2) Planning:

Sub-goals and decomposition: The agent breaks down large tasks into smaller, more manageable sub-goals to efficiently handle complex tasks.

Reflection and improvement: The agent can self-critique and reflect on past actions, learning from mistakes and improving future steps to enhance the quality of the final outcome.

(3) Memory:

Short-term memory: All in-context learning is viewed as using the model’s short-term memory to learn.

Long-term memory: This provides the agent with the ability to retain and recall (infinite) information over long periods, typically achieved through external vector storage and rapid retrieval.

(4) Tool Use:

The agent learns to call external APIs to obtain additional information missing from the model weights (which is often difficult to change after pre-training), including current information, code execution capabilities, access to proprietary information sources, etc.

These four modules together constitute an Agent system built around a large language model (LLM) as the core controller. The potential of LLMs is not limited to generating beautifully written articles, stories, papers, and programs; they can also build a powerful general problem solver.
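As a rough illustration of how the four modules fit together, here is a minimal Python sketch. It is not taken from Lilian Weng’s post: `fake_llm`, the `Agent` class, and the two tools are hypothetical stand-ins, and the “LLM” simply returns a fixed plan instead of calling a real model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def fake_llm(goal: str) -> List[str]:
    """Hypothetical stand-in for an LLM call: returns a fixed plan of tool names."""
    return ["lookup_ip", "summarize"]


@dataclass
class Agent:
    llm: Callable[[str], List[str]]                  # the "brain"
    tools: Dict[str, Callable[[str], str]]           # tool use
    memory: List[str] = field(default_factory=list)  # short-term memory

    def run(self, goal: str) -> str:
        plan = self.llm(goal)                  # planning: break the goal into steps
        result = goal
        for step in plan:
            result = self.tools[step](result)  # call the tool for this step
            self.memory.append(f"{step} -> {result}")  # remember what happened
        return result


# Hypothetical tools; a real agent would call external APIs here.
tools = {
    "lookup_ip": lambda text: text + " | reputation=unknown",
    "summarize": lambda text: "SUMMARY: " + text,
}
agent = Agent(llm=fake_llm, tools=tools)
answer = agent.run("check 203.0.113.7")
```

The point of the sketch is the division of labor: the model only produces the plan, while the loop around it executes tools and records each step in memory.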

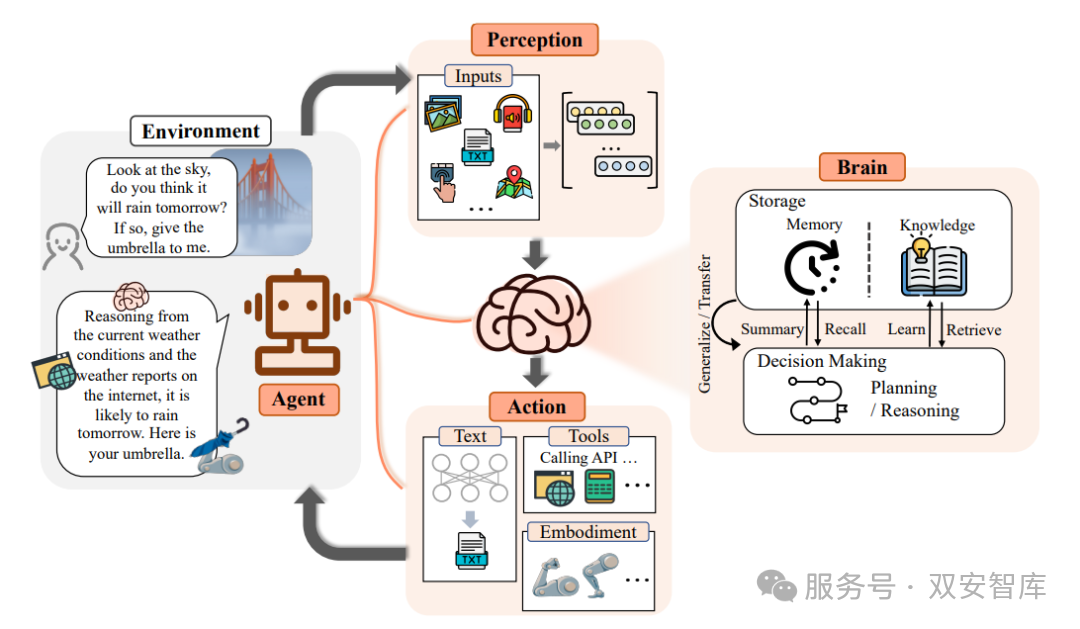

✅ Fudan University’s NLP Team’s Definition: Agent = Perception + Brain + Action

The NLP team at Fudan University takes a more macro perspective, dividing Agents into three main modules:

(1) Perception Module: Receives information from the environment (such as voice, images, text);

(2) Brain Module: Responsible for memory storage, logical reasoning, and decision-making;

(3) Action Module: Executes operations, calls tools, and influences the external world.

For example:

When you ask:

“Will it rain tomorrow?”

- The perception module converts voice into text;

- The brain module reasons based on the current time and historical data;

- The action module calls a weather API to get the forecast and tells you whether you need to bring an umbrella.

Figure: Definition of Agent Decomposed into Perception, Brain, and Action (Fudan NLP)

▌2. Redefining Cybersecurity AI Agents

With the analysis above, we now have a working understanding of agents. Returning to the large security models launched by major security companies, one question remains: what is the relationship between a large security model and an agent?

The two can be understood as a collaborative system of “brain” and “executor”. The large security model is the underlying technical foundation: trained on massive security corpora and specialized offensive and defensive logic, it gives the agent its perception, decision-making, and execution capabilities. The agent, in turn, relies on the model to dynamically decompose tasks in specific scenarios, call tools, and achieve closed-loop responses. An agent is therefore a composite of “large model (LLM) + action capability”. Put simply, an agent generally contains a large model, and the large model is its core component.

The large security models launched by cybersecurity vendors are essentially security agents, because they follow the logic of perceiving, planning, and ultimately calling a series of tools to achieve a result. Based on OpenAI’s agent framework, the framework diagram of an AI-driven cybersecurity agent is as follows:

Figure: AI-Driven Cybersecurity Agent (Shuang’an Think Tank Definition)

For cybersecurity agents, the Shuang’an Think Tank defines: A cybersecurity agent is a cognitive defense system with autonomous evolution capabilities in digital-space confrontation. Its essence is to construct a Dynamic Adversarial Agent architecture, forming a digital defense entity with multi-dimensional perception, strategic gaming, and cognitive countermeasure capabilities. Unlike traditional automated defense systems, its core breakthrough is closed-loop intelligence of perception – reasoning – decision-making – execution: it can run real-time game simulations through reinforcement learning algorithms at each stage of the attack chain and dynamically generate optimal defense strategies.

Specifically, the construction of cybersecurity agents mainly involves the following aspects:

1. Perception Layer: Full-dimensional data capture and standardized processing

The agent collects raw data streams such as network traffic, system logs, and terminal behaviors in real-time through a distributed sensor network, while also connecting to threat intelligence sources to obtain structured intelligence data. It employs a knowledge graph-based semantic parsing engine to clean and normalize heterogeneous data, and constructs a multi-dimensional data cube with temporal and spatial correlation characteristics through time-series feature extraction techniques, forming a foundational data pool.

2. Reasoning Layer: Deep feature modeling and threat cognition construction

Relying on a multi-modal feature fusion framework (MFF), the agent uses a deep semantic parsing engine (DSEE) to hierarchically extract network behavior features, constructing a three-dimensional threat map with temporal and spatial correlations. It innovatively employs adversarial knowledge distillation technology to achieve bi-directional knowledge transfer between expert experience and machine learning models, forming an interpretable composite reasoning model. In the APT attack recognition scenario, the reasoning engine utilizes the MITRE ATT&CK tactical chain backtracking algorithm to achieve penetrating analysis of the attacker’s tactical intent.

3. Decision Layer: Multi-modal reasoning and game strategy generation

The agent integrates symbolic reasoning engines and deep reinforcement learning frameworks to construct a “rule-driven + data-driven” dual-track decision system. At the tactical level, it identifies APT attack paths through attack chain modeling techniques, and can use Markov Decision Processes (MDP) to infer the attacker’s intent; at the strategic level, it builds an attack-defense situation assessment model based on game theory, dynamically generating response plans that include blocking, trapping, and tracing strategies. The decision system’s built-in trusted AI module can verify the rationality of strategies in real-time, ensuring that decisions comply with the principle of least privilege and regulatory requirements.
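To make the game-theoretic part of the decision layer more concrete, the following sketch picks a defense by the minimax rule: for each defense, take the worst-case loss over the attacker’s possible moves, then choose the defense with the smallest worst case. The loss values and attack names are hypothetical, and this is only one simple way such a choice could be modeled, not the method used by any particular product.

```python
from typing import Dict

# Hypothetical loss matrix: LOSS[defense][attack] = expected damage (lower is better).
LOSS: Dict[str, Dict[str, float]] = {
    "block": {"phishing": 2.0, "lateral_movement": 6.0, "exfiltration": 5.0},
    "trap":  {"phishing": 4.0, "lateral_movement": 3.0, "exfiltration": 4.0},
    "trace": {"phishing": 5.0, "lateral_movement": 7.0, "exfiltration": 3.0},
}


def minimax_defense(loss: Dict[str, Dict[str, float]]) -> str:
    """Return the defense whose worst-case loss across attacker moves is smallest."""
    worst_case = {defense: max(row.values()) for defense, row in loss.items()}
    return min(worst_case, key=worst_case.get)


best = minimax_defense(LOSS)
```

With these illustrative numbers the worst cases are 6.0 for blocking, 4.0 for trapping, and 7.0 for tracing, so trapping is selected.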

4. Execution Layer: Adaptive response and continuous evolution mechanism

Through a security capability middle platform, the agent achieves API-level linkage with over 20 types of security products (tools) such as SIEM, EDR, and firewalls (FW). It automatically recommends disposal actions for different events and targets and triggers the linked response plans to perform threat assessment, alert interpretation, IP blocking, report generation, and similar tasks, closing the response and disposal loop within minutes. By constructing a bi-directional reinforcement learning loop, real-time feedback from security experts and disposal-effect data are injected into the knowledge graph, and collective intelligence optimization algorithms drive iterative upgrades of defense strategies. In high-level attack-defense drills, such a system can compress the average response time to minutes, ultimately forming a closed-loop intelligent defense ecosystem of perception – cognition – decision – action.

In the architecture of cybersecurity agents, the memory module and planning module are the core engines for achieving autonomous decision-making. Their collaborative work enables agents to accumulate experience, analyze problems, and formulate strategies like human experts. They each have different functions and roles, and I will explain the applications and roles of short-term memory and long-term memory in the memory module, as well as feedback, adaptive evolution (supervised evaluation), thinking chains, and sub-goal decomposition in the planning module.

1. Memory

(1) Short-term Memory (STM)

Short-term memory is typically used to store immediate, temporary information, such as real-time caching of dynamic data related to current tasks (e.g., attack log streams being analyzed, temporarily extracted IoC indicators), equivalent to the security analyst’s “working memory area”. It allows the agent to quickly respond to environmental changes and react promptly to emergencies. The content of short-term memory is usually transient, lasting only until the task is completed or overwritten after a period.

Application scenarios:

- Real-time correlation scenarios for threat hunting: When abnormal external connection behavior is detected on a host, short-term memory can temporarily store metadata of network traffic and process call chains from the last 30 minutes for real-time cross-validation of the attack chain.

- API call context retention scenarios: When executing multi-tool collaborative tasks (e.g., first calling EDR to kill, then linking the firewall to block), short-term memory temporarily stores intermediate state data to avoid repeated queries.
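A minimal sketch of the 30-minute window described above, assuming events arrive in time order and timestamps are plain seconds. The `ShortTermMemory` class and the sample events are hypothetical.

```python
from collections import deque
from typing import Deque, List, Tuple


class ShortTermMemory:
    """Keeps only events observed within the last `window_seconds`."""

    def __init__(self, window_seconds: int = 30 * 60) -> None:
        self.window_seconds = window_seconds
        self._events: Deque[Tuple[float, str]] = deque()

    def add(self, timestamp: float, event: str) -> None:
        # Assumes timestamps are non-decreasing (events arrive in order).
        self._events.append((timestamp, event))
        self._evict(timestamp)

    def _evict(self, now: float) -> None:
        # Drop events that have fallen outside the retention window.
        while self._events and now - self._events[0][0] > self.window_seconds:
            self._events.popleft()

    def recent(self) -> List[str]:
        return [event for _, event in self._events]


stm = ShortTermMemory()
stm.add(0, "powershell.exe spawned by winword.exe")
stm.add(600, "outbound connection to 198.51.100.9:443")
stm.add(2000, "new scheduled task created")  # the first event is now older than 1800 s
```

After the third call the oldest event has been evicted, which mirrors the transient nature of short-term memory: data is kept only while it is still relevant to the current analysis.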

(2) Long-term Memory (LTM)

Long-term memory is used to retain information that needs to be preserved for a long time, such as storing verified structured knowledge (e.g., historical attack patterns, effective disposal plans, vulnerability feature libraries), utilizing vector databases for semantic retrieval. Long-term memory helps the agent learn and accumulate experience, enabling faster decision-making when encountering similar issues in the future. It supports the agent in conducting deeper analyses, such as identifying complex attack patterns or predicting potential threats.

Application scenarios:

- APT attack pattern matching scenarios: The agent compares the behavior of new ransomware with the historical Conti/LockBit attack chain templates stored in long-term memory and identifies shared features, such as “deleting system snapshots before file encryption”, at 80% similarity.

- Reuse of disposal plans scenarios: When attempts to exploit the Log4j vulnerability are detected, the agent automatically retrieves the verified disposal plan from long-term memory:

  1. Block the attacking source IP → 2. Scan affected hosts → 3. Inject temporary patches

- Memory collaboration scenarios: When suspicious PowerShell commands are detected:

  - STM (short-term memory) caches the current process tree and command line parameters

  - LTM (long-term memory) retrieves historical records and finds that the command is 92% similar to the PowerShell Empire attack framework → triggers a high-risk alert and automatically isolates the host.
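The long-term memory lookup in these scenarios can be sketched as a nearest-neighbor search over feature vectors. The example below uses plain cosine similarity in pure Python; the stored patterns, their four-dimensional vectors, and the 0.9 threshold are all hypothetical, and a production system would typically use a vector database and learned embeddings instead.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical long-term memory: known attack pattern -> feature vector.
LONG_TERM_MEMORY: Dict[str, List[float]] = {
    "powershell_empire_stager": [1.0, 1.0, 0.0, 1.0],
    "shadow_copy_deletion": [0.0, 1.0, 1.0, 0.0],
}


def best_match(observed: List[float]) -> Tuple[str, float]:
    """Return the stored pattern most similar to the observed behavior."""
    scored = [(name, cosine(observed, vec)) for name, vec in LONG_TERM_MEMORY.items()]
    return max(scored, key=lambda item: item[1])


def triage(observed: List[float], threshold: float = 0.9) -> str:
    """Raise a high-risk alert only when similarity reaches the threshold."""
    name, score = best_match(observed)
    if score >= threshold:
        return f"high-risk alert: isolate host (matched {name})"
    return "log only"
```

Here short-term memory would supply the `observed` vector (derived from the cached process tree and command line), while long-term memory supplies the patterns it is compared against.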

2. Planning

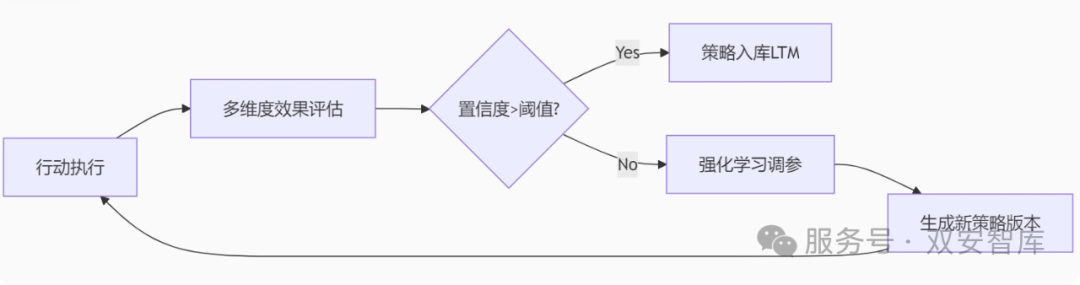

(1) Feedback

In the planning process, the feedback mechanism allows the agent to adjust its behavior based on execution results. In this way, the agent can continuously optimize its strategies and improve efficiency in responding to threats. For example, after implementing a certain defense measure, the agent collects data about the effectiveness of that measure. If the agent initially misjudges a normal operational script as malicious, through the feedback mechanism:

1. Human labels the false positive sample

2. The reinforcement learning module adjusts the behavior model

3. Long-term memory adds “legitimate operational features” knowledge

→ Reduces similar false positives by over 80%.

Figure: Feedback Loop Closed Learning Flowchart
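The three feedback steps can be sketched in a few lines. This is a deliberately simplified stand-in: instead of a reinforcement learning module, the hypothetical `Detector` below just nudges a score threshold upward, and “long-term memory” is a set of hashes that analysts have labeled as benign.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Detector:
    threshold: float = 0.5                               # score above this => malicious
    known_benign: Set[str] = field(default_factory=set)  # stands in for long-term memory

    def is_malicious(self, script_hash: str, score: float) -> bool:
        if script_hash in self.known_benign:
            return False                                 # previously labeled as legitimate
        return score > self.threshold

    def record_false_positive(self, script_hash: str, step: float = 0.05) -> None:
        """Analyst feedback: remember the sample and become slightly less aggressive."""
        self.known_benign.add(script_hash)
        self.threshold = min(0.95, self.threshold + step)


detector = Detector()
before = detector.is_malicious("ops-script-hash", 0.6)  # flagged before feedback
detector.record_false_positive("ops-script-hash")
after = detector.is_malicious("ops-script-hash", 0.6)   # no longer flagged
```

The same operational script is flagged before the analyst’s label and passed afterwards, which is the behavior the feedback loop is meant to produce.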

(2) Adaptive Evolution (Supervised Evaluation)

Adaptive evolution is the core engine for achieving dynamic defense capabilities. It enables the agent to possess adaptive capabilities similar to biological evolution through continuous environmental feedback and strategy optimization. Its technical mechanism is illustrated in the following diagram:

Figure: Closed-loop Feedback Driven Strategy Mechanism

Evaluation dimensions may include:

- Threat detection rate (e.g., ransomware identification accuracy)

- Business impact score (duration of business interruption caused by disposal actions)

- Resource consumption ratio (CPU/memory usage)

For example: When a blocking action causes core business delays >200ms, it automatically downgrades to alert without disposal.
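The downgrade rule in this example can be written as a small policy function. The `Evaluation` record below carries the three evaluation dimensions listed above, but only the latency rule from the text is implemented; the field names and the default limit parameter are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    detection_rate: float    # fraction of threats detected, 0..1
    added_latency_ms: float  # business delay caused by the disposal action
    cpu_usage: float         # fraction of CPU consumed, 0..1


def choose_action(ev: Evaluation, latency_limit_ms: float = 200.0) -> str:
    """Downgrade from blocking to alert-only when business impact is too high."""
    if ev.added_latency_ms > latency_limit_ms:
        return "alert_only"
    return "block"
```

For instance, `choose_action(Evaluation(0.95, 250.0, 0.3))` yields `"alert_only"`, while the same evaluation with 120 ms of added latency keeps the blocking action.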

(3) Thinking Chain (Chain of Thought)

A thinking chain is the series of logical steps an agent takes when solving a problem. It breaks complex reasoning into small, interrelated, logically ordered steps, connected like links in a chain, and generates a decision path through multi-step reasoning rather than a single-step judgment that is more prone to error. For example, when we ask DeepSeek a question, the “deep thinking” it displays before giving its answer, in which it gradually lays out its reasoning, is the construction of a thinking chain. Thinking chains can be used both to decompose complex problems and to record the solution process. This is particularly important in cybersecurity, because complex attacks often involve multiple layers and steps.

Example: Determining whether a certain encrypted traffic is malicious

Step 1: Decryption failure rate >95% → suspected malicious obfuscation

Step 2: TLS certificate validity period anomaly → matches Cobalt Strike characteristics

Step 3: Target IP in threat intelligence database → confirmed as C2 communication
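The three steps above can be expressed as an ordered list of checks that also records the reasoning trace. This is a rule-based sketch rather than LLM reasoning; the field names, the threat intelligence set, and the 3650-day certificate validity cutoff are hypothetical values chosen for illustration.

```python
from typing import Any, Callable, Dict, List, Tuple

Flow = Dict[str, Any]
Step = Tuple[str, Callable[[Flow], bool]]

THREAT_INTEL = {"198.51.100.9"}  # hypothetical set of known C2 addresses

STEPS: List[Step] = [
    ("decryption failure rate > 95%", lambda f: f["decrypt_fail_rate"] > 0.95),
    ("anomalous TLS certificate validity period", lambda f: f["cert_validity_days"] > 3650),
    ("destination IP listed in threat intelligence", lambda f: f["dst_ip"] in THREAT_INTEL),
]


def reason(flow: Flow) -> Tuple[str, List[str]]:
    """Evaluate the steps in order and stop at the first one that is not met."""
    trace: List[str] = []
    for description, check in STEPS:
        if not check(flow):
            trace.append(f"not met: {description}")
            return "inconclusive", trace
        trace.append(f"met: {description}")
    return "confirmed C2 communication", trace
```

Returning the trace alongside the verdict keeps every intermediate judgment visible, so an analyst can see exactly which link in the chain led to the conclusion.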

(4) Sub-goal Decomposition

When facing a large or complex goal, the goal decomposition technique can break down the large goal into a series of smaller goals or sub-tasks. This allows the agent to work more systematically and gradually achieve the final goal.

Example 1: Decomposing an abstract security goal (e.g., “contain ransomware spread”) into executable sub-task chains:

Locate the initial intrusion point → Block C2 communication → Isolate infected hosts → Patch vulnerabilities
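One way to represent such a sub-task chain is as a dependency graph that is ordered before execution. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later); the task identifiers are hypothetical names for the four steps in Example 1.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each sub-task maps to the set of sub-tasks that must finish first.
SUBTASKS: Dict[str, Set[str]] = {
    "locate_initial_intrusion_point": set(),
    "block_c2_communication": {"locate_initial_intrusion_point"},
    "isolate_infected_hosts": {"block_c2_communication"},
    "patch_vulnerabilities": {"isolate_infected_hosts"},
}


def plan(goal_graph: Dict[str, Set[str]]) -> List[str]:
    """Return the sub-tasks in an order that respects every dependency."""
    return list(TopologicalSorter(goal_graph).static_order())


task_chain = plan(SUBTASKS)
```

A graph is slightly more general than a fixed list: if two sub-tasks do not depend on each other, the same structure lets an agent run them in parallel.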

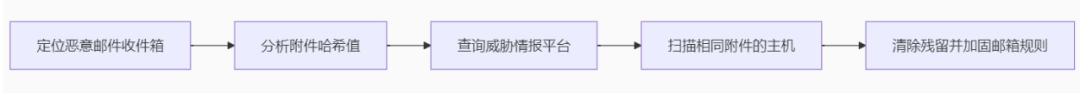

Example 2: After encountering a phishing email attack, the agent automatically generates a task chain:

▌3. Cybersecurity Multi-Agent Systems

When the capabilities of a single agent are limited, multi-agent collaboration becomes a necessary choice. This technology enables multiple specialized agents to collaborate, overcoming the limitations of traditional penetration testing that relies on human experience and has high false positive rates.

For cybersecurity multi-agent systems, the Shuang’an Think Tank defines: A cybersecurity multi-agent system is a distributed collaborative defense system composed of multiple agents (AI Agents) with autonomous decision-making capabilities. These agents, based on large language models (LLMs) or generative AI technologies, can perceive the cybersecurity environment, independently analyze threats, dynamically plan tasks, and achieve cross-functional collaboration through standardized communication mechanisms to jointly complete complex security tasks such as threat detection, vulnerability remediation, identity verification, and attack tracing.

The framework diagram of an LLM-driven cybersecurity multi-agent system is as follows:

Figure: Cybersecurity Multi-Agent System Driven by LLM (Shuang’an Think Tank Definition)

Currently, major cybersecurity companies are building alarm assessment agents, malicious sample analysis agents, phishing email agents, incident investigation agents, vulnerability assessment agents, traffic analysis agents, and others on top of LLM foundations in the AI+SOC business application layer. These agents collaborate efficiently through standardized protocols (such as MCP).

The advantages of multi-agent systems lie in their ability to decompose complex security tasks into multiple sub-tasks, handled by agents with different expertise, ultimately forming a complete collaborative defense solution. To work effectively together, they typically adopt the following collaboration mechanisms:

1. Information Sharing and Synchronization

Agents need to frequently share information and synchronize states with each other. For example, when the alarm assessment agent detects abnormal activity, it can send suspicious files to the malicious sample analysis agent for further analysis; at the same time, it can notify the phishing email agent to check whether related emails have phishing intentions. Once a threat is confirmed, the incident investigation agent can begin to investigate the full scope of the incident.

2. Workflow Orchestration

Through pre-defined workflows or playbooks, a series of actions can be automatically triggered. For example, upon detecting a potential cyber attack, the alarm assessment agent will initiate the corresponding response program based on preset logic, including calling the malicious sample analysis agent for detailed analysis or directing the phishing email agent to block suspicious sources.
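A playbook of this kind can be sketched as a lookup table from alert type to the agents that should handle it, in order. All agent functions, alert fields, and playbook names below are hypothetical, and each agent is reduced to a function that returns a short status string.

```python
from typing import Callable, Dict, List

Alert = Dict[str, str]
AgentFn = Callable[[Alert], str]


def sample_analysis_agent(alert: Alert) -> str:
    return f"analyzed sample {alert['file']}"


def phishing_email_agent(alert: Alert) -> str:
    return f"blocked sender {alert['sender']}"


# Hypothetical playbooks: alert type -> agents to invoke, in order.
PLAYBOOKS: Dict[str, List[AgentFn]] = {
    "malicious_attachment": [sample_analysis_agent, phishing_email_agent],
    "suspicious_binary": [sample_analysis_agent],
}


def alarm_assessment_agent(alert: Alert) -> List[str]:
    """Look up the playbook for the alert type and run each agent in turn."""
    return [agent(alert) for agent in PLAYBOOKS.get(alert["type"], [])]
```

Keeping the playbooks as data rather than code means new response flows can be added or reordered without touching the agents themselves.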

3. Collaborative Decision Support

Agents can jointly participate in the decision-making process, leveraging their expertise to provide comprehensive recommendations. For instance, when facing a complex APT attack, multiple agents may assess evidence together, forming a comprehensive risk assessment report and proposing joint response strategies.

4. Real-time Feedback Loop

During execution, agents should be able to receive real-time feedback and adjust their behavior accordingly. For example, if the incident investigation agent discovers a new attack pattern, it can immediately update the knowledge base and notify other agents to adjust their detection rules.
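The notification step in this example could be implemented with a simple publish/subscribe channel. The in-process `MessageBus` below is a hypothetical sketch; real deployments would more likely use a message queue or a standardized agent protocol, and the topic and pattern names are invented for illustration.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, str]], None]


class MessageBus:
    """Minimal in-process publish/subscribe channel between agents."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, str]) -> int:
        """Deliver the message to every subscriber and return how many received it."""
        for handler in self._subscribers[topic]:
            handler(message)
        return len(self._subscribers[topic])


detection_rules: List[str] = []  # rule store of a hypothetical traffic analysis agent
bus = MessageBus()
bus.subscribe("new_attack_pattern", lambda msg: detection_rules.append(msg["pattern"]))
delivered = bus.publish("new_attack_pattern", {"pattern": "dns-tunnel-v2"})
```

The investigating agent only publishes the finding; it does not need to know which agents consume it, which keeps the agents loosely coupled.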

5. Security and Privacy Protection

Throughout the collaboration process, it is essential to ensure the security of data transmission and the protection of user privacy. Encryption technologies and other security measures are employed to ensure that communication between agents is not intercepted or tampered with.
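As one concrete example of tamper protection, messages between agents can carry an HMAC signature computed with a shared key. The sketch below uses only the Python standard library; the key and message fields are hypothetical. Note that an HMAC provides integrity and authenticity, not confidentiality, so it would be combined with transport encryption such as TLS to prevent interception.

```python
import hashlib
import hmac
import json
from typing import Dict

SHARED_KEY = b"demo-key-do-not-use-in-production"  # hypothetical pre-shared key


def sign(message: Dict[str, str], key: bytes = SHARED_KEY) -> str:
    """Return a hex HMAC-SHA256 signature over the canonical JSON form of the message."""
    payload = json.dumps(message, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(message: Dict[str, str], signature: str, key: bytes = SHARED_KEY) -> bool:
    """Check the signature using a constant-time comparison."""
    return hmac.compare_digest(sign(message, key), signature)


message = {"from": "alarm_agent", "action": "block", "ip": "203.0.113.7"}
signature = sign(message)
tampered = dict(message, action="allow")  # an attacker changes the action in transit
```

Verifying `message` against `signature` succeeds, while verifying `tampered` against the same signature fails, so the receiving agent can reject the altered instruction.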

The cybersecurity multi-agent system marks a shift in the defense paradigm from “passive rule matching” to “proactive AI-driven”. Its essence is to build a “digital immune system” with adaptive capabilities through distributed intelligence and collaborative learning.

▌4. Specific Applications of Cybersecurity Agents in AI+SOC

AI+SOC (Security Operations Center) combines artificial intelligence technology with traditional SOC functions, aiming to improve the efficiency of threat detection and response. The specific applications of cybersecurity agents in AI+SOC mainly include the following aspects:

1. Automated Threat Detection

Cybersecurity agents can utilize machine learning algorithms to analyze network traffic, log data, and other information in real-time, automatically identifying potential security threats. For example, using pattern recognition technology to discover abnormal behaviors or known attack patterns, and continuously learning to adapt to new threats.

Traditional defense: relies on static rule libraries, with a false positive rate exceeding 30% and a detection rate for new threats below 50%.

Security agents:

- Efficiency mechanism: Real-time analysis of behavior patterns (e.g., file entropy, process chain anomalies) based on deep learning, combined with generative adversarial networks (GAN) to identify unknown threats.

- Effectiveness: A certain security vendor’s security GPT detection rate improved from 45.6% to 95.7%, and the false positive rate dropped from 21.4% to 4.3%.

2. Intelligent Alert Management

Agents can prioritize and classify security alerts from various sources, reducing false positive rates and ensuring that real threats receive timely attention. They can assess the authenticity and urgency of alerts based on historical data and contextual information.

Traditional defense: processes tens of thousands of alerts daily, with manual screening taking a long time and 40% of alerts going undetected.

Security agents:

- Efficiency mechanism: Multi-agent collaboration achieves alert aggregation, deduplication, and prioritization (e.g., data ingestion agent + context collector agent), automatically associating with ATT&CK framework classifications.

- Effectiveness: Alert processing volume increased tenfold, and manual intervention decreased by 70%.
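Aggregation, deduplication, and prioritization can be illustrated with a short function. In this sketch, alerts are deduplicated by a hypothetical key of source IP and rule name, and the merged alerts are ranked by severity and then by how often they occurred; the severity scale and field names are assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

SEVERITY = {"low": 1, "medium": 2, "high": 3}  # hypothetical severity scale

Alert = Dict[str, str]
Key = Tuple[str, str]


def aggregate(alerts: List[Alert]) -> List[Tuple[Key, int, int]]:
    """Deduplicate by (source IP, rule) and sort by severity, then by count."""
    counts = Counter()
    severity: Dict[Key, int] = {}
    for alert in alerts:
        key = (alert["src_ip"], alert["rule"])
        counts[key] += 1
        severity[key] = max(severity.get(key, 0), SEVERITY[alert["severity"]])
    merged = [(key, severity[key], counts[key]) for key in counts]
    return sorted(merged, key=lambda item: (item[1], item[2]), reverse=True)


alerts = [
    {"src_ip": "10.0.0.5", "rule": "brute_force", "severity": "medium"},
    {"src_ip": "10.0.0.5", "rule": "brute_force", "severity": "medium"},
    {"src_ip": "10.0.0.9", "rule": "c2_beacon", "severity": "high"},
    {"src_ip": "10.0.0.5", "rule": "brute_force", "severity": "medium"},
]
ranked = aggregate(alerts)
```

Four raw alerts collapse into two entries, with the single high-severity beacon ranked above the repeated medium-severity brute-force alert.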

3. Threat Intelligence Integration

Cybersecurity agents can integrate data from different threat intelligence sources, providing a more comprehensive view of threats. This helps SOC teams understand the threat environment more quickly and take appropriate defensive measures.

Traditional defense: suffers from delayed intelligence updates, low efficiency in manual entry, and difficulties in cross-platform sharing.

Security agents:

- Efficiency mechanism: Automatically fetch global threat intelligence (e.g., C2 domain names, vulnerability features), compress storage through knowledge distillation technology, and synchronize in real-time to the LTM (long-term memory) database.

- Effectiveness: After integrating DeepSeek, a certain security vendor’s threat intelligence analysis speed improved by 50%, and operational costs decreased by 30%.

4. Security Incident Response

When a security incident is detected, agents can automate predefined response strategies, such as isolating affected systems, updating firewall rules, or notifying relevant personnel. This rapid response capability is crucial for limiting damage.

Traditional defense: has an average response time of 42 minutes, relying on manual script execution.

Security agents:

- Efficiency mechanism: Task token-driven multi-agent relay (e.g., investigation agent → response agent), automatically executing the closed loop of “block IP → isolate host → patch vulnerabilities”.

- Effectiveness: A certain security vendor’s XDR+ agent reduced response time from days to minutes, improving efficiency by 90%.

5. Attack Chain Reconstruction

Agents can reconstruct the attack chain by collecting and analyzing multi-dimensional data, helping security analysts better understand the attack process and providing references for future protective strategies.

Traditional defense: struggles with fragmented logs that are difficult to correlate, and attack path reconstruction relies on expert experience.

Security agents:

- Efficiency mechanism: Attack paths are visualized against the ATT&CK matrix; STM (short-term memory) caches real-time evidence chains, and LTM (long-term memory) matches historical APT templates.

- Effectiveness: In one incident, an agent cluster proactively closed 152 high-risk ports and implanted tracking programs.

6. Continuous Monitoring and Analysis

AI-driven agents can achieve 24/7 continuous monitoring without human intervention, processing large amounts of data, thereby improving the coverage and depth of monitoring.

Traditional defense: incurs high costs for manual shifts, with insufficient coverage at night.

Security agents:

- Efficiency mechanism: 24/7 asynchronous pipeline monitoring, using a sliding window attention mechanism to dynamically retain key events.

7. Reporting and Visualization

Agents can generate detailed reports and transform complex security data into easily understandable visual charts, enabling non-technical personnel to quickly grasp the current security status.

Traditional defense: involves manually writing reports, which is time-consuming and prone to errors.

Security agents:

- Efficiency mechanism: Natural language generation (NLG) automatically outputs tracing reports, supporting multi-dimensional views (timeline/relationship network).

- Effectiveness: Analysis report generation time reduced from hours to seconds, with accuracy improved by 40%.

8. Knowledge Sharing and Learning

In the AI+SOC environment, multiple agents can collaborate, sharing knowledge and experience to collectively enhance the overall security defense level. Additionally, agents can learn from each incident, gradually optimizing their decision models.

Traditional defense: experiences slow knowledge accumulation and knowledge silos across teams.

Security agents:

- Efficiency mechanism: Federated learning shares distilled knowledge (e.g., malicious sample analysis weights 0.6), optimizing strategies through feedback loops.

- Effectiveness: A certain technology company’s threat detection speed improved by 40%, with false positives reduced by 87%.

The ultimate form of security defense is beginning to emerge. As attackers utilize AI to launch more complex attacks, security agents can use the planning module (goal decomposition + thinking chain) to break down complex tasks into sub-chains, completing automated responses within minutes or seconds, with overall efficiency improving by over 90%.

In the next five years, a defense system without cybersecurity agents may be rendered ineffective. According to Gartner’s predictions, by 2027, 70% of medium to large enterprises will deploy such multi-agent systems. When each agent operates like a special forces member, performing their duties while collaborating closely, cybersecurity defense will officially enter a new era of “agent cluster operations”.

This revolution in security, from point defenses to collective intelligence, has only just begun. When security agents become the standard immune system of the digital world, we will usher in a new era of a safer, more autonomous, and more intelligent cyberspace.

References:

[1] Lilian Weng, LLM Powered Autonomous Agents (Lil’Log blog, 2023);

[2] Fudan University NLP Laboratory, Research Report on Agent Architecture.
