Reported by New Intelligence

[New Intelligence Guide] How far are humans from AGI? Perhaps large language models are not the final answer; a model that understands the world is the future direction.

We have long been accustomed to treating Artificial General Intelligence (AGI) as the ultimate form of artificial intelligence and the final goal of its development.

OpenAI has long stated that its goal is to achieve AGI, yet even its CEO, Sam Altman, has not offered a specific definition of what AGI is.

As for when AGI might arrive, the answer so far exists only in near-future scenarios floated by prominent figures in the field, seemingly within reach yet still far away.

Today, on the well-known newsletter platform Substack, AI industry veteran Valentino Zocca published an essay that draws on the sweep of human history to examine, comprehensively and in depth, how far humanity is from AGI.

In the article, AGI is roughly defined as a “model that can understand the world,” rather than just a “model that describes the world.”

He believes that for humanity to truly reach a world of AGI, it is necessary to establish a system that “can question its own reality and can self-explore.”

And in this vast exploration, perhaps no one is in a position to provide a specific roadmap.

As OpenAI scientists Kenneth Stanley and Joel Lehman argue in their book “Why Greatness Cannot Be Planned,” the pursuit of greatness can set a direction, but the specific results may be unexpected.

How far are we from AGI?

About 200,000 years ago, Homo sapiens began to walk the Earth and, at the same time, to explore the realms of thought and knowledge.

A series of discoveries and inventions has shaped the course of human history. Some of them influenced not only our language and thinking but possibly even our physiology.

For example, the discovery of fire allowed early humans to cook food. Cooked food provided more energy for the brain, thereby promoting the development of human intelligence.

The path from the invention of the wheel to the creation of the steam engine led humanity into the Industrial Revolution. Electricity further paved the way for today’s technology, and printing accelerated the spread of new ideas and cultures, fostering human innovation.

However, progress comes not only from new physical discoveries but also from new ideas.

The history of the Western world passed from the decline of the Roman Empire into the Middle Ages, then experienced a rebirth during the Renaissance and the Enlightenment.

But as its knowledge grew, humanity gradually began to realize its own insignificance.

In the more than two thousand years since Socrates, humanity has learned to “know that it knows nothing”: our Earth is no longer seen as the center of the universe, the universe itself is expanding, and we are just a speck of dust within it.

Changing Our View of Reality

In 1931, Kurt Gödel published his incompleteness theorems.

Just four years later, taking up the same theme of “completeness,” Einstein, Podolsky, and Rosen published a paper titled “Can Quantum-Mechanical Description of Physical Reality Be Considered Complete?”

Niels Bohr soon published a rebuttal, defending the completeness of quantum mechanics.

Gödel’s theorems show that even mathematics cannot prove everything: any consistent formal system rich enough to express arithmetic contains true statements that cannot be proven within it. Quantum theory, meanwhile, shows that our world lacks certainty, making it impossible to predict certain quantities exactly, such as the precise position and momentum of an electron at the same time.
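For reference, the standard textbook form of Heisenberg’s uncertainty principle, which is what rules out knowing an electron’s position and momentum exactly at once, bounds the product of their standard deviations σ_x and σ_p by the reduced Planck constant ħ:

$$
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}
$$

The more precisely one of the two quantities is pinned down, the less precisely the other can be.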

Although Einstein famously insisted that “God does not play dice with the universe,” human limitations show up vividly when it comes to predicting or understanding physical phenomena.

No matter how hard humanity tries to design a mathematical universe governed by human-set rules, such an abstract universe will always be incomplete, containing true statements that can be neither proven nor disproven from its axioms.

Besides the abstract representations of mathematics, humanity also describes its world through philosophy, which seeks to describe reality.

However, humans find themselves unable to describe, fully express, understand, or even just define these representations.

Even in the early 20th century, the concept of “truth” remained unsettled, and concepts like “art,” “beauty,” and “life” still lack basic consensus even at the level of definition.

Many other important concepts are similarly elusive; “wisdom” and “consciousness” also cannot be clearly defined by humans.

Definition of Intelligence

Similarly, in the chapter “Problem-Solving and Intelligence,” Hambrick, Burgoyne, and Altmann argue that the ability to solve problems is not just one aspect or feature of intelligence but the essence of intelligence itself.

These two statements share similarities in their linguistic descriptions, both asserting that “achieving goals” can be linked to “solving problems.”

A widely cited definition, from the 1994 statement “Mainstream Science on Intelligence,” goes further:

“Intelligence is a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience. It is not merely book learning, a narrow academic skill, or test-taking smarts. Rather, it reflects a broader and deeper capability for comprehending our surroundings: ‘catching on,’ ‘making sense’ of things, or ‘figuring out’ what to do.”

This definition elevates the construct of intelligence beyond mere “problem-solving skills,” introducing two key dimensions: the ability to learn from experience and the ability to understand the surrounding environment.

In other words, intelligence should not be seen as an abstract ability to find solutions to general problems, but as a concrete ability to apply what we have learned from past experiences to the different situations that may arise in our environment.

This emphasizes the intrinsic link between intelligence and learning.

In the book “How We Learn,” Stanislas Dehaene defines learning as “the process of forming a world model,” implying that intelligence is also an ability that requires understanding the surrounding environment and creating internal models to describe that environment.

Thus, intelligence also requires the ability to create world models, although it encompasses more than just that ability.

How Intelligent Are Current Machines?

Narrow AI (or weak AI) is very common and successful, often outperforming humans in specific tasks.

For instance, in a well-known example from 2016, the narrow AI AlphaGo defeated world champion Lee Sedol at Go by 4 games to 1.

However, in 2023, amateur player Kellin Pelrine repeatedly beat KataGo, a top Go program built on AlphaGo-style techniques, using a tactic the AI could not handle, illustrating the limitations of narrow AI in certain situations.

Such a system cannot recognize unusual tactics and adjust to them the way humans do.

Moreover, at the most basic level, even a novice data scientist knows that every machine learning model must strike a balance between bias and variance.

In other words, a model must learn from data and generalize from it, rather than merely memorize it.
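The balance between bias and variance has a standard formal statement. For squared-error loss, assuming the data are generated as y = f(x) + ε with zero-mean noise of variance σ², the expected error of a learned predictor \hat f at a point x splits into three terms, with the expectation taken over random training sets and the noise in y:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[\big(\hat f(x) - \mathbb{E}[\hat f(x)]\big)^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

A model that simply memorizes its training data drives the bias term down but inflates the variance term; an overly rigid model does the reverse.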

Narrow AI utilizes the computational power and memory capacity of computers to relatively easily generate complex models based on large amounts of observed data.

However, once conditions change slightly, these models often fail to generalize.
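To make the contrast concrete, here is a toy sketch under stated assumptions: the data are hypothetical, a 1-nearest-neighbour lookup stands in for a model that memorizes its observations, and an ordinary least-squares line stands in for a model that captures the underlying rule (we assume the true relation is linear). Both fit the training range, but only the rule carries over when the inputs move outside it.

```python
# Toy data (hypothetical): observations follow y = 2x + 1, plus a small
# alternating measurement error, for inputs x = 0..9 only.
train_x = list(range(10))
train_y = [2 * x + 1 + (0.1 if x % 2 == 0 else -0.1) for x in train_x]


def memorizer(x):
    """'Memorizing' model: 1-nearest-neighbour lookup into the training set."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


slope, intercept = fit_line(train_x, train_y)


def rule(x):
    """'Rule-learning' model: the fitted line, usable for any x."""
    return slope * x + intercept


def mean_abs_error(model, xs, ys):
    return sum(abs(model(x) - y) for x, y in zip(xs, ys)) / len(xs)


# Conditions change: new inputs lie outside the range seen in training.
test_x = [15, 20]
test_y = [2 * x + 1 for x in test_x]

for name, model in [("memorizer", memorizer), ("rule", rule)]:
    print(
        f"{name}: train error {mean_abs_error(model, train_x, train_y):.2f}, "
        f"test error {mean_abs_error(model, test_x, test_y):.2f}"
    )
```

The memorizer is perfect on the points it has seen and badly wrong once conditions shift, while the line, which encodes the relationship rather than the individual points, stays close. Real narrow-AI systems are far more complex, so this shows only the shape of the failure, not its mechanism in any particular model.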

This is akin to proposing a theory of gravity based on observations of Earth, only to find that objects weigh much less on the moon.

If, drawing on the theory of gravitation, we express the law with variables instead of fixed numbers, we can quickly predict the gravitational force on any planet or moon.

But if our equations contain only fixed numbers and no variables, we cannot generalize them to other planets without rewriting them.
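The gravity analogy can be sketched in a few lines. In this hypothetical example, a “memorized” model estimates a single number g from idealized drop measurements made on Earth (using d = g t²/2), while a “law” model keeps the body’s mass and radius as variables in Newton’s formula g = GM/r². The planetary constants are approximate textbook values, and all function names are illustrative only.

```python
# Hypothetical illustration of the gravity analogy; values are approximate.
G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2

# (mass in kg, mean radius in m), approximate textbook values
BODIES = {
    "Earth": (5.972e24, 6.371e6),
    "Moon": (7.342e22, 1.7374e6),
    "Mars": (6.417e23, 3.3895e6),
}


def fit_constant_g(drops):
    """'Memorized' model: estimate one number g from (distance_m, time_s) drops,
    using d = g * t**2 / 2. The result is only valid where the data came from."""
    return sum(2 * d / t**2 for d, t in drops) / len(drops)


def surface_gravity(body_mass_kg, body_radius_m):
    """'Law' model: Newton's g = G * M / r**2, with M and r kept as variables."""
    return G * body_mass_kg / body_radius_m**2


def weight_memorized(object_mass_kg, g_fitted):
    return object_mass_kg * g_fitted


def weight_law(object_mass_kg, body_mass_kg, body_radius_m):
    return object_mass_kg * surface_gravity(body_mass_kg, body_radius_m)


# Idealized drop observations made on Earth only (consistent with g = 9.81 m/s^2).
earth_drops = [(4.905, 1.0), (19.62, 2.0), (44.145, 3.0)]
g_fit = fit_constant_g(earth_drops)

for name, (mass, radius) in BODIES.items():
    print(
        f"{name}: a 70 kg person weighs {weight_memorized(70, g_fit):.0f} N (memorized) "
        f"vs {weight_law(70, mass, radius):.0f} N (law)"
    )
```

The memorized model reports the same weight everywhere because the single number it learned encodes Earth’s conditions; the law model recovers roughly 9.8 m/s² for Earth and 1.6 m/s² for the Moon from the same formula.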

In other words, AI may not truly “learn”; it may merely distill information or experience. Rather than understanding the world by forming a comprehensive world model, it only creates an outline that describes it.

Have We Really Reached AGI?

What we currently use for specific tasks is merely weak AI, such as AlphaGo in Go.

AGI refers to an AI system that performs at a human level of intelligence across the many domains of abstract thinking.

This means that the AGI we need is a world model that is consistent with experience and can make accurate predictions.

As Everitt, Lea, and Hutter point out in their “AGI Safety Literature Review,” AGI has not yet arrived.

Predictions of how far we are from true AGI vary widely.

However, most AI researchers and authoritative institutions agree that humanity is at least several years away from true AGI.

After GPT-4 was released, many people saw it as a spark of AGI.

On April 13, OpenAI’s partner Microsoft published a paper titled “Sparks of Artificial General Intelligence: Early experiments with GPT-4.”

Paper link: https://arxiv.org/pdf/2303.12712

It mentions:

“We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting.

Moreover, in all of these tasks, GPT-4’s performance is strikingly close to human-level performance [...]. Given the breadth and depth of GPT-4’s capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.”

However, as Carnegie Mellon University professor Maarten Sap commented, “the spark of AGI” is merely an example of big companies co-opting the research paper format for public relations.

On the other hand, roboticist and entrepreneur Rodney Brooks pointed out a common misconception: “When evaluating systems like ChatGPT, we often equate performance with capability.”

Equating performance with capability leads us to mistake what GPT-4 generates, which is a summarized description of the world, for an understanding of the real world.

This relates to the data on which AI models are trained.

Most current models are trained solely on text and lack the ability to speak, hear, smell, and act in the real world.

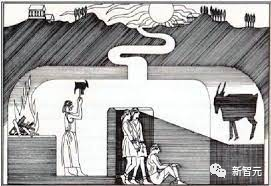

This situation is reminiscent of Plato’s Allegory of the Cave, in which people living in a cave can see only shadows on the wall and never the things themselves.

A model trained only on text can, at best, ensure grammatical correctness; it fundamentally lacks an understanding of the objects that language refers to, as well as the common sense that comes from direct contact with the environment.

Major Limitations of Current Large Models

The most controversial challenge faced by large language models (LLMs) is their tendency to produce hallucinations.

Hallucinations are cases in which a model fabricates references or facts, or produces nonsensical content in tasks such as logical reasoning and causal inference.

The hallucinations of large language models stem from their lack of understanding of the causal relationships between events.

In the paper “Is ChatGPT a Good Causal Reasoner? A Comprehensive Evaluation,” researchers found that:

LLMs like ChatGPT tend to assume causal relationships between events, regardless of whether such relationships exist in reality.

Paper link: https://arxiv.org/pdf/2305.07375

The researchers concluded:

“ChatGPT is not a good causal reasoner, but a good causal explainer.”

This conclusion arguably extends to other LLMs as well.

This suggests that LLMs can essentially only induce causal relationships from observed patterns and lack the ability to reason deductively about cause and effect.
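The gap between noticing that two events co-occur and knowing that one causes the other can be illustrated with a toy structural causal model. In this hypothetical sketch, a hidden factor Z drives both X and Y, and X has no effect on Y at all (its coefficient is set to zero by assumption). Observational data still show a strong X-Y correlation, but intervening on X, written do(X = x0), leaves Y unchanged. This is a textbook illustration of confounding, not a model of how any LLM works internally.

```python
import random

random.seed(0)  # fixed seed so the run is reproducible

N = 10_000
EFFECT_OF_X_ON_Y = 0.0  # assumption: X has no causal effect on Y

# Exogenous noise, drawn once and reused so that observation and intervention
# are compared on the same underlying population.
z = [random.gauss(0.0, 1.0) for _ in range(N)]    # hidden common cause
u_x = [random.gauss(0.0, 0.5) for _ in range(N)]  # noise in X
u_y = [random.gauss(0.0, 0.5) for _ in range(N)]  # noise in Y


def mechanism_x(z_i, u_i):
    """X is driven by the hidden factor Z."""
    return z_i + u_i


def mechanism_y(x_i, z_i, u_i):
    """Y is driven by Z; X enters only through a zero coefficient."""
    return EFFECT_OF_X_ON_Y * x_i + z_i + u_i


def mean(values):
    return sum(values) / len(values)


def correlation(a, b):
    """Pearson correlation coefficient of two equal-length lists."""
    ma, mb = mean(a), mean(b)
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    var_a = sum((p - ma) ** 2 for p in a)
    var_b = sum((q - mb) ** 2 for q in b)
    return cov / (var_a * var_b) ** 0.5


# Observation: let the system run and record what happens.
x_obs = [mechanism_x(zi, ui) for zi, ui in zip(z, u_x)]
y_obs = [mechanism_y(xi, zi, ui) for xi, zi, ui in zip(x_obs, z, u_y)]
obs_corr = correlation(x_obs, y_obs)


def mean_y_under_do(x0):
    """Intervention do(X = x0): X is set by hand, cutting its dependence on Z."""
    return mean([mechanism_y(x0, zi, ui) for zi, ui in zip(z, u_y)])


effect_gap = mean_y_under_do(1.0) - mean_y_under_do(-1.0)

print(f"observational correlation of X and Y: {obs_corr:.2f}")  # strong, roughly 0.8
print(f"change in mean Y when X is forced from -1 to +1: {effect_gap:.2f}")  # 0.00
```

A system that only learns from the observational column would conclude that X drives Y; only the intervention reveals that the association comes entirely from the shared cause Z.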

This points to a fundamental limitation of LLMs. If intelligence means learning from experience and turning what is learned into a world model for understanding the surrounding environment, then causal inference, as a fundamental component of learning, is an indispensable part of intelligence.

Existing LLMs lack this ability, which is also one reason Yann LeCun believes that current large language models cannot become AGI.

Conclusion

The language, knowledge, textual materials, and even video and audio materials we construct are merely a limited part of the reality we can experience.

Just as we have explored, learned, and mastered a reality that defies our intuition and experience, AGI will only truly be realized when we can build a system capable of questioning its own reality and exploring on its own.

At least at this stage, we should build a model that can make causal inferences and understand the world.

This prospect represents another advancement in human history, signifying a deeper understanding of the essence of the world.

Although the emergence of AGI will weaken our certainty about our own unique value and the importance of our existence, through continued progress and the expansion of our cognitive boundaries we will gain a clearer understanding of humanity’s place in the universe and of our relationship to it.