Author: Hezi Saar, Product Marketing Manager at Synopsys

The use of cameras and displays is increasing in automotive, IoT, and multimedia applications, and designers need a range of image and display interface solutions that meet increasingly stringent power and performance requirements. Traditionally, designers have used the MIPI Camera Serial Interface (CSI-2) and Display Serial Interface (DSI) to connect image sensors and displays to application processors or SoCs in mobile devices such as smartphones. Because MIPI interfaces have proven reliable and have been implemented successfully, they are now being adopted in many new applications, such as Advanced Driver Assistance Systems (ADAS), infotainment, wearables, and augmented/virtual reality headsets. This article discusses how designers implement MIPI DSI and CSI-2 in automotive, IoT, and multimedia applications to support multiple camera and display inputs and outputs while meeting their bandwidth and power requirements.

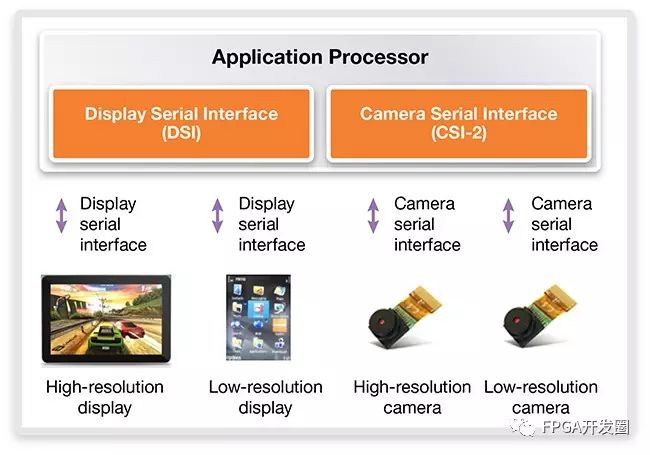

Over the past decade, the mobile market, particularly smartphones, has grown tremendously and has adopted MIPI CSI-2 and DSI to support multiple cameras and displays in mobile devices. These interfaces provide low-power, low-latency, low-cost chip-to-chip connections between the host and the device, allowing designers to connect both low- and high-resolution cameras and displays. Both interfaces use the same physical layer, MIPI D-PHY, to carry data to the application processor or SoC (Figure 1).

Figure 1: MIPI DSI and CSI-2 Implementation in Mobile Applications

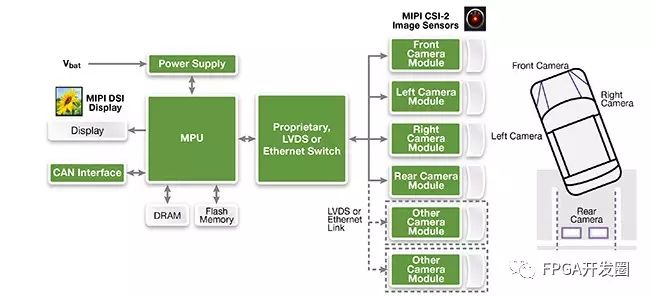

Figure 2 illustrates how MIPI camera and display interfaces are implemented in ADAS and infotainment applications. Today's vehicles have multiple cameras at the front, rear, and sides to provide a 360-degree view around the vehicle. In such implementations, MIPI CSI-2 image sensors connect to image signal processors, which in turn connect to bridge devices that link the entire module to the vehicle's main system. Some in-vehicle infotainment systems use DSI for their displays with the same implementation scheme.

Figure 2: ADAS Application Examples Using MIPI DSI and CSI-2 Standards

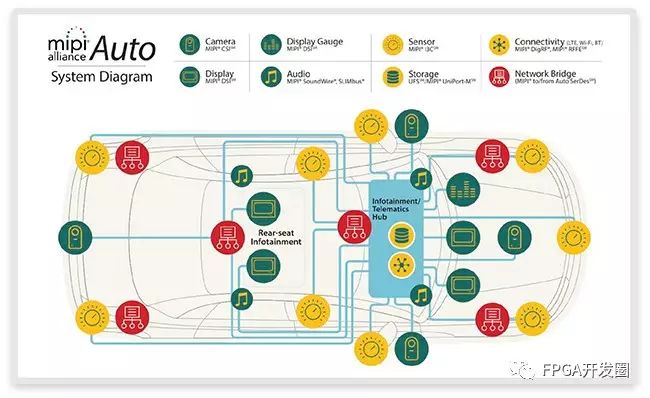

MIPI provides a complete set of specifications for automotive applications (Figure 3):

- DSI: for driver information displays, mirror-replacement (mirrorless) displays, and infotainment.
- CSI-2: for ADAS, backup cameras, collision avoidance, mirrorless vehicles, and in-cabin passenger monitoring.
- Other interfaces: MIPI I3C for sensor connections, JEDEC UFS for embedded and removable storage, MIPI SoundWire for audio, and MIPI RFFE for RF front-end control.

Figure 3: Using MIPI Alliance Specifications in Automotive Applications (Image from MIPI Alliance)

MIPI Interfaces in IoT Applications

IoT SoCs come in many forms; here we describe a superset of the components and interfaces, including MIPI interfaces, that IoT SoCs typically contain. The vision subsystem includes CSI-2, possibly DSI, and a processor that handles the SoC's vision processing. Storage components include LPDDR for low-power DRAM and embedded MultiMediaCard (eMMC) for embedded flash. For wired and wireless connectivity, designers use standards such as Bluetooth Low Energy, Secure Digital Input Output (SDIO), and USB, depending on the target application. Security is a fundamental part of the SoC, protecting data sent to the cloud and stored on the device; it typically consists of engines such as true random number generators and cryptographic accelerators.

The sensor and control subsystem connects dozens of sensors or more over I2C or I3C and the Serial Peripheral Interface (SPI). I3C is a newer MIPI specification that takes key features of I2C and SPI and unifies them while retaining a two-wire serial interface. It lets system designers connect a large number of sensors in a device while minimizing power consumption and reducing component and implementation costs. With a single I3C bus, manufacturers can also combine sensors from different vendors, enabling new designs with longer battery life and lower system cost.

New use cases for MIPI camera and display interfaces are emerging in multimedia applications, such as virtual/augmented reality devices with high-resolution cameras and displays. In such devices, the interfaces transmit and receive multiple images from various sources, which are then processed and delivered to the user at the highest possible quality. Below are three example implementations in multimedia applications:

- High-end multimedia processors: In this implementation, multiple display and camera inputs enter the image signal processor over CSI-2 and DSI, typically from another application processor that has received and processed the images. The image signal processor then forwards the images over CSI-2 or DSI.
- Multimedia processors: This implementation is mainly used for posture or motion recognition and human-machine interfaces. Two image sensors connect to the processor over CSI-2; the processor identifies and processes the motion or posture data for further analysis and control, and then sends the processed data to the application processor over CSI-2.
- Bridge ICs: Because these systems have multiple image inputs and outputs, as described in the automotive section, there is demand for bridge ICs. A bridge IC can, for example, split the application processor's output into two display streams.
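
As a rough illustration of the bridge-IC case, the sketch below assumes the application processor sends one double-width frame whose left and right halves belong to two different displays, and splits each line accordingly. This side-by-side arrangement is a hypothetical choice made for simplicity; real bridge ICs may instead route separate virtual channels or duplicate a stream, and the function name `split_side_by_side` is illustrative only.

```python
from typing import List, Tuple

# A frame is modeled as rows (lines) of pixel values. Real bridges operate on
# packed pixel data inside CSI-2/DSI packets, which this sketch ignores.
Frame = List[List[int]]


def split_side_by_side(frame: Frame) -> Tuple[Frame, Frame]:
    """Hypothetical bridge behavior: split each line of a double-width frame
    into a left half (display A) and a right half (display B)."""
    if not frame:
        return [], []
    width = len(frame[0])
    if width % 2 != 0 or any(len(row) != width for row in frame):
        raise ValueError("expected equal-length rows with an even width")
    half = width // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right


# Usage: a 4x2 frame becomes two 2x2 frames, one per display stream.
display_a, display_b = split_side_by_side([[1, 2, 3, 4], [5, 6, 7, 8]])
print(display_a, display_b)  # [[1, 2], [5, 6]] [[3, 4], [7, 8]]
```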

MIPI CSI-2 uses the MIPI D-PHY physical layer to communicate with the application processor or SoC. The image sensor, or CSI-2 device, captures images and transmits them to the CSI-2 host in the SoC. Before transmission, images are stored in memory as separate frames, and each frame is then sent over the CSI-2 interface on a virtual channel. When multiple image sensors, or one sensor producing several pixel streams (sometimes including multiple exposures), share a link, each stream is carried on its own virtual channel, and every frame is tagged with that channel's virtual channel identifier. To transmit a complete image, each frame on a virtual channel is divided into lines, which are sent one line at a time.
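
The framing described above can be modeled in a few lines. This minimal sketch assumes two sensors sharing one link: each frame is sent as a Frame Start short packet, one long packet per line tagged with the sensor's virtual channel ID, and a Frame End short packet, and the receiver uses the virtual channel ID to reassemble lines into the right frames. Packet headers, ECC, checksums, and line start/end packets are left out, and the round-robin `interleave` policy and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# CSI-2 data type codes used below: frame sync short packets and RAW8 lines.
FRAME_START = 0x00
FRAME_END = 0x01
RAW8 = 0x2A


@dataclass
class Packet:
    vc: int               # virtual channel identifier (2 bits, 0-3, in CSI-2 v1.x)
    data_type: int        # CSI-2 data type code
    payload: bytes = b""  # one image line for long packets; empty for sync packets


def frame_packets(vc: int, lines: List[bytes]) -> List[Packet]:
    """Transmit one frame: Frame Start, one long packet per line, Frame End."""
    return ([Packet(vc, FRAME_START)]
            + [Packet(vc, RAW8, line) for line in lines]
            + [Packet(vc, FRAME_END)])


def interleave(streams: List[List[Packet]]) -> List[Packet]:
    """Share one link by taking one packet from each stream in turn (assumed policy)."""
    queues = [list(s) for s in streams]
    link: List[Packet] = []
    while any(queues):
        for q in queues:
            if q:
                link.append(q.pop(0))
    return link


def demux(link: List[Packet]) -> Dict[int, List[List[bytes]]]:
    """Receiver: rebuild frames per virtual channel from the interleaved packets."""
    frames: Dict[int, List[List[bytes]]] = {}
    in_progress: Dict[int, List[bytes]] = {}
    for p in link:
        if p.data_type == FRAME_START:
            in_progress[p.vc] = []
        elif p.data_type == FRAME_END:
            frames.setdefault(p.vc, []).append(in_progress.pop(p.vc))
        else:
            in_progress[p.vc].append(p.payload)
    return frames


# Usage: sensor A (VC 0) sends a 3-line frame, sensor B (VC 1) a 2-line frame.
sensor_a = frame_packets(0, [bytes([i]) * 4 for i in range(3)])
sensor_b = frame_packets(1, [bytes([0x80 + i]) * 4 for i in range(2)])
received = demux(interleave([sensor_a, sensor_b]))
print({vc: [len(f) for f in fs] for vc, fs in sorted(received.items())})  # {0: [3], 1: [2]}
```

Even though the two sensors' packets alternate on the link, the virtual channel ID alone is enough for the receiver to keep their frames apart.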

MIPI CSI-2 communicates using packets that carry the data format, an Error Correction Code (ECC) that protects the packet header, and a cyclic redundancy check (CRC) on the payload. This applies to every packet sent from the image sensor to the SoC. On the transmit side, the CSI-2 device controller passes each packet toward the D-PHY, and the packet is split across multiple data lanes; the D-PHY drives these lanes in high-speed mode to carry the packet to the receiver. On the receive side, the CSI-2 receiver recovers the data through its D-PHY, then extracts and decodes the packet and passes it to the CSI-2 host controller. This process repeats frame by frame, giving an efficient, low-power, low-cost link between the CSI-2 device and the host.
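
To make the packet structure concrete, the sketch below builds a CSI-2 long packet (a 4-byte header carrying the data identifier, the 16-bit word count, and the ECC byte, followed by the payload and a 16-bit checksum) and then distributes its bytes round-robin across the data lanes, which is how CSI-2 spreads a packet over multiple lanes. It assumes the CSI-2 v1.x header layout (2-bit virtual channel, 6-bit data type) and a checksum using the CRC-16 polynomial x^16 + x^12 + x^5 + 1 with seed 0xFFFF, processed LSB first; check both against the specification version you target. The header ECC is not computed: it is passed in as a placeholder byte, and all function names are hypothetical.

```python
from typing import List

CRC_POLY_REFLECTED = 0x8408  # x^16 + x^12 + x^5 + 1, bit-reversed for LSB-first processing


def payload_checksum(payload: bytes) -> int:
    """16-bit CRC over the payload: seed 0xFFFF, LSB of each byte first, no final XOR."""
    crc = 0xFFFF
    for byte in payload:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ CRC_POLY_REFLECTED
            else:
                crc >>= 1
    return crc


def long_packet(vc: int, data_type: int, payload: bytes, ecc: int = 0x00) -> bytes:
    """Header (DI, WC LSB, WC MSB, ECC) + payload + checksum (LSB first).
    ecc is a placeholder; computing the real header ECC is out of scope here."""
    if not (0 <= vc <= 3 and 0 <= data_type <= 0x3F and len(payload) <= 0xFFFF):
        raise ValueError("field out of range for a CSI-2 v1.x long packet")
    data_id = (vc << 6) | data_type  # DI byte: VC in bits 7:6, data type in bits 5:0
    wc = len(payload)                # word count = payload length in bytes
    crc = payload_checksum(payload)
    header = bytes([data_id, wc & 0xFF, wc >> 8, ecc])
    return header + payload + bytes([crc & 0xFF, crc >> 8])


def distribute(packet: bytes, lanes: int) -> List[bytes]:
    """Transmit side: byte i of the packet goes to lane i % lanes."""
    return [packet[i::lanes] for i in range(lanes)]


def merge(lane_data: List[bytes]) -> bytes:
    """Receive side: re-interleave the per-lane bytes into the original order."""
    out = bytearray()
    for i in range(max(len(d) for d in lane_data)):
        for d in lane_data:
            if i < len(d):
                out.append(d[i])
    return bytes(out)


# Usage: one 10-pixel RAW8 line (data type 0x2A) on virtual channel 1, over 3 lanes.
pkt = long_packet(vc=1, data_type=0x2A, payload=bytes(range(10)))
per_lane = distribute(pkt, 3)
print(len(pkt), [len(x) for x in per_lane])  # 16 [6, 5, 5]
assert merge(per_lane) == pkt
```

When the packet length is not a multiple of the lane count, some lanes finish one byte earlier than others, which is why `merge` checks each lane's length on the final pass.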

In a typical system with multiple cameras and displays, CSI-2 and DSI use the same physical layer (D-PHY). Depending on the target application, designers must weigh many considerations during the discovery phase, such as the required bandwidth and the types of devices. These considerations determine the D-PHY version, the number of data lanes, and the lane speed, which in turn determine the number of pins the system needs. Designers can then identify the interfaces and memory their target application requires. For example, some D-PHY-based CSI-2 implementations run at 1.5 Gbps per data lane, while others reach 2.5 Gbps per lane. Lower speeds optimize power consumption and area; just as important, the newer image sensors and displays that support faster speeds are not necessarily suitable for every application.
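
The sizing step above can be approximated with a back-of-the-envelope calculation: multiply resolution, frame rate, and bits per pixel, add a margin, and divide by the per-lane rate to get the number of data lanes. Because each D-PHY lane, including the clock lane, is a differential pair, the pin count follows directly. This is a minimal sketch: the 20% overhead margin, the 4K 60 fps RAW10 example, and the function names are assumptions for illustration, and a real design must also respect the lane counts and rates the chosen PHY and controller actually support.

```python
import math
from typing import Tuple


def lanes_needed(width: int, height: int, fps: float, bits_per_pixel: int,
                 lane_gbps: float, overhead: float = 0.20) -> Tuple[int, float]:
    """Return (data lanes, required Gbps) for one uncompressed video stream.
    overhead is an assumed margin for blanking and packet overhead, not a spec value."""
    pixel_gbps = width * height * fps * bits_per_pixel / 1e9
    required_gbps = pixel_gbps * (1 + overhead)
    return math.ceil(required_gbps / lane_gbps), required_gbps


def dphy_pins(data_lanes: int) -> int:
    """Each D-PHY lane is a differential pair (2 pins); add one clock lane."""
    return 2 * (data_lanes + 1)


# Usage: a hypothetical 3840x2160 RAW10 sensor at 60 fps, at the two lane rates above.
for rate in (1.5, 2.5):
    lanes, gbps = lanes_needed(3840, 2160, 60, 10, lane_gbps=rate)
    print(f"{rate} Gbps/lane: {gbps:.2f} Gbps needed -> {lanes} lanes, {dphy_pins(lanes)} pins")
# 1.5 Gbps/lane: 5.97 Gbps needed -> 4 lanes, 10 pins
# 2.5 Gbps/lane: 5.97 Gbps needed -> 3 lanes, 8 pins
```

For this stream the faster lane rate saves one lane and two pins, while the slower rate may still be preferable where power and area matter more.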

Many applications beyond mobile, such as automotive, IoT, and multimedia applications including augmented/virtual reality, now use multiple cameras and displays. All of these applications need high-speed, low-power camera and display interface solutions to meet today's high-resolution image processing demands. MIPI CSI-2 and DSI are proven interfaces in the mobile market, primarily in smartphones, and because of their success there, they are now being adopted in many new applications. Synopsys offers a broad portfolio of MIPI IP solutions, including controllers, PHYs, verification IP, and IP prototyping kits compliant with the latest MIPI specifications, enabling designers to integrate the required functionality into their mobile, automotive, and IoT SoCs while meeting power, performance, and time-to-market requirements.
