What basic knowledge do you need before developing camera driver software? This article summarizes it.

In terms of independent components, a camera system has two parts: the sensor module and the core board. The core job of the driver software is therefore to establish the connection between the two. The common hardware interfaces are MIPI and USB, and debugging a MIPI interface focuses on the PHY layer; the common software driver frameworks are V4L2 and UVC.

The topic is too large for one article, so this part summarizes the MIPI camera and the USB camera, and another article will follow.

The camera sensor module serves as the image input and provides the data source;

The core board receives the input from the module, processes it, and displays it;

The hardware interface provides the physical connection, and the camera driver software provides the software connection between the two, generally with the help of a mature software driver framework.

00 Common Basic Concepts

Video input technology captures real-world images with a camera through photoelectric conversion and stores them on a storage medium.

The camera module, as a video input device, is a physical device that captures images and is widely used in various fields such as real-time monitoring, vehicle-mounted systems, and video recording.

A local camera is a camera integrated into the device; it is the kind discussed in this article.

A network camera connects directly to the network: it encodes images with a video codec, connects to portable network devices, and then transmits the images over the network back to a human-computer interaction terminal.

MIPI (Mobile Industry Processor Interface) defines a series of interface standards for internal connections in mobile devices. A typical MIPI link carries image data on up to four differential pairs (data lanes) plus one differential pair for the clock. The standard was initially established to reduce the number of connections between the LCD and the main control chip (replacing parallel interfaces) and eventually came to support high-definition displays and cameras.

Taking the RK3399 chip platform as an example, it integrates three MIPI interfaces: DPHY0 (TX0), DPHY1 (TX1RX1), and DPHY2 (RX0). A TX port can only send data, and an RX port can only receive data; RX0 delivers its data to ISP0. Two of the PHYs can receive camera data, and each of them supports 1, 2, or 4 lanes, with support for raw sensors of up to 13M pixels.

01 Sensor Interface

The camera sensor driver interface consists of two aspects: (1) sending and receiving control commands and configuration parameters via the IIC interface to initialize the sensor and configure its parameters (such as resolution, frame rate, exposure, etc.); (2) correctly transmitting and parsing image data via the MIPI interface.

The IIC bus consists of two signal lines (SCL clock line and SDA data line), with the camera sensor typically acting as a slave device, and the master device (such as the processor) completing configuration and control of the slave device via the IIC interface.

IIC function: Through the IIC interface, the master device can send control commands and configuration parameters to the camera sensor, such as setting image resolution, exposure time, white balance, etc.
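As a rough illustration of the configuration path described above, the sketch below builds the byte sequence a master would send for one register write. It assumes a 16-bit register address sent high byte first and an 8-bit value, which is a common layout but not universal; the slave address `0x36`, the register numbers, and the `INIT_TABLE` contents are hypothetical placeholders, not values from any datasheet.

```python
def build_reg_write(slave_addr_7bit: int, reg: int, value: int) -> bytes:
    """Return the bytes placed on the bus for one register write.

    Assumption: 16-bit register address (high byte first), 8-bit value.
    """
    if not 0 <= slave_addr_7bit <= 0x7F:
        raise ValueError("slave address must fit in 7 bits")
    if not 0 <= reg <= 0xFFFF or not 0 <= value <= 0xFF:
        raise ValueError("register or value out of range")
    # The 7-bit address is shifted left; the lowest bit is R/W (0 = write).
    addr_byte = (slave_addr_7bit << 1) | 0
    return bytes([addr_byte, reg >> 8, reg & 0xFF, value])


# Hypothetical init table of (register, value) pairs.
INIT_TABLE = [(0x0103, 0x01), (0x0100, 0x01)]

if __name__ == "__main__":
    for reg, value in INIT_TABLE:
        print(build_reg_write(0x36, reg, value).hex())
```

A real driver hands such tables to the kernel I2C layer rather than assembling the address byte itself; the point here is only the framing of address, register, and value.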

MIPI interface: A high-performance, low-power serial communication interface. It uses differential signaling, which effectively reduces signal interference and power consumption.

MIPI function: Transmits image data from the camera sensor in various encoding formats (such as YUV, RGB, RAW, etc.) and data widths (such as 8-bit, 10-bit, 12-bit, etc.). The data for some advanced features, such as phase detection and HDR, is also carried over the MIPI interface.
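To make the data-width point concrete, here is a minimal sketch of unpacking 10-bit RAW data as it is packed on a CSI-2 link: every 5 bytes carry 4 pixels, with the first four bytes holding the upper 8 bits of each pixel and the fifth byte holding the four 2-bit remainders. The function name `unpack_raw10` is just an illustrative choice.

```python
from typing import List


def unpack_raw10(packed: bytes) -> List[int]:
    """Unpack CSI-2 RAW10 data (4 pixels per 5 bytes) into 10-bit values."""
    if len(packed) % 5:
        raise ValueError("RAW10 data must be a multiple of 5 bytes")
    pixels = []
    for i in range(0, len(packed), 5):
        low_bits = packed[i + 4]  # 2 LSBs of each of the 4 pixels
        for k in range(4):
            high = packed[i + k] << 2
            low = (low_bits >> (2 * k)) & 0x3
            pixels.append(high | low)
    return pixels


if __name__ == "__main__":
    print(unpack_raw10(bytes([0xFF, 0x00, 0x80, 0x01, 0xE4])))
```

RAW8 needs no unpacking, and RAW12 follows the same idea with 2 pixels per 3 bytes.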

The remaining power supply and clock interfaces are connected according to the hardware design.

02 MIPI Interface Parameters

The following are some examples of commonly used MIPI parameters (for reference only; different hardware platforms and standards may vary):

| Parameter Name | Example Specifications | Description |
|---|---|---|
| Data Rate | 1.5 Gbps / 3 Gbps / 6 Gbps | The range of supported data transmission rates, depending on the hardware platform and standards |
| Number of Lanes | 2 lanes / 4 lanes | The number of MIPI lanes supported; increasing the number of lanes can improve data transmission rates |
| Clock Frequency | 100 MHz / 200 MHz | The frequency of the clock signal driving the MIPI interface, affecting data transmission rates and stability |
| Rise/Fall Time | < 100 ps | The rise and fall times of MIPI signals, affecting signal integrity |
| Eye Diagram Opening | > 80% @ 1 Gbps | A key indicator for assessing signal integrity, affecting the reliability and stability of data transmission |

Parameter Description:

(1) Data Rate refers to the speed of data transmission through the MIPI interface, measured in Gbps (gigabits per second).

The supported range of data rates varies by MIPI standard and hardware platform. A higher MIPI rate transmits more image data in the same time, which allows a higher resolution or frame rate. Low-power or low-cost solutions, however, may support only lower data rates.

During debugging, it is necessary to select an appropriate data rate based on system requirements and camera performance, and set it through driver configuration. Debugging tools (such as oscilloscopes, logic analyzers) can monitor the waveform and timing of MIPI signals to ensure the adjusted rate is within the hardware-supported range and that signal quality is good.

(2) Number of Lanes refers to the number of data lanes used by the MIPI interface. Common lane counts include 1, 2, 4, etc. The maximum number of lanes supported varies between cameras and processors. During debugging, it is necessary to select an appropriate number of lanes based on the system's data bandwidth requirements and hardware support.
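The choice of rate and lane count can be estimated with simple arithmetic. The sketch below computes the bit rate a video mode needs and the smallest lane count that covers it. The 20% allowance for blanking and protocol overhead, the 4224x3136 frame size used for a 13M sensor, and the 1.5 Gbps per-lane rate are all assumptions for illustration; real figures come from the sensor and SoC datasheets.

```python
def required_bitrate_bps(width, height, fps, bits_per_pixel, overhead=1.2):
    """Estimate the link bit rate for a video mode.

    overhead is an assumed factor for blanking and protocol overhead.
    """
    return width * height * fps * bits_per_pixel * overhead


def min_lanes(total_bps, lane_rate_bps, options=(1, 2, 4)):
    """Return the smallest lane count whose total rate covers total_bps."""
    for lanes in options:
        if lanes * lane_rate_bps >= total_bps:
            return lanes
    return None  # not achievable with the given per-lane rate


if __name__ == "__main__":
    for name, mode in {
        "1080p30 RAW10": (1920, 1080, 30, 10),
        "13M 30fps RAW10": (4224, 3136, 30, 10),
    }.items():
        need = required_bitrate_bps(*mode)
        print(name, round(need / 1e9, 2), "Gbps ->", min_lanes(need, 1.5e9), "lane(s)")
```

If `min_lanes` returns `None`, the mode needs a higher per-lane rate, a lower frame rate, or a narrower pixel format.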

(3) Clock Frequency refers to the frequency of the clock signal used to drive the MIPI interface. The clock frequency directly affects the data transmission rate and stability.

The specifications of the clock frequency depend on the MIPI standards and hardware design used. Engineers need to set an appropriate clock frequency based on system requirements and hardware specifications.

During debugging, it is necessary to ensure the stability and accuracy of the clock signal to avoid data transmission errors or delays.

(4) Signal Integrity covers parameters such as rise/fall time and eye diagram opening. Their specifications are generally determined by the quality of the transmission line used, the performance of the signal driver and receiver, and the electrical characteristics of the system.

During debugging, it is necessary to use tools such as oscilloscopes and logic analyzers to monitor and analyze the waveform and timing of MIPI signals to ensure signal integrity meets the requirements.

(5) Summary. After modifying MIPI parameters, it is necessary to request the matching sensor settings from the sensor manufacturer, so that the camera operates normally and outputs image data that meets the requirements.

For example, adjusting the MIPI rate is essentially adjusting the clock frequency for data transmission. Therefore, it is necessary to adjust the clock divider inside the sensor to ensure that the sensor’s clock configuration matches the new rate.
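The link between rate and clock can be sketched as follows. On D-PHY the data lanes are sampled on both clock edges, so the per-lane bit rate is twice the high-speed clock frequency. The PLL model below (`output = mclk * multiplier / divider`) is a deliberately simplified, hypothetical one; real sensors have multi-stage PLLs whose registers and valid ranges are defined in the datasheet.

```python
def dphy_hs_clock_hz(lane_rate_bps):
    """D-PHY transfers data on both clock edges: clock = bit rate / 2."""
    return lane_rate_bps / 2


def find_pll_setting(mclk_hz, target_hz,
                     mult_range=range(1, 256), div_range=range(1, 17)):
    """Search a hypothetical single-stage PLL for the closest output.

    Returns (multiplier, divider, output_hz) with the smallest error;
    on ties the first combination found (smallest divider) is kept.
    """
    best = None
    for div in div_range:
        for mult in mult_range:
            out = mclk_hz * mult / div
            err = abs(out - target_hz)
            if best is None or err < best[0]:
                best = (err, mult, div, out)
    return best[1], best[2], best[3]


if __name__ == "__main__":
    clock = dphy_hs_clock_hz(1.5e9)  # 1.5 Gbps per lane
    print(clock, find_pll_setting(24_000_000, clock))
```

With a 24 MHz input clock, a 1.5 Gbps lane rate requires a 750 MHz clock, which this toy model reaches with a multiplier of 125 and a divider of 4.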

After changing the number of lanes, data must remain synchronized across the lanes in multi-lane transmission. The sensor's synchronization mechanism, which relies on the common clock and synchronization signals, may therefore need to be adjusted.

03 MIPI – CSI Hardware Interface

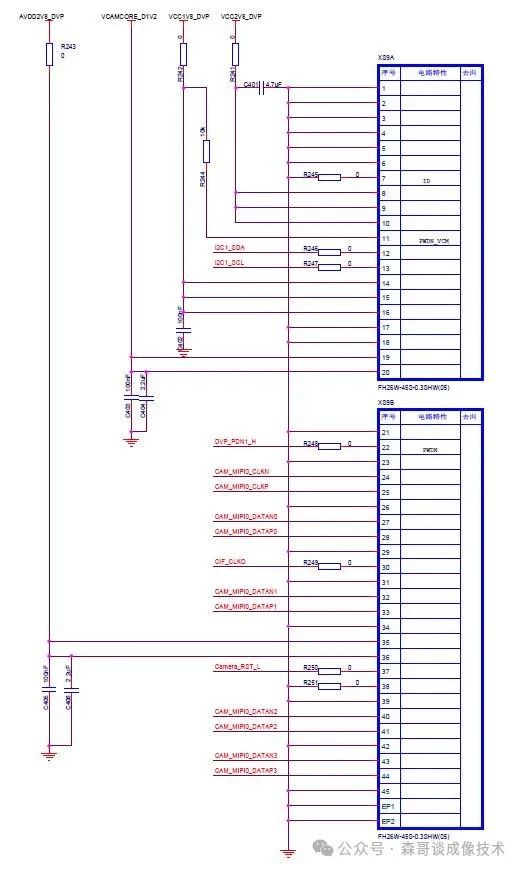

The camera module takes the OV13850 module as an example. The hardware uses the MIPI CSI interface, and on the software side the Android platform drives and configures the OV13850 module at the hardware abstraction layer.

The camera control uses the MIPI-CSI mode, which includes not only the MIPI interface but also three power supplies, I2C, MCLK, and GPIO pins.

The three power supplies are VDDIO (the IO power supply, kept at the same voltage as the MIPI signal lines), AVDD (analog power supply, 2.8V or 3.3V), and DVDD (digital power supply, 1.5V or higher).

GPIO mainly includes power enable pins, PowerDown pins, reset pins, etc. The schematic is as follows:

04 MIPI PHY Physical Layer

01 The MIPI Protocol Architecture

The overall architecture of the MIPI interface includes the physical layer, protocol layer, and application layer.

The application layer provides data to the protocol layer and specifies the transmission requirements and rules;

The protocol layer packages the data into a format suitable for transmission based on these requirements and rules, providing a framework and rules for data transmission to the physical layer;

The physical layer (PHY) is responsible for sending the data packaged by the protocol layer through the physical medium. The PHY layer is directly connected to the physical medium and converts between digital data and physical signals, ensuring that data is transmitted correctly and efficiently.
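As a small illustration of what "packaging" means at the protocol layer, the sketch below builds the first three bytes of a CSI-2 long packet header for one image line: the Data ID (2-bit virtual channel plus 6-bit data type) followed by the 16-bit Word Count, low byte first. The ECC byte that completes the header and the checksum footer are omitted to keep the example short, so this is not a complete packet builder.

```python
# CSI-2 data type codes for common RAW formats.
DATA_TYPES = {"RAW8": 0x2A, "RAW10": 0x2B, "RAW12": 0x2C}
BITS_PER_PIXEL = {"RAW8": 8, "RAW10": 10, "RAW12": 12}


def line_header(virtual_channel: int, fmt: str, width_px: int) -> bytes:
    """Return Data ID + Word Count for one image line (ECC byte omitted)."""
    if not 0 <= virtual_channel <= 3:
        raise ValueError("virtual channel must be 0..3")
    line_bits = width_px * BITS_PER_PIXEL[fmt]
    if line_bits % 8:
        raise ValueError("line does not fill a whole number of bytes")
    word_count = line_bits // 8  # payload length in bytes
    if word_count > 0xFFFF:
        raise ValueError("line too long for a 16-bit word count")
    data_id = (virtual_channel << 6) | DATA_TYPES[fmt]
    return bytes([data_id, word_count & 0xFF, word_count >> 8])


if __name__ == "__main__":
    print(line_header(0, "RAW10", 1920).hex())
```

When a receiver reports a mismatch between the configured format and the incoming stream, these two fields are usually the first thing to compare against the sensor settings.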

02 MIPI PHY

MIPI interface debugging focuses on the PHY for three reasons:

(1) The PHY layer is the foundation of data transmission; as the physical connection, it directly affects transmission quality.

(2) The PHY layer is complex; the MIPI standard defines several PHY specifications for different scenarios, involving many technical details such as differential signal transmission, clock recovery, and data encoding and decoding.

(3) The PHY layer is convenient for debugging and for locating issues quickly; tools such as oscilloscopes and logic analyzers can directly observe and measure signal waveforms, timing, and levels.

03 Types of MIPI PHY

| Type | Lanes | Operating Mode and Features | Main Applications |
|---|---|---|---|
| C-PHY | Each lane (trio) uses three signal wires | Very high transmission rates | Image capture devices |
| D-PHY | Each lane typically consists of a pair of differential lines | Supports both high-speed and low-power modes | Display devices |
| Combo-PHY | Combines the features of C-PHY and D-PHY | Can switch between C-PHY and D-PHY modes for flexible data transmission | |

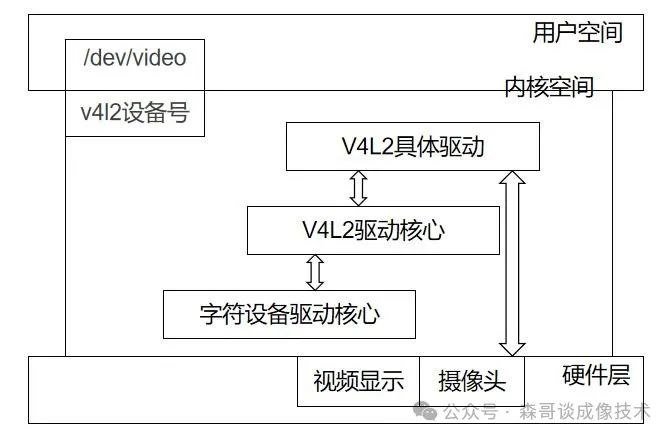
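A quick way to compare the PHY types is to count wires and throughput. The sketch below assumes the usual D-PHY arrangement of N data lanes plus one clock lane (two wires each) and the C-PHY arrangement of three wires per lane with no separate clock, where 16 bits are mapped onto 7 symbols. The 1.5 Gbps and 1.5 Gsym/s figures are arbitrary example rates, not limits of either standard.

```python
def dphy_link(lanes, gbps_per_lane):
    """D-PHY: each data lane and the clock lane use one differential pair."""
    return {"wires": 2 * (lanes + 1), "gbps": lanes * gbps_per_lane}


def cphy_link(trios, gsym_per_s):
    """C-PHY: three wires per lane, 16 bits encoded in every 7 symbols."""
    return {"wires": 3 * trios, "gbps": trios * gsym_per_s * 16 / 7}


if __name__ == "__main__":
    print("D-PHY 4 lanes:", dphy_link(4, 1.5))
    print("C-PHY 3 trios:", cphy_link(3, 1.5))
```

Under these assumptions a three-lane C-PHY link uses one wire fewer than a four-lane D-PHY link while carrying more data, which is why C-PHY is described as having very high transmission rates.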

05 Software Driver Architecture V4L2

01 Driver Software Code

V4L2 (Video4Linux2) is a generic API for video devices in the Linux kernel, supporting video capture, streaming playback, video output, etc.

V4L2 supports three types of devices: video input/output devices, VBI devices, and radio devices, with the most widely used video input device being the camera input device.

The core source directory of V4L2 is /kernel/drivers/media/v4l2-core/.

The core of the V4L2 driver, v4l2-dev.c, handles registration of the main structure video_device and creates device nodes such as /dev/videoX.

Key structures in V4L2 include v4l2_device, v4l2_subdev, video_device, etc.

Common ioctl commands in V4L2 include VIDIOC_QUERYCAP, VIDIOC_S_FMT, etc.

The core process of driver code:

(1) Initialize the V4L2 device and register the device node (such as /dev/video0);

(2) Register camera sub-devices, such as ISP and sensor devices;

(3) Query the supported functionalities of the device using VIDIOC_QUERYCAP;

(4) Set image resolution, pixel format, etc. using VIDIOC_S_FMT;

(5) Request buffers using VIDIOC_REQBUFS and map the buffers to user space using mmap;

(6) Control the start and stop of video streams using VIDIOC_STREAMON and VIDIOC_STREAMOFF.
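From user space, step (3) can be sketched as below. The example derives the ioctl request numbers with the common Linux `_IOC` bit layout (used on x86 and ARM; a few architectures differ) and parses the 104-byte `struct v4l2_capability`. Only the capability query is implemented; format setting, buffer requests, and mmap are left out, and `/dev/video0` is simply the example node from the list above.

```python
import fcntl
import struct

_IOC_WRITE, _IOC_READ = 1, 2


def _ioc(direction, type_char, nr, size):
    """Encode an ioctl request number (common Linux layout)."""
    return (direction << 30) | (size << 16) | (ord(type_char) << 8) | nr


# struct v4l2_capability: driver[16], card[32], bus_info[32],
# version, capabilities, device_caps, reserved[3]
CAP_FMT = "<16s32s32sIII3I"
VIDIOC_QUERYCAP = _ioc(_IOC_READ, "V", 0, struct.calcsize(CAP_FMT))
VIDIOC_STREAMON = _ioc(_IOC_WRITE, "V", 18, 4)   # argument is an int
VIDIOC_STREAMOFF = _ioc(_IOC_WRITE, "V", 19, 4)

V4L2_CAP_VIDEO_CAPTURE = 0x00000001
V4L2_CAP_STREAMING = 0x04000000


def parse_capability(buf: bytes) -> dict:
    """Decode a raw v4l2_capability buffer into a small dict."""
    driver, card, _bus, _ver, caps, _dev_caps, *_ = struct.unpack(CAP_FMT, buf)
    return {
        "driver": driver.split(b"\0", 1)[0].decode(),
        "card": card.split(b"\0", 1)[0].decode(),
        "can_capture": bool(caps & V4L2_CAP_VIDEO_CAPTURE),
        "can_stream": bool(caps & V4L2_CAP_STREAMING),
    }


def query_capability(path="/dev/video0") -> dict:
    """Open the device node and issue VIDIOC_QUERYCAP."""
    with open(path, "rb+", buffering=0) as dev:
        buf = bytearray(struct.calcsize(CAP_FMT))
        fcntl.ioctl(dev, VIDIOC_QUERYCAP, buf)  # kernel fills buf in place
        return parse_capability(bytes(buf))
```

On a machine with a camera attached, `query_capability()` returns the driver name and whether the node supports capture and streaming; without a device the `open` call raises `OSError`.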

02 V4L2 Framework Architecture

- Core Driver Framework of V4L2: The core part of the V4L2 framework is mainly responsible for constructing a standard video device driver framework in the kernel and providing unified interface functions. It includes functionalities such as registering, unregistering, and querying video devices, as well as interaction interfaces with sub-devices.

- Lower Layer Interface Driver Framework of V4L2: Responsible for connecting specific video devices (such as camera sensors) to the V4L2 framework. It includes implementations of hardware-related interfaces, such as I2C, SPI communication protocols, and interaction logic with sub-devices like sensors.

- Specific Driver for Sub-Devices: Sub-device drivers (such as sensor drivers, ISP drivers, etc.) interact with the main device through the interfaces provided by V4L2. They implement specific hardware control logic, such as sensor initialization and image data acquisition.

- Character Device Interface: In Linux, all peripherals are treated as files. The V4L2 framework provides access to video devices to user space through character device interfaces (like /dev/videoX). User space applications can access video devices through standard file operation interfaces (like open, read, write, ioctl, etc.).

- Video Buffer Management: The V4L2 framework provides a management mechanism for video buffers to efficiently transfer video data between kernel space and user space. This is typically implemented through modules like videobuf2.
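The buffer cycle behind this mechanism can be pictured with a toy model. The class below is not the videobuf2 API; it is a hypothetical pure-Python stand-in that mimics the two queues involved: buffers handed to the driver (as after QBUF) and filled buffers waiting for the application (as before DQBUF).

```python
from collections import deque


class BufferRing:
    """Toy model of the queue/dequeue cycle; not the videobuf2 API."""

    def __init__(self, count):
        self.incoming = deque(range(count))  # queued, waiting for the driver
        self.done = deque()                  # filled, waiting for the app
        self.data = {}

    def driver_fill(self, frame):
        """Driver side: fill the oldest queued buffer, or drop the frame."""
        if not self.incoming:
            return False  # no free buffer: the frame is lost
        index = self.incoming.popleft()
        self.data[index] = frame
        self.done.append(index)
        return True

    def dequeue(self):
        """Application side (like DQBUF): take the oldest filled buffer."""
        index = self.done.popleft()
        return index, self.data.pop(index)

    def requeue(self, index):
        """Application side (like QBUF): return the buffer to the driver."""
        self.incoming.append(index)


if __name__ == "__main__":
    ring = BufferRing(2)
    for frame in ("f0", "f1", "f2"):
        print(frame, "stored" if ring.driver_fill(frame) else "dropped")
    index, frame = ring.dequeue()
    ring.requeue(index)
    print("processed", frame, "from buffer", index)
```

The model shows why the application must requeue buffers promptly: once every buffer is either filled or held by the application, new frames are dropped.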
