1. Deployment Method

This deployment runs on openEuler 22.03 LTS SP4 with two Ascend NPUs and launches the MindIE image with Docker. The model is DeepSeek-R1-Distill-Qwen-32B, called deepseek-32b in this guide. The deployment process is as follows:

1. Install the server operating system
2. Install the NPU drivers
3. Install Docker and download the MindIE image
4. Download the large model files
5. Launch the MindIE image and load the large model
6. Test the output of the large model

2. Deployment Process

1. Install the Operating System

Choose a minimal installation of openEuler 22.03 LTS SP4. Installation tutorials are easy to find online.

2. Install NPU Drivers and Firmware

Download the drivers and firmware that match your NPU, and choose the .run format files.

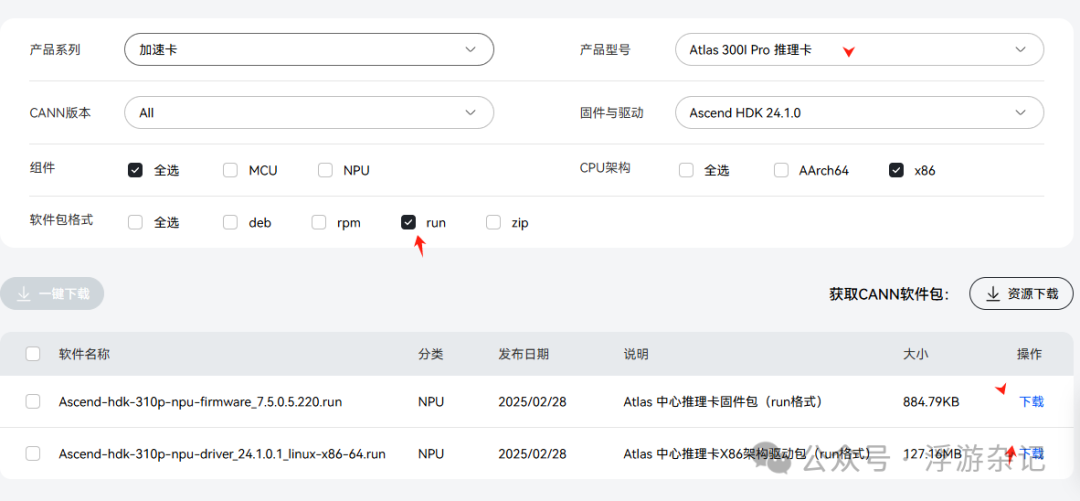


Download page:

https://www.hiascend.com/hardware/firmware-drivers/community?product=2&model=15&cann=All&driver=Ascend+HDK+24.1.0

Add execution permissions, then verify the package:

chmod +x *.run

./xx.run --check # Verify the consistency and integrity of the .run installation package

If the following output appears, the package verification succeeded:


Verifying archive integrity... 100% SHA256 checksums are OK. All good.

./xx.run --install # Install the driver

If the following key output appears, the driver installation succeeded:

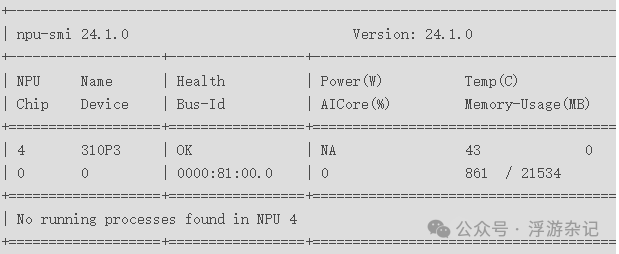

Driver package installed successfully!

Run npu-smi info to check the NPU status.
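You can script the verify-then-install sequence so that no package is installed unless every package passes its --check. This is a minimal sketch. It assumes Python 3 on the host and assumes the .run files accept the same --check and --install flags used above. check_and_install and run_command are hypothetical helper names. Packages are installed in the order you pass them, so follow Huawei's documentation for the required driver and firmware order.

```python
import subprocess
from pathlib import Path
from typing import Callable, Sequence

# A runner takes an argument list and returns the command's text output.
Runner = Callable[[Sequence[str]], str]


def run_command(cmd: Sequence[str]) -> str:
    """Run cmd, raise CalledProcessError on a non-zero exit, return stdout+stderr."""
    result = subprocess.run(list(cmd), check=True, capture_output=True, text=True)
    return result.stdout + result.stderr


def check_and_install(packages: Sequence[Path], run: Runner = run_command) -> None:
    """Verify every .run package with --check before installing any of them."""
    for pkg in packages:
        # Same effect as `chmod +x` on the package file.
        pkg.chmod(pkg.stat().st_mode | 0o111)
        output = run([str(pkg), "--check"])
        # "All good" is the success marker shown in the verification output above.
        if "All good" not in output:
            raise RuntimeError(f"integrity check failed for {pkg}")
    # Install in the order given (hypothetical choice; check the official order).
    for pkg in packages:
        run([str(pkg), "--install"])
    # Print the NPU status once installation has finished.
    print(run(["npu-smi", "info"]))
```

The run parameter exists so the sequence can be dry-run with a fake runner before you point it at real packages, for example with check_and_install(sorted(Path(".").glob("*.run"))).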

3. Install Docker

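openEuler is an RPM-based distribution, so use dnf rather than apt-get. The docker-ce packages come from Docker's own repository, which you must configure first. The openEuler repositories also provide their own docker package.

sudo dnf install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

sudo systemctl status docker # Check service status

sudo systemctl start docker # Start docker service

sudo systemctl stop docker # Stop docker service

sudo systemctl restart docker # Restart docker service

Download the MindIE image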

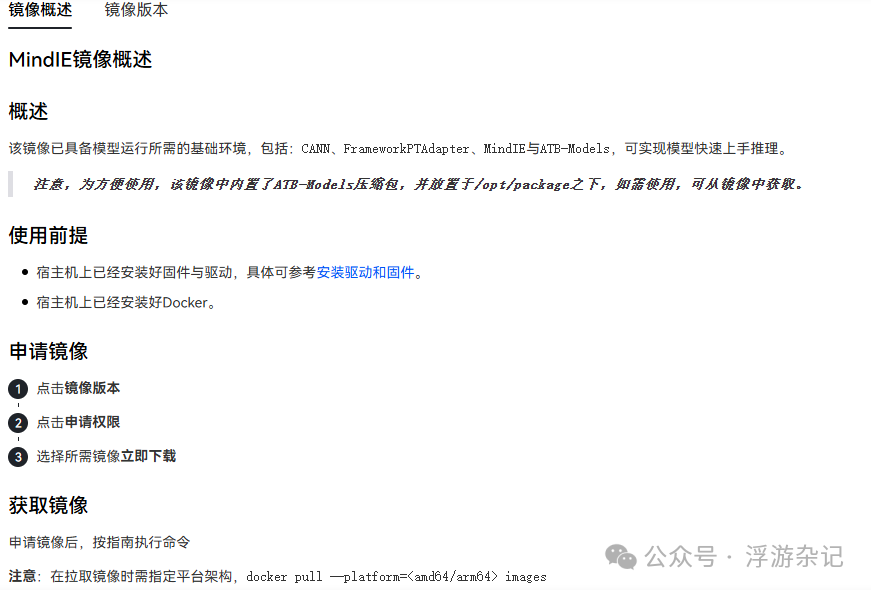

You must request permission before you can download the container image. The download link is:

https://www.hiascend.com/developer/ascendhub/detail/af85b724a7e5469ebd7ea13c3439d48f

4. Download Model Files

Download the DeepSeek 32B model files from the ModelScope community:

https://www.modelscope.cn/models/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B/files

Install the Python modelscope module as prompted:

pip install modelscope

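modelscope download --model deepseek-ai/DeepSeek-R1-Distill-Qwen-32B --local_dir /path-to-weights

Replace /path-to-weights with the directory that should hold the weights. The same directory is mounted into the container in the next step.

5. Launch the MindIE image and load the large model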
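Before launching the container, you may want to confirm that the model download is complete. This sketch assumes the weights use the standard Hugging Face sharded-safetensors layout: a config.json plus a model.safetensors.index.json whose "weight_map" maps tensor names to shard file names. missing_shards is a hypothetical helper name.

```python
import json
from pathlib import Path
from typing import List


def missing_shards(model_dir: str) -> List[str]:
    """Return shard files referenced by model.safetensors.index.json that are absent.

    Raises FileNotFoundError if config.json or the index file itself is missing.
    """
    root = Path(model_dir)
    for required in ("config.json", "model.safetensors.index.json"):
        if not (root / required).is_file():
            raise FileNotFoundError(root / required)
    index = json.loads((root / "model.safetensors.index.json").read_text())
    # Several tensors usually share one shard, so deduplicate the file names.
    shard_names = sorted(set(index["weight_map"].values()))
    return [name for name in shard_names if not (root / name).is_file()]
```

For example, missing_shards("/path-to-weights") should return an empty list once the download has finished. The check only confirms that each shard file exists. It does not verify the file contents.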

Add one --device=/dev/davinciN entry for each NPU device. The example below maps davinci0 through davinci7. Keep only the entries for the NPUs that are actually present, for example davinci0 and davinci1 on a two-NPU machine. The first -v mount is the driver directory, the last -v mount is the model weights directory, and the final line names the container image.

docker run -it -d --net=host --shm-size=2g \
--user mindieuser:<HDK-user-group> \
--name <container-name> \
--device=/dev/davinci_manager \
--device=/dev/hisi_hdc \
--device=/dev/devmm_svm \
--device=/dev/davinci0 \
--device=/dev/davinci1 \
--device=/dev/davinci2 \
--device=/dev/davinci3 \
--device=/dev/davinci4 \
--device=/dev/davinci5 \
--device=/dev/davinci6 \
--device=/dev/davinci7 \
-v /usr/local/Ascend/driver:/usr/local/Ascend/driver:ro \
-v /usr/local/sbin:/usr/local/sbin:ro \
-v /path-to-weights:/path-to-weights:ro \
mindie:1.0.0-800I-A2-py311-openeuler24.03-lts bash

Enter the container to modify the configuration file:

docker exec -it <container-name> bash
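The --device list has to match the NPUs on the host, so you can also generate the docker run arguments from a list of NPU IDs. This is a sketch that reproduces the flags of the command above. mindie_docker_args is a hypothetical helper. The placeholders <container-name>, <HDK-user-group> and /path-to-weights are passed through unchanged and must be replaced with real values.

```python
import shlex
from typing import List, Sequence

MINDIE_IMAGE = "mindie:1.0.0-800I-A2-py311-openeuler24.03-lts"


def mindie_docker_args(npu_ids: Sequence[int], container_name: str,
                       user_group: str, weights_dir: str,
                       image: str = MINDIE_IMAGE) -> List[str]:
    """Return docker run arguments with one /dev/davinciN device per NPU ID."""
    if not npu_ids:
        raise ValueError("at least one NPU ID is required")
    args = [
        "docker", "run", "-it", "-d", "--net=host", "--shm-size=2g",
        "--user", f"mindieuser:{user_group}",
        "--name", container_name,
        # Management devices mapped in every variant of the command above.
        "--device=/dev/davinci_manager",
        "--device=/dev/hisi_hdc",
        "--device=/dev/devmm_svm",
    ]
    # One device node per NPU that the container should see.
    args += [f"--device=/dev/davinci{i}" for i in npu_ids]
    args += [
        "-v", "/usr/local/Ascend/driver:/usr/local/Ascend/driver:ro",  # driver directory
        "-v", "/usr/local/sbin:/usr/local/sbin:ro",
        "-v", f"{weights_dir}:{weights_dir}:ro",  # model weights directory
        image, "bash",
    ]
    return args


# Two-NPU setup from this guide; the placeholders are printed as-is.
print(shlex.join(mindie_docker_args([0, 1], "<container-name>",
                                    "<HDK-user-group>", "/path-to-weights")))
```

Printing the command first makes the device list easy to review. Passing the same list to subprocess.run(args, check=True) would then start the container.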

Configuration parameter reference:

https://www.hiascend.com/document/detail/zh/mindie/100/mindieservice/servicedev/mindie_service0285.html

Modify $MIES_INSTALL_PATH/conf/config.json as follows:

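npuDeviceIds:[0,1] // IDs of the NPUs to use, one entry per NPU

truncation:false

modelName:deepseek-32b // Model name; any name works, but the test request must use the same one

modelWeightPath: // Path to the model weights inside the container (the directory mounted with -v)

worldSize:2 // Number of NPUs used; should match the number of entries in npuDeviceIds

enabled:false

maxIterTimes:5210

Start the inference service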
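Before starting the service, you can apply the config.json edits listed above with a script instead of by hand. This is a minimal sketch, and its approach is an assumption: it overwrites every occurrence of each key name anywhere in the JSON tree, so it does not depend on the nesting of MindIE's template. That also means an ambiguous key such as enabled, which may appear in more than one section, needs a more targeted edit. set_keys and update_config are hypothetical helpers.

```python
import json
from pathlib import Path
from typing import Any, Dict


def set_keys(node: Any, updates: Dict[str, Any]) -> int:
    """Recursively overwrite keys found in updates; return how many were replaced."""
    hits = 0
    if isinstance(node, dict):
        for key, value in list(node.items()):
            if key in updates:
                node[key] = updates[key]
                hits += 1
            else:
                hits += set_keys(value, updates)
    elif isinstance(node, list):
        for item in node:
            hits += set_keys(item, updates)
    return hits


def update_config(path: str, updates: Dict[str, Any]) -> None:
    """Apply updates to a JSON config in place, keeping a .bak copy of the original."""
    config_path = Path(path)
    original = config_path.read_text()
    config = json.loads(original)
    for key, value in updates.items():
        # Refuse to silently ignore a misspelled or missing key.
        if set_keys(config, {key: value}) == 0:
            raise KeyError(f"{key!r} not found in {config_path}")
    config_path.with_name(config_path.name + ".bak").write_text(original)
    config_path.write_text(json.dumps(config, indent=4))
```

As a usage example, calling update_config with the path to $MIES_INSTALL_PATH/conf/config.json and {"modelName": "deepseek-32b", "worldSize": 2, "truncation": False, "maxIterTimes": 5210} would apply those values. Keep whatever list shape the template already uses if you also set npuDeviceIds.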

cd $MIES_INSTALL_PATH

nohup ./bin/mindieservice_daemon > output.log 2>&1 &

Standard output is captured in output.log. The following message indicates that the service started successfully:

Daemon start success!

6. Test the output of the large model
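Loading the weights can take a while, so a test script may want to wait for the success message in output.log before it sends requests. This sketch polls the log file for the "Daemon start success!" line shown above. The 600-second default timeout is an arbitrary assumption, and wait_for_daemon is a hypothetical helper.

```python
import time
from pathlib import Path


def wait_for_daemon(log_file: str, timeout_s: float = 600.0, poll_s: float = 2.0) -> bool:
    """Return True once log_file contains the success line, False after timeout_s."""
    deadline = time.monotonic() + timeout_s
    path = Path(log_file)
    while True:
        # The log may not exist yet right after the daemon is launched.
        if path.is_file() and "Daemon start success!" in path.read_text(errors="replace"):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)
```

When run from $MIES_INSTALL_PATH, wait_for_daemon("output.log") returns False on timeout. At that point, read output.log for the error messages.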

curl 127.0.0.1:1025/generate -d '{"prompt": "What is Python?", "max_tokens": 150, "stream": false, "do_sample": true, "repetition_penalty": 1.5, "temperature": 0.7, "top_p": 0.95, "top_k": 100, "model": "deepseek-32b"}'

The "model" field must match the modelName set in config.json.

This completes the deployment of the large model on Ascend NPUs. The main hurdle is obtaining the MindIE container image, which requires requesting access from Huawei. Alternatively, you can build the container image yourself from the official sources.

Note: Do not install an operating system that is too new. The NPU driver supports openEuler only up to 22.03 LTS SP4. If errors occur during installation, resolve them according to the error messages.
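You can also send the curl test from step 6 from Python, using only the standard library. This sketch posts the same prompt and sampling settings to http://127.0.0.1:1025/generate. The field names use the underscore form (max_tokens, do_sample, repetition_penalty, top_p, top_k), which is an assumption to check against your MindIE version. build_generate_request and generate are hypothetical helper names.

```python
import json
import urllib.request


def build_generate_request(prompt: str, model: str = "deepseek-32b",
                           host: str = "127.0.0.1", port: int = 1025) -> urllib.request.Request:
    """Build a JSON POST request mirroring the curl test in step 6."""
    payload = {
        "prompt": prompt,
        "max_tokens": 150,
        "stream": False,
        "do_sample": True,
        "repetition_penalty": 1.5,
        "temperature": 0.7,
        "top_p": 0.95,
        "top_k": 100,
        "model": model,  # must match modelName in config.json
    }
    return urllib.request.Request(
        f"http://{host}:{port}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout_s: float = 120.0) -> str:
    """Send the request and return the raw response body as text."""
    with urllib.request.urlopen(build_generate_request(prompt), timeout=timeout_s) as resp:
        return resp.read().decode("utf-8")
```

Calling generate("What is Python?") on the server host returns the raw response body. The body is returned unparsed because its exact format depends on the MindIE version.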