Source: Semiconductor Industry Overview, Author: Feng Ning

Data centers are a hot topic.

In the most recent quarter, the data center-related shipments of NVIDIA, Broadcom, AMD, Intel, Marvell, SK Hynix, Micron, and Samsung reached an annualized run rate of more than $220 billion (excluding power chips).

With the rapid expansion of large language models (LLMs), data center semiconductor spending is expected to exceed $500 billion by 2030, accounting for more than 50% of the entire semiconductor industry.

So which types of chips stand to benefit from the rise of data centers? First, let’s look at what a data center is.

01 What Is a Data Center?

By operator, data centers can be divided into IDCs (Internet Data Centers), EDCs (Enterprise Data Centers), and NSCs (National Supercomputing Centers).

An IDC is a standardized, carrier-grade facility that telecom operators build on top of their existing internet lines and bandwidth resources. It provides customers with server hosting, leasing, and related value-added services over the internet.

An EDC is a data center built and owned by an enterprise or institution to serve its own business. It is the core computing environment for data processing, storage, and exchange, providing data processing, data access, and application support services for the enterprise, its customers, and its partners.

An NSC is a supercomputing center established by the state and equipped with high-performance computers of trillion-level computing capability.

By scale, data centers can be divided into ultra-large, large, and small-to-medium data centers.

Ultra-large data centers: data centers with more than 10,000 standard racks. They provide high-capacity, high-performance data storage and processing services to large enterprises and internet service providers worldwide, supporting data mining, machine learning, and artificial intelligence workloads for enterprises and research institutions.

Large data centers: data centers with between 3,000 and 10,000 standard racks, providing data storage and processing services to large enterprises and internet companies.

Small and medium data centers: data centers with fewer than 3,000 standard racks, providing data storage and processing services to small and medium-sized enterprises.

As data center construction expands, demand for chips naturally surges, and the following chip markets stand to benefit.

02 These Types of Chips Are More Attractive

Projections indicate that by 2030, GPU/AI accelerators will account for 60% of data center semiconductor spending, AI extended network chips for 15%, CPUs (x86 and Arm) for 10%, storage chips for 10%, and power, BMC, and other chips for 5%.
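To make this projected split concrete, the short Python sketch below converts the quoted 2030 shares into dollar amounts. It assumes, purely for illustration, that total data center semiconductor spending equals the $500 billion figure from the forecast; because that forecast says spending will *exceed* $500 billion, the results are lower bounds. The names `SHARES_2030`, `TOTAL_BILLION_USD`, and `implied_spend` are hypothetical.

```python
# Projected 2030 shares of data center semiconductor spending, as quoted in the article.
SHARES_2030 = {
    "GPU/AI accelerators": 0.60,
    "AI extended network chips": 0.15,
    "CPU (x86 and Arm)": 0.10,
    "Storage chips": 0.10,
    "Power, BMC, etc.": 0.05,
}

# Assumption: total spending equals the $500 billion floor cited for 2030.
TOTAL_BILLION_USD = 500.0


def implied_spend(shares, total):
    """Return each category's implied spend (billions of USD).

    Raises ValueError if the shares do not add up to 100%.
    """
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 100%")
    return {name: share * total for name, share in shares.items()}


if __name__ == "__main__":
    for name, amount in implied_spend(SHARES_2030, TOTAL_BILLION_USD).items():
        print(f"{name}: ${amount:.0f}B")
```

Under that assumption, GPU/AI accelerators alone would represent about $300 billion, AI extended network chips about $75 billion, CPUs and storage chips about $50 billion each, and power, BMC, and other chips about $25 billion.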

GPU/AI accelerators are the core source of computing power. They are mainly used for AI training and inference and for high-performance computing, and they work alongside CPUs in heterogeneous systems to improve computing efficiency.

AI extended network chips build high-bandwidth, low-latency networks: they provide high-speed interconnection between GPUs, offload network tasks from the CPU, and optimize AI traffic.

CPUs serve as the control center: they manage system resources, schedule tasks, handle general-purpose computing and protocol processing, and coordinate heterogeneous computing.

HBM and other storage chips work with GPU/AI accelerators in high-performance computing scenarios, providing the high-speed storage and read capability that large-scale data processing requires.

Power, BMC (baseboard management controller), and other chips ensure a stable power supply and enable remote monitoring and management of data center equipment, keeping the system running reliably.

Accordingly, more and more chip companies are betting on this market.

03 Which Data Center Chips Are More Profitable?

Currently, almost all data center semiconductor revenue is concentrated in nine companies: NVIDIA, TSMC, Broadcom, Samsung, AMD, Intel, Micron, SK Hynix, and Marvell.

GPU/AI Accelerators: NVIDIA Is the Biggest Winner

In the GPU/AI accelerator market, NVIDIA is the biggest winner, with AMD and Broadcom as its main competitors.

In the most recent quarter, NVIDIA’s revenue from AI accelerators was $33 billion, Broadcom’s was about $4 billion, Marvell’s was about $1 billion, and AMD’s was less than $1 billion.

Chinese GPU companies are also challenging this market. Huawei’s Ascend 910B, Biren’s BR100, Iluvatar CoreX’s Tiangai 100, Hygon’s DCU K100, and Moore Threads’ MTT S4000 are all credible offerings, but Chinese GPU companies still hold a relatively small market share compared with the international giants.

CPUs: Many Highlights

The CPU market has seen plenty of notable developments.

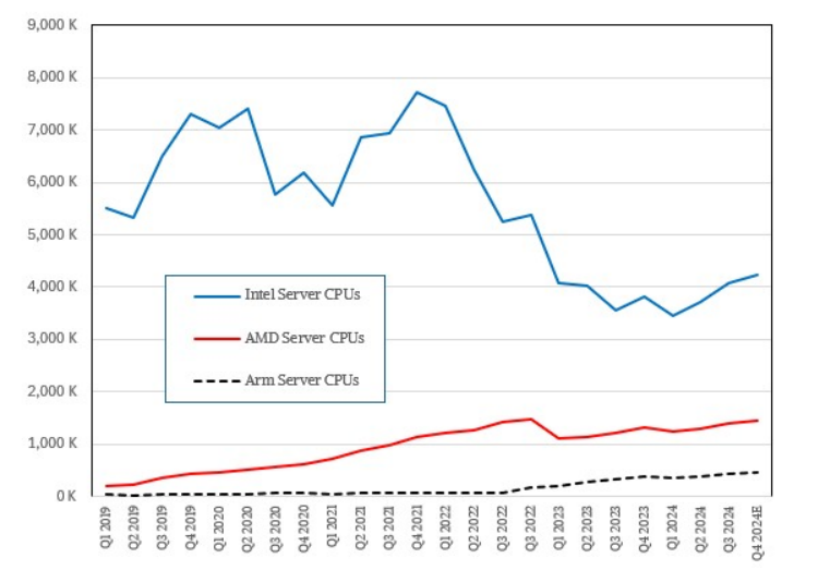

For more than twenty years, Intel was the undisputed leader in the data center CPU market, with its Xeon processors powering most servers worldwide. By contrast, as recently as seven or eight years ago, AMD held only a single-digit share of the market.

Now, the situation has changed dramatically.

Although Intel’s Xeon CPUs still power most servers, a growing number of new servers, especially high-end systems, are opting for AMD EPYC processors.

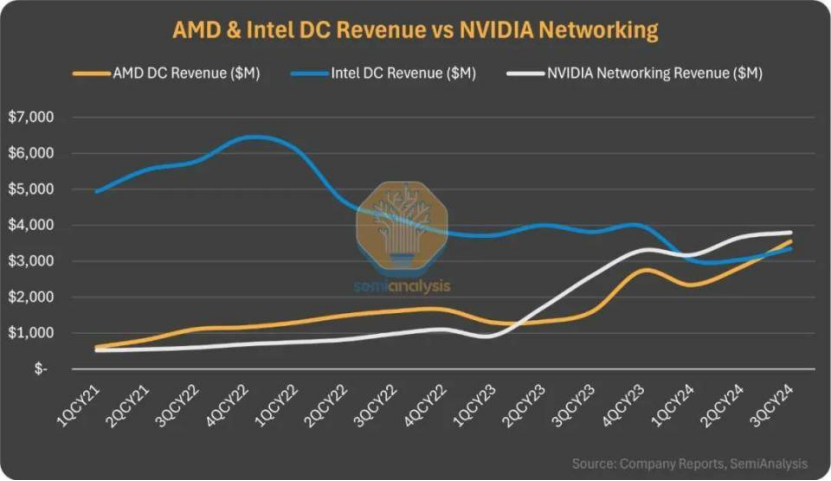

In November last year, independent research firm SemiAnalysis pointed out that sales of AMD’s data center business had surpassed those of Intel’s data center and AI business.

Reportedly, AMD’s latest-generation EPYC processors have gained a competitive edge over Intel’s Xeon CPUs, forcing Intel to sell its server chips at steep discounts and eroding the company’s revenue and profit margins.

The following chart clearly shows that since the second quarter of 2022, Intel’s Xeon server CPU shipments have been declining.

Arm recently made a bold claim: by the end of this year, its share of the global data center CPU market will soar to 50%, up from roughly 15% last year. Mohamed Awad, head of Arm’s infrastructure business, said this growth is driven mainly by the rapid development of the AI industry.

Arm CPUs typically act as the “host” chips in AI computing systems, responsible for scheduling the other AI chips. For example, some of NVIDIA’s high-end AI systems pair an Arm-based CPU called Grace with two Blackwell GPUs. Awad noted that in many cases Arm chips consume less power than Intel and AMD products. Because AI data centers consume enormous amounts of power, cloud computing companies increasingly prefer Arm chips.

In addition, data center chips typically incorporate more Arm intellectual property, so Arm earns far higher royalties on them than on chips aimed at other devices.

Moreover, the data center CPU market has recently welcomed back an “old friend”: Qualcomm.

Qualcomm recently announced a partnership with HUMAIN, an AI company under Saudi Arabia’s sovereign wealth fund, the Public Investment Fund (PIF), to develop and supply advanced data center CPUs and AI solutions.

As noted above, the data center market already has Intel and AMD, with Arm close behind, so competition here is far fiercer than in the mobile CPU market.

Qualcomm has already failed once in the data center CPU market. Back in 2017, it launched the Arm-based Centriq 2400 processor in an attempt to break the x86 duopoly of Intel and AMD. However, because of inadequate software ecosystem support and performance that fell short of expectations, the project was quietly terminated in 2019.

Years later, Qualcomm is making a comeback with a completely different strategy.

Qualcomm had earlier acquired Nuvia to obtain its Phoenix architecture and integrated this in-house architecture into its own platforms. Nuvia’s core team came from Apple’s A-series chip team, and the Oryon cores based on its design delivered excellent energy efficiency, causing a stir in the industry at the time. Starting in 2023, Qualcomm turned its focus to PC CPUs, bringing its Snapdragon X Elite processors to the consumer PC market.

Chinese CPU companies have also made good progress in data center chips, with products including the Loongson 3C6000 series, Hygon’s CPU series, Phytium’s S2500 and Tengyun S5000C, and Huawei’s Kunpeng 920.

HBM Memory: SK Hynix Is Making a Fortune

In the memory market, HBM is highly regarded.

Reportedly, in the most recent quarter almost all HBM revenue (about $25 billion) came from data centers.

With the rise of AI, especially in data-intensive applications such as machine learning and deep learning, demand for HBM is unprecedented. HBM shipments are expected to grow by 70% in 2025, as data centers and AI processors increasingly rely on this type of memory to handle large volumes of data with low latency. This surge in demand is expected to reshape the DRAM market, with manufacturers prioritizing HBM production over traditional DRAM products.

SK Hynix, Samsung, and Micron are the main suppliers in the HBM field, with SK Hynix alone accounting for over 70% of the HBM market share.

SK Hynix recently announced that it has begun sampling 12-layer HBM4 with a capacity of up to 36 GB, and that preparations for mass production will be completed in the second half of the year.

As the company rides the wave in the HBM market, the DRAM market structure is also being reshaped.

In Q1 of this year, SK Hynix leveraged its commanding lead in HBM to end Samsung’s more than forty-year dominance, taking a 36.7% share to become number one in the global DRAM market. SK Hynix expects its HBM sales to roughly double year-on-year this year, with sales of 12-layer HBM3E rising steadily and expected to account for more than half of total HBM3E sales in Q2.

With demand for HBM still running hot, SK Hynix continues to profit handsomely from this market.

AI Extended Network Chips: NVIDIA Again Takes the Lead

In the AI extended network chip market, NVIDIA remains the biggest winner, with competitors including Broadcom and Astera, among others.

Broadcom CEO Hock Tan estimates that networking currently accounts for 5% to 10% of data center spending, and that as the number of interconnected GPUs grows, spending on interconnect will grow faster still, raising this share to 15% to 20%.

Beyond the chip companies above, foundries are also benefiting greatly from the AI wave. TSMC, for example, manufactures almost all high-value non-memory chips for data centers thanks to its advanced process nodes and 2.5D/3D packaging technology, and more than half of its revenue comes from AI/HPC.

Intel and Samsung are also vying for a share of this foundry market.

Looking ahead, the rapid development of AI, IoT, and big data is opening new growth opportunities for the data center chip market. These technologies drive demand for high-performance computing and data processing, giving data center chip makers a broad market. For example, AI applications in autonomous vehicles, smart homes, and industrial automation rely on powerful data center chips to handle complex computing tasks. In addition, as 5G networks spread, more devices will connect to the internet and generate massive amounts of data, further driving growth in the data center chip market.

The chips discussed above are all expected to grow further in market value.